Contents

11. MongoDB sharding overview

12. Building a MongoDB sharded cluster

13. Testing MongoDB sharding

14. MongoDB backup and restore

11. MongoDB sharding overview

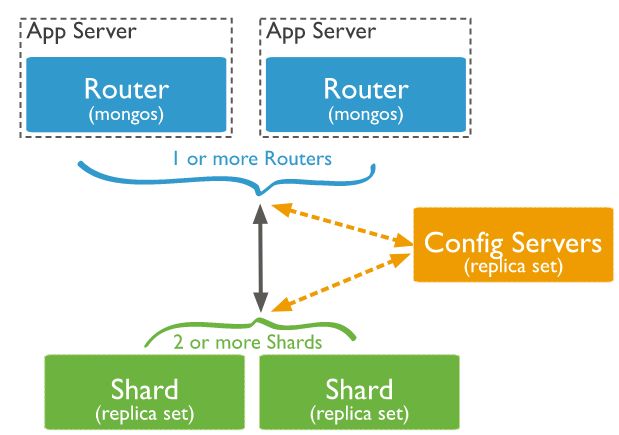

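Sharding splits a database apart and distributes large collections across different servers. For example, 100 GB of data can be split into 10 parts stored on 10 servers, so that each machine holds only 10 GB.

Storing and accessing the sharded data goes through a mongos process (the router), which makes mongos the core of the whole sharded architecture. Clients cannot tell whether the data is sharded; they simply send their reads and writes to mongos.

Although sharding spreads the data across many servers, every node still needs a standby, which is what keeps the data highly available.

When the system needs more space or resources, sharding makes it easy to scale out on demand: just add more machines running MongoDB to the sharded cluster.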

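- MongoDB sharding architecture diagram

- MongoDB sharding concepts

mongos: the entry point for all requests to the cluster. Every request is coordinated by mongos, so the application does not need its own routing layer; mongos itself is the request dispatcher and forwards each request to the appropriate shard. Production deployments usually run several mongos instances as entry points, so that if one goes down, MongoDB requests can still be served.

config server: stores the metadata for all databases (routing and sharding configuration). mongos does not persist the shard and routing information itself; it only caches it in memory, while the config servers actually store it. When mongos starts for the first time or is restarted, it loads the configuration from the config servers; after that, changes to the config server metadata are propagated to every mongos so that they keep routing correctly. Production deployments usually run several config servers, because they hold the shard routing metadata and it must not be lost.

shard: a MongoDB instance that stores part of a collection's data. Each shard is a standalone mongod or a replica set; in production, every shard should be a replica set.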
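A toy model can make the router's job concrete. The sketch below assumes range-based chunks on a numeric shard key and a hypothetical three-entry chunk table; it only illustrates the kind of lookup mongos performs. In a real cluster the chunk table lives on the config servers, mongos caches it, and the outermost bounds are $minKey and $maxKey rather than fixed numbers.

```shell
#!/usr/bin/env bash
# Hypothetical chunk table: "lower upper shard", each chunk covering [lower, upper).
# A real mongos caches this table from the config servers.
CHUNKS=(
  "0 1000 shard1"
  "1000 5000 shard2"
  "5000 1000000 shard3"
)

# route_key KEY: print the shard whose chunk range contains KEY
route_key() {
  local key=$1 chunk lo hi shard
  for chunk in "${CHUNKS[@]}"; do
    read -r lo hi shard <<< "$chunk"
    if (( key >= lo && key < hi )); then
      echo "$shard"
      return 0
    fi
  done
  echo "no chunk covers key $key" >&2
  return 1
}

route_key 42      # prints shard1
route_key 1234    # prints shard2
route_key 99999   # prints shard3
```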

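12. Building a MongoDB sharded cluster

- Sharding setup: server plan

Three machines: A (192.168.162.130), B (192.168.162.132) and C (192.168.162.128)

A runs: mongos, config server, replica set 1 primary, replica set 2 arbiter, replica set 3 secondary

B runs: mongos, config server, replica set 1 secondary, replica set 2 primary, replica set 3 arbiter

C runs: mongos, config server, replica set 1 arbiter, replica set 2 secondary, replica set 3 primary

Port allocation: mongos 20000, config server 21000, replica set 1 27001, replica set 2 27002, replica set 3 27003

On all three machines, either stop firewalld and disable SELinux, or add rules that allow the ports above.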
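If you prefer to keep firewalld running, the five ports from the plan can be opened instead of disabling the firewall. This sketch only prints the `firewall-cmd` commands (a dry run) so they can be reviewed first; it assumes firewalld's default zone, and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Ports from the plan: mongos, config server, shard1..shard3 replica sets
PORTS=(20000 21000 27001 27002 27003)

# print_firewall_cmds: emit firewall-cmd calls that permanently open each
# port in the default zone, followed by a reload. Nothing is executed.
print_firewall_cmds() {
  local port
  for port in "${PORTS[@]}"; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  echo "firewall-cmd --reload"
}

print_firewall_cmds
# To apply on each machine (as root): print_firewall_cmds | sh
```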

- Sharding setup: create directories

Create the directories each role needs on all three machines

mkdir -p /data/mongodb/mongos/log

mkdir -p /data/mongodb/config/{data,log}

mkdir -p /data/mongodb/shard1/{data,log}

mkdir -p /data/mongodb/shard2/{data,log}

mkdir -p /data/mongodb/shard3/{data,log}

[root@minglinux-01 ~] mkdir -p /data/mongodb/mongos/log

[root@minglinux-01 ~] mkdir -p /data/mongodb/config/{data,log}

[root@minglinux-01 ~] mkdir -p /data/mongodb/shard1/{data,log}

[root@minglinux-01 ~] mkdir -p /data/mongodb/shard2/{data,log}

[root@minglinux-01 ~] mkdir -p /data/mongodb/shard3/{data,log}

[root@minglinux-02 ~] mkdir -p /data/mongodb/mongos/log

[root@minglinux-02 ~] mkdir -p /data/mongodb/config/{data,log}

[root@minglinux-02 ~] mkdir -p /data/mongodb/shard1/{data,log}

[root@minglinux-02 ~] mkdir -p /data/mongodb/shard2/{data,log}

[root@minglinux-02 ~] mkdir -p /data/mongodb/shard3/{data,log}

[root@minglinux-03 ~] mkdir -p /data/mongodb/mongos/log

[root@minglinux-03 ~] mkdir -p /data/mongodb/config/{data,log}

[root@minglinux-03 ~] mkdir -p /data/mongodb/shard1/{data,log}

[root@minglinux-03 ~] mkdir -p /data/mongodb/shard2/{data,log}

[root@minglinux-03 ~] mkdir -p /data/mongodb/shard3/{data,log}
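The five `mkdir` commands can also be written as one loop. This sketch wraps them in a hypothetical `make_mongo_dirs` function whose optional argument (default `/data/mongodb`, as above) lets you try it in a scratch directory first.

```shell
#!/usr/bin/env bash
# make_mongo_dirs [BASE]: create the directory layout used in this setup.
# BASE defaults to /data/mongodb; pass another path to try it out safely.
make_mongo_dirs() {
  local base=${1:-/data/mongodb} role
  mkdir -p "$base/mongos/log"
  for role in config shard1 shard2 shard3; do
    mkdir -p "$base/$role/data" "$base/$role/log"
  done
}

# Example: build the layout in a temporary directory and list it
demo=$(mktemp -d)
make_mongo_dirs "$demo"
(cd "$demo" && find . -type d | sort)
```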

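- Sharding setup: config server configuration

Starting with MongoDB 3.4, the config servers must be deployed as a replica set

Add the configuration file (on all three machines)

mkdir /etc/mongod/

vim /etc/mongod/config.conf //add the following content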

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 0.0.0.0 #0.0.0.0 listens on all interfaces

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs #replica set name

maxConns=20000 #maximum number of connections
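As the per-machine transcripts show, the three `config.conf` files differ only in `bind_ip`. A small generator like the sketch below avoids hand-editing each copy; the function name and the output-directory argument are illustrative, and the comments from the listing are omitted.

```shell
#!/usr/bin/env bash
# write_config_conf IP [DIR]: write config.conf for the config server
# replica set "configs", bound to IP. DIR defaults to /etc/mongod.
write_config_conf() {
  local ip=$1 dir=${2:-/etc/mongod}
  mkdir -p "$dir"
  cat > "$dir/config.conf" <<EOF
pidfilepath = /var/run/mongodb/configsrv.pid
dbpath = /data/mongodb/config/data
logpath = /data/mongodb/config/log/configsrv.log
logappend = true
bind_ip = $ip
port = 21000
fork = true
configsvr = true
replSet=configs
maxConns=20000
EOF
}

# Example for machine A, written to a scratch directory
out=$(mktemp -d)
write_config_conf 192.168.162.130 "$out"
cat "$out/config.conf"
```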

Start the config server on all three machines

mongod -f /etc/mongod/config.conf //run on all three machines

Log in to port 21000 on any one machine and initiate the replica set

mongo --port 21000

config = { _id: "configs", members: [ {_id : 0, host : "192.168.162.130:21000"},{_id : 1, host : "192.168.162.132:21000"},{_id : 2, host : "192.168.162.128:21000"}] }

rs.initiate(config)

{ "ok" : 1 }

#Machine A

[root@minglinux-01 ~] mkdir /etc/mongod/

[root@minglinux-01 ~] vim /etc/mongod/config.conf

#Write the following content

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 192.168.162.130 #0.0.0.0 would listen on all interfaces; for safety, use this machine's IP

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs #replica set name

maxConns=20000 #maximum number of connections

#Start the config server on this machine

[root@minglinux-01 ~] mongod -f /etc/mongod/config.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2712

child process started successfully, parent exiting

[root@minglinux-01 ~] ps aux |grep mongo

mongod 2025 1.0 5.9 1526936 110112 ? Sl 15:19 5:15 /usr/bin/mongod -f /etc/mongod.conf

root 2712 3.8 2.6 1084480 48976 ? Sl 23:23 0:00 mongod -f /etc/mongod/config.conf

root 2746 0.0 0.0 112720 984 pts/0 S+ 23:23 0:00 grep --color=auto mongo

[root@minglinux-01 ~] netstat -lntp |grep mongo

tcp 0 0 192.168.162.130:21000 0.0.0.0:* LISTEN 2712/mongod

tcp 0 0 192.168.162.130:27017 0.0.0.0:* LISTEN 2025/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 2025/mongod

#Machine B

[root@minglinux-02 ~] mkdir /etc/mongod/

[root@minglinux-02 ~] vim /etc/mongod/config.conf

#Write the following content

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 192.168.162.132

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs #replica set name

maxConns=20000 #maximum number of connections

#Start the config server on this machine

[root@minglinux-02 ~] mongod -f /etc/mongod/config.conf

about to fork child process, waiting until server is ready for connections.

forked process: 11559

child process started successfully, parent exiting

[root@minglinux-02 ~] ps aux |grep mongo

mongod 3267 1.0 4.7 1459264 88640 ? Sl 3月08 5:07 /usr/bin/mongod -f /etc/mongod.conf

root 11559 2.8 2.5 1084412 47828 ? Sl 07:22 0:00 mongod -f /etc/mongod/config.conf

root 11593 0.0 0.0 112720 980 pts/0 S+ 07:22 0:00 grep --color=auto mongo

[root@minglinux-02 ~] netstat -lntp |grep mongo

tcp 0 0 192.168.162.132:21000 0.0.0.0:* LISTEN 11559/mongod

tcp 0 0 192.168.162.132:27017 0.0.0.0:* LISTEN 3267/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 3267/mongod

#Machine C

[root@minglinux-03 ~] mkdir /etc/mongod/

[root@minglinux-03 ~] vim /etc/mongod/config.conf

#Write the following content

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 192.168.162.128

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs #replica set name

maxConns=20000 #maximum number of connections

#Start the config server on this machine

[root@minglinux-03 ~] mongod -f /etc/mongod/config.conf

about to fork child process, waiting until server is ready for connections.

forked process: 3524

child process started successfully, parent exiting

[root@minglinux-03 ~] ps aux |grep mongo

mongod 2785 1.1 4.8 1461456 91068 ? Sl 15:29 5:01 /usr/bin/mongod -f /etc/mongod.conf

root 3524 3.4 2.4 1084552 45324 ? Sl 23:00 0:00 mongod -f /etc/mongod/config.conf

root 3558 0.0 0.0 112720 984 pts/1 S+ 23:00 0:00 grep --color=auto mongo

[root@minglinux-03 ~] netstat -lntp |grep mongo

tcp 0 0 192.168.162.128:21000 0.0.0.0:* LISTEN 3524/mongod

tcp 0 0 192.168.162.128:27017 0.0.0.0:* LISTEN 2785/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 2785/mongod

#Log in to port 21000 on 130 and initiate the replica set

[root@minglinux-01 ~] mongo --host 192.168.162.130 --port 21000

···

> config = { _id: "configs", members: [ {_id : 0, host : "192.168.162.130:21000"},{_id : 1, host : "192.168.162.132:21000"},{_id : 2, host : "192.168.162.128:21000"}] }

{

"_id" : "configs",

"members" : [

{

"_id" : 0,

"host" : "192.168.162.130:21000"

},

{

"_id" : 1,

"host" : "192.168.162.132:21000"

},

{

"_id" : 2,

"host" : "192.168.162.128:21000"

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

configs:OTHER> rs.status()

{

"set" : "configs",

"date" : ISODate("2019-03-08T15:46:37.425Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.162.130:21000",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1416,

"optime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T15:46:31Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1552059973, 1),

"electionDate" : ISODate("2019-03-08T15:46:13Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.162.132:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 35,

"optime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T15:46:31Z"),

"optimeDurableDate" : ISODate("2019-03-08T15:46:31Z"),

"lastHeartbeat" : ISODate("2019-03-08T15:46:35.422Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T15:46:36.494Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.162.130:21000",

"syncSourceHost" : "192.168.162.130:21000",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.162.128:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 35,

"optime" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1552059991, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T15:46:31Z"),

"optimeDurableDate" : ISODate("2019-03-08T15:46:31Z"),

"lastHeartbeat" : ISODate("2019-03-08T15:46:35.430Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T15:46:36.567Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.162.132:21000",

"syncSourceHost" : "192.168.162.132:21000",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1

}

- Sharding setup: shard configuration

Add the configuration files (on all three machines)

vim /etc/mongod/shard1.conf //add the following content

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

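bind_ip = 0.0.0.0 #for safety, this machine's own IP is better here

port = 27001

fork = true

httpinterface=true #enable the HTTP status interface (deprecated since 3.2, removed in 3.6)

rest=true

replSet=shard1 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections

Add the configuration file (on all three machines)

vim /etc/mongod/shard2.conf //add the following content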

pidfilepath = /var/run/mongodb/shard2.pid

dbpath = /data/mongodb/shard2/data

logpath = /data/mongodb/shard2/log/shard2.log

logappend = true

bind_ip = 0.0.0.0

port = 27002

fork = true

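httpinterface=true #enable the HTTP status interface

rest=true

replSet=shard2 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections

Add the configuration file (on all three machines)

vim /etc/mongod/shard3.conf //add the following content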

pidfilepath = /var/run/mongodb/shard3.pid

dbpath = /data/mongodb/shard3/data

logpath = /data/mongodb/shard3/log/shard3.log

logappend = true

bind_ip = 0.0.0.0

port = 27003

fork = true

httpinterface=true #enable the HTTP status interface

rest=true

replSet=shard3 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections
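The three shard files follow one rule: shard N uses the replica set name `shardN`, its own pid/data/log paths and port 27000 + N, plus the machine's own `bind_ip`. A sketch that writes them from that rule (the function name is hypothetical; settings match the listings above, comments omitted):

```shell
#!/usr/bin/env bash
# write_shard_conf N IP [DIR]: write shardN.conf (N = 1..3) bound to IP.
# Port follows the plan: shard1 -> 27001, shard2 -> 27002, shard3 -> 27003.
write_shard_conf() {
  local n=$1 ip=$2 dir=${3:-/etc/mongod}
  local name="shard$n" port=$((27000 + n))
  mkdir -p "$dir"
  cat > "$dir/$name.conf" <<EOF
pidfilepath = /var/run/mongodb/$name.pid
dbpath = /data/mongodb/$name/data
logpath = /data/mongodb/$name/log/$name.log
logappend = true
bind_ip = $ip
port = $port
fork = true
httpinterface=true
rest=true
replSet=$name
shardsvr = true
maxConns=20000
EOF
}

# Example: all three shard files for machine B in a scratch directory
out=$(mktemp -d)
for n in 1 2 3; do write_shard_conf "$n" 192.168.162.132 "$out"; done
grep -H '^port' "$out"/shard*.conf
```

Deriving the port from the shard number avoids a copied file silently keeping port 27001.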


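#Machine A

[root@minglinux-01 ~] vim /etc/mongod/shard1.conf

#Write the following content

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

bind_ip = 192.168.162.130

port = 27001

fork = true

httpinterface=true #enable the HTTP status interface

rest=true

replSet=shard1 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections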

[root@minglinux-01 ~] cp /etc/mongod/shard1.conf /etc/mongod/shard2.conf

[root@minglinux-01 ~] cp /etc/mongod/shard1.conf /etc/mongod/shard3.conf

[root@minglinux-01 ~] sed -i -e 's/shard1/shard2/g' -e 's/27001/27002/' /etc/mongod/shard2.conf

[root@minglinux-01 ~] sed -i -e 's/shard1/shard3/g' -e 's/27001/27003/' /etc/mongod/shard3.conf

#Copy shard1.conf, shard2.conf and shard3.conf to machines B and C

[root@minglinux-01 ~] cd /etc/mongod/

[root@minglinux-01 /etc/mongod] ls

config.conf shard1.conf shard2.conf shard3.conf

[root@minglinux-01 /etc/mongod] scp shard1.conf shard2.conf shard3.conf 192.168.162.132:/etc/mongod

root@192.168.162.132's password:

shard1.conf 100% 366 135.4KB/s 00:00

shard2.conf 100% 366 110.7KB/s 00:00

shard3.conf 100% 366 68.6KB/s 00:00

[root@minglinux-01 /etc/mongod] scp shard1.conf shard2.conf shard3.conf 192.168.162.128:/etc/mongod

root@192.168.162.128's password:

shard1.conf 100% 366 223.4KB/s 00:00

shard2.conf 100% 366 200.9KB/s 00:00

shard3.conf 100% 366 258.6KB/s 00:00

#Then, on machines B and C, change bind_ip in each file to that machine's own IP
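Changing the IP only touches the `bind_ip` line, so `sed` can do it in place. A sketch, assuming GNU sed (as shipped with CentOS) and a hypothetical `set_bind_ip` helper; the demo edits a throwaway file rather than `/etc/mongod`.

```shell
#!/usr/bin/env bash
# set_bind_ip IP FILE...: replace the bind_ip line in each config file with IP
set_bind_ip() {
  local ip=$1
  shift
  sed -i "s/^bind_ip = .*/bind_ip = $ip/" "$@"
}

# Demo on a scratch copy of a shard config
tmp=$(mktemp -d)
printf 'bind_ip = 192.168.162.130\nport = 27001\n' > "$tmp/shard1.conf"
set_bind_ip 192.168.162.132 "$tmp/shard1.conf"
cat "$tmp/shard1.conf"
# On machine B the real call would be:
#   set_bind_ip 192.168.162.132 /etc/mongod/shard1.conf /etc/mongod/shard2.conf /etc/mongod/shard3.conf
```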

- Sharding setup: shard configuration (continued)

Start shard1

mongod -f /etc/mongod/shard1.conf //run on all three machines

Log in to port 27001 on either 130 or 132 to initiate the replica set. 128 cannot be used, because its port 27001 is the arbiter of shard1

mongo --port 27001

use admin

config = { _id: "shard1", members: [ {_id : 0, host : "192.168.162.130:27001"}, {_id: 1,host : "192.168.162.132:27001"},{_id : 2, host : "192.168.162.128:27001",arbiterOnly:true}] }

rs.initiate(config)

Start shard2

mongod -f /etc/mongod/shard2.conf //run on all three machines

Log in to port 27002 on either 132 or 128 to initiate the replica set. 130 cannot be used, because its port 27002 is the arbiter of shard2

mongo --port 27002

use admin

config = { _id: "shard2", members: [ {_id : 0, host : "192.168.162.130:27002" ,arbiterOnly:true},{_id : 1, host : "192.168.162.132:27002"},{_id : 2, host : "192.168.162.128:27002"}] }

rs.initiate(config)

Start shard3

mongod -f /etc/mongod/shard3.conf //run on all three machines

Log in to port 27003 on either 130 or 128 to initiate the replica set. 132 cannot be used, because its port 27003 is the arbiter of shard3

mongo --port 27003

use admin

config = { _id: "shard3", members: [ {_id : 0, host : "192.168.162.130:27003"}, {_id : 1, host : "192.168.162.132:27003", arbiterOnly:true}, {_id : 2, host : "192.168.162.128:27003"}] }

rs.initiate(config)
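The three `config` documents share one shape: the same three hosts on port 2700N, with a different machine as arbiter in each shard (shard1: 128, shard2: 130, shard3: 132, per the server plan). The sketch below prints the document for a given shard so the rotation is explicit; the output is meant to be pasted into the mongo shell, and the helper name is illustrative.

```shell
#!/usr/bin/env bash
# Hosts in member order (_id 0, 1, 2), as in the config documents above
HOSTS=(192.168.162.130 192.168.162.132 192.168.162.128)
# Arbiter member index per shard, from the server plan:
# shard1 -> 128 (index 2), shard2 -> 130 (index 0), shard3 -> 132 (index 1)
ARBITER=([1]=2 [2]=0 [3]=1)

# shard_rs_config N: print the replica set config document for shardN
shard_rs_config() {
  local n=$1 port=$((27000 + $1)) i m members=""
  for i in "${!HOSTS[@]}"; do
    m="{_id : $i, host : \"${HOSTS[$i]}:$port\""
    if (( i == ARBITER[n] )); then
      m+=", arbiterOnly : true"
    fi
    members+="${members:+, }$m}"
  done
  echo "config = { _id: \"shard$n\", members: [ $members ] }"
}

for n in 1 2 3; do shard_rs_config "$n"; done
```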

#Start shard1

[root@minglinux-01 /etc/mongod] mongod -f /etc/mongod/shard1.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2946

child process started successfully, parent exiting

#Do the same on machines B and C

[root@minglinux-01 /etc/mongod] mongo --host 192.168.162.130 --port 27001

···

> use admin

switched to db admin

> config = { _id: "shard1", members: [ {_id : 0, host : "192.168.162.130:27001"}, {_id: 1,host : "192.168.162.132:27001"},{_id : 2, host : "192.168.162.128:27001",arbiterOnly:true}] }

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.162.130:27001"

},

{

"_id" : 1,

"host" : "192.168.162.132:27001"

},

{

"_id" : 2,

"host" : "192.168.162.128:27001",

"arbiterOnly" : true

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

shard1:SECONDARY> //after a moment the prompt automatically changes to PRIMARY

shard1:PRIMARY> rs.status()

{

"set" : "shard1",

"date" : ISODate("2019-03-08T16:45:27.867Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.162.130:27001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 794,

"optime" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T16:45:22Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1552063470, 1),

"electionDate" : ISODate("2019-03-08T16:44:30Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.162.132:27001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 68,

"optime" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1552063522, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T16:45:22Z"),

"optimeDurableDate" : ISODate("2019-03-08T16:45:22Z"),

"lastHeartbeat" : ISODate("2019-03-08T16:45:26.374Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T16:45:27.247Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.162.130:27001",

"syncSourceHost" : "192.168.162.130:27001",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.162.128:27001",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 68,

"lastHeartbeat" : ISODate("2019-03-08T16:45:26.331Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T16:45:25.975Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1

}

#Start shard2

[root@minglinux-01 /etc/mongod] mongod -f /etc/mongod/shard2.conf

about to fork child process, waiting until server is ready for connections.

forked process: 3159

child process started successfully, parent exiting

#Start it the same way on machines B and C

#Port 27002 on 130 is the arbiter, so the replica set can only be initiated from port 27002 on 132 or 128

[root@minglinux-02 /etc/mongod] mongo --host 192.168.162.132 --port 27002

···

> use admin

switched to db admin

> config = { _id: "shard2", members: [ {_id : 0, host : "192.168.162.130:27002" ,arbiterOnly:true},{_id : 1, host : "192.168.162.132:27002"},{_id : 2, host : "192.168.162.128:27002"}] }

{

"_id" : "shard2",

"members" : [

{

"_id" : 0,

"host" : "192.168.162.130:27002",

"arbiterOnly" : true

},

{

"_id" : 1,

"host" : "192.168.162.132:27002"

},

{

"_id" : 2,

"host" : "192.168.162.128:27002"

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

shard2:OTHER>

shard2:SECONDARY> rs.status()

{

"set" : "shard2",

"date" : ISODate("2019-03-09T01:05:17.368Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.162.130:27002",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 34,

"lastHeartbeat" : ISODate("2019-03-09T01:05:17.350Z"),

"lastHeartbeatRecv" : ISODate("2019-03-09T01:05:14.982Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.162.132:27002",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 372,

"optime" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-09T01:05:15Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1552093493, 1),

"electionDate" : ISODate("2019-03-09T01:04:53Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 2,

"name" : "192.168.162.128:27002",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 34,

"optime" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1552093515, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-09T01:05:15Z"),

"optimeDurableDate" : ISODate("2019-03-09T01:05:15Z"),

"lastHeartbeat" : ISODate("2019-03-09T01:05:17.350Z"),

"lastHeartbeatRecv" : ISODate("2019-03-09T01:05:16.070Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.162.132:27002",

"syncSourceHost" : "192.168.162.132:27002",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1

}

shard2:PRIMARY> //130 is the arbiter, so only 132 or 128 can become PRIMARY

#Start shard3

[root@minglinux-01 /etc/mongod] mongod -f /etc/mongod/shard3.conf

about to fork child process, waiting until server is ready for connections.

forked process: 3220

child process started successfully, parent exiting

#Start it the same way on machines B and C

#132 is the arbiter, so log in to port 27003 on 130 or 128 to initiate the replica set

[root@minglinux-03 /etc/mongod] mongo --host 192.168.162.128 --port 27003

···

> use admin

switched to db admin

> config = { _id: "shard3", members: [ {_id : 0, host : "192.168.162.130:27003"}, {_id : 1, host : "192.168.162.132:27003", arbiterOnly:true}, {_id : 2, host : "192.168.162.128:27003"}] }

{

"_id" : "shard3",

"members" : [

{

"_id" : 0,

"host" : "192.168.162.130:27003"

},

{

"_id" : 1,

"host" : "192.168.162.132:27003",

"arbiterOnly" : true

},

{

"_id" : 2,

"host" : "192.168.162.128:27003"

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

shard3:OTHER>

shard3:SECONDARY> rs.status()

{

"set" : "shard3",

"date" : ISODate("2019-03-08T17:12:44.166Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.162.130:27003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 32,

"optime" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T17:12:34Z"),

"optimeDurableDate" : ISODate("2019-03-08T17:12:34Z"),

"lastHeartbeat" : ISODate("2019-03-08T17:12:43.360Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T17:12:43.984Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.162.128:27003",

"syncSourceHost" : "192.168.162.128:27003",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.162.132:27003",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 32,

"lastHeartbeat" : ISODate("2019-03-08T17:12:43.360Z"),

"lastHeartbeatRecv" : ISODate("2019-03-08T17:12:43.863Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.162.128:27003",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 260,

"optime" : {

"ts" : Timestamp(1552065154, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-03-08T17:12:34Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1552065143, 1),

"electionDate" : ISODate("2019-03-08T17:12:23Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1

}

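shard3:PRIMARY> //132 is the arbiter; 128 became PRIMARY

- Sharding setup: configure the router (mongos)

Add the configuration file (on all three machines)

vim /etc/mongod/mongos.conf //add the following content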

pidfilepath = /var/run/mongodb/mongos.pid

logpath = /data/mongodb/mongos/log/mongos.log

logappend = true

bind_ip = 0.0.0.0

port = 20000

fork = true

configdb = configs/192.168.162.130:21000, 192.168.162.132:21000, 192.168.162.128:21000 #config servers to connect to: configs is the config server replica set name, followed by its members

maxConns=20000 #maximum number of connections
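The `configdb` value is the config server replica set name, a slash, and a comma-separated list of its members. This sketch builds that line from a host list, writing it without spaces after the commas; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# configdb_line RSNAME PORT HOST...: print a mongos "configdb =" line
configdb_line() {
  local rs=$1 port=$2
  shift 2
  local host seeds=""
  for host in "$@"; do
    seeds+="${seeds:+,}$host:$port"
  done
  echo "configdb = $rs/$seeds"
}

configdb_line configs 21000 192.168.162.130 192.168.162.132 192.168.162.128
```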

Start the mongos service. Note the command: everything above used mongod, but this one is mongos.

mongos -f /etc/mongod/mongos.conf

[root@minglinux-01 ~] vim /etc/mongod/mongos.conf

#Add the following content

pidfilepath = /var/run/mongodb/mongos.pid

logpath = /data/mongodb/mongos/log/mongos.log

logappend = true

bind_ip = 0.0.0.0

port = 20000

fork = true

configdb = configs/192.168.162.130:21000, 192.168.162.132:21000, 192.168.162.128:21000 #config servers to connect to: configs is the config server replica set name, followed by its members

maxConns=20000 #maximum number of connections

#On 130, copy mongos.conf to the other two machines

[root@minglinux-01 ~] scp /etc/mongod/mongos.conf 192.168.162.132:/etc/mongod/

root@192.168.162.132's password:

mongos.conf 100% 366 223.4KB/s 00:00

[root@minglinux-01 ~] scp /etc/mongod/mongos.conf 192.168.162.128:/etc/mongod/

root@192.168.162.128's password:

mongos.conf 100% 366 223.2KB/s 00:00

#Start the mongos service the same way on all three machines

[root@minglinux-01 ~] mongos -f /etc/mongod/mongos.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2326

ERROR: child process failed, exited with error number 48

#There is an error...

#Check the log

[root@minglinux-01 ~] tail -4 /data/mongodb/mongos/log/mongos.log

2019-03-09T16:28:00.229+0800 E NETWORK [mongosMain] listen(): bind() failed Address already in use for socket: 0.0.0.0:20000

2019-03-09T16:28:00.229+0800 E NETWORK [mongosMain] addr already in use

2019-03-09T16:28:00.229+0800 E NETWORK [mongosMain] Failed to set up sockets during startup.

2019-03-09T16:28:00.229+0800 I CONTROL [mongosMain] shutting down with code:48

#Check port 20000

[root@minglinux-01 ~] netstat -lntp | grep 20000

tcp 0 0 0.0.0.0:20000 0.0.0.0:* LISTEN 1935/mongos

[root@minglinux-01 ~] kill 1935 #a mongos (PID 1935) already holds port 20000; kill it and start again

[root@minglinux-01 ~] netstat -lntp | grep 20000

[root@minglinux-01 ~] mongos -f /etc/mongod/mongos.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2376

child process started successfully, parent exiting

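#Start the other two machines the same way

- Sharding setup: enable sharding

Log in to port 20000 on any machine

mongo --port 20000

Add all the shards to the cluster through the router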

sh.addShard("shard1/192.168.162.130:27001,192.168.162.132:27001,192.168.162.128:27001")

sh.addShard("shard2/192.168.162.130:27002,192.168.162.132:27002,192.168.162.128:27002")

sh.addShard("shard3/192.168.162.130:27003,192.168.162.132:27003,192.168.162.128:27003")

View the cluster status

sh.status()
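The three `sh.addShard()` calls differ only in the shard number and port, so they can be generated and run as a script file. A sketch, assuming the mongo shell executes a trailing argument ending in `.js` as a script; the helper name and file location are illustrative.

```shell
#!/usr/bin/env bash
# Members of every shard replica set, in the same order as the commands above
HOSTS=(192.168.162.130 192.168.162.132 192.168.162.128)

# addshard_cmds: print one sh.addShard() call per shard replica set.
# The seed list includes every member (arbiter too), as typed above;
# port 2700N belongs to shardN.
addshard_cmds() {
  local n host seeds
  for n in 1 2 3; do
    seeds=""
    for host in "${HOSTS[@]}"; do
      seeds+="${seeds:+,}$host:$((27000 + n))"
    done
    echo "sh.addShard(\"shard$n/$seeds\")"
  done
}

# Write the calls to a .js file and show it
script="$(mktemp -d)/addshards.js"
addshard_cmds > "$script"
cat "$script"
# Then, on a machine with the mongo shell installed:
#   mongo --host 192.168.162.130 --port 20000 "$script"
```

In the `sh.status()` output that follows, each shard lists only its two data-bearing members; the arbiters do not appear in the shard's host string.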

#Log in to port 20000 on 130 and add all the shards to the cluster

[root@minglinux-01 ~] mongo --host 192.168.162.130 --port 20000

···

mongos>

mongos> sh.addShard("shard1/192.168.162.130:27001,192.168.162.132:27001,192.168.162.128:27001")

{ "shardAdded" : "shard1", "ok" : 1 }

mongos> sh.addShard("shard2/192.168.162.130:27002,192.168.162.132:27002,192.168.162.128:27002")

{ "shardAdded" : "shard2", "ok" : 1 }

mongos> sh.addShard("shard3/192.168.162.130:27003,192.168.162.132:27003,192.168.162.128:27003")

{ "shardAdded" : "shard3", "ok" : 1 }

#View the cluster status

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5c828e47ff99880b3fc7d789")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.162.130:27001,192.168.162.132:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.162.128:27002,192.168.162.132:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.162.128:27003,192.168.162.130:27003", "state" : 1 }

active mongoses:

"3.4.19" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

NaN

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

13. Testing MongoDB sharding

Log in to port 20000 on any machine

mongo --port 20000

use admin

db.runCommand({ enablesharding : "testdb"}) or

sh.enableSharding("testdb") //specify the database to shard

db.runCommand( { shardcollection : "testdb.table1",key : {id: 1} } ) or

sh.shardCollection("testdb.table1",{"id":1} ) //specify the collection to shard and its shard key

use testdb

for (var i = 1; i <= 10000; i++) db.table1.save({id:i,"test1":"testval1"}) //insert test data

db.table1.stats() //view the status of table1

#Log in to port 20000 on 130

[root@minglinux-01 ~] mongo --host 192.168.162.130 --port 20000

mongos> use admin

switched to db admin

mongos> sh.enableSharding("testdb") //specify the database to shard

{ "ok" : 1 }

mongos> sh.shardCollection("testdb.table1",{"id":1} ) //specify the collection to shard and its shard key

{ "collectionsharded" : "testdb.table1", "ok" : 1 }

mongos> use testdb

switched to db testdb

mongos> for (var i = 1; i <= 10000; i++) db.table1.save({id:i,"test1":"testval1"}) //insert test data

WriteResult({ "nInserted" : 1 })

mongos>

mongos> show dbs

admin 0.000GB

config 0.001GB

testdb 0.000GB

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5c828e47ff99880b3fc7d789")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.162.130:27001,192.168.162.132:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.162.128:27002,192.168.162.132:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.162.128:27003,192.168.162.130:27003", "state" : 1 }

active mongoses:

"3.4.19" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

NaN

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "testdb", "primary" : "shard3", "partitioned" : true }

testdb.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard3 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 0)
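Why does all of testdb.table1 sit in one chunk on shard3? A newly sharded collection starts with a single chunk on the database's primary shard (shard3 for testdb), and in 3.4 chunks are split roughly as they approach the default 64 MB chunk size. The back-of-the-envelope arithmetic below uses the roughly 528 KB `table1.bson` from the dump later in this article; `du -sh` rounds, and the autosplitter's exact heuristics are ignored, so treat the result as an order of magnitude.

```shell
#!/usr/bin/env bash
# Rough estimate: how many documents of this size fit in one 64 MB chunk?
chunk_bytes=$((64 * 1024 * 1024))   # default chunk size: 64 MB
dump_bytes=$((528 * 1024))          # table1.bson size reported by du -sh
docs=10000                          # documents inserted by the test loop
avg_doc=$((dump_bytes / docs))      # integer average document size in bytes
docs_per_chunk=$((chunk_bytes / avg_doc))
echo "average document size: ${avg_doc} bytes"
echo "documents per 64 MB chunk: about ${docs_per_chunk}"
```

At about 54 bytes per document, one chunk holds on the order of a million documents, so 10000 test documents are nowhere near a split.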

#Enable sharding on two more databases

mongos> sh.enableSharding("testdb2")

{ "ok" : 1 }

mongos> sh.shardCollection("testdb2.cl2",{"id":1} )

{ "collectionsharded" : "testdb2.cl2", "ok" : 1 }

mongos> sh.enableSharding("testdb3")

{ "ok" : 1 }

mongos> sh.shardCollection("testdb3.cl3",{"id":1} )

{ "collectionsharded" : "testdb3.cl3", "ok" : 1 }

mongos> sh.status() //check how the 3 databases are sharded

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5c828e47ff99880b3fc7d789")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.162.130:27001,192.168.162.132:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.162.128:27002,192.168.162.132:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.162.128:27003,192.168.162.130:27003", "state" : 1 }

active mongoses:

"3.4.19" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

NaN

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "testdb", "primary" : "shard3", "partitioned" : true }

testdb.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard3 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 0)

{ "_id" : "testdb2", "primary" : "shard1", "partitioned" : true }

testdb2.cl2

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "testdb3", "primary" : "shard1", "partitioned" : true }

testdb3.cl3

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

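14. MongoDB backup and restore

- Backup

Back up a specific database

mongodump --host 127.0.0.1 --port 20000 -d mydb -o /tmp/mongobak

This creates a mydb directory under /tmp/mongobak

Back up all databases

mongodump --host 127.0.0.1 --port 20000 -o /tmp/mongobak/alldatabase

Back up a specific collection

mongodump --host 127.0.0.1 --port 20000 -d mydb -c c1 -o /tmp/mongobak/

It still creates the mydb directory, and inside it writes two files for the collection: c1.bson and c1.metadata.json

Export a collection to a JSON file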

mongoexport --host 127.0.0.1 --port 20000 -d mydb -c c1 -o /tmp/mydb2/1.json
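Backups are often written to a timestamped directory, and the matching restore is `mongorestore` pointed at the dumped database directory. This sketch only prints the commands (a dry run); the host, port and `/tmp/mongobak` base come from the examples above, while the function names and timestamp format are illustrative. `--drop` makes mongorestore drop each collection before restoring it.

```shell
#!/usr/bin/env bash
HOST=127.0.0.1
PORT=20000
BASE=/tmp/mongobak

# backup_cmd DB [STAMP]: print a mongodump command that writes DB into a
# timestamped directory under $BASE (STAMP defaults to the current time)
backup_cmd() {
  local db=$1 stamp=${2:-$(date +%Y%m%d%H%M%S)}
  echo "mongodump --host $HOST --port $PORT -d $db -o $BASE/$stamp"
}

# restore_cmd DB STAMP: print the matching mongorestore command
restore_cmd() {
  local db=$1 stamp=$2
  echo "mongorestore --host $HOST --port $PORT -d $db --drop $BASE/$stamp/$db"
}

backup_cmd testdb 20190309
restore_cmd testdb 20190309
```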

#Back up the testdb database

[root@minglinux-01 ~] mkdir /tmp/mongobak

[root@minglinux-01 ~] mongodump --host 127.0.0.1 --port 20000 -d testdb -o /tmp/mongobak

2019-03-09T22:24:38.148+0800 writing testdb.table1 to

2019-03-09T22:24:38.247+0800 done dumping testdb.table1 (10000 documents)

[root@minglinux-01 ~] ls /tmp/mongobak/

testdb

[root@minglinux-01 ~] ls /tmp/mongobak/testdb/

table1.bson table1.metadata.json

[root@minglinux-01 ~] cd !$

cd /tmp/mongobak/testdb/

[root@minglinux-01 /tmp/mongobak/testdb] du -sh *

528K table1.bson

4.0K table1.metadata.json

[root@minglinux-01 /tmp/mongobak/testdb] cat table1.metadata.json # records the collection's options and index definitions

{"options":{},"indexes":[{"v":2,"key":{"_id":1},"name":"_id_","ns":"testdb.table1"},{"v":2,"key":{"id":1.0},"name":"id_1","ns":"testdb.table1"}]}

# Back up all databases

[root@minglinux-01 /tmp/mongobak/testdb] mongodump --host 127.0.0.1 --port 20000 -o /tmp/mongoall

[root@minglinux-01 /tmp/mongobak/testdb] ls !$ # every database has been dumped

ls /tmp/mongoall

admin config testdb testdb2 testdb3

# Back up a single collection

[root@minglinux-01 /tmp/mongobak/testdb] mongodump --host 127.0.0.1 --port 20000 -d testdb -c table1 -o /tmp/mongobak2

2019-03-09T22:37:56.193+0800 writing testdb.table1 to

2019-03-09T22:37:56.423+0800 done dumping testdb.table1 (10000 documents)

[root@minglinux-01 /tmp/mongobak/testdb] ls !$

ls /tmp/mongobak2

testdb

[root@minglinux-01 /tmp/mongobak/testdb] ls !$/testdb

ls /tmp/mongobak2/testdb

table1.bson table1.metadata.json

# If testdb had several collections, only table1 would be dumped here; in this case it only has one.

# Export the collection to a JSON file

[root@minglinux-01 /tmp/mongobak/testdb] mongoexport --host 127.0.0.1 --port 20000 -d testdb -c table1 -o /tmp/mongobak3/table1.json

[root@minglinux-01 /tmp/mongobak/testdb] ls /tmp/mongobak3/table1.json

/tmp/mongobak3/table1.json

[root@minglinux-01 /tmp/mongobak/testdb] du -sh !$

du -sh /tmp/mongobak3/table1.json

732K /tmp/mongobak3/table1.json

[root@minglinux-01 /tmp/mongobak/testdb] head -5 /tmp/mongobak3/table1.json

{"_id":{"$oid":"5c8389f926ef9a751afb9625"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5c8389f926ef9a751afb9626"},"id":2.0,"test1":"testval1"}

{"_id":{"$oid":"5c8389f926ef9a751afb9627"},"id":3.0,"test1":"testval1"}

{"_id":{"$oid":"5c8389f926ef9a751afb9628"},"id":4.0,"test1":"testval1"}

{"_id":{"$oid":"5c8389f926ef9a751afb962a"},"id":6.0,"test1":"testval1"}

# table1 holds the 10,000 test documents, exported one JSON document per line
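By default (without `--jsonArray` or `--pretty`), mongoexport writes one JSON document per line, as the `head` output shows. That makes a quick sanity check possible: compare the file's line count with the expected document count. The following is a small sketch of that check. `check_export_count` is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: mongoexport writes one JSON document per line by
# default, so the file's line count should equal the exported document count.
check_export_count() {
  local file=$1 expected=$2 actual
  actual=$(wc -l < "$file" | tr -d '[:space:]')
  if [ "$actual" -ne "$expected" ]; then
    echo "mismatch: $file has $actual lines, expected $expected" >&2
    return 1
  fi
  echo "ok: $actual documents"
}
```

For the export above, `check_export_count /tmp/mongobak3/table1.json 10000` should report 10000 documents.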

- Restore

Restore all databases

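mongorestore -h 127.0.0.1 --port 20000 --drop dir/ # dir is the directory holding the dump of all databases; --drop is optional and drops each collection before restoring it from the dump (not recommended)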

Restore a specific database

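mongorestore -h 127.0.0.1 --port 20000 -d mydb dir/ # -d names the database to restore; dir is that database's own dump directory, e.g. /tmp/mongobak/mydb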

Restore a collection

mongorestore -h 127.0.0.1 --port 20000 -d mydb -c testc dir/mydb/testc.bson # -c names the collection to restore; the last argument is the path to that collection's .bson file inside the mydb dump

Import a collection from a JSON export

mongoimport -h 127.0.0.1 --port 20000 -d mydb -c testc --file /tmp/testc.json
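Restoring a single collection needs the path to its .bson file inside the dump. The following small sketch builds that path from the dump root, database name and collection name. It fails early if the file is missing. The function name `restore_collection` and the `MONGORESTORE` override are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: restore one collection from a mongodump directory
# laid out as <dump_root>/<db>/<collection>.bson
restore_collection() {
  local host=$1 port=$2 dump_root=$3 db=$4 coll=$5
  local bson="$dump_root/$db/$coll.bson"

  # Fail early with a clear message if the dump file is not there
  if [ ! -f "$bson" ]; then
    echo "no such file: $bson" >&2
    return 1
  fi

  # MONGORESTORE is an assumed override, e.g. MONGORESTORE=echo for a dry run
  "${MONGORESTORE:-mongorestore}" -h "$host" --port "$port" \
    -d "$db" -c "$coll" "$bson"
}
```

For the collection dump made earlier, the call would be `restore_collection 127.0.0.1 20000 /tmp/mongobak2 testdb table1`.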

# Drop the test databases

[root@minglinux-01 /tmp/mongobak/testdb] mongo --host 192.168.162.130 --port 20000

mongos> show dbs

admin 0.000GB

config 0.001GB

testdb 0.000GB

testdb2 0.000GB

testdb3 0.000GB

mongos> use testdb

switched to db testdb

mongos> db.dropDatabase()

{ "dropped" : "testdb", "ok" : 1 }

mongos> use testdb2

switched to db testdb2

mongos> db.dropDatabase()

{ "dropped" : "testdb2", "ok" : 1 }

mongos> use testdb3

switched to db testdb3

mongos> db.dropDatabase()

{ "dropped" : "testdb3", "ok" : 1 }

mongos> show dbs

admin 0.000GB

config 0.001GB

# Restore all databases

[root@minglinux-01 /tmp/mongobak/testdb] mongorestore -h 127.0.0.1 --port 20000 --drop /tmp/mongoall/

2019-03-09T23:07:41.666+0800 preparing collections to restore from

2019-03-09T23:07:41.670+0800 Failed: cannot do a full restore on a sharded system - remove the 'config' directory from the dump directory first

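[root@minglinux-01 /tmp/mongobak/testdb] rm -rf /tmp/mongoall/config # remove the config and admin databases from the dump directory; neither needs to be restored (this permanently deletes them from the backup)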

[root@minglinux-01 /tmp/mongobak/testdb] rm -rf /tmp/mongoall/admin

[root@minglinux-01 /tmp/mongobak/testdb] mongorestore -h 127.0.0.1 --port 20000 --drop /tmp/mongoall

2019-03-09T23:09:17.877+0800 preparing collections to restore from

2019-03-09T23:09:17.888+0800 reading metadata for testdb2.cl2 from /tmp/mongoall/testdb2/cl2.metadata.json

2019-03-09T23:09:17.901+0800 reading metadata for testdb.table1 from /tmp/mongoall/testdb/table1.metadata.json

2019-03-09T23:09:17.917+0800 reading metadata for testdb3.cl3 from /tmp/mongoall/testdb3/cl3.metadata.json

2019-03-09T23:09:18.031+0800 restoring testdb3.cl3 from /tmp/mongoall/testdb3/cl3.bson

2019-03-09T23:09:18.039+0800 restoring testdb2.cl2 from /tmp/mongoall/testdb2/cl2.bson

2019-03-09T23:09:18.061+0800 restoring testdb.table1 from /tmp/mongoall/testdb/table1.bson

2019-03-09T23:09:18.086+0800 restoring indexes for collection testdb3.cl3 from metadata

2019-03-09T23:09:18.089+0800 restoring indexes for collection testdb2.cl2 from metadata

2019-03-09T23:09:18.105+0800 finished restoring testdb2.cl2 (0 documents)

2019-03-09T23:09:18.119+0800 finished restoring testdb3.cl3 (0 documents)

2019-03-09T23:09:19.218+0800 restoring indexes for collection testdb.table1 from metadata

2019-03-09T23:09:19.272+0800 finished restoring testdb.table1 (10000 documents)

2019-03-09T23:09:19.272+0800 done
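Deleting admin and config from /tmp/mongoall, as done above, permanently strips them from the backup. A non-destructive alternative is to copy every other database directory into a temporary staging directory and restore from there. This sketch assumes bash and enough free disk space for that temporary copy. The function name `restore_all_sharded` and the `MONGORESTORE` override are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: restore a full mongodump through mongos without
# touching the original dump; admin and config are left out of a staged copy.
restore_all_sharded() {
  local host=$1 port=$2 dump_dir=$3
  shift 3                      # remaining args (e.g. --drop) go to mongorestore
  local stage d rc=0
  stage=$(mktemp -d)

  for d in "$dump_dir"/*/; do
    [ -d "$d" ] || continue    # glob matched nothing
    d=${d%/}                   # strip slash so cp copies the directory itself
    case "$(basename "$d")" in
      admin|config) continue ;;
    esac
    cp -R "$d" "$stage/"
  done

  # MONGORESTORE is an assumed override for dry runs
  "${MONGORESTORE:-mongorestore}" -h "$host" --port "$port" "$@" "$stage" || rc=$?
  rm -rf "$stage"
  return "$rc"
}
```

`restore_all_sharded 127.0.0.1 20000 /tmp/mongoall --drop` mirrors the restore above and leaves /tmp/mongoall untouched.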

# Log in to port 20000 again and check

[root@minglinux-01 /tmp/mongobak/testdb] mongo --host 192.168.162.130 --port 20000

mongos> show dbs

admin 0.000GB

config 0.001GB

testdb 0.000GB

testdb2 0.000GB

testdb3 0.000GB
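`show dbs` only confirms that the databases exist. A rough per-collection check compares the number of documents in the dumped .bson file with the count reported through mongos. This sketch assumes the `bsondump` tool from the same MongoDB tools package is installed. `verify_restore` and the `BSONDUMP`/`MONGO` overrides are hypothetical names.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: compare the document count in a dumped .bson file
# with the live count seen through mongos after a restore.
verify_restore() {
  local host=$1 port=$2 bson=$3 db=$4 coll=$5
  local dumped live

  # bsondump prints one JSON document per line on stdout
  # (its "N objects found" summary goes to stderr, discarded here)
  dumped=$("${BSONDUMP:-bsondump}" "$bson" 2>/dev/null | wc -l | tr -d '[:space:]')

  # --quiet suppresses the shell banner so only the printed count remains
  live=$("${MONGO:-mongo}" --host "$host" --port "$port" --quiet \
    --eval "print(db.getSiblingDB('$db').getCollection('$coll').count())")

  if [ "$dumped" -eq "$live" ]; then
    echo "ok: $db.$coll has $live documents"
  else
    echo "mismatch: dump=$dumped live=$live" >&2
    return 1
  fi
}
```

For example: `verify_restore 127.0.0.1 20000 /tmp/mongoall/testdb/table1.bson testdb table1`. On a sharded cluster, `count()` without a query can be inflated by orphaned documents or an in-progress chunk migration. Treat a mismatch as a reason to look closer rather than proof of data loss.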