Setting Up a Three-Node Hadoop + Hive Environment

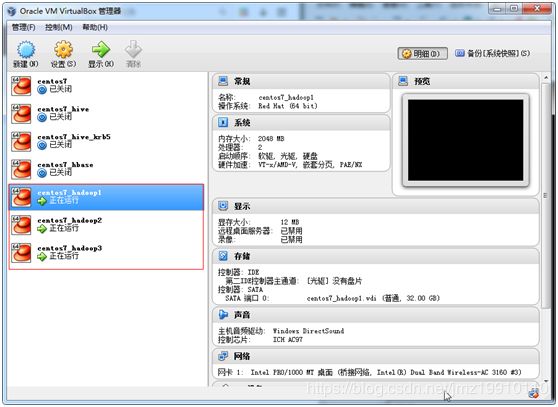

以下使用虚拟机搭建Hadoop+Hive环境

虚拟机:Oracle VirtualBox

操作系统:centos7.6

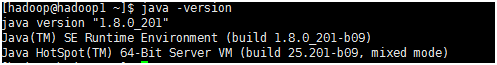

JDK:1.8.0_201

MySql: 5.7.25

Hadoop:hadoop-2.8.1

Hive:apache-hive-1.2.2-bin

Hadoop集群为三个节点:

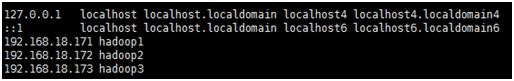

主节点hadoop1 192.168.18.171

从节点hadoop2 192.168.18.172

从节点Hadoop3 192.168.18.173

1. Download the CentOS 7 image

http://mirrors.aliyun.com/centos/7/isos/x86_64/

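2. Set the hostnames of the three nodes to hadoop1, hadoop2 and hadoop3

To change the hostname permanently (hadoop1 as an example):

CentOS 7: hostnamectl set-hostname hadoop1

CentOS 6:

① vi /etc/sysconfig/network

Change HOSTNAME=localhost.localdomain to HOSTNAME=hadoop1

② vi /etc/hosts

Add an entry: 192.168.18.171 hadoop1

③ reboot

The /etc/hosts file on every node must contain entries for all three nodes:

192.168.18.171 hadoop1

192.168.18.172 hadoop2

192.168.18.173 hadoop3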
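Every node's /etc/hosts must list all three machines. One way to keep the files identical is to generate the entries from a single table and paste the same block on each node. Below is a minimal Python sketch. The NODES mapping copies this guide's addresses, and hosts_block is a hypothetical helper, not part of any tool used here:

```python
from typing import Dict

# Hypothetical node table, copied from this guide; adjust if your IPs differ.
NODES: Dict[str, str] = {
    "hadoop1": "192.168.18.171",
    "hadoop2": "192.168.18.172",
    "hadoop3": "192.168.18.173",
}


def hosts_block(nodes: Dict[str, str]) -> str:
    """Hypothetical helper: render one 'IP hostname' line per node, ordered by hostname."""
    lines = [f"{ip} {host}" for host, ip in sorted(nodes.items())]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the block; append it to /etc/hosts on each node as root.
    print(hosts_block(NODES), end="")
```

Running the script prints three lines that can be appended to /etc/hosts on hadoop1, hadoop2 and hadoop3.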

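3. Disable the firewall and SELinux

Disable the firewall and SELinux on all three nodes:

systemctl stop firewalld.service

systemctl disable firewalld.service

vi /etc/selinux/config and set SELINUX=disabled

Reboot the machine for the change to take effect (setenforce 0 switches SELinux to permissive mode immediately, until the next reboot)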
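The SELinux change is a one-line edit, so it can also be scripted. The sketch below assumes the standard CentOS 7 layout of /etc/selinux/config, where the mode is set on a line starting with SELINUX=. disable_selinux is a hypothetical helper that only transforms text; reading and writing the file (as root) is left to the caller:

```python
import re


def disable_selinux(config_text: str) -> str:
    """Hypothetical helper: return config_text with the SELINUX= line set to 'disabled'.

    Only lines starting with 'SELINUX=' are rewritten, so SELINUXTYPE= and
    commented-out lines such as '# SELINUX=enforcing' are left untouched.
    """
    new_text, count = re.subn(r"(?m)^SELINUX=.*$", "SELINUX=disabled", config_text)
    if count == 0:
        # No active SELINUX= line was found: append one.
        if new_text and not new_text.endswith("\n"):
            new_text += "\n"
        new_text += "SELINUX=disabled\n"
    return new_text
```

For example, read /etc/selinux/config as root, pass its contents through disable_selinux, and write the result back before rebooting.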

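4. Create the hadoop user

Create the same user, hadoop, on all three nodes. All later steps are performed as this user:

useradd -m hadoop

passwd hadoop

Grant hadoop sudo privileges by adding this line to /etc/sudoers (edit it with visudo): hadoop ALL=(ALL) ALL

Switch to the hadoop user: su - hadoop

Note: the user name must be identical on all three nodes. Otherwise the SSH setup and the Hadoop cluster configuration later on will run into problems.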

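5. Set up passwordless SSH login

This example sets up passwordless SSH from hadoop1 to hadoop2 and hadoop3.

Generate an RSA key pair on hadoop1: ssh-keygen -t rsa

Press Enter at every prompt to accept the defaults

Append the generated public key to the authorization file:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Restrict the authorization file's permissions: chmod 600 ~/.ssh/authorized_keys

Test SSH to the local machine:

ssh hadoop1 should now log in without a password. The first SSH connection to each host asks you to confirm its host key; later connections go straight through.

Copy hadoop1's public key (id_rsa.pub) to hadoop2 and hadoop3. Do not use sudo here, because sudo would make scp run with root's SSH configuration:

scp ~/.ssh/id_rsa.pub hadoop@hadoop2:~/

scp ~/.ssh/id_rsa.pub hadoop@hadoop3:~/

On hadoop2 (repeat the same steps on hadoop3):

Log in to hadoop2 with ssh hadoop2 and enter the password

If ~/.ssh does not exist yet, create it first: mkdir -p ~/.ssh && chmod 700 ~/.ssh

cat ~/id_rsa.pub >> ~/.ssh/authorized_keys

Restrict the authorization file's permissions: chmod 600 ~/.ssh/authorized_keys

Log out of hadoop2: exit

Test passwordless SSH login from hadoop1: ssh hadoop2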
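Once the keys are in place, the whole cluster can be checked non-interactively. This Python sketch makes two assumptions: the OpenSSH client is installed, and each host has already been connected to once, so its host key is in known_hosts. ssh_check_command and failing_hosts are hypothetical names:

```python
import subprocess
from typing import Callable, List, Sequence


def ssh_check_command(host: str, user: str = "hadoop") -> List[str]:
    """Hypothetical helper: an ssh command that runs `true` remotely and never prompts."""
    # BatchMode=yes makes ssh fail instead of asking for a password (or for
    # confirmation of an unknown host key), so exit status 0 means key login works.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5",
            f"{user}@{host}", "true"]


def _run(cmd: List[str]) -> int:
    """Run cmd quietly and return its exit status."""
    return subprocess.run(cmd, stdout=subprocess.DEVNULL,
                          stderr=subprocess.DEVNULL).returncode


def failing_hosts(hosts: Sequence[str],
                  run: Callable[[List[str]], int] = _run) -> List[str]:
    """Hypothetical helper: return the hosts where passwordless SSH did not succeed."""
    return [h for h in hosts if run(ssh_check_command(h)) != 0]
```

On hadoop1, failing_hosts(["hadoop1", "hadoop2", "hadoop3"]) should return an empty list. The run parameter exists only so the check can be exercised without real hosts.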

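6. Install the JDK (on all three nodes)

Download: https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Download jdk-8u201-linux-x64.tar.gz, a recent JDK 8 release at the time of writing

Extract it: sudo tar -zxvf jdk-8u201-linux-x64.tar.gz -C /usr/local/

Configure the Java environment variables for the hadoop user:

vi ~/.bashrc

Append the following to the end of the file: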

export JAVA_HOME=/usr/local/jdk1.8.0_201

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Apply the changes: source ~/.bashrc
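A quick way to confirm that the ~/.bashrc settings took effect is to check two things: JAVA_HOME points at a real java binary, and its bin directory is on PATH. Here is a minimal Python sketch. java_home_problem is a hypothetical helper, and it should be run from a shell where source ~/.bashrc has already been executed:

```python
import os
from typing import Mapping, Optional


def java_home_problem(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Hypothetical helper: describe what is wrong with JAVA_HOME, or return None if it looks usable."""
    java_home = env.get("JAVA_HOME")
    if not java_home:
        return "JAVA_HOME is not set"
    java_bin = os.path.join(java_home, "bin", "java")
    if not (os.path.isfile(java_bin) and os.access(java_bin, os.X_OK)):
        return f"{java_bin} is missing or not executable"
    # The ~/.bashrc settings prepend ${JAVA_HOME}/bin to PATH.
    if os.path.join(java_home, "bin") not in env.get("PATH", "").split(os.pathsep):
        return "JAVA_HOME/bin is not on PATH"
    return None


if __name__ == "__main__":
    print(java_home_problem() or "JAVA_HOME looks fine")
```

Passing a plain dict instead of os.environ makes it easy to try the check against other settings.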

7. Install Hadoop on hadoop1, then copy it to hadoop2 and hadoop3

Download: http://archive.apache.org/dist/hadoop/common/hadoop-2.8.1/

Download hadoop-2.8.1.tar.gz

Extract it: sudo tar -zxvf hadoop-2.8.1.tar.gz -C /usr/local/

Make the hadoop user the owner: sudo chown -R hadoop:hadoop /usr/local/hadoop-2.8.1/

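8. Modify the Hadoop configuration files on the master node hadoop1 (directory /usr/local/hadoop-2.8.1/etc/hadoop)

8.1 core-site.xml

Add the following properties inside the file's configuration element. Each property has a name and a value, written here as name: value.

fs.default.name: hdfs://hadoop1:9000 (the older name of fs.defaultFS, still accepted by Hadoop 2.x)

hadoop.tmp.dir: file:/home/hadoop/hadoop/tmp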

8.2 hdfs-site.xml

dfs.replication: 2

dfs.namenode.name.dir: file:/home/hadoop/hadoop/tmp/dfs/name

dfs.datanode.data.dir: file:/home/hadoop/hadoop/tmp/dfs/data

dfs.namenode.secondary.http-address: hadoop1:9001

8.3 mapred-site.xml

Create it from the template: cp mapred-site.xml.template mapred-site.xml

mapreduce.framework.name: yarn
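Each *-site.xml file is just a configuration element holding property entries, each with a name and a value. That means the three files above can be generated instead of hand-edited. Below is a sketch using Python's standard xml.etree.ElementTree. hadoop_site_xml is a hypothetical helper, and the values are copied from 8.1 to 8.3. yarn-site.xml is left out because this guide does not list its properties:

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Property values copied from sections 8.1 to 8.3 of this guide.
CORE_SITE = {
    "fs.default.name": "hdfs://hadoop1:9000",
    "hadoop.tmp.dir": "file:/home/hadoop/hadoop/tmp",
}
HDFS_SITE = {
    "dfs.replication": "2",
    "dfs.namenode.name.dir": "file:/home/hadoop/hadoop/tmp/dfs/name",
    "dfs.datanode.data.dir": "file:/home/hadoop/hadoop/tmp/dfs/data",
    "dfs.namenode.secondary.http-address": "hadoop1:9001",
}
MAPRED_SITE = {"mapreduce.framework.name": "yarn"}


def hadoop_site_xml(props: Dict[str, str]) -> str:
    """Hypothetical helper: render a *-site.xml document with one property per name/value pair."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root, space="    ")  # pretty-printing; ET.indent needs Python 3.9+
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(root, encoding="unicode") + "\n"
```

For example, Path("/usr/local/hadoop-2.8.1/etc/hadoop/core-site.xml").write_text(hadoop_site_xml(CORE_SITE)) writes core-site.xml (this needs from pathlib import Path). Note that it replaces the whole file, including the license comment shipped with the distribution.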

8.4 yarn-site.xml

8.5 hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/local/jdk1.8.0_201

8.6 Edit the slaves file (one slave hostname per line):

hadoop2

hadoop3

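9. Configure the other Hadoop nodes

Copy the Hadoop installation directory from the master node to hadoop2 and hadoop3.

On hadoop2 and hadoop3, create the directory /usr/local/hadoop-2.8.1 and make the hadoop user its owner:

sudo mkdir -p /usr/local/hadoop-2.8.1

sudo chown -R hadoop:hadoop /usr/local/hadoop-2.8.1/

Then, on hadoop1, copy the files. Run scp as the hadoop user without sudo, so that the passwordless SSH keys set up earlier are used:

scp -r /usr/local/hadoop-2.8.1/* hadoop@hadoop2:/usr/local/hadoop-2.8.1/

scp -r /usr/local/hadoop-2.8.1/* hadoop@hadoop3:/usr/local/hadoop-2.8.1/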
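The copy step repeats the same scp for every slave node, so it can be driven from a list. This Python sketch assumes it runs on hadoop1 as the hadoop user, with passwordless SSH already working. scp_commands and distribute are hypothetical helpers that mirror the scp -r /usr/local/hadoop-2.8.1/* commands for each node:

```python
import glob
import os
import subprocess
from typing import List, Optional, Sequence

HADOOP_DIR = "/usr/local/hadoop-2.8.1"


def scp_commands(workers: Sequence[str], src_dir: str = HADOOP_DIR,
                 user: str = "hadoop",
                 entries: Optional[List[str]] = None) -> List[List[str]]:
    """Hypothetical helper: one `scp -r src_dir/* user@worker:src_dir/` command per worker."""
    if entries is None:
        # Expand the glob in Python, as the shell would for src_dir/*.
        entries = sorted(glob.glob(os.path.join(src_dir, "*")))
    if not entries:
        raise FileNotFoundError(f"nothing to copy in {src_dir}")
    return [["scp", "-r", *entries, f"{user}@{w}:{src_dir}/"] for w in workers]


def distribute(workers: Sequence[str]) -> None:
    """Hypothetical helper: run the copies in order, stopping at the first failure."""
    for cmd in scp_commands(workers):
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

distribute(["hadoop2", "hadoop3"]) runs both copies. Expanding the glob in Python keeps the shell's * behavior (hidden files are skipped) without invoking a shell.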

10. Configure the Hadoop environment variables

Set HADOOP_HOME and the related variables for the hadoop user (required on every node):

vi ~/.bashrc

# hadoop environment vars

export HADOOP_HOME=/usr/local/hadoop-2.8.1

export HADOOP_INSTALL=${HADOOP_HOME}

export HADOOP_MAPRED_HOME=${HADOOP_HOME}

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export YARN_HOME=${HADOOP_HOME}

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib"

export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:$PATH

Apply the changes: source ~/.bashrc


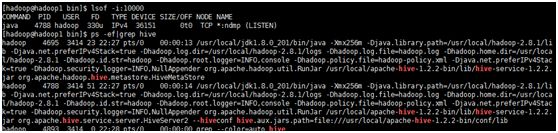

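11. Start Hadoop

Format the NameNode on hadoop1 (only once, before the first start): hadoop namenode -format

Start Hadoop (the scripts are in /usr/local/hadoop-2.8.1/sbin):

cd /usr/local/hadoop-2.8.1/sbin

./start-dfs.sh

./start-yarn.sh

Run jps on each node to check the processes. With this configuration, hadoop1 should show NameNode, SecondaryNameNode and ResourceManager, and hadoop2 and hadoop3 should each show DataNode and NodeManager. If they do, the distributed Hadoop setup is complete.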
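The expected jps output follows from the configuration above. hadoop1 starts the scripts and hosts the NameNode, the SecondaryNameNode (dfs.namenode.secondary.http-address is hadoop1:9001) and the ResourceManager. Each host in the slaves file runs a DataNode and a NodeManager. Below is a Python sketch that compares jps output against that expectation. EXPECTED, daemons_from_jps and missing_daemons are hypothetical names:

```python
from typing import Dict, Set

# Hypothetical expectation derived from this guide's slaves file and hdfs-site.xml.
EXPECTED: Dict[str, Set[str]] = {
    "hadoop1": {"NameNode", "SecondaryNameNode", "ResourceManager"},
    "hadoop2": {"DataNode", "NodeManager"},
    "hadoop3": {"DataNode", "NodeManager"},
}


def daemons_from_jps(output: str) -> Set[str]:
    """Hypothetical helper: parse `jps` lines of the form '<pid> <MainClass>' into class names."""
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(host: str, jps_output: str) -> Set[str]:
    """Hypothetical helper: daemons expected on `host` that do not appear in its jps output."""
    return EXPECTED[host] - daemons_from_jps(jps_output)
```

Pass it the jps output captured on each node, for example missing_daemons("hadoop2", output). An empty set means the node is running everything it should.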

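12. Install the MySQL database (on the master node hadoop1)

MySQL is used as Hive's metastore database.

The default database on CentOS 7 is MariaDB, so first download and install the MySQL yum repository package:

wget http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

sudo yum -y install mysql57-community-release-el7-10.noarch.rpm

sudo yum -y install mysql-community-server

Start the service: sudo systemctl start mysqld.service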

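Find the temporary root password with sudo grep 'temporary password' /var/log/mysqld.log. It must be changed after the first login.

MySQL 5.7 enables a password-validation policy by default, so setting a password that does not satisfy it fails:

mysql> alter user 'root'@'localhost' IDENTIFIED BY '123456';

ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

To allow it, add validate_password = off under the [mysqld] section of /etc/my.cnf, then restart mysqld: sudo systemctl restart mysqld.service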
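The my.cnf edit can be sanity-checked before restarting mysqld. This sketch parses the file with Python's configparser. It assumes my.cnf uses plain INI syntax (sections, key = value lines, # comments); a directive such as !includedir placed before the first section would need extra handling. password_policy_disabled is a hypothetical helper:

```python
import configparser


def password_policy_disabled(my_cnf_text: str) -> bool:
    """Hypothetical helper: True if the [mysqld] section turns the validate_password plugin off."""
    # allow_no_value: my.cnf may contain bare flags; interpolation=None: values may contain '%'.
    parser = configparser.ConfigParser(allow_no_value=True, strict=False, interpolation=None)
    parser.read_string(my_cnf_text)
    if not parser.has_section("mysqld"):
        return False
    section = parser["mysqld"]
    # MySQL treats '-' and '_' in option names as equivalent.
    for key in ("validate_password", "validate-password"):
        value = section.get(key)
        if value is not None and value.strip().lower() == "off":
            return True
    return False
```

After the change, password_policy_disabled(open("/etc/my.cnf").read()) should return True.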

Log in with the temporary password: mysql -u root -p

alter user 'root'@'localhost' IDENTIFIED BY '123456';

Allow remote login:

GRANT ALL PRIVILEGES ON \*.\* TO 'root'@'%' IDENTIFIED BY '123456' WITH GRANT OPTION;

flush privileges;

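13. Install Hive

Download: http://archive.apache.org/dist/hive/hive-1.2.2/

Download apache-hive-1.2.2-bin.tar.gz

Extract it: sudo tar -zxvf apache-hive-1.2.2-bin.tar.gz -C /usr/local/

Make the hadoop user the owner: sudo chown -R hadoop:hadoop /usr/local/apache-hive-1.2.2-bin/

Configure the environment variables: vi ~/.bashrc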

# hive environment vars

export HIVE_HOME=/usr/local/apache-hive-1.2.2-bin

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HIVE_HOME}/bin:$PATH

Apply the changes: source ~/.bashrc

[hadoop@hadoop1 apache-hive-1.2.2-bin]$ echo $HIVE_HOME

/usr/local/apache-hive-1.2.2-bin

Before modifying the configuration files, create the following directories:

mkdir /home/hadoop/hive

mkdir /home/hadoop/hive/warehouse

mkdir /home/hadoop/hive/tmp

The Hive configuration directory is /usr/local/apache-hive-1.2.2-bin/conf

① Modify hive-site.xml

If it does not exist, create it: cp hive-default.xml.template hive-site.xml

② Modify hive-env.sh

# Config

export HADOOP_HOME=/usr/local/hadoop-2.8.1

export HIVE_CONF_DIR=/usr/local/apache-hive-1.2.2-bin/conf

export HIVE_AUX_JARS_PATH=/usr/local/apache-hive-1.2.2-bin/lib

③ Add the database driver JAR

Because MySQL is the metastore database, download the MySQL JDBC driver from:

https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.47.zip

Unzip it and upload mysql-connector-java-5.1.47.jar to /usr/local/apache-hive-1.2.2-bin/lib

Initialize the metastore database first. Go to /usr/local/apache-hive-1.2.2-bin/bin

MySQL must be running during initialization.

Run: schematool -initSchema -dbType mysql

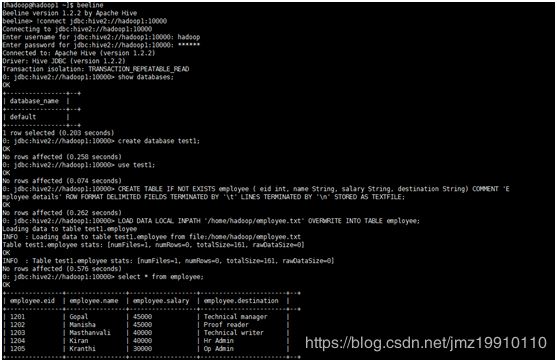

Start the services:

nohup hive --service metastore &

nohup hive --service hiveserver2 &

HiveServer2 listens on port 10000 by default. Check that it has started, for example with ss -ntlp | grep 10000
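Port 10000 can also be checked from any machine that can reach hadoop1, which additionally confirms that the firewall is really off. Here is a minimal Python sketch using only the standard socket module. port_open is a hypothetical helper:

```python
import socket


def port_open(host: str, port: int = 10000, timeout: float = 3.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name (e.g. via /etc/hosts) and connects.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, or name not resolvable
        return False
```

port_open("hadoop1") returns True once HiveServer2 is listening. HiveServer2 may need some time after nohup hive --service hiveserver2 & before the port opens, so retry before concluding that startup failed.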

Test the connection with the beeline client bundled with Hive:

Create employee.txt in /home/hadoop (fields separated by \t, lines by \n) with the following content:

1201 Gopal 45000 Technical manager

1202 Manisha 45000 Proof reader

1203 Masthanvali 40000 Technical writer

1204 Kiran 40000 Hr Admin

1205 Kranthi 30000 Op Admin
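Tab characters rarely survive copying (the rows above show spaces). The last column also contains spaces, as in "Technical manager", so a space-separated file would not split into the four columns of a table whose fields are terminated by \t. Generating the file avoids that problem. In this Python sketch, ROWS repeats the five rows above, and employee_tsv and write_employee_file are hypothetical helpers:

```python
from pathlib import Path
from typing import List, Tuple

# The five sample rows from this guide: eid, name, salary, destination.
ROWS: List[Tuple[int, str, int, str]] = [
    (1201, "Gopal", 45000, "Technical manager"),
    (1202, "Manisha", 45000, "Proof reader"),
    (1203, "Masthanvali", 40000, "Technical writer"),
    (1204, "Kiran", 40000, "Hr Admin"),
    (1205, "Kranthi", 30000, "Op Admin"),
]


def employee_tsv(rows) -> str:
    """Hypothetical helper: join fields with a tab and end every row with a newline."""
    return "".join("\t".join(str(field) for field in row) + "\n" for row in rows)


def write_employee_file(path: str = "/home/hadoop/employee.txt") -> None:
    """Hypothetical helper: write the sample rows to `path`."""
    Path(path).write_text(employee_tsv(ROWS))
```

Calling write_employee_file() as the hadoop user creates /home/hadoop/employee.txt with five tab-separated lines.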

Table creation statement:

CREATE TABLE IF NOT EXISTS employee ( eid int, name String, salary String, destination String) COMMENT 'Employee details' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LINES TERMINATED BY '\n' STORED AS TEXTFILE;