Kaggle Introductory Course: Machine Learning

Lesson 1

- Processing CSV data with the pandas library

import pandas as pd

# save filepath to variable for easier access

melbourne_file_path = '../input/melbourne-housing-snapshot/melb_data.csv'

# read the data and store data in DataFrame titled melbourne_data

melbourne_data = pd.read_csv(melbourne_file_path)

# print a summary of the data in Melbourne data

melbourne_data.describe()

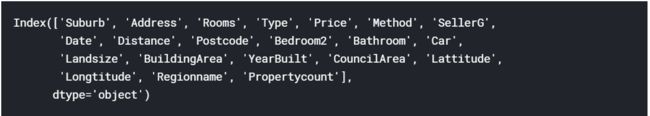

melbourne_data.columns

# the output is the list of column names in the dataset

melbourne_data = melbourne_data.dropna(axis=0)

# drop the rows that contain missing values

melbourne_features = ['Rooms', 'Bathroom', 'Landsize', 'Lattitude', 'Longtitude']

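X = melbourne_data[melbourne_features]

# store the feature data in X

y = melbourne_data.Price

# store the prediction target (the sale price) in y; it is needed when fitting the model

X.head()

# returns the first 5 rows, used to check that the data format is correct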
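The same steps can be tried without the Kaggle CSV file. The following is a minimal sketch on a made-up DataFrame: `toy_data` and its values are hypothetical stand-ins for `melbourne_data`, and only three of the feature columns are used.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for melbourne_data; the values are made up.
toy_data = pd.DataFrame({
    "Rooms": [2, 3, 4, 3],
    "Bathroom": [1.0, 2.0, np.nan, 1.0],
    "Landsize": [202.0, 156.0, 120.0, 245.0],
    "Price": [1480000.0, 1035000.0, 1600000.0, 850000.0],
})

# Drop every row that has at least one missing value.
toy_data = toy_data.dropna(axis=0)

# Select the prediction target and the feature columns.
y = toy_data.Price
toy_features = ["Rooms", "Bathroom", "Landsize"]
X = toy_data[toy_features]

print(X.shape)        # (3, 3): the row with the missing Bathroom value is gone
print(list(y.index))  # [0, 1, 3]: dropna keeps the original row labels
```

Note that `dropna` does not renumber the rows, so the index of `X` and `y` can have gaps afterwards.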

- Building a model with the scikit-learn library

- Defining and fitting the model

from sklearn.tree import DecisionTreeRegressor

# setting random_state makes every run produce the same result

melbourne_model = DecisionTreeRegressor(random_state=1)

#Fit model

melbourne_model.fit(X, y)

- Testing the predictions

print("Making predictions for the following 5 houses:")

print(X.head())

print("The predictions are")

print(melbourne_model.predict(X.head()))

- Computing the mean absolute error (MAE)

from sklearn.metrics import mean_absolute_error

predicted_home_prices = melbourne_model.predict(X)

mean_absolute_error(y, predicted_home_prices)
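The mean absolute error is the average of the absolute differences between the actual and the predicted values: MAE = (1/n) * sum(|y_i - prediction_i|). A small sketch with made-up numbers (the arrays `actual` and `predicted` are hypothetical) checks the manual formula against `mean_absolute_error`:

```python
import numpy as np
from sklearn.metrics import mean_absolute_error

# Made-up values, for illustration only.
actual = np.array([100.0, 150.0, 200.0])
predicted = np.array([110.0, 140.0, 230.0])

# Absolute errors are 10, 10 and 30, so the mean is 50 / 3.
manual_mae = np.mean(np.abs(actual - predicted))
library_mae = mean_absolute_error(actual, predicted)

print(manual_mae, library_mae)  # both are about 16.67
```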

- Splitting off a validation set

from sklearn.model_selection import train_test_split

train_X, val_X, train_y, val_y = train_test_split(X, y, random_state = 0)

# Define model

melbourne_model = DecisionTreeRegressor()

# Fit model

melbourne_model.fit(train_X, train_y)

# get predicted prices on validation data

val_predictions = melbourne_model.predict(val_X)

print(mean_absolute_error(val_y, val_predictions))
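Why the validation split matters can be seen on synthetic data. The sketch below assumes randomly generated features and a noisy linear target (all made up). An unconstrained decision tree memorizes the training rows, so its in-sample error says little about how it performs on unseen data.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: two random features and a noisy linear target.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 2))
y = 3 * X[:, 0] + X[:, 1] + rng.normal(0, 1, size=200)

# By default train_test_split holds out 25% of the rows for validation.
train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=0)

model = DecisionTreeRegressor(random_state=0)
model.fit(train_X, train_y)

train_mae = mean_absolute_error(train_y, model.predict(train_X))
val_mae = mean_absolute_error(val_y, model.predict(val_X))

print(len(train_X), len(val_X))  # 150 50
print(train_mae, val_mae)        # the training error is far lower than the validation error
```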

- Constraining the maximum number of leaf nodes

from sklearn.metrics import mean_absolute_error

from sklearn.tree import DecisionTreeRegressor

def get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y):

    model = DecisionTreeRegressor(max_leaf_nodes=max_leaf_nodes, random_state=0)

    model.fit(train_X, train_y)

    preds_val = model.predict(val_X)

    mae = mean_absolute_error(val_y, preds_val)

    return mae

- Using a for loop to compare candidate values for the number of leaf nodes

for max_leaf_nodes in [5, 50, 500, 5000]:

    my_mae = get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y)

    print("Max leaf nodes: %d \t\t Mean Absolute Error: %d" %(max_leaf_nodes, my_mae))

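- Storing the scores in a dictionary and finding the best entry

candidate_max_leaf_nodes = [5, 25, 50, 100, 250, 500]

# build the dictionary with a dict comprehension: key = tree size, value = MAE

scores = {leaf_size: get_mae(leaf_size, train_X, val_X, train_y, val_y) for leaf_size in candidate_max_leaf_nodes}

# usage of min: with key=scores.get it returns the key whose value (MAE) is smallest

best_tree_size = min(scores, key=scores.get)

# to get the smallest value itself, apply min to scores.values(); note that min(scores) alone returns the smallest key, not the smallest value

best_mae = min(scores.values())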
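The different ways of calling `min` on a dictionary are easy to mix up, so here is a small sketch with a made-up `scores` dictionary (the MAE values are hypothetical):

```python
# Hypothetical scores: key = max_leaf_nodes, value = validation MAE.
scores = {5: 35000.0, 25: 29000.0, 50: 27500.0, 100: 28600.0}

# Iterating over a dict yields its keys, so this is the smallest key.
smallest_key = min(scores)

# key=scores.get compares the keys by their values: the key with the lowest MAE.
best_key = min(scores, key=scores.get)

# The lowest MAE itself.
lowest_value = min(scores.values())

print(smallest_key, best_key, lowest_value)  # 5 50 27500.0
```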

- Random forest implementation

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

forest_model = RandomForestRegressor(random_state=1)

forest_model.fit(train_X, train_y)

melb_preds = forest_model.predict(val_X)

print(mean_absolute_error(val_y, melb_preds))

- Handling missing values

- Dropping the columns that contain missing values, useful when a column has many of them

data_without_missing_values = original_data.dropna(axis=1)

# axis=1 drops columns; axis=0 would drop rows

# drop with an explicit list of columns has the same effect as dropna(axis=1)

cols_with_missing = [col for col in original_data.columns

if original_data[col].isnull().any()]

reduced_original_data = original_data.drop(cols_with_missing, axis=1)

reduced_test_data = test_data.drop(cols_with_missing, axis=1)

# drop the same columns from both the training data and the test data

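- Imputation: replacing each missing value with an approximate value, by default the column mean

# advantage: imputation can be built into a pipeline, which streamlines the learning process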

from sklearn.impute import SimpleImputer

my_imputer = SimpleImputer()

data_with_imputed_values = my_imputer.fit_transform(original_data)
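What mean imputation does to the data can be checked on a tiny example. This sketch assumes a made-up two-column frame called `toy`. `SimpleImputer()` defaults to `strategy="mean"`, and `fit_transform` returns a NumPy array, so the column names are put back by hand.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Made-up data with one missing value per column.
toy = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [4.0, 5.0, np.nan]})

imputer = SimpleImputer()  # default strategy is "mean"
imputed = pd.DataFrame(imputer.fit_transform(toy), columns=toy.columns)

print(imputed["a"].tolist())  # [1.0, 2.0, 3.0]: the gap is filled with mean(1, 3)
print(imputed["b"].tolist())  # [4.0, 5.0, 4.5]: the gap is filled with mean(4, 5)
```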

- An extension of method 2 (imputation)

# make copy to avoid changing original data (when Imputing)

new_data = original_data.copy()

# make new columns indicating what will be imputed

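cols_with_missing = [col for col in new_data.columns

if new_data[col].isnull().any()]

# for each column that has missing values, add a boolean column recording which rows were missing, so that this information survives imputation

for col in cols_with_missing:

    new_data[col + '_was_missing'] = new_data[col].isnull()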

# Imputation

my_imputer = SimpleImputer()

# fit_transform returns a NumPy array, so restore the column names, including the added _was_missing columns

new_data = pd.DataFrame(my_imputer.fit_transform(new_data), columns=new_data.columns)
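A small end-to-end run of this approach, sketched on a made-up frame (`toy` and its values are hypothetical), shows what the result looks like. After imputation, the indicator column holds 1.0 where the value was originally missing and 0.0 elsewhere.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Made-up data: only column "a" has a missing value.
toy = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [4.0, 5.0, 6.0]})
new_toy = toy.copy()

# Record which rows were missing before the values are filled in.
cols_with_missing = [col for col in new_toy.columns if new_toy[col].isnull().any()]
for col in cols_with_missing:
    new_toy[col + "_was_missing"] = new_toy[col].isnull()

# Impute, then restore the column names of the extended frame.
imputer = SimpleImputer()
imputed = pd.DataFrame(imputer.fit_transform(new_toy), columns=new_toy.columns)

print(list(imputed.columns))              # ['a', 'b', 'a_was_missing']
print(imputed["a"].tolist())              # [1.0, 2.0, 3.0]
print(imputed["a_was_missing"].tolist())  # [0.0, 1.0, 0.0]
```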

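Usage of dropna

DataFrame.dropna(axis=0, how='any', thresh=None, subset=None, inplace=False)

# axis=0 or 1: drop the rows or the columns that contain missing values

# how='any' or 'all': drop when any value is NaN, or only when all values are NaN

# thresh=3: keep only the rows (or columns) that have at least 3 non-NaN values

# subset=['price']: only check the price column; a row is dropped when its price is NaN

# inplace=True: modify the DataFrame in place and return None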
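The parameters are easiest to compare on a small frame. The following sketch uses a made-up DataFrame `df` with hypothetical column names and counts how many rows survive each call:

```python
import numpy as np
import pandas as pd

# Made-up data: row 0 is complete, rows 1 and 2 have one NaN each, row 3 is all NaN.
df = pd.DataFrame({
    "price": [1.0, np.nan, 3.0, np.nan],
    "rooms": [2.0, 3.0, np.nan, np.nan],
    "area": [50.0, 60.0, 70.0, np.nan],
})

n_any = len(df.dropna())                    # 1: only row 0 has no NaN
n_all = len(df.dropna(how="all"))           # 3: only row 3 is entirely NaN
n_thresh = len(df.dropna(thresh=2))         # 3: rows with at least 2 non-NaN values
n_subset = len(df.dropna(subset=["price"])) # 2: rows where price is present
cols_shape = df.dropna(axis=1).shape        # (4, 0): every column contains a NaN

# With inplace=True the frame itself is modified and the call returns None.
df_copy = df.copy()
result = df_copy.dropna(inplace=True)

print(n_any, n_all, n_thresh, n_subset, cols_shape, result, len(df_copy))
```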

Handling non-numeric (categorical) data

# find the columns that have missing values

cols_with_missing = [col for col in train_data.columns

if train_data[col].isnull().any()]

# drop the irrelevant columns: Id, the target SalePrice, and the columns with missing values

candidate_train_predictors = train_data.drop(['Id', 'SalePrice'] + cols_with_missing, axis=1)

candidate_test_predictors = test_data.drop(['Id'] + cols_with_missing, axis=1)

# pick the low-cardinality text columns and the numeric columns, then combine the two lists

low_cardinality_cols = [cname for cname in candidate_train_predictors.columns if

candidate_train_predictors[cname].nunique() < 10 and

candidate_train_predictors[cname].dtype == "object"]

numeric_cols = [cname for cname in candidate_train_predictors.columns if

candidate_train_predictors[cname].dtype in ['int64', 'float64']]

my_cols = low_cardinality_cols + numeric_cols

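# keep only the selected columns

train_predictors = candidate_train_predictors[my_cols]

test_predictors = candidate_test_predictors[my_cols]

# show the dtypes of a random sample of 10 columns after processing

train_predictors.dtypes.sample(10)

# one-hot encode the text (categorical) columns

one_hot_encoded_training_predictors = pd.get_dummies(train_predictors)

one_hot_encoded_test_predictors = pd.get_dummies(test_predictors)

# align the two frames on their columns; join='left' keeps the training columns, so columns that appear only in the test data are dropped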

final_train, final_test = one_hot_encoded_training_predictors.align(one_hot_encoded_test_predictors,

join='left',

axis=1)
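The effect of `get_dummies` followed by `align` can be seen on two small made-up frames (`train` and `test` below are hypothetical). The test data contains a category the training data never saw, and lacks one that the training data has.

```python
import pandas as pd

# Made-up data: "green" appears only in test, "blue" only in train.
train = pd.DataFrame({"size": [1, 2, 3], "color": ["red", "blue", "red"]})
test = pd.DataFrame({"size": [4, 5], "color": ["red", "green"]})

# One-hot encode each frame separately.
train_encoded = pd.get_dummies(train)
test_encoded = pd.get_dummies(test)

# Keep exactly the training columns, in the training order, in both frames.
final_train, final_test = train_encoded.align(test_encoded, join="left", axis=1)

print(list(final_train.columns))  # ['size', 'color_blue', 'color_red']
print(list(final_test.columns))   # ['size', 'color_blue', 'color_red']
print(final_test["color_blue"].isna().all())  # True: the column is added but left empty
```

The column that exists only in the training data shows up in the test frame filled with NaN. Passing `fill_value=0` to `align` is one way to fill it, if a model that cannot handle NaN is used afterwards.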