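Compiling hadoop-2.6.0-cdh5.15.1 on CentOS 6.9 on JD Cloud, and the pitfalls along the way

Why compile Hadoop

The official Hadoop binary package does not support native libraries such as snappy and zlib, so we need to recompile Hadoop to enable them.

1. Preparation before compiling

hadoop-2.6.0-cdh5.15.1-src.tar.gz contains a BUILDING.txt file that lists what the build requires and the build commands: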

Requirements:

* Windows System

* JDK 1.7+

* Maven 3.0 or later

* Findbugs 1.3.9 (if running findbugs)

* ProtocolBuffer 2.5.0

* CMake 2.6 or newer

* Windows SDK or Visual Studio 2010 Professional

* Unix command-line tools from GnuWin32 or Cygwin: sh, mkdir, rm, cp, tar, gzip

* zlib headers (if building native code bindings for zlib)

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

If using Visual Studio, it must be Visual Studio 2010 Professional (not 2012).

Do not use Visual Studio Express. It does not support compiling for 64-bit,

which is problematic if running a 64-bit system. The Windows SDK is free to

download here:

http://www.microsoft.com/en-us/download/details.aspx?id=8279

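Prepare the dependencies to install. The JDK must be version 1.7 and protobuf must be version 2.5.0, otherwise the build fails. If you want to reuse the Maven repository that gets downloaded later, Maven must be 3.0 or later.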
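The "3.0 or later" constraint can be checked mechanically before starting. The following is a minimal sketch and not something BUILDING.txt provides: `version_ge` is a hypothetical helper name, and it only handles purely numeric dotted versions such as 3.3.9 (not strings like 1.7.0_80).

```shell
#!/bin/sh
# version_ge A B: succeed (exit 0) when dotted numeric version A >= B.
# Hypothetical helper; missing fields count as 0, so 3.0 equals 3.0.0.
version_ge() {
  v1=$1
  v2=$2
  while [ -n "$v1" ] || [ -n "$v2" ]; do
    # Take the leading field of each version.
    f1=${v1%%.*}
    f2=${v2%%.*}
    # Drop the field just consumed.
    if [ "$v1" = "$f1" ]; then v1=; else v1=${v1#*.}; fi
    if [ "$v2" = "$f2" ]; then v2=; else v2=${v2#*.}; fi
    f1=${f1:-0}
    f2=${f2:-0}
    if [ "$f1" -gt "$f2" ]; then return 0; fi
    if [ "$f1" -lt "$f2" ]; then return 1; fi
  done
  return 0
}

# Example: the Maven version used in this article against the 3.0 minimum.
if version_ge 3.3.9 3.0; then
  echo "maven 3.3.9 satisfies >= 3.0"
fi
```

For the JDK and protobuf the requirement is an exact version, so a plain string comparison is enough there.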

apache-maven-3.3.9-bin.tar.gz download: http://apache.fayea.com/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz

hadoop-2.6.0-cdh5.15.1-src.tar.gz download: http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1-src.tar.gz

jdk-7u80-linux-x64.tar.gz download: https://download.oracle.com/otn/java/jdk/7u80-b15/jdk-7u80-linux-x64.tar.gz

protobuf-2.5.0.tar.gz download: protobuf-2.5.0.tar.gz can no longer be downloaded from the original site https://code.google.com/p/protobuf/downloads/list; the matching release can be found at https://github.com/protocolbuffers/protobuf/releases

2. Install dependency libraries

Install the following dependencies as the root user

[root@hadoop000 ~]# yum install -y svn ncurses-devel

[root@hadoop000 ~]# yum install -y gcc gcc-c++ make cmake

[root@hadoop000 ~]# yum install -y openssl openssl-devel svn ncurses-devel zlib-devel libtool

[root@hadoop000 ~]# yum install -y snappy snappy-devel bzip2 bzip2-devel lzo lzo-devel lzop autoconf automake cmake

3. Upload the software to the cloud server

This build is done as the hadoop user; create the hadoop user first if it does not exist

[hadoop@hadoop000 app]$ ll

total 46912

drwxrwxr-x 6 hadoop hadoop 4096 Aug 21 20:18 apache-maven-3.6.1

drwxr-xr-x 40 hadoop hadoop 4096 Nov 29 2018 flink-1.7.0

lrwxrwxrwx 1 hadoop hadoop 23 Aug 21 18:38 hadoop -> hadoop-2.6.0-cdh5.15.1/

drwxrwxr-x 11 hadoop hadoop 4096 Aug 21 19:07 hadoop-2.6.0-cdh5.15.1

-rw-r--r-- 1 root root 48019244 Aug 27 16:55 hadoop-2.6.0-cdh5.15.1-src.tar.gz

lrwxrwxrwx 1 hadoop hadoop 16 Aug 21 18:38 zookeeper -> zookeeper-3.4.6/

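drwxr-xr-x 10 hadoop hadoop 4096 Feb 20 2014 zookeeper-3.4.6

4. Install the JDK and configure environment variables

As the root user:

Extract the package. The install directory must be /usr/java, and after extracting remember to change the owner and group to root.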
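The extraction step itself is not shown in the transcript, so here is a minimal sketch of what it could look like. `install_jdk` is a hypothetical helper, and the tarball location in the usage note is an assumption.

```shell
#!/bin/sh
# install_jdk TARBALL DEST: unpack a JDK tarball into DEST.
# Hypothetical helper; DEST would be /usr/java in this article.
install_jdk() {
  tarball=$1
  dest=$2
  mkdir -p "$dest" || return 1
  tar -xzf "$tarball" -C "$dest" || return 1
  # The archive can carry the packager's owner and group, so reset
  # ownership to root:root, but only when actually running as root.
  if [ "$(id -u)" -eq 0 ]; then
    chown -R root:root "$dest"
  fi
}
```

As root this would be called as `install_jdk ~/soft/jdk-7u80-linux-x64.tar.gz /usr/java`, assuming the tarball was uploaded to ~/soft.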

Add the environment variables

[root@hadoop000 jdk1.7.0_80]# vim /etc/profile

# Add the following two lines

export JAVA_HOME=/usr/java/jdk1.7.0_80

export PATH=$JAVA_HOME/bin:$PATH

[root@hadoop000 jdk1.7.0_80]# source /etc/profile

# Check that Java was installed successfully

[root@hadoop000 jdk1.7.0_80]# java -version

java version "1.7.0_80"

Java(TM) SE Runtime Environment (build 1.7.0_80-b15)

[root@hadoop000 usr]# cd java

[root@hadoop000 java]# ll

total 8

drwxr-xr-x 8 uucp 143 4096 Apr 11 2015 jdk1.7.0_80

drwxr-xr-x 8 root root 4096 Apr 11 2015 jdk1.8.0_45

[root@hadoop000 java]# chown -R root:root jdk1.7.0_80/

[root@hadoop000 java]# ll

total 8

drwxr-xr-x 8 root root 4096 Apr 11 2015 jdk1.7.0_80

drwxr-xr-x 8 root root 4096 Apr 11 2015 jdk1.8.0_45

[root@hadoop000 java]#

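5. Install Maven and configure environment variables

# Edit the hadoop user's environment variables

[hadoop@hadoop000 ~]$ vim ~/.bash_profile

export JAVA_HOME=/usr/java/jdk1.7.0_80

# Add or modify the following; MAVEN_OPTS sets the memory available to Maven so the build does not fail from too little memory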

export MAVEN_HOME=/home/hadoop/app/apache-maven-3.3.9

export MAVEN_OPTS="-Xms1024m -Xmx1024m"

export PATH=$MAVEN_HOME/bin:$JAVA_HOME/bin:$PATH

[hadoop@hadoop000 ~]$ source ~/.bash_profile

[hadoop@hadoop000 ~]$ which mvn

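~/app/apache-maven-3.3.9/bin/mvn

Configure the repository addresses in Maven's settings.xml

[hadoop@hadoop000 ~]$ vim ~/app/apache-maven-3.3.9/conf/settings.xml

# Location of Maven's local repository

<localRepository>/home/hadoop/maven_repo/repo</localRepository>

# Add the Aliyun mirror of the central repository

<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>central</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>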
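A quick way to confirm the mirror entry was actually saved is to search settings.xml for its id. This is only a rough text match, assuming the `<id>` element sits on a single line; it is not an XML parser, and `has_mirror_id` is a hypothetical helper name.

```shell
#!/bin/sh
# has_mirror_id FILE ID: succeed when FILE contains the literal text
# <id>ID</id>. Plain fixed-string match, no XML parsing.
has_mirror_id() {
  grep -qF "<id>$2</id>" "$1"
}
```

For example, `has_mirror_id ~/app/apache-maven-3.3.9/conf/settings.xml nexus-aliyun && echo "mirror configured"` prints the message only when the id is present.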

6. Install protobuf and configure environment variables

[hadoop@hadoop000 ~]$ tar -zxvf ~/soft/protobuf-2.5.0.tar.gz -C ~/app/

## Build the software

[hadoop@hadoop000 protobuf-2.5.0]$ cd ~/app/protobuf-2.5.0/

## --prefix= sets the directory the built package will be installed into; make compiles, make install installs

[hadoop@hadoop000 protobuf-2.5.0]$ ./configure --prefix=/home/hadoop/app/protobuf-2.5.0

## Compile and install

[hadoop@hadoop000 protobuf-2.5.0]$ make

[hadoop@hadoop000 protobuf-2.5.0]$ make install

## Add environment variables

[hadoop@hadoop000 protobuf-2.5.0]$ vim ~/.bash_profile

export HADOOP_HOME=/home/hadoop/app/hadoop

export ZOOKEEPER_HOME=/home/hadoop/app/zookeeper

export MAVEN_HOME=/home/hadoop/app/apache-maven-3.3.9

export MAVEN_OPTS="-Xms1024m -Xmx1024m"

# Append the following two lines; the bin directory does not exist until protobuf has been built and installed

export PROTOBUF_HOME=/home/hadoop/app/protobuf-2.5.0

export PATH=$PROTOBUF_HOME/bin:$MAVEN_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$ZOOKEEPER_HOME/bin:$PATH

[hadoop@hadoop000 protobuf-2.5.0]$ source ~/.bash_profile

Verify that the build and the settings took effect; if the output is libprotoc 2.5.0, it worked

[hadoop@hadoop000 protobuf-2.5.0]$ protoc --version

libprotoc 2.5.0

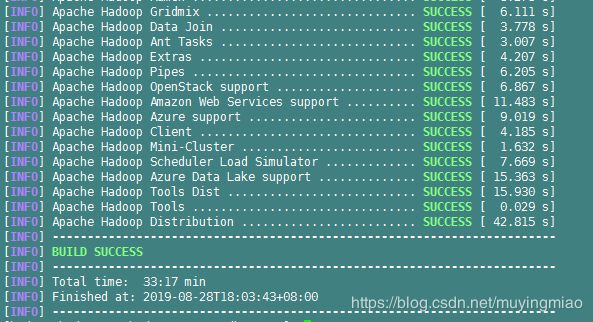
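With the JDK, Maven and protobuf in place, a short pre-flight check can catch a missing tool before a half-hour build starts. This is a sketch under my own assumptions: `missing_tools` and `protoc_is_250` are hypothetical helper names, and the tool list is simply what the earlier sections installed.

```shell
#!/bin/sh
# missing_tools NAME...: print each command name that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# protoc_is_250 OUTPUT: succeed when OUTPUT (the text printed by
# `protoc --version`) is exactly "libprotoc 2.5.0".
protoc_is_250() {
  [ "$1" = "libprotoc 2.5.0" ]
}

# Report anything the build needs that is not installed yet.
missing=$(missing_tools java mvn protoc cmake gcc make)
if [ -n "$missing" ]; then
  echo "missing tools:" $missing
fi
```

The protoc check would be used as `protoc_is_250 "$(protoc --version)" || echo "wrong protoc version"`.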

7. Compile Hadoop

Go to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1 and edit pom.xml, changing https to http in the URL shown in the figure
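The same edit can be scripted instead of done by hand. The sketch below is illustrative only: `force_http` is a hypothetical helper, and the host used in the usage note is an assumption, since the figure is what identifies the URL that actually needs changing.

```shell
#!/bin/sh
# force_http FILE HOST: rewrite https://HOST to http://HOST in FILE,
# keeping the original as FILE.bak. Hypothetical helper.
force_http() {
  file=$1
  host=$2
  tmp="$file.tmp.$$"
  cp "$file" "$file.bak" || return 1
  # '#' is the sed delimiter so the slashes in the URL need no escaping.
  sed "s#https://$host#http://$host#g" "$file.bak" > "$tmp" || return 1
  mv "$tmp" "$file"
}
```

A call such as `force_http pom.xml repository.cloudera.com` assumes the Cloudera repository is the one in question; URLs for other hosts are left untouched.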

[hadoop@hadoop000 hadoop-2.6.0-cdh5.15.1]$ mvn clean package -Pdist,native -DskipTests -Dtar

[INFO] Executed tasks

[INFO]

[INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---

[INFO] Building jar: /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-dist/target/hadoop-dist-2.6.0-cdh5.15.1-javadoc.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for Apache Hadoop Main 2.6.0-cdh5.15.1:

[INFO]

[INFO] Apache Hadoop Main ................................. SUCCESS [ 4.257 s]

[INFO] Apache Hadoop Build Tools .......................... SUCCESS [ 1.886 s]

[INFO] Apache Hadoop Project POM .......................... SUCCESS [ 2.301 s]

[INFO] Apache Hadoop Annotations .......................... SUCCESS [ 3.889 s]

[INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.511 s]

[INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 1.907 s]

[INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 6.213 s]

[INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 7.917 s]

[INFO] Apache Hadoop Auth ................................. SUCCESS [ 9.816 s]

[INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 4.104 s]

[INFO] Apache Hadoop Common ............................... SUCCESS [02:17 min]

[INFO] Apache Hadoop NFS .................................. SUCCESS [ 9.221 s]

[INFO] Apache Hadoop KMS .................................. SUCCESS [ 14.587 s]

[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.051 s]

[INFO] Apache Hadoop HDFS ................................. SUCCESS [06:42 min]

[INFO] Apache Hadoop HttpFS ............................... SUCCESS [ 54.012 s]

[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 12.840 s]

[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 11.015 s]

[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.148 s]

[INFO] hadoop-yarn ........................................ SUCCESS [ 0.186 s]

[INFO] hadoop-yarn-api .................................... SUCCESS [07:45 min]

[INFO] hadoop-yarn-common ................................. SUCCESS [01:15 min]

[INFO] hadoop-yarn-server ................................. SUCCESS [ 0.213 s]

[INFO] hadoop-yarn-server-common .......................... SUCCESS [ 25.428 s]

[INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 51.318 s]

[INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 12.282 s]

[INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 16.661 s]

[INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 55.619 s]

[INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 4.430 s]

[INFO] hadoop-yarn-client ................................. SUCCESS [ 15.359 s]

[INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.194 s]

[INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 8.804 s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 9.217 s]

[INFO] hadoop-yarn-site ................................... SUCCESS [ 0.125 s]

[INFO] hadoop-yarn-registry ............................... SUCCESS [ 14.428 s]

[INFO] hadoop-yarn-project ................................ SUCCESS [ 20.549 s]

[INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.293 s]

[INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 50.975 s]

[INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 58.558 s]

[INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 12.974 s]

[INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 29.624 s]

[INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 18.959 s]

[INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 18.554 s]

[INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 7.321 s]

[INFO] hadoop-mapreduce-client-nativetask ................. SUCCESS [02:31 min]

[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 8.364 s]

[INFO] hadoop-mapreduce ................................... SUCCESS [ 4.175 s]

[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 5.994 s]

[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 11.467 s]

[INFO] Apache Hadoop Archives ............................. SUCCESS [ 3.111 s]

[INFO] Apache Hadoop Archive Logs ......................... SUCCESS [ 3.157 s]

[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 8.273 s]

[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 6.111 s]

[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 3.778 s]

[INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 3.007 s]

[INFO] Apache Hadoop Extras ............................... SUCCESS [ 4.207 s]

[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 6.205 s]

[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 6.867 s]

[INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [ 11.483 s]

[INFO] Apache Hadoop Azure support ........................ SUCCESS [ 9.019 s]

[INFO] Apache Hadoop Client ............................... SUCCESS [ 4.185 s]

[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 1.632 s]

[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 7.669 s]

[INFO] Apache Hadoop Azure Data Lake support .............. SUCCESS [ 15.363 s]

[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 15.930 s]

[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.029 s]

[INFO] Apache Hadoop Distribution ......................... SUCCESS [ 42.815 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 33:17 min

[INFO] Finished at: 2019-08-28T18:03:43+08:00

[INFO] ------------------------------------------------------------------------
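Once the build reports SUCCESS, the next thing to look for is the distribution tarball. The sketch below assumes that -Pdist with -Dtar leaves it under hadoop-dist/target, the same directory the javadoc jar in the log was written to; `find_dist_tarball` is a hypothetical helper name.

```shell
#!/bin/sh
# find_dist_tarball SRC_ROOT: print the first hadoop-*.tar.gz found under
# SRC_ROOT/hadoop-dist/target, or fail when there is none.
find_dist_tarball() {
  for f in "$1"/hadoop-dist/target/hadoop-*.tar.gz; do
    # When nothing matches, the pattern stays literal and is not a file.
    if [ -f "$f" ]; then
      echo "$f"
      return 0
    fi
  done
  return 1
}
```

After unpacking that tarball, running `hadoop checknative -a` from its bin directory reports which native libraries (snappy, zlib and so on) the build picked up, which is the whole reason for compiling.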

Problems encountered during compilation:

1) Incomplete package download

Failed to execute goal org.apache.maven.plugins:maven-antrun-plugin:1.7:run (dist) on project hadoop-hdfs-httpfs: An Ant BuildException has occured: exec returned: 2

[ERROR] around Ant part ...... @ 10:136 in /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-hdfs-project/hadoop-hdfs-httpfs/target/antrun/build-main.xml

This happens because the Tomcat archive was not downloaded completely. Download the matching apache-tomcat-6.0.53.tar.gz yourself and place it in the required directories.

The Tomcat archive must be placed in both of these directories:

/home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-hdfs-project/hadoop-hdfs-httpfs/downloads

/home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-common-project/hadoop-kms/downloads
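Since the root cause is a truncated archive, it is worth verifying the replacement before copying it into place. A minimal sketch, assuming gzip and tar are available; `tarball_ok` and `place_tomcat` are hypothetical helper names.

```shell
#!/bin/sh
# tarball_ok FILE: succeed when FILE is a complete, readable .tar.gz.
tarball_ok() {
  gzip -t "$1" 2>/dev/null && tar -tzf "$1" >/dev/null 2>&1
}

# place_tomcat TARBALL SRC_ROOT: copy a verified tarball into the two
# downloads directories under the Hadoop source root.
place_tomcat() {
  tarball=$1
  src_root=$2
  tarball_ok "$tarball" || {
    echo "corrupt or incomplete: $tarball" >&2
    return 1
  }
  for dir in hadoop-hdfs-project/hadoop-hdfs-httpfs/downloads \
             hadoop-common-project/hadoop-kms/downloads; do
    mkdir -p "$src_root/$dir" && cp "$tarball" "$src_root/$dir/" || return 1
  done
}
```

Here that would be `place_tomcat apache-tomcat-6.0.53.tar.gz /home/hadoop/app/hadoop-2.6.0-cdh5.15.1`, run from wherever the archive was uploaded.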

[hadoop@hadoop000 embed]$ cd /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-hdfs-project/hadoop-hdfs-httpfs/

[hadoop@hadoop000 hadoop-hdfs-httpfs]$ ll

total 44

drwxrwxr-x 2 hadoop hadoop 4096 Aug 10 2018 dev-support

drwxrwxr-x 2 hadoop hadoop 4096 Aug 28 00:21 downloads

-rw-rw-r-- 1 hadoop hadoop 22159 Aug 10 2018 pom.xml

-rw-rw-r-- 1 hadoop hadoop 795 Aug 10 2018 README.txt

drwxrwxr-x 5 hadoop hadoop 4096 Aug 10 2018 src

drwxrwxr-x 14 hadoop hadoop 4096 Aug 28 05:00 target

[hadoop@hadoop000 hadoop-hdfs-httpfs]$ cd downloads/

[hadoop@hadoop000 downloads]$ ll

total 184

-rw-rw-r-- 1 hadoop hadoop 185096 Aug 28 00:23 apache-tomcat-6.0.53.tar.gz

[hadoop@hadoop000 downloads]$ rm -rf apache-tomcat-6.0.53.tar.gz

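[hadoop@hadoop000 downloads]$ rz

2) The Hadoop build also compiles an Amazon service module, hadoop-tools/hadoop-aws, and that is where this problem appears. The fix usually suggested online is to install the library locally to get past it. The error is: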

[ERROR] Failed to execute goal on project hadoop-aws: Could not resolve dependencies for project org.apache.hadoop:hadoop-aws:jar:2.6.0-cdh5.15.1: Failed to collect dependencies at com.amazonaws:DynamoDBLocal:jar:[1.11.86,2.0): No versions available for com.amazonaws:DynamoDBLocal:jar:[1.11.86,2.0) within specified range -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

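[ERROR] mvn -rf :hadoop-aws

Amazon's official guidance on this problem is at:

https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.html

This time I downloaded the dependency and copied it to the server. Because the declared version range is very broad, after downloading I checked which versions the Maven repository actually offered and picked a usable one, here 1.11.477. Change the DynamoDBLocal version in the pom.xml of the hadoop-project module of hadoop-2.6.0-cdh5.15.1 to 1.11.477 and recompile.

Another approach is also given online:

First open hadoop-2.6.0-cdh5.14.2/hadoop-project/pom.xml and search for DynamoDBLocal; you will find one entry under dependencies and one under repositories.

1. Change the version in dependencies from the initial [1.11.86,2.0) to [1.11,2.0).

2. Then replace everything in the repositories entry with the source address given on the official site: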

<repository>
  <id>dynamodb-local-oregon</id>
  <name>DynamoDB Local Release Repository</name>
  <url>https://s3-us-west-2.amazonaws.com/dynamodb-local/release</url>
</repository>

Then edit Maven's settings.xml and add a mirror:

<mirror>
  <id>dynamodb-local-oregon</id>
  <name>DynamoDB Local Release Repository</name>
  <url>https://s3-ap-southeast-1.amazonaws.com/dynamodb-local-singapore/release</url>
  <mirrorOf>*</mirrorOf>
</mirror>

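After editing settings.xml, run mvn help:effective-settings to check that the configuration took effect.

Then rerun the build command; it takes a while to produce a result.

My own solution, tested and working

Open hadoop-2.6.0-cdh5.15.1/hadoop-project/pom.xml, search for DynamoDBLocal, and change the version in dependencies from the initial "[1.11.86,2.0)" to "1.11.86". Then download that version of the jar from https://www.kumapai.com/open/518-DynamoDBLocal/1-11-86

Add the jar to the local repository with mvn: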

mvn install:install-file -Dfile=/home/hadoop/software/DynamoDBLocal-1.11.86.jar -DgroupId=com.amazonaws -DartifactId=DynamoDBLocal -Dversion=1.11.86 -Dpackaging=jar
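To confirm the install landed where the build will look for it, the expected file path can be derived from the coordinates: in Maven's default repository layout the dots in the groupId become directory separators. `artifact_path` below is a hypothetical helper that only computes the path.

```shell
#!/bin/sh
# artifact_path REPO GROUP ARTIFACT VERSION: print where Maven's default
# repository layout stores the jar for these coordinates.
artifact_path() {
  group_dir=$(printf '%s' "$2" | tr '.' '/')
  printf '%s/%s/%s/%s/%s-%s.jar\n' "$1" "$group_dir" "$3" "$4" "$3" "$4"
}

artifact_path /home/hadoop/maven_repo/repo com.amazonaws DynamoDBLocal 1.11.86
```

Passing the result to `ls -l` shows whether the jar is there and who owns it.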

Recompile:

[hadoop@hadoop000 hadoop-2.6.0-cdh5.15.1]$ mvn clean package -Pdist,native -DskipTests -Dtar

Make sure the jar's owner and group are hadoop:hadoop. The build then failed with another error:

[INFO] Total time: 29:33 min

[INFO] Finished at: 2019-08-28T13:04:49+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:2.5.1:testCompile (default-testCompile) on project hadoop-aws: Compilation failure: Compilation failure:

[ERROR] /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-tools/hadoop-aws/src/test/java/org/apache/hadoop/fs/s3a/s3guard/DynamoDBLocalClientFactory.java:[31,31] error: package org.apache.commons.lang3 does not exist

[ERROR] /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-tools/hadoop-aws/src/test/java/org/apache/hadoop/fs/s3a/s3guard/DynamoDBLocalClientFactory.java:[104,8] error: cannot find symbol

[ERROR] symbol: variable StringUtils

[ERROR] location: class DynamoDBLocalClientFactory

[ERROR] /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-tools/hadoop-aws/src/test/java/org/apache/hadoop/fs/s3a/s3guard/DynamoDBLocalClientFactory.java:[106,10] error: cannot find symbol

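[ERROR] -> [Help 1]

Add the org.apache.commons.lang3 dependency to Hadoop's main pom.xml:

<dependency>
  <groupId>org.apache.commons</groupId>
  <artifactId>commons-lang3</artifactId>
  <version>3.5</version>
</dependency>

Alternatively, add the same org.apache.commons.lang3 dependency under /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/hadoop-tools/hadoop-aws. Continue the build, and after a long wait the long-awaited SUCCESS finally appears.

For reference, here is my own Maven settings.xml.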

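<localRepository>/home/hadoop/maven_repo/repo</localRepository>

<mirrors>
  <mirror>
    <id>nexus-aliyun</id>
    <mirrorOf>central</mirrorOf>
    <name>Nexus aliyun</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public</url>
  </mirror>
  <mirror>
    <id>cloudera</id>
    <mirrorOf>*</mirrorOf>
    <name>cloudera Readable Name for this Mirror.</name>
    <url>http://repository.cloudera.com/artifactory/cloudera-repos/</url>
  </mirror>
  <mirror>
    <id>dynamodb-local-oregon</id>
    <name>DynamoDB Local Release Repository</name>
    <url>https://s3-ap-southeast-1.amazonaws.com/dynamodb-local-singapore/release</url>
    <mirrorOf>*</mirrorOf>
  </mirror>
</mirrors>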

localRep

NEORepo

http://maven.aliyun.com/nexus/content/groups/public/

true

always

internal

https://repository.cloudera.com/artifactory/cloudera-repos/

false

NEORepo

http://maven.aliyun.com/nexus/content/groups/public/

true

always