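- Python web crawling (Chapter 1 of 3: crawler libraries, robots.txt rules (staying within the law), viewing and fetching page source)

The complete learning path for Python web crawling: Chapter 1 is this article; Chapter 2 is "Python web crawling (Chapter 2 of 3: installing a browser driver, driving the browser to load pages, batch-downloading resources)" - CSDN Blog https://blog.csdn.net/2302_78022640/article/details/149431071?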

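- Common problems with the robots protocol in Python web crawlers, and how to solve them

In Python crawler development, applying the robots protocol correctly is key to keeping a crawler compliant. In practice, however, developers run into a range of problems which, if handled badly, can get the crawler blocked or create legal risk. This article reviews common problems in using the robots protocol and gives a targeted fix for each. 1. Compliance problems caused by inaccurate protocol parsing. 1.1 Misreading the scope of the User-agent wildcard. Symptom: treating `User-agent: *` as applying in every situation and overlooking rules written separately for specific crawlers…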
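The wildcard pitfall described above can be illustrated with the standard library's `urllib.robotparser`. This is only a minimal sketch: the robots.txt text, the crawler names `ExampleBot` and `SomeOtherBot`, and the `example.com` URLs are all made up for illustration, and the rules are parsed from a string so nothing is fetched over the network.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a generic group plus a stricter group for one named bot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""


def build_parser(text):
    """Parse robots.txt content that is already in memory."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A bot without its own group falls back to the "*" rules.
generic_allowed = parser.can_fetch("SomeOtherBot", "https://example.com/index.html")
generic_private = parser.can_fetch("SomeOtherBot", "https://example.com/private/a.html")

# A bot with its own group is judged by that group, not by "*".
named_allowed = parser.can_fetch("ExampleBot", "https://example.com/index.html")
```

Checking `can_fetch` with the user agent the crawler really sends, rather than assuming the `*` group applies, is what avoids the misreading.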

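- Python web crawlers: the basic workflow and the robots protocol explained

女码农的重启

python, web crawler, Java, programming language

In a data-driven era, web crawlers are an efficient tool for gathering information from the internet, and developing them properly depends on understanding the basic workflow and complying with the robots protocol. This article walks through the core workflow of a Python web crawler and examines why the robots protocol matters and how to follow it in practice. 1. The basic workflow of a Python web crawler. A Python crawler's work can be divided into four core stages, each linked to the next, which together form the complete data-collection chain. 1.1 Sending a network request. This is the crawler's first interaction with the target server: it sends an H…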

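- 156 Python web crawling resources, so you never have to hunt for resources again!_crawler CSDN resources

This list covers Python libraries for web scraping and data processing. Network, general: urllib - network library (standard library); requests - network library; grab - network library (based on pycurl); pycurl - network library (binding to libcurl); urllib3 - Python HTTP library with thread-safe connection pooling, file post support and high availability; httplib2 - network library; RoboBrowser - a simple, Pythonic library for browsing the web without a standalone browser; Mechani…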

- Python notes: downloading the Chrome driver for Selenium

hero.zhong

python notes, selenium

Download page for the Selenium Chrome driver: https://googlechromelabs.github.io/chrome-for-testing/#stable The problem I ran into: Python crawler code that drives Chrome fails with `OSError: [WinError 193] %1 is not a valid Win32 application`. This error usually means…

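- Python web scraping and data processing tools: from beginner to expert

俞凯润

Python web scraping and data processing tools: from beginner to expert. awesome-web-scraping: List of libraries, tools and APIs for web scraping and data processing. Project address: https://gitcode.com/gh_mirrors/aw/awesome-web-scraping This article is based on the well-known Python web scraping resource repository lorien/awesome-w…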

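- Python web crawling: an introduction to the urllib library

db_hsk_2099

python, crawler, programming language

1. About urllib. Purpose: urllib is part of Python's standard library, a built-in HTTP request library used to send HTTP/FTP requests; it can be seen as a collection of components for working with URLs. Features: simple to use; supports HTTP, HTTPS, FTP and other protocols. 2. urllib contains four modules: (1) urllib.request (2) urllib.parse (3) urllib.error (4) urllib.robotparse…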
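Of the modules listed, `urllib.parse` is the easiest to try without touching the network. The sketch below builds and then takes apart a URL; the helper name `build_search_url`, the `example.com` address and the query parameters are assumptions made up for illustration.

```python
from urllib.parse import urlencode, urljoin, urlparse


def build_search_url(base, path, params):
    """Join a base URL with a relative path and append an encoded query string."""
    return urljoin(base, path) + "?" + urlencode(params)


# Hypothetical site and parameters, used only to show the calls.
url = build_search_url("https://example.com/docs/", "search", {"q": "web crawler", "page": 2})

# urlparse splits the URL back into its components.
parts = urlparse(url)
```

`urllib.request` would then be the module that actually sends a request to such a URL, and `urllib.error` the one that defines the exceptions it raises.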

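- Python network security in practice_Hands-on Python web crawling

weixin_39907850

Python network security in practice

File operations generally use the os and os.path modules: `import os.path`; `os.path.exists('D:\\Python\\1.txt')` checks whether the file exists; `abspath(path)` returns the absolute path of path; `dirname(p)` returns the directory part of the path; `exists(path)` checks whether the file exists; `getatime(filename)` returns the file's last access time; `getctime(filename)` returns…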
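The `os.path` calls listed above can be tried safely on a throwaway file. In this sketch the helper `describe_path` and the file names are hypothetical; a temporary directory stands in for the `D:\\Python` path from the excerpt so the example runs on any system.

```python
import os
import os.path
import tempfile


def describe_path(path):
    """Collect a few os.path facts about a path (the file may or may not exist)."""
    absolute = os.path.abspath(path)
    exists = os.path.exists(path)
    return {
        "exists": exists,
        "absolute": absolute,
        "directory": os.path.dirname(absolute),
        # getatime raises OSError for a missing file, so guard it.
        "last_access": os.path.getatime(path) if exists else None,
    }


with tempfile.TemporaryDirectory() as workdir:
    sample = os.path.join(workdir, "1.txt")
    with open(sample, "w", encoding="utf-8") as handle:
        handle.write("hello")
    info = describe_path(sample)
    missing = describe_path(os.path.join(workdir, "missing.txt"))
```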

- Python网络爬虫案例实战:动态网页爬取:selenium爬取动态网页

andyyah晓波

Python网络爬虫案例实战python爬虫selenium

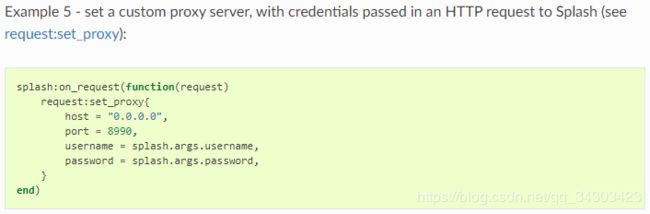

Python网络爬虫案例实战:动态网页爬取:selenium爬取动态网页利用“审查元素”功能找到源地址十分容易,但是有些网站非常复杂。除此之外,有一些数据真实地址的URL也十分冗长和复杂,有些网站为了规避这些爬取会对地址进行加密。因此,在此介绍另一种方法,即使用浏览器渲染引擎,直接用浏览器在显示网页时解析HTML,应用CSS样式并执行JavaScript的语句。此方法在爬虫过程中会打开一个浏览器,

- Python网络爬虫:Scrapy框架的全面解析

4.0啊

Python网络爬虫pythonscrapyipython

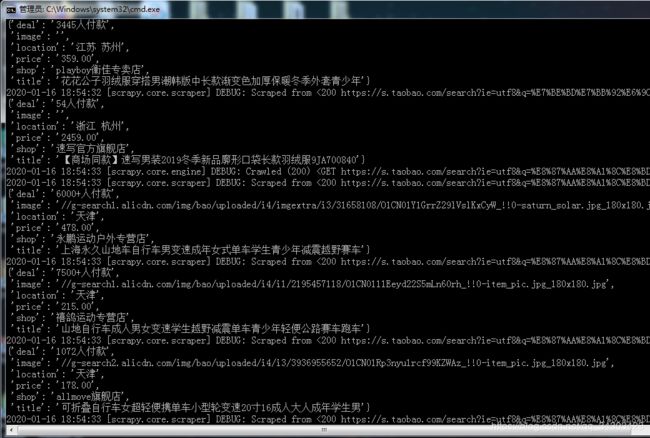

Python网络爬虫:Scrapy框架的全面解析一、引言在当今互联网的时代,数据是最重要的资源之一。为了获取这些数据,我们经常需要编写网络爬虫来从各种网站上抓取信息。Python作为一种强大的编程语言,拥有许多用于网络爬虫的工具和库。其中,Scrapy是一个功能强大且灵活的开源网络爬虫框架,它提供了一种高效的方式来爬取网站并提取所需的数据。本文将深入探讨Scrapy框架的核心概念、使用方法以及高级

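- Python multithreaded and asynchronous crawlers_Python web crawling (high-performance asynchronous crawlers)

weixin_39542608

1. Background. In essence, a crawler is a client sending requests to fetch server responses in bulk. If we have several URLs to crawl and use a single thread running serially, each fetch must finish before the next can start, which is very inefficient. It should be stressed that running N tasks serially in one thread is not inefficient in itself: if all N tasks are purely computational, the thread still keeps CPU utilization high. Serial crawling in a single thread is inefficient because crawling is clearly an IO-bound (blocking) pro…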
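The cost of waiting on IO one request at a time can be seen with a small `asyncio` sketch. No real requests are sent here: `fake_fetch` is a hypothetical stand-in that just sleeps to imitate network latency, and the URLs are invented.

```python
import asyncio
import time


async def fake_fetch(url, delay=0.05):
    """Stand-in for a network request: waits, then returns a fake response."""
    await asyncio.sleep(delay)
    return f"response from {url}"


async def fetch_serial(urls):
    # Each fetch starts only after the previous one has finished.
    return [await fake_fetch(url) for url in urls]


async def fetch_concurrent(urls):
    # All fetches wait at the same time; gather keeps the input order.
    return await asyncio.gather(*(fake_fetch(url) for url in urls))


def timed(coroutine_function, urls):
    """Run one of the fetchers and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coroutine_function(urls))
    return list(results), time.perf_counter() - start


URLS = [f"https://example.com/page/{n}" for n in range(4)]
serial_results, serial_elapsed = timed(fetch_serial, URLS)
concurrent_results, concurrent_elapsed = timed(fetch_concurrent, URLS)
```

The serial run takes roughly the sum of the delays, while the concurrent run takes roughly the longest single delay, which is the gap the passage attributes to blocking IO.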

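- Python web crawling basics, day 1

会飞的猪 1

Python web crawler, python, crawler, programming language, distributed, knowledge

What is a web crawler? In plain terms, a crawler is a program that imitates the way a person requests a website. It can request pages automatically, grab the data, and then extract the valuable parts according to set rules. General-purpose and focused crawlers. General-purpose crawlers are an important part of search engine crawling systems (Baidu, Google, Sogou and so on); they mainly download pages from the internet to local storage, forming a mirror backup of internet content. Focused crawlers are built for a specific need; they differ from general-purpose crawlers in that, while fetching pages, a focused crawler subjects the content to…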

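- Python web crawling: front-end basics, the Socket library, TCP programming with Socket, understanding HTTP, getting to know Cookies, and more_python socket library

软件开发Java

programmer, python, crawler, front end

Finally, Python rose and became hugely popular because it has many strengths, a wide range of applications, and the endorsement of leading engineers. The barrier to learning Python is low, but it offers many paths upward: through it you can move into machine learning, data mining, big data, CS and other more advanced fields. Python can be used for web applications, scientific computing, data analysis, web crawlers, machine learning, natural language processing, games, desktop applications… Python can do a great deal; learn the fundamentals well, then pick a clear direction. Here I am sharing a complete set of Pytho…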

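- Best practices for getting started with Python web crawling: common techniques for scraping page data with Python

CyMylive.

python, crawler, programming language

1. Preface. The internet holds a vast amount of usable data, and web crawling is one way to get it. A web crawler is an automated program that browses pages on the internet and extracts the data you want. Python is a popular programming language and well suited to writing crawlers; it has several powerful libraries and tools that help developers write efficient crawlers with little effort. This article introduces best practices for getting started with Python web crawling, helping readers at levels from basic to advanced master Python web crawl…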

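- Getting started with scrapy for Python crawlers: this one article is enough_Python web crawling 4 - scrapy basics

weixin_39977136

scrapy is a powerful crawling framework and well worth studying properly. This article summarizes what I learned from studying and using scrapy; the content is fairly basic, more like introductory notes, and mainly covers scrapy's basic concepts and usage. scrapy framework: first, the classic scrapy diagram. The scrapy framework consists of the following parts: Scrapy Engine; Spiders; Scheduler; Downloader; Item Pipeline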

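- Python web crawling techniques explained: from basic implementation to handling anti-crawling measures

小张在编程

Python learning, python, crawler, programming language

A web crawler (Web Crawler) is a technique in which an automated program imitates human browser behavior to extract structured data from web pages. Its core logic follows a "request, parse, store" flow, and it is widely used for industry data monitoring, competitor analysis, academic research and similar scenarios. This article systematically covers core crawler techniques and, drawing on engineering practice, discusses strategies for handling anti-crawling measures. 1. Core crawler fundamentals. 1.1 The HTTP protocol and the request-response model. At its core, a web crawler simulates the HTTP interaction between a client and a server. The client sends an HTTP request (GET…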

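- Basic use of Python web crawlers

逾非时

python, crawler, programming language

Introduction to web crawlers. We usually say "Python crawler", which can be misleading: crawlers are not unique to Python. Many languages can be used to write them, for example PHP, Java, C#, C++ and Python. So why did Python crawling take off? Python's popularity does not come from crawling but from AI, data analysis (Google AlphaGo) and similar uses; Java can do these things too, and the reason Python is chosen for crawlers is…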

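- Master Python web crawling in 30 hours

企鹅侠客

ops, practical resources, crawler, network protocols, python, interviews

This article shares a well-structured, in-depth set of Python web crawling study materials, suitable for systematic learning from beginner to advanced. It has 10 chapters in total, covering basic syntax, core crawling techniques, getting past anti-crawling measures, the Scrapy framework, and hands-on distributed crawling, which covers most real-world crawler development needs. Overview of the materials. Chapter 1: Python web crawling fundamentals: an introduction to Python, syntax, control flow, file operations, exception handling and OOP basics. Chapter 2: how crawlers work: an in-depth explanation of basic crawler concepts, the fetching workflow and page structure. Chapter 3…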

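- Resources for learning Python crawling

python游乐园

text processing, python, crawler, learning

Books. 《Python网络爬虫从入门到实践》 (Python Web Crawlers: From Beginner to Practice) moves from simple to advanced and covers the fundamentals and practical techniques of Python crawling in detail, including page parsing, data storage and anti-crawling strategies. It comes with many code examples and case studies and suits beginners who want to get started quickly. 《Python网络数据采集》 (Web Scraping with Python) covers every aspect of web scraping, including handling HTML and XML, using regular expressions, and dealing with forms and login authentication. It also explains how to use the Scrapy framework for large-scale data collection and how to deal with anti-crawling mechanisms…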

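- Web crawlers: Python web crawlers and C# web crawlers

笑非不退

C#, python, crawler, python

A crawler is an automated program that collects data from the internet: it sends requests to websites over HTTP, retrieves page content, and analyzes that content to extract and store page data. A crawler can handle exceptions, retry on errors and so on during fetching, so that crawling keeps running efficiently. 1. Python web crawlers. A Python web crawler is an automated program for collecting data from web pages. With a crawler you can gather and process data from the internet, such as product information or news content. Pytho…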

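- 2024 edition, from beginner to practice: a guide to Python web crawling

2401_84689601

programmer, python, crawler, programming language

With the rapid growth of the internet, a huge amount of information is stored on websites, and this information is vital for decision-making in data analysis, market research and other fields. Collecting it by hand, however, is slow and inefficient. This is where web crawlers come in. This article explains how to build and run a simple web crawler in Python and how to apply it in real projects. What is a web crawler? A web crawler (Web Crawler) is a program that obtains information from the internet automatically; it does so by visiting pages, extracting data and saving it, thereby collecting informa…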

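- Python web crawling and data collection in practice: the basic crawler workflow

m0_74823658

interviews, learning path, Alibaba, python, crawler, programming language

A web crawler (Web Scraper) is a program that automatically collects information from the internet. It is widely used in search engines, data collection, market analysis and other fields. This article examines the basic crawler workflow in detail, including URL extraction, HTTP requests and responses, data parsing and storage, and a practical crawler example. It covers not only the basic concepts but also the technical difficulties met in real development and up-to-date solutions. 1. URL extraction. URL extraction is one of the most basic steps in web crawling: the crawler first needs to extract from the target site the … to be fetched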
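The URL extraction step can be sketched with the standard library's `html.parser`. This is an illustrative assumption rather than the article's own code: the class `LinkCollector`, the helper `extract_links`, the sample HTML and the `example.com` base URL are all invented for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Relative links become absolute so they can be fetched later.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Hypothetical page content; a real crawler would get this from an HTTP response.
PAGE = (
    '<p><a href="/news/1.html">one</a> '
    '<a href="https://example.org/x">two</a> '
    '<a name="top">no href</a></p>'
)
links = extract_links(PAGE, "https://example.com/index.html")
```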

- [Yugong series] "Python Web Crawlers from Beginner to Expert" 056: Scrapy_Redis distributed crawler (the Scrapy-Redis module)

愚公搬代码

Yugong series - book column, python, crawler, scrapy

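- Python web crawler course project topic_Shandong Jianzhu University computer networks course project "Design of a Python-Based Web Crawler"...

weixin_32243075

Shandong Jianzhu University computer networks course project "Design of a Python-Based Web Crawler". Shandong Jianzhu University course project report. Title: Design of a Python-Based Web Crawler. Course: Computer Networks A. School (department): School of Management Engineering. Major: Information Management and Information Systems. Class: Student name: Student ID: Supervisor: Completion date: Contents: 1 Design purpose; 2 Design task; 3 Overall design of the crawler program; 4 Detailed design of the crawler program; 4.1 Design environment and target analysis; 4.1.1 Design environment; 4.1.2 Target analysis; 4.2 Crawler run flow anal…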

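- Efficient crawler frameworks in Python: how many have you used?

IT猫仔

python, crawler, programming language

In the information age, data is priceless. Many developers and data analysts need to collect large amounts of data from the internet for analysis, modeling, visualization and other purposes. Python, a powerful programming language, offers several efficient crawler frameworks that make data collection easier and faster. This article introduces some of them to help you choose a tool that fits your project. 1. Scrapy. 1. About the Scrapy framework. Scrapy is a powerful Python web crawling framework designed for data collection.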

- Python web crawler practice_Exercise from "From Zero: Master Distributed Python Crawlers in 21 Days" - 古诗文网 (gushiwen.org)

weixin_39953244

Python web crawler practice

    import requests
    import re

    def main():
        url = 'https://www.gushiwen.org/default_1.aspx'
        headers = {"user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36

- An in-depth Python web crawler tutorial

jijihusong006

python, crawler, programming language, scipy, scrapy

Below is a detailed Python web crawler development tutorial covering principles, implementation and best practices, organized into chapters for systematic study: An in-depth Python web crawler tutorial

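- Python web crawlers

Small Cow

crawler, python, crawler, programming language

1. Core Python crawler libraries. HTTP request libraries: requests: a simple, easy-to-use HTTP library for GET/POST requests; aiohttp: an asynchronous HTTP client suited to high-concurrency scenarios. HTML/XML parsing libraries: BeautifulSoup: a DOM-tree-based parsing library that supports several parsers (such as lxml); lxml: a high-performance parsing library with XPath support. Dynamic page handling: Selenium: simulates browser operation and handles JavaScript-rendered pages; Playw…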

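- [Machine learning + crawler] House data analysis, prediction and visualization system: computer science graduation project, crawler, big data graduation project, artificial intelligence, prediction model, data analysis, data visualization

weixin_45469617

python, data analysis, scikit-learn, machine learning, graduation project, big data, data visualization

Demo video: [Machine learning] House data analysis, prediction and visualization system (computer science graduation project, crawler, big data graduation project, artificial intelligence, prediction model, data analysis, data visualization). Tech stack: python, flask, mysql, scikit-learn. Highlights: Python web crawler, machine learning, prediction algorithms, multiple linear regression, data analysis and visualization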

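- Python web crawling, advanced: regular expressions

Tttian622

python crawler, crawler, regular expressions, python

A regular expression is a pattern for matching strings. 1. Patterns for matching strings. In a crawler project, extracting specific information requires pinpointing where it sits, which involves complex text matching. Here is how some common characters are used: `.` matches any single character (except a newline). `*` matches the preceding element zero or more times. `+` matches the preceding element one or more times. `?` matches the preceding element zero or one time. `^` matches the start of the input string. `$` matches the end of the input string. `[]` matches any one of the characters inside the brackets. `|` logical OR oper…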
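The metacharacters listed above can be tried with Python's `re` module. The snippet is a small sketch; the HTML string, the `example.com` addresses and the helper name `extract_image_links` are made up for illustration.

```python
import re

# Hypothetical fragment of page source containing two image links.
HTML = (
    '<a href="https://example.com/a.png">A</a>\n'
    '<a href="https://example.com/b.jpg">B</a>'
)


def extract_image_links(html):
    """Find href values ending in .png or .jpg.

    https?        "?" makes the "s" optional
    [^"]+         "[]" with "^" inside: one or more characters that are not a quote
    (?:png|jpg)   "|" chooses between the two extensions
    """
    pattern = r'href="(https?://[^"]+\.(?:png|jpg))"'
    return re.findall(pattern, html)


links = extract_image_links(HTML)

# "^" and "$" anchor the match to the whole string; "+" means one or more digits.
all_digits = bool(re.match(r"^\d+$", "2024"))
not_all_digits = bool(re.match(r"^\d+$", "2024a"))

# "." does not match a newline by default, so only "abc" is found here.
dot_matches = re.findall(r"a.c", "a\nc abc")
```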

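- Java basics

灵静志远

bit operations, loading, Date, string pool, overriding

1. Class initialization order

1 (static variables, static blocks) --> (instance variables, initializer blocks) --> constructor

Items inside the same parentheses are ordered by where they appear in the source. The above applies within a single class. With inheritance, initialization alternates from parent class to subclass, parent first at each stage.

2. String

1 String a = "abc";

The Java virtual machine first looks in the string pool for an existing object whose value is "abc", …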

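- Redis master-slave high availability with keepalived

bylijinnan

redis

Overview of the approach

Two machines (call them A and B) serve clients through a single shared VIP

1. Normally both A and B are running, and B replicates A's data (B is slave of A)

2. When A goes down, the VIP floats to B; B's keepalived tells redis to run: slaveof no one, and B takes over serving

3. When A comes back, the VIP does not switch and stays on B; A's keepalived tells redis to run slaveof B, and starts…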

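- A complete guide to file operations in Java

0624chenhong

java

I recently saw a fairly comprehensive article on file operations on 博客园 (cnblogs) and am reposting it here to keep.

http://www.cnblogs.com/zhuocheng/archive/2011/12/12/2285290.html

Reposted from http://blog.sina.com.cn/s/blog_4a9f789a0100ik3p.html

1. Reading user input from the console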

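- Android learning tasks

不懂事的小屁孩

work

| Task | Status |
| --- | --- |
| Understand when to use a PopupWindow with an arrow and one without | Done |
| Become fluent with PopupWindow and AlertDialog and understand the difference between them | Done |
| Become fluent with Android's thread Handler and write sample code | In progress |
| Understand how the game 2048 works and finish the code for it | In progress, a few ActionBar items remaining |
| Study Android animation effects and write an example | Done |
| Review fragem… | |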

- zoom.js

换个号韩国红果果

oom

It is based on Bootstrap

https://raw.github.com/twbs/bootstrap/master/js/transition.js Load order of the transition.js module

<link rel="stylesheet" href="style/zoom.css">

<script src=&q

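- A detailed look at Solaris 11.2, Oracle's cloud operating system

蓝儿唯美

Solaris

When Oracle released Solaris 11, it called it the first operating system built for the cloud. With Solaris 11.2, Oracle continued its cloud-centric message. Those claims, however, did not tell us why Solaris is worthy of the cloud. Fortunately, we did not have to wait long. Solaris 11.2 has four key technologies that can play an important role in an effective cloud implementation: OpenStack, kernel zones, Unified Archives (UA) and the Elastic Virtual Switch (EVS).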

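- Learning Spring: Spring MVC (1)

a-john

springMVC

Spring MVC is built on the Model-View-Controller (MVC) pattern and helps us build web applications that are as flexible and loosely coupled as the Spring framework itself.

1. Following a request through Spring MVC

The request's first stop is Spring's DispatcherServlet. Like most Java-based web frameworks, Spring MVC funnels every request through a single front controller servlet. …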

- hdu4342 History repeat itself: Multi-University Training Contest 5

aijuans

number theory

An easy problem, so there is not much to say.

    #include<iostream>
    #include<cstdlib>
    #include<stdio.h>
    #define ll __int64
    using namespace std;
    int main(){
        int t; ll n;
        scanf("%d",&t);
        while(t--)

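- The difference between EJB and JavaBean

asia007

bean, ejb

An EJB is not an ordinary JavaBean; EJB stands for Enterprise JavaBean. There are three kinds of EJB: entity beans, message-driven beans and session beans. Writing an EJB means following certain specifications; consult the relevant material for the details. In addition, running an EJB requires an EJB container such as WebLogic or JBoss, whereas a JavaBean does not; installing Tomcat is enough.

1. EJB is used for server-side application development, while JavaBeans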

- A summary of Struts actions and results

百合不是茶

struts, Action configuration, Result configuration

I. Action configuration in detail:

Below is an empty Struts.xml configuration file for Struts

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE struts PUBLIC

&quo

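- How to lead your own team well

bijian1013

project management, team management, team

I came across the comments on a blog post titled "How do you get team members to work well?" and thought they were well written. The original reads: I have been managing teams for a few years now, and here are the points I think matter for leading a team well:

1. Integrity

With team members, whether in technical research, discussion or problem-solving, keep to an honest attitude as far as possible and put real care into it; your team will feel it. 2. Strive to…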

- Java code obfuscation tools

sunjing

ProGuard

Open Source Obfuscators

ProGuard

http://java-source.net/open-source/obfuscators/proguard ProGuard is a free Java class file shrinker and obfuscator. It can detect and remove unused classes, fields, m

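- [Redis 3] Master-slave replication with automatic failover using Redis sentinel

bit1129

redis

The second article used 2.8.17 to set up master-slave replication, but that setup has a single point of failure at the Master. To address this, Redis introduced sentinel starting with 2.6 to monitor and manage a master-slave replication environment and perform automatic failover: when the Master goes down, sentinel automatically elects a new Master from the slaves so the replication cluster keeps working, and if the old Master comes back and rejoins the cluster, it can only work as a slave.

What is Sentine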

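- Using a proxy to implement automatic transactions in the Hibernate DAO layer

白糖_

DAO, spring, AOP, framework, Hibernate

Everyone says Spring's AOP-based automatic transaction handling is excellent, but when Hibernate is the only framework in use, opening sessions and managing transactions ourselves tends to be tedious.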

public void save(Object obj){

Session session = this.getSession();

Transaction tran = session.beginTransaction();

try

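- Maven 3 in Action: reading notes

braveCS

maven3

Introduction to Maven

What is it?

Is a software project management and comprehension tool. (A project management tool.)

It is based on the POM (Project Object Model) concept

[Duplicated design, duplicated code, duplicated documentation, duplicated builds: Maven eliminates build duplication as far as possible]

[Relation to XP: simplicity, communication and feedback; test-driven development, ten-minute build, continuous integration, informative workspace]

Features: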

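- The Beauty of Programming: maximum product of a subarray

bylijinnan

The Beauty of Programming

public class MaxProduct {

/**

* The Beauty of Programming: maximum product of a subarray

* Problem: given an integer array of length N, using only multiplication and no division, find the group with the largest product among all combinations of N-1 elements, and state the algorithm's time complexity.

* The program below follows the two methods in the book and computes the product of "the group with the largest product"; both can overflow.

* Read literally, though, the problem asks for the subarray itself, rather than…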
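The problem statement in the comment above can be solved without division by combining prefix and suffix products. The Python sketch below is not the Java code from the post, which is cut off, and it may differ from the book's two methods; the function name `max_product_excluding_one` is hypothetical. Python integers do not overflow, so the overflow caveat in the comment does not apply here.

```python
def max_product_excluding_one(nums):
    """Return (best product of N-1 elements, index of the element left out).

    Uses prefix and suffix products so that no division is needed; O(N) time.
    """
    n = len(nums)
    if n < 2:
        raise ValueError("need at least two numbers")

    # prefix[i] is the product of nums[0:i]; suffix[i] is the product of nums[i:].
    prefix = [1] * (n + 1)
    for i, value in enumerate(nums):
        prefix[i + 1] = prefix[i] * value
    suffix = [1] * (n + 1)
    for i in range(n - 1, -1, -1):
        suffix[i] = suffix[i + 1] * nums[i]

    # Leaving out nums[i] gives prefix[i] * suffix[i + 1].
    best_index = max(range(n), key=lambda i: prefix[i] * suffix[i + 1])
    return prefix[best_index] * suffix[best_index + 1], best_index


result_mixed = max_product_excluding_one([2, -3, 5])
result_zero = max_product_excluding_one([0, -1, -2])
```

Returning the index of the omitted element also identifies the subarray itself, which is what the comment says the problem is really asking for.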

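- Reading notes 2

chengxuyuancsdn

reading notes

1. Reflection

2. Oracle year-month-day hour-minute-second

3. Creating Oracle functions with and without parameters

4. Oracle rows to columns

5. Struts2 interceptors

6. Filter (web.xml)

1. Reflection

(1) Inspecting the structure of a class

The java.lang.reflect package has three classes, Field, Method and Constructor, which describe a class's fields, methods and constructors respectively.

2. Oracle year-month-day hour-minute-second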

s

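- [Study and real estate] Choose an IT training school carefully

comsci

it

We won't discuss the teaching or the teachers at training schools here; what I mainly care about is this question:

the environment and stability of a training school's teaching buildings and dormitories

We all know that property is expensive, especially property that can serve as classrooms...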

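- How the channel (CHANNEL) parameters PARALLELISM and FILESPERSET relate in RMAN configuration

daizj

oracle, rman, filesperset, PARALLELISM

How the channel (CHANNEL) parameters PARALLELISM and FILESPERSET relate in RMAN configuration (reposted)

PARALLELISM ---

The parallelism parameter can also be used to specify how many channels are created "automatically" at the same time:

RMAN > configure device type disk parallelism 3 ;

This starts three channels, which can speed up backup and recovery.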

- Simple sorting: bubble sort

dieslrae

bubble sort

public void bubbleSort(int[] array){

for(int i=1;i<array.length;i++){

for(int k=0;k<array.length-i;k++){

if(array[k] > array[k+1]){
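The Java fragment above stops just after the comparison. A Python sketch with the same loop bounds is given below; the swap inside the `if` is an assumption based on how bubble sort normally continues, not something shown in the excerpt, and unlike the Java method this version returns a sorted copy instead of sorting in place.

```python
def bubble_sort(values):
    """Return a sorted copy of values using bubble sort."""
    items = list(values)
    # After pass i, the i largest elements sit in their final positions at the end.
    for i in range(1, len(items)):
        for k in range(len(items) - i):
            if items[k] > items[k + 1]:
                # Assumed step: swap the out-of-order neighbours.
                items[k], items[k + 1] = items[k + 1], items[k]
    return items


sorted_values = bubble_sort([5, 2, 9, 1, 5, 6])
```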

- Hard-to-remember words, grade 8 first semester (3)

dcj3sjt126com

sciet

concert 音乐会

tonight 今晚

famous 有名的;著名的

song 歌曲

thousand 千

accident 事故;灾难

careless 粗心的,大意的

break 折断;断裂;破碎

heart 心(脏)

happen 偶尔发生,碰巧

tourist 旅游者;观光者

science (自然)科学

marry 结婚

subject 题目;

- I. Installing Memcache 1. Install the libevent dependency. Memcache requires libevent, so before installing you may need to run

dcj3sjt126com

redis

wget http://download.redis.io/redis-stable.tar.gz

tar xvzf redis-stable.tar.gz

cd redis-stable

make

The first three steps should go through without trouble; the main problem is that running make produced errors.

Error 1:

make[2]: cc: Command not found

Cause: g… was not installed

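- Concurrent containers

shuizhaosi888

concurrent containers

Concurrent containers improve on the performance of synchronized containers. A synchronized container achieves thread safety by serializing every access to the container's state, which severely reduces concurrency: when many threads access it, throughput drops sharply.

The concurrent container ConcurrentHashMap

It replaces the synchronized hash-based Map and is controlled through Lock.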

- Spring Security (12): Remember-Me

234390216

Spring Security, Remember Me, remember me

Remember-Me

Contents

1.1 Overview

1.2 The simple encrypted-token approach

1.3 The persistent-token approach

1.4 Remember-Me interfaces and implementations

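- Bit operations

焦志广

bit operations

1. Bitwise operators. C provides six bitwise operators:

& bitwise AND

| bitwise OR

^ bitwise XOR

~ bitwise NOT

<< left shift

>> right shift

1. Bitwise AND. The bitwise AND operator "&" is a binary operator. It ANDs the corresponding binary bits of its two operands: a result bit is 1 only when both corresponding bits are 1, and 0 otherwise. The operands take part in the operation in two's complement form.

For example: 9&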
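Python has the same six operators as the C list above, so they are easy to try interactively. The operands 9 and 5 in this sketch are chosen for illustration; the excerpt's own example is cut off after the 9.

```python
a, b = 9, 5          # binary 1001 and 0101

and_result = a & b   # 0001: a bit is 1 only where both inputs have 1
or_result = a | b    # 1101: a bit is 1 where either input has 1
xor_result = a ^ b   # 1100: a bit is 1 where the inputs differ
not_result = ~a      # every bit flipped; in two's complement this is -(a + 1)
left_shift = a << 2  # shift left by 2 bits, the same as multiplying by 4
right_shift = a >> 2 # shift right by 2 bits, the same as floor division by 4

# Binary strings make the bit patterns visible.
and_bits = format(and_result, "04b")
or_bits = format(or_result, "04b")
```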

- nodejs database connections: mongodb, mysql

liguangsong

mongodb, mysql, node, database connection

1. Connecting to mysql

Add to dependencies in package.json

"mysql":"~2.7.0"

Run npm install

Create the file database.js under config

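- Dynamic compilation in Java

olive6615

java, HotSpot, jvm, dynamic compilation

In the HotSpot virtual machine, two techniques are crucial: dynamic compilation (Dynamic compilation) and Profiling.

How does HotSpot dynamically compile Java bytecode? Java bytecode is loaded into the virtual machine in interpreted form. HotSpot contains a runtime monitor, the Profile Monitor, dedicated to monitoring…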

- Cluster deployment and configuration tuning for Storm 0.9.5

roadrunners

optimization, storm.yaml

nimbus node configuration (storm.yaml):

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional inf

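- 101 MySQL tuning and optimization tips

tomcat_oracle

mysql

1. Have enough physical memory to load the entire InnoDB file into memory; accessing the file in memory is much faster than accessing it on disk. 2. Avoid swap at all costs; swapping reads from disk, which is slow. 3. Use battery-backed RAM (note: RAM is random-access memory). 4. Use advanced RAID (note: Redundant Arrays of Inexpensive Disks, that is, disk arrays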

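- zoj 3829 Known Notation (greedy)

阿尔萨斯

ZOJ

Problem link: zoj 3829 Known Notation

Problem: given an incomplete postfix expression and two kinds of operations, make the expression complete using as few operations as possible.

Approach: greedy. The number of digits must be one more than the number of ∗; if it falls short, adding the missing digits at the front is optimal. Then scan the string once, adding 1 for a digit and subtracting 1 for a ∗, keeping the digit count at least 1. If there is not enough to subtract from, swap the ∗ with the last digit (easy to maintain with a stack), because an insertion and a swap both cost 1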