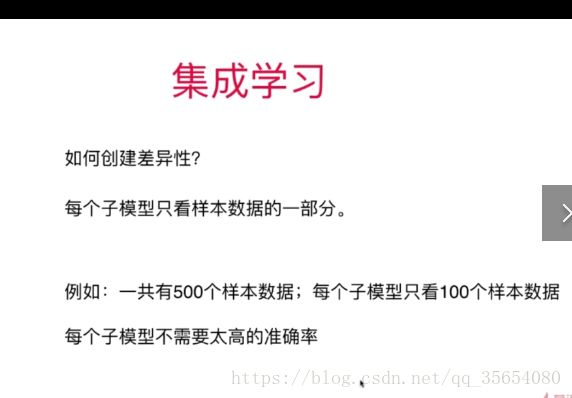

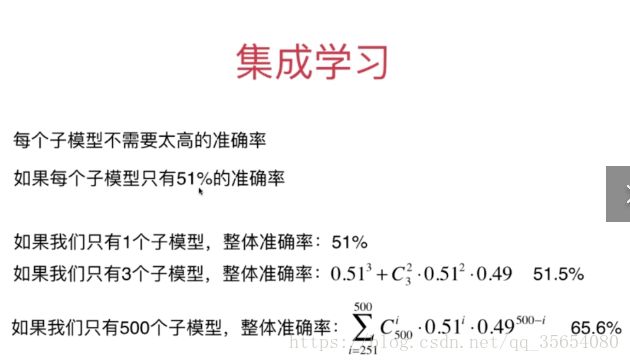

Machine Learning: Ensemble Learning and Random Forests

"""Ensemble learning"""

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import VotingClassifier

X,y=datasets.make_moons(n_samples=500,noise=0.3,random_state=42)

X_train,X_test,y_train,y_test=train_test_split(X,y,random_state=42)

"""Using a hard voting classifier"""

voting_clf=VotingClassifier(estimators=[

('log_clf',LogisticRegression()),

('svm_clf',SVC()),

('dt_clf',DecisionTreeClassifier())

],voting='hard')  # 'hard': majority vote over the predicted class labels

voting_clf.fit(X_train,y_train)

print(voting_clf.score(X_test,y_test))

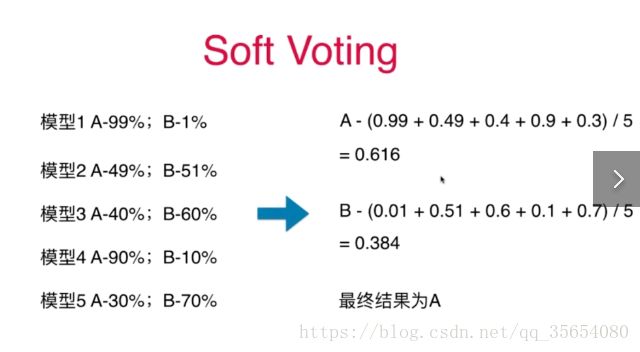

"""Soft Voting Classifier"""

voting_clf2=VotingClassifier(estimators=[

('log_clf',LogisticRegression()),

('svm_clf',SVC(probability=True)),

('dt_clf',DecisionTreeClassifier())

],voting='soft')  # 'soft': average the predicted class probabilities, then pick the most likely class

voting_clf2.fit(X_train,y_train)

print(voting_clf2.score(X_test,y_test))

Results (hard voting, then soft voting):

0.896

0.936
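
To make the difference between the two voting modes concrete, here is a minimal sketch that applies both rules by hand to a single sample. The three probability vectors are made-up illustrative values, not outputs of the classifiers above.

```python
import numpy as np

# Hypothetical class-probability estimates from three classifiers for ONE sample.
# Columns are P(class 0), P(class 1); the values are illustrative only.
probas = np.array([
    [0.55, 0.45],   # classifier A leans slightly towards class 0
    [0.60, 0.40],   # classifier B leans slightly towards class 0
    [0.05, 0.95],   # classifier C is very confident in class 1
])

# Hard voting: each classifier casts one vote for its most likely class.
votes = probas.argmax(axis=1)
hard_prediction = np.bincount(votes, minlength=2).argmax()

# Soft voting: average the probabilities, then pick the most likely class.
mean_proba = probas.mean(axis=0)
soft_prediction = mean_proba.argmax()

print("hard voting ->", hard_prediction)   # 0 (two votes against one)
print("soft voting ->", soft_prediction)   # 1 (mean probabilities are 0.4 vs 0.6)
```

The two rules disagree here because soft voting lets one confident classifier outweigh two hesitant ones. That is also why `SVC` needs `probability=True` in the soft voting example: soft voting requires every estimator to provide `predict_proba`.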

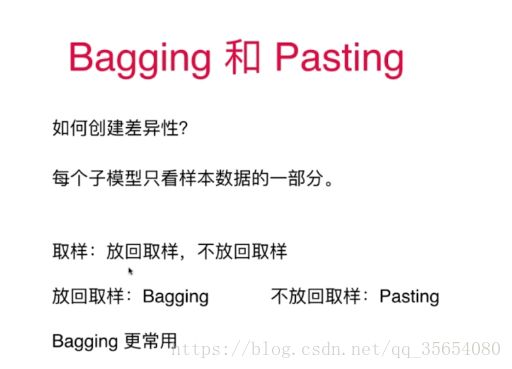

"""Using Bagging"""

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import BaggingClassifier

bagging_clf=BaggingClassifier(DecisionTreeClassifier(),

n_estimators=500,max_samples=100,

bootstrap=True)

bagging_clf.fit(X_train,y_train)

print(bagging_clf.score(X_test,y_test))

bagging_clf2=BaggingClassifier(DecisionTreeClassifier(),

n_estimators=5000,max_samples=100,

bootstrap=True)

bagging_clf2.fit(X_train,y_train)

print(bagging_clf2.score(X_test,y_test))

Results (500 estimators, then 5000 estimators):

0.912

0.92

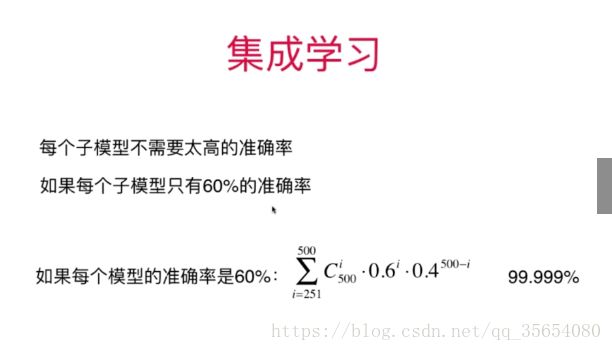

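In theory, the more sub-models the ensemble contains, the more accurate the result tends to be: here, going from 500 to 5000 estimators raised the test accuracy from 0.912 to 0.92.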
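
One way to check this claim is to train the same bagging ensemble with an increasing number of sub-models and compare the test accuracy. The sketch below does this on the same moons data. The particular values of `n_estimators` and the fixed `random_state=42` on the ensemble are assumptions added for reproducibility; they are not part of the original experiment.

```python
from sklearn import datasets
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = datasets.make_moons(n_samples=500, noise=0.3, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

scores = {}
for n in (1, 10, 100, 500):
    # Same settings as above; only the number of sub-models changes.
    clf = BaggingClassifier(DecisionTreeClassifier(), n_estimators=n,
                            max_samples=100, bootstrap=True, random_state=42)
    clf.fit(X_train, y_train)
    scores[n] = clf.score(X_test, y_test)
    print(n, scores[n])
```

The gain usually flattens out quickly, so beyond a few hundred sub-models the extra training time tends to buy very little accuracy.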

"""Random forests and Extra-Trees"""

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

X,y=datasets.make_moons(n_samples=500,noise=0.3,random_state=42)

"""Random forest"""

from sklearn.ensemble import RandomForestClassifier

rf_clf=RandomForestClassifier(n_estimators=500,random_state=666,max_leaf_nodes=16,oob_score=True)

rf_clf.fit(X,y)

print(rf_clf.oob_score_)

"""Using Extra-Trees"""

from sklearn.ensemble import ExtraTreesClassifier

et_clf=ExtraTreesClassifier(n_estimators=500,bootstrap=True,oob_score=True)

et_clf.fit(X,y)

print(et_clf.oob_score_)
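
Both models above are fitted on the full data set and evaluated with `oob_score_` instead of a separate test set. This works because each bootstrap sample leaves out part of the data, and those left-out samples can serve as validation data for that tree. The sketch below is a small simulation of how large that left-out share is. The number of simulated rounds and the seed are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 500          # same size as the make_moons data set above
n_rounds = 1000          # number of simulated bootstrap draws (illustrative)

oob_fractions = []
for _ in range(n_rounds):
    # A bootstrap sample draws n_samples indices with replacement.
    drawn = rng.integers(0, n_samples, size=n_samples)
    # Samples that were never drawn are "out of bag" for this tree.
    n_oob = n_samples - np.unique(drawn).size
    oob_fractions.append(n_oob / n_samples)

mean_oob = float(np.mean(oob_fractions))
print(f"average out-of-bag fraction: {mean_oob:.3f}")
print(f"theoretical limit 1/e:       {np.exp(-1):.3f}")
```

Roughly 37% of the samples are unseen by any given tree, which is why the out-of-bag score can stand in for a held-out test score.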

"""Ensemble learning for regression problems"""

from sklearn.ensemble import BaggingRegressor

from sklearn.ensemble import RandomForestRegressor

from sklearn.ensemble import ExtraTreesRegressor
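
The regressors are imported above but not used. As a minimal sketch of how they can be applied, the example below fits all three to a synthetic data set. The data (`y = x^2` plus noise), the variable names, and the hyperparameters are assumptions chosen for illustration only.

```python
import numpy as np
from sklearn.ensemble import BaggingRegressor, ExtraTreesRegressor, RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression data (an assumption for illustration): y = x^2 + noise.
rng = np.random.default_rng(42)
X_reg = rng.uniform(-3, 3, size=(500, 1))
y_reg = X_reg[:, 0] ** 2 + rng.normal(0, 0.5, size=500)
Xr_train, Xr_test, yr_train, yr_test = train_test_split(X_reg, y_reg, random_state=42)

regressors = {
    "bagging": BaggingRegressor(DecisionTreeRegressor(), n_estimators=100, random_state=42),
    "random forest": RandomForestRegressor(n_estimators=100, random_state=42),
    "extra trees": ExtraTreesRegressor(n_estimators=100, random_state=42),
}

r2_scores = {}
for name, reg in regressors.items():
    reg.fit(Xr_train, yr_train)
    r2_scores[name] = reg.score(Xr_test, yr_test)   # R^2 on the held-out data
    print(name, round(r2_scores[name], 3))
```

The regressors share the interface of their classifier counterparts; the main difference is that `score` returns R^2 rather than accuracy.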

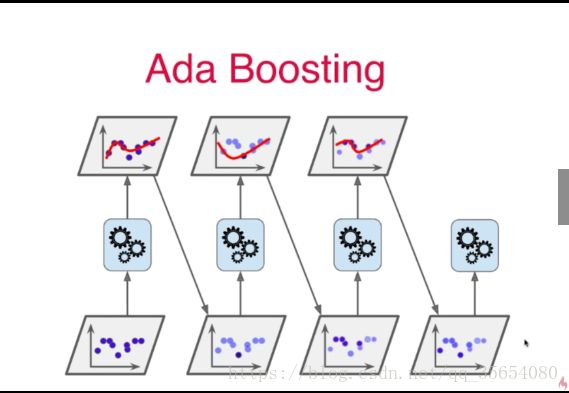

"""AdaBoost"""

from sklearn.ensemble import AdaBoostClassifier

from sklearn.tree import DecisionTreeClassifier

ada_clf=AdaBoostClassifier(DecisionTreeClassifier(max_depth=2),n_estimators=500)

ada_clf.fit(X_train,y_train)

print(ada_clf.score(X_test,y_test))
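
Because AdaBoost adds its sub-models one after another, the accuracy can be inspected after every boosting round. The sketch below uses `staged_score` for this. The smaller `n_estimators=100` and the fixed `random_state` are assumptions to keep the example fast and reproducible.

```python
from sklearn import datasets
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = datasets.make_moons(n_samples=500, noise=0.3, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

ada = AdaBoostClassifier(DecisionTreeClassifier(max_depth=2),
                         n_estimators=100, random_state=42)
ada.fit(X_train, y_train)

# staged_score yields the accuracy of the ensemble after each boosting round.
staged = list(ada.staged_score(X_test, y_test))
best_round = max(range(len(staged)), key=staged.__getitem__) + 1

print("rounds fitted:", len(staged))
print("best round:", best_round, "accuracy:", staged[best_round - 1])
print("final accuracy:", staged[-1])
```

Picking the best round on the test set, as done here for brevity, leaks information; in practice a separate validation split would be the safer choice.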

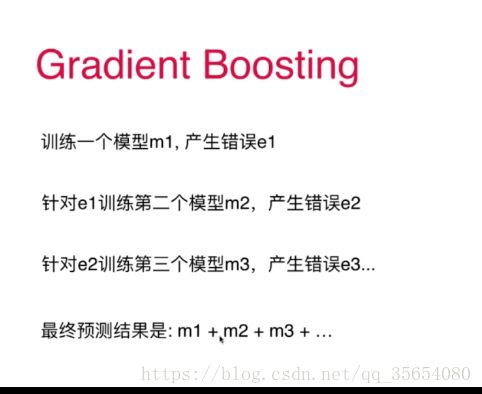

"""Gradient Boosting (the sub-models are always decision trees, so no base estimator is passed)"""

from sklearn.ensemble import GradientBoostingClassifier

gb_clf=GradientBoostingClassifier(max_depth=2,n_estimators=500)

gb_clf.fit(X_train,y_train)

print(gb_clf.score(X_test,y_test))
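
The idea behind gradient boosting can be sketched by hand: each new tree is fitted to the errors the current ensemble still makes. The example below is a simplified regression version with squared loss, not what `GradientBoostingClassifier` does internally for classification. The data, the learning rate, and the number of rounds are illustrative assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data (an assumption for illustration): y = x^2 + noise.
rng = np.random.default_rng(42)
X_gb = rng.uniform(-3, 3, size=(300, 1))
y_gb = X_gb[:, 0] ** 2 + rng.normal(0, 0.5, size=300)

learning_rate = 0.5
prediction = np.full_like(y_gb, y_gb.mean())   # start from a constant model
mse_per_round = [float(np.mean((y_gb - prediction) ** 2))]

for _ in range(20):
    residual = y_gb - prediction               # what the ensemble still gets wrong
    tree = DecisionTreeRegressor(max_depth=2, random_state=42)
    tree.fit(X_gb, residual)                   # the new tree learns the residual
    prediction += learning_rate * tree.predict(X_gb)
    mse_per_round.append(float(np.mean((y_gb - prediction) ** 2)))

print("training MSE at start:", round(mse_per_round[0], 3))
print("training MSE at end:  ", round(mse_per_round[-1], 3))
```

The training error can only go down from round to round in this setup, which is also why gradient boosting tends to overfit if the number of rounds is not limited.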