Homography Matrices and Their Applications

Reference blog: https://www.learnopencv.com/homography-examples-using-opencv-python-c/

What is a homography?

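Consider the two images of a plane (the top of a book) shown in Figure 1. The red dot marks the same physical point in both images. In computer vision terms, these are called corresponding points. Figure 1 shows four corresponding points in four different colors (red, green, yellow and orange). A homography is a transformation (a 3×3 matrix) that maps points in one image to the corresponding points in the other image.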

![]()

Figure 1: Two images of a 3D plane (the top of the book) are related by a homography.

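Since a homography is a 3×3 matrix, it can be written as

$$
H = \begin{bmatrix} h_{00} & h_{01} & h_{02} \\ h_{10} & h_{11} & h_{12} \\ h_{20} & h_{21} & h_{22} \end{bmatrix}
$$

Consider the first pair of corresponding points: $(x_1, y_1)$ in the first image and $(x_2, y_2)$ in the second image. The homography $H$ then maps them in the following way:

$$
\begin{bmatrix} x_1 \\ y_1 \\ 1 \end{bmatrix}
= H \begin{bmatrix} x_2 \\ y_2 \\ 1 \end{bmatrix}
= \begin{bmatrix} h_{00} & h_{01} & h_{02} \\ h_{10} & h_{11} & h_{12} \\ h_{20} & h_{21} & h_{22} \end{bmatrix}
\begin{bmatrix} x_2 \\ y_2 \\ 1 \end{bmatrix}
$$

The equality is in homogeneous coordinates, so it holds up to a nonzero scale factor.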
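To make the mapping concrete, here is a minimal sketch of applying a 3×3 homography to a single 2D point. It is written in plain C++ without OpenCV so that it stands alone; the names `Mat3`, `Point2` and `applyHomography` are illustrative and do not come from the original post. The key step is the division by the third homogeneous coordinate.

```cpp
#include <array>
#include <stdexcept>

// Row-major 3x3 homography. Mat3 and Point2 are illustrative types only.
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x;
    double y;
};

// Multiply H by (p.x, p.y, 1) and divide by the third homogeneous coordinate.
Point2 applyHomography(const Mat3& H, const Point2& p) {
    const double u = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double v = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    if (w == 0.0) {
        throw std::domain_error("point maps to infinity (w == 0)");
    }
    return {u / w, v / w};
}
```

Because of the final division, multiplying every entry of `H` by the same nonzero constant gives the same mapped point, which is why a homography has only eight degrees of freedom.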

Computing the homography matrix

main.cpp computes the homography matrix and aligns the images. The remaining code files and the data can be downloaded from https://github.com/zwl2017/ORB_Feature

Note: this must be run in Release mode.
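main.cpp calls `findHomography` with the `RANSAC` flag and a reprojection threshold of 3. As a rough illustration of what that threshold means, the sketch below counts how many correspondences a candidate homography maps to within a given pixel distance of their matches. This is a simplified stand-in in plain C++, not OpenCV's implementation; `Mat3`, `Point2`, `reprojectionError` and `countInliers` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Illustrative types only; they are not OpenCV types.
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x;
    double y;
};

// Euclidean distance between H*src (after the homogeneous divide) and dst.
double reprojectionError(const Mat3& H, const Point2& src, const Point2& dst) {
    const double u = H[0][0] * src.x + H[0][1] * src.y + H[0][2];
    const double v = H[1][0] * src.x + H[1][1] * src.y + H[1][2];
    const double w = H[2][0] * src.x + H[2][1] * src.y + H[2][2];
    if (w == 0.0) {
        // The point maps to infinity, so treat it as infinitely far away.
        return std::numeric_limits<double>::infinity();
    }
    return std::hypot(u / w - dst.x, v / w - dst.y);
}

// Count the pairs whose reprojection error does not exceed the threshold.
std::size_t countInliers(const Mat3& H,
                         const std::vector<Point2>& src,
                         const std::vector<Point2>& dst,
                         double threshold) {
    std::size_t inliers = 0;
    const std::size_t n = std::min(src.size(), dst.size());
    for (std::size_t i = 0; i < n; ++i) {
        if (reprojectionError(H, src[i], dst[i]) <= threshold) {
            ++inliers;
        }
    }
    return inliers;
}
```

A RANSAC-style estimator repeatedly fits a homography to a small random subset of matches and keeps the candidate with the most inliers, which is what makes it tolerant of the wrong matches that survive filtering.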

#include

#include

#include "gms_matcher.h"

#include "ORB_modify.h"

#include

using namespace cv;

using namespace std;

int main(int argc, char** argv)

{

//Check settings file

const string strSettingsFile = "../model//TUM2.yaml";

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

cv::Mat img1 = imread("../data//1.png", CV_LOAD_IMAGE_COLOR);

cv::Mat img2 = imread("../data//2.png", CV_LOAD_IMAGE_COLOR);

cv::Mat im_src = img2.clone();

cv::Mat im_dst = img2.clone();

ORB_modify ORB_left(strSettingsFile);

ORB_modify ORB_right(strSettingsFile);

ORB_left.ORB_feature(img1);

ORB_right.ORB_feature(img2);

vector matches_all, matches_gms;

BFMatcher matcher(NORM_HAMMING);

matcher.match(ORB_left.mDescriptors, ORB_right.mDescriptors, matches_all);

// GMS filter

std::vector vbInliers;

gms_matcher gms(ORB_left.mvKeysUn, img1.size(), ORB_right.mvKeysUn, img2.size(), matches_all);

int num_inliers = gms.GetInlierMask(vbInliers, false, false);

cout << "Get total " << num_inliers << " matches." << endl;

// collect matches

for (size_t i = 0; i < vbInliers.size(); i++)

{

if (vbInliers[i] == true)

matches_gms.push_back(matches_all[i]);

}

// draw matching

cv::Mat show = gms.DrawInlier(img1, img2, ORB_left.mvKeysUn, ORB_right.mvKeysUn, matches_gms, 2);

imshow("ORB_matcher", show);

std::vector pts_src, pts_dst;

for (size_t i = 0; i < matches_gms.size(); i++)

{

pts_src.push_back(ORB_left.mvKeysUn[matches_gms[i].queryIdx].pt);

pts_dst.push_back(ORB_right.mvKeysUn[matches_gms[i].trainIdx].pt);

}

// Calculate Homography

cv::Mat h = findHomography(pts_src, pts_dst, RANSAC, 3, noArray(), 2000);

// Output image

cv::Mat im_out;

// Warp source image to destination based on homography

warpPerspective(im_src, im_out, h, im_dst.size());

// Display images

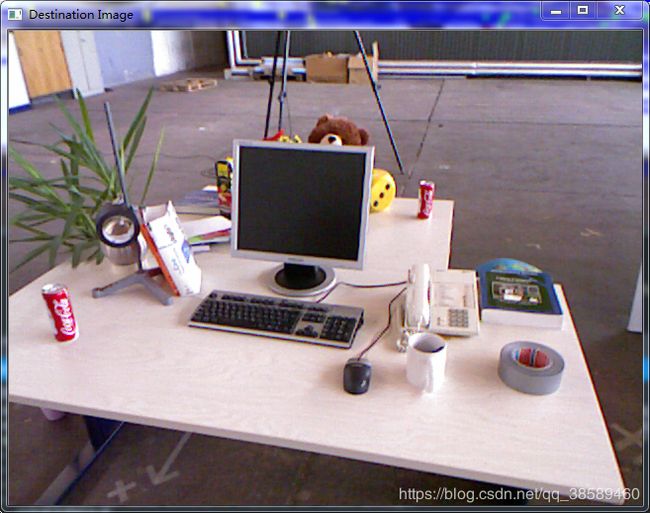

imshow("Source Image", im_src);

imshow("Destination Image", im_dst);

imshow("Warped Source Image", im_out);

waitKey(0);

} 结果:

Computing the homography matrix and rectifying an image

Note: click the corners in the image in clockwise order.
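The click order matters because the i-th clicked point is paired with the i-th destination corner. Four such pairs are exactly enough to determine a homography. The sketch below illustrates this by fixing h22 = 1 and solving the resulting 8×8 linear system with Gauss-Jordan elimination. It is a plain C++ illustration under that assumption, not a description of how `findHomography` works internally; `Mat3`, `Point2` and `homographyFrom4Points` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>
#include <utility>

// Illustrative types only; they are not OpenCV types.
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x;
    double y;
};

// Solve for H (with h22 fixed to 1) such that H maps src[i] to dst[i].
Mat3 homographyFrom4Points(const std::array<Point2, 4>& src,
                           const std::array<Point2, 4>& dst) {
    // Augmented 8x9 system; unknowns are h00,h01,h02,h10,h11,h12,h20,h21.
    double a[8][9] = {};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y;
        const double u = dst[i].x, v = dst[i].y;
        const double rowU[9] = {x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, u};
        const double rowV[9] = {0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y, v};
        for (int j = 0; j < 9; ++j) {
            a[2 * i][j] = rowU[j];
            a[2 * i + 1][j] = rowV[j];
        }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r) {
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        }
        if (std::fabs(a[pivot][col]) < 1e-12) {
            throw std::runtime_error("degenerate point configuration");
        }
        for (int j = 0; j < 9; ++j) std::swap(a[col][j], a[pivot][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = a[r][col] / a[col][col];
            for (int j = col; j < 9; ++j) a[r][j] -= f * a[col][j];
        }
    }
    double h[8];
    for (int i = 0; i < 8; ++i) h[i] = a[i][8] / a[i][i];
    return {{{h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0}}};
}
```

If the clicks were given in a different order from the destination corners, the system would still have a solution, but the resulting warp would be rotated or mirrored.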

#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>

using namespace cv;
using namespace std;

// Shared state between main() and the mouse callback
struct userdata {
    Mat im;
    vector<Point2f> points;
};

void mouseHandler(int event, int x, int y, int flags, void* data_ptr)
{
    if (event == EVENT_LBUTTONDOWN)
    {
        userdata *data = ((userdata *)data_ptr);
        // Mark the clicked position in red
        circle(data->im, Point(x, y), 3, Scalar(0, 0, 255), 5, LINE_AA);
        imshow("Image", data->im);
        // Only the first four clicks are recorded
        if (data->points.size() < 4)
        {
            data->points.push_back(Point2f(x, y));
        }
    }
}

int main(int argc, char** argv)
{
    // Read source image.
    Mat im_src = imread("../data//book1.jpg");
    if (im_src.empty())
    {
        cerr << "Failed to load the source image." << endl;
        return -1;
    }

    // Destination image. The aspect ratio of the book is 3/4
    Size size(300, 400);
    Mat im_dst = Mat::zeros(size, CV_8UC3);

    // Create a vector of destination points (clockwise, starting at the top left).
    vector<Point2f> pts_dst;
    pts_dst.push_back(Point2f(0, 0));
    pts_dst.push_back(Point2f(size.width - 1, 0));
    pts_dst.push_back(Point2f(size.width - 1, size.height - 1));
    pts_dst.push_back(Point2f(0, size.height - 1));

    // Set data for mouse event
    Mat im_temp = im_src.clone();
    userdata data;
    data.im = im_temp;

    cout << "Click on the four corners of the book -- top left first and" << endl
         << "bottom left last -- and then hit ENTER" << endl;

    // Show image and wait for 4 clicks.
    imshow("Image", im_temp);

    // Set the callback function for any mouse event
    setMouseCallback("Image", mouseHandler, &data);
    waitKey(0);

    if (data.points.size() != 4)
    {
        cerr << "Expected 4 clicked points, got " << data.points.size() << endl;
        return -1;
    }

    // Calculate the homography
    Mat h = findHomography(data.points, pts_dst);

    // Warp source image to destination
    warpPerspective(im_src, im_dst, h, size);

    // Show image
    imshow("Image", im_dst);
    waitKey(0);

    return 0;
}

The data can be found here: https://github.com/spmallick/learnopencv/tree/master/Homography

Result:

Computing the homography matrix and projecting an image
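The program in this section pastes one image onto a billboard in another. It blacks out the clicked quadrilateral in the destination image and then adds the warped source, which is zero everywhere outside that quadrilateral. The sketch below shows why the addition works as a composite, using flat grayscale buffers in plain C++ as stand-ins for the images. `compositeByAdd` and `insideQuad` are hypothetical names, and the clamp at 255 is meant to mirror the saturating behavior of 8-bit `cv::Mat` addition.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// dst: destination pixels; warped: warped source (zero outside the quad);
// insideQuad: true where a pixel lies inside the clicked quadrilateral.
std::vector<std::uint8_t> compositeByAdd(std::vector<std::uint8_t> dst,
                                         const std::vector<std::uint8_t>& warped,
                                         const std::vector<bool>& insideQuad) {
    if (dst.size() != warped.size() || dst.size() != insideQuad.size()) {
        throw std::invalid_argument("all buffers must have the same size");
    }
    for (std::size_t i = 0; i < dst.size(); ++i) {
        if (insideQuad[i]) {
            dst[i] = 0;  // black out the region, as fillConvexPoly does with Scalar(0)
        }
        const int sum = static_cast<int>(dst[i]) + static_cast<int>(warped[i]);
        dst[i] = static_cast<std::uint8_t>(std::min(sum, 255));  // saturating add
    }
    return dst;
}
```

Inside the quadrilateral the destination contributes zero, so the sum equals the warped pixel. Outside it the warped image contributes zero, so the destination pixel is unchanged. Skipping the black-out step would instead brighten and saturate the billboard area.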

#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>

using namespace cv;
using namespace std;

// Shared state between main() and the mouse callback
struct userdata {
    Mat im;
    vector<Point2f> points;
};

void mouseHandler(int event, int x, int y, int flags, void* data_ptr)
{
    if (event == EVENT_LBUTTONDOWN)
    {
        userdata *data = ((userdata *)data_ptr);
        // Mark the clicked position in yellow
        circle(data->im, Point(x, y), 3, Scalar(0, 255, 255), 5, LINE_AA);
        imshow("Image", data->im);
        // Only the first four clicks are recorded
        if (data->points.size() < 4)
        {
            data->points.push_back(Point2f(x, y));
        }
    }
}

int main(int argc, char** argv)
{
    // Read in the image.
    Mat im_src = imread("../data//first-image.jpg");
    // Destination image
    Mat im_dst = imread("../data//times-square.jpg");
    if (im_src.empty() || im_dst.empty())
    {
        cerr << "Failed to load the input images." << endl;
        return -1;
    }
    Size size = im_src.size();

    // Create a vector of points: the four corners of the source image, clockwise.
    vector<Point2f> pts_src;
    pts_src.push_back(Point2f(0, 0));
    pts_src.push_back(Point2f(size.width - 1, 0));
    pts_src.push_back(Point2f(size.width - 1, size.height - 1));
    pts_src.push_back(Point2f(0, size.height - 1));

    // Set data for mouse handler
    Mat im_temp = im_dst.clone();
    userdata data;
    data.im = im_temp;

    // Show the image
    imshow("Image", im_temp);
    cout << "Click on four corners of a billboard and then press ENTER" << endl;

    // Set the callback function for any mouse event
    setMouseCallback("Image", mouseHandler, &data);
    waitKey(0);

    if (data.points.size() != 4)
    {
        cerr << "Expected 4 clicked points, got " << data.points.size() << endl;
        return -1;
    }

    // Calculate Homography between source and destination points
    Mat h = findHomography(pts_src, data.points);

    // Warp source image; pixels outside the billboard are set to zero
    warpPerspective(im_src, im_temp, h, im_temp.size());

    // Extract four points from mouse data
    Point pts_dst[4];
    for (int i = 0; i < 4; i++)
    {
        pts_dst[i] = data.points[i];
    }

    // Black out polygonal area in destination image.
    fillConvexPoly(im_dst, pts_dst, 4, Scalar(0), LINE_AA);

    // Add warped source image to destination image.
    im_dst = im_dst + im_temp;

    // Display image.
    imshow("Image", im_dst);
    waitKey(0);

    return 0;
}

Result: