Win10: Building an RTMP streaming server with Python + Nginx + FFmpeg + Django 2.2, pushing a camera stream over RTMP, and displaying it on a web page

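Preface: I have recently been working on an AI project that reads a video stream from a camera, processes it with a neural network, and pushes the processed stream to an HTML page for display. This post summarizes the whole implementation. My technical skills are limited, so please bear with any mistakes.

Step 1: What you need before starting

- Python

- Nginx (we use nginx 1.7.11.3 Gryphon here). Download: nginx 1.7.11.3 Gryphon

- stat.xsl, the server status stylesheet. Download: nginx-rtmp-module

- ffmpeg. Download: ffmpeg

- VLC. Download: VLC

- Video.js

- Django

Step 2: Installation and configuration

- Extract the downloaded nginx 1.7.11.3 Gryphon archive and rename the folder to nginx-1.7.11.3-Gryphon (make sure the absolute path contains no Chinese characters, otherwise nginx fails to start). Then go into the nginx-1.7.11.3-Gryphon\conf folder, create a file named nginx-win-rtmp.conf, and fill in the following content. It is best to copy and paste it, otherwise the formatting may end up wrong: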

#user nobody;

#multiple workers works !

worker_processes 2;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 8192;

#max value 32768, nginx recycling connections+registry optimization =

#this.value * 20 = max concurrent connections currently tested with one worker

#C1000K should be possible depending there is enough ram/cpu power

#multi_accept on;

}

rtmp {

server {

listen 1935;

chunk_size 4000;

application live {

live on;

# record first 1K of stream

record all;

record_path /tmp/av;

record_max_size 1K;

# append current timestamp to each flv

record_unique on;

# publish only from localhost

allow publish 127.0.0.1;

deny publish all;

#allow play all;

}

}

}

http {

#include /nginx/conf/naxsi_core.rules;

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr:$remote_port - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

# # loadbalancing PHP

# upstream myLoadBalancer {

# server 127.0.0.1:9001 weight=1 fail_timeout=5;

# server 127.0.0.1:9002 weight=1 fail_timeout=5;

# server 127.0.0.1:9003 weight=1 fail_timeout=5;

# server 127.0.0.1:9004 weight=1 fail_timeout=5;

# server 127.0.0.1:9005 weight=1 fail_timeout=5;

# server 127.0.0.1:9006 weight=1 fail_timeout=5;

# server 127.0.0.1:9007 weight=1 fail_timeout=5;

# server 127.0.0.1:9008 weight=1 fail_timeout=5;

# server 127.0.0.1:9009 weight=1 fail_timeout=5;

# server 127.0.0.1:9010 weight=1 fail_timeout=5;

# least_conn;

# }

sendfile off;

#tcp_nopush on;

server_names_hash_bucket_size 128;

## Start: Timeouts ##

client_body_timeout 10;

client_header_timeout 10;

keepalive_timeout 30;

send_timeout 10;

keepalive_requests 10;

## End: Timeouts ##

#gzip on;

server {

listen 80;

server_name localhost;

location /stat {

rtmp_stat all;

rtmp_stat_stylesheet stat.xsl;

}

location /stat.xsl {

root nginx-rtmp-module/;

}

location /control {

rtmp_control all;

}

#charset koi8-r;

#access_log logs/host.access.log main;

## Caching Static Files, put before first location

#location ~* \.(jpg|jpeg|png|gif|ico|css|js)$ {

# expires 14d;

# add_header Vary Accept-Encoding;

#}

# For Naxsi remove the single # line for learn mode, or the ## lines for full WAF mode

location / {

#include /nginx/conf/mysite.rules; # see also http block naxsi include line

##SecRulesEnabled;

##DeniedUrl "/RequestDenied";

##CheckRule "$SQL >= 8" BLOCK;

##CheckRule "$RFI >= 8" BLOCK;

##CheckRule "$TRAVERSAL >= 4" BLOCK;

##CheckRule "$XSS >= 8" BLOCK;

root html;

index index.html index.htm;

}

# For Naxsi remove the ## lines for full WAF mode, redirect location block used by naxsi

##location /RequestDenied {

## return 412;

##}

## Lua examples !

# location /robots.txt {

# rewrite_by_lua '

# if ngx.var.http_host ~= "localhost" then

# return ngx.exec("/robots_disallow.txt");

# end

# ';

# }

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000; # single backend process

# fastcgi_pass myLoadBalancer; # or multiple, see example above

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl spdy;

# server_name localhost;

# ssl on;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_timeout 5m;

# ssl_prefer_server_ciphers On;

# ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

# ssl_ciphers ECDH+AESGCM:ECDH+AES256:ECDH+AES128:ECDH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!eNULL:!MD5:!DSS:!EXP:!ADH:!LOW:!MEDIUM;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

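- nginx listens on port 80 by default, and this can be changed. I kept the default, but port 80 may already be in use by another program. For that case I recommend this solution: "Nginx fails to start: port 80 is already in use"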

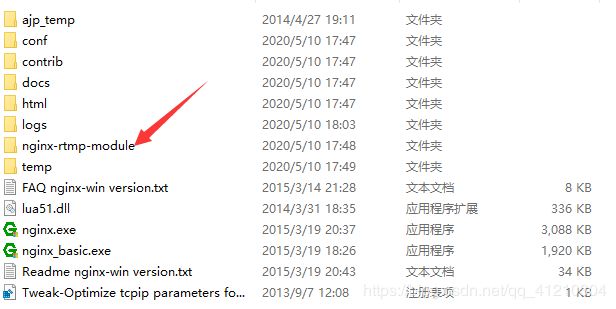

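- Place the downloaded nginx-rtmp-module folder, which contains the stat.xsl server status stylesheet, in the nginx root directory, so that the file is found at nginx-rtmp-module\stat.xsl as the location /stat.xsl block in the config expects.

- Extract ffmpeg, then add its bin folder to the PATH environment variable.

- Install VLC.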
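Before moving on, it can help to confirm that the setup above is in place. The following is a minimal sketch, not part of the original setup: it checks whether ffmpeg can be found on PATH and whether anything is accepting connections on ports 80 and 1935 (the two ports used in nginx-win-rtmp.conf). The function names `port_in_use` and `preflight` are hypothetical helpers introduced for illustration.

```python
import shutil
import socket


def port_in_use(port, host="127.0.0.1", timeout=0.5):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception
        return sock.connect_ex((host, port)) == 0


def preflight(ports=(80, 1935)):
    """Report whether ffmpeg is on PATH and which of the given ports are busy."""
    report = {"ffmpeg": shutil.which("ffmpeg") is not None}
    for port in ports:
        report[port] = port_in_use(port)
    return report
```

Calling `print(preflight())` before starting nginx shows whether another program already holds port 80. After nginx has started, both ports should report True.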

Step 3: ffmpeg RTMP push test

- Start the nginx server with the following command:

nginx.exe -c conf\nginx-win-rtmp.conf

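- Once nginx has started, open cmd and push an RTMP stream with the following command:

ffmpeg -re -i path/to/video.mp4 -vcodec libx264 -acodec aac -f flv rtmp://localhost:1935/live/home

- Open VLC, click the "Media" menu, choose "Open Network Stream", enter the following URL, and play it: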

rtmp://localhost:1935/live/home
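The same push can also be launched from Python, which is convenient once the stream is driven by a script. This is a minimal sketch assuming ffmpeg is on PATH; `build_push_command` and `push_file` are hypothetical helper names, and the flags simply mirror the command-line example above.

```python
import subprocess


def build_push_command(input_path, rtmp_url="rtmp://localhost:1935/live/home"):
    """Return the ffmpeg argument list matching the command-line example."""
    return [
        "ffmpeg",
        "-re",                 # read the input at its native frame rate
        "-i", input_path,
        "-vcodec", "libx264",  # encode video as H.264
        "-acodec", "aac",      # encode audio as AAC
        "-f", "flv",           # RTMP carries an FLV container
        rtmp_url,
    ]


def push_file(input_path, rtmp_url="rtmp://localhost:1935/live/home"):
    """Run ffmpeg until the whole file has been pushed; return its exit code."""
    return subprocess.run(build_push_command(input_path, rtmp_url)).returncode
```

Passing the arguments as a list avoids shell quoting problems when the file path contains spaces.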

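Step 4: Push the camera stream over RTMP and play it in an HTML page

- First, the HTML code (taken from "Notes on live RTMP streaming with H5") is as follows:

Live playback of an RTMP stream with video.js

{% load static %}

<!-- Include the player JS -->

- Create a Python file and fill in the following code (the RTMP push code is taken from "Pushing an RTMP live stream with ffmpeg from Python"):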

import queue
import subprocess as sp
import threading

import cv2 as cv


class Live(object):
    def __init__(self):
        self.frame_queue = queue.Queue()
        self.command = ""
        # Set these for your own environment
        self.rtmpUrl = "rtmp://localhost:1935/live/home"
        self.camera_path = 0

    def read_frame(self):
        print("Starting stream push")
        cap = cv.VideoCapture(self.camera_path)

        # Get video information
        # Some cameras report 0 FPS; 25 is an assumed fallback value
        fps = int(cap.get(cv.CAP_PROP_FPS)) or 25
        width = int(cap.get(cv.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv.CAP_PROP_FRAME_HEIGHT))

        # ffmpeg command: read raw BGR frames from stdin, encode as H.264, push as FLV
        self.command = ['ffmpeg',
                        '-y',
                        '-f', 'rawvideo',
                        '-vcodec', 'rawvideo',
                        '-pix_fmt', 'bgr24',
                        '-s', "{}x{}".format(width, height),
                        '-r', str(fps),
                        '-i', '-',
                        '-c:v', 'libx264',
                        '-pix_fmt', 'yuv420p',
                        '-preset', 'ultrafast',
                        '-f', 'flv',
                        self.rtmpUrl]

        # Read frames from the webcam
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:
                print("Failed to read a frame from the camera")
                # Honestly, this break should be replaced with:
                # cap = cv.VideoCapture(self.camera_path)
                # because in a project I worked on over the last couple of days
                # the stream kept dropping, especially when pulling an RTMP stream!
                break

            # Put the frame into the queue
            self.frame_queue.put(frame)

        cap.release()

    def push_frame(self):
        # The two methods run in separate threads, so wait until
        # read_frame has set the command
        while True:
            if len(self.command) > 0:
                # Open a pipe to ffmpeg's stdin
                p = sp.Popen(self.command, stdin=sp.PIPE)
                break

        while True:
            # get() blocks until a frame is available, which avoids a busy-wait
            frame = self.frame_queue.get()

            # Process the frame
            # (your image-processing code goes here)

            # Write to the pipe; tobytes() replaces the deprecated tostring()
            p.stdin.write(frame.tobytes())

    def run(self):
        threads = [
            threading.Thread(target=Live.read_frame, args=(self,)),
            threading.Thread(target=Live.push_frame, args=(self,))
        ]
        # Daemon threads exit together with the main program
        for thread in threads:
            thread.daemon = True
        for thread in threads:
            thread.start()
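The comment in read_frame suggests reopening the capture instead of breaking when a read fails. Below is a hedged sketch of that idea, kept independent of OpenCV so it runs without a camera. `open_capture` is a hypothetical zero-argument factory (for the class above it could be `lambda: cv.VideoCapture(self.camera_path)`), and the retry limit and delay are assumed values, not something the original specifies.

```python
import time


def read_frames(open_capture, max_reopens=3, delay=1.0, sleep=time.sleep):
    """Yield frames, reopening the capture whenever a read fails.

    open_capture: hypothetical factory returning an object with
    read() -> (ok, frame) and release(), like cv2.VideoCapture.
    """
    reopens = 0
    cap = open_capture()
    try:
        while True:
            ok, frame = cap.read()
            if ok:
                reopens = 0      # a good frame resets the failure count
                yield frame
                continue
            if reopens >= max_reopens:
                return           # give up after too many consecutive failures
            reopens += 1
            cap.release()
            sleep(delay)         # brief pause before reconnecting
            cap = open_capture()
    finally:
        cap.release()
```

Inside read_frame, the loop body would then become `for frame in read_frames(...): self.frame_queue.put(frame)`. Bounding the number of consecutive reopens keeps the thread from spinning forever when the camera is really gone.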

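- Import the class above wherever you need it and call its run() method. Here is the code I wrote for testing:

from django.shortcuts import render

# Live is the class defined above; import it from wherever you saved it


def play_video(request):
    # Start pushing the stream
    live = Live()
    live.run()
    return render(request, 'polls/video.html', {'error_message': "ii"})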
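As written, every request to play_video creates a new Live object and two more threads that all push to the same RTMP URL. One possible way to avoid that (my suggestion, not part of the original code) is to guard the startup with a lock so the stream is started only once per process. `start_once` is a hypothetical helper name.

```python
import threading

_lock = threading.Lock()
_started = False


def start_once(start):
    """Call start() on the first invocation only.

    Returns True if this call started the stream, False if it was
    already running.
    """
    global _started
    with _lock:
        if _started:
            return False
        start()
        _started = True
        return True
```

In the view, `live = Live()` and `live.run()` could then be replaced with `start_once(lambda: Live().run())`, so reloading the page does not spawn additional ffmpeg processes.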