Approach

We introduce a new type of knowledge, cross-sample similarities, for model compression and acceleration. This knowledge can be derived naturally from a deep metric learning model. To transfer it, we bring the learning-to-rank technique into the deep metric learning formulation.

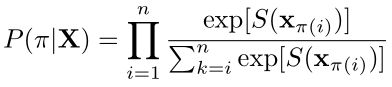

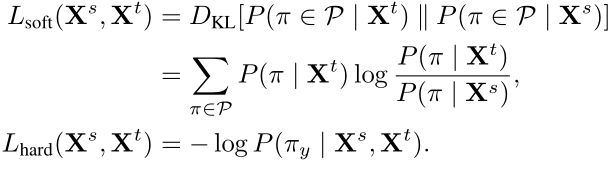

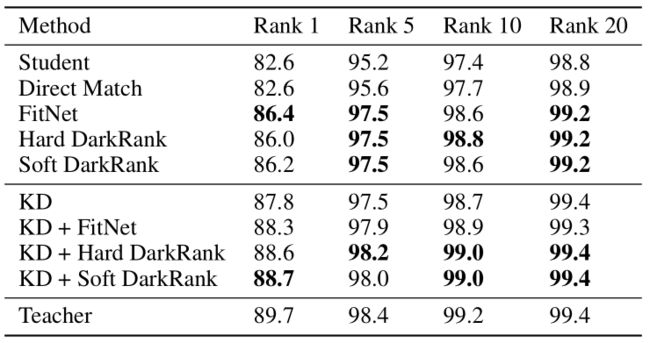

We propose two methods for the transfer: soft transfer and hard transfer. For the soft transfer method, we construct two probability distributions, P(π ∈ P | X^s) for the student and P(π ∈ P | X^t) for the teacher, over the set P of all possible permutations (or ranks) of the mini-batch, based on Eqn. 1. We then match these two distributions with the KL divergence. For the hard transfer method, we simply maximize the likelihood of the ranking π^y, the ranking to which the teacher model assigns the highest probability. Formally, we have

where S(x) is a score function based on the distance between x and the query q, and π denotes a permutation of a list of length n. We denote by X the embedded features of each mini-batch after an embedding function f(·).
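The two transfer losses can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the permutation probability is assumed to take the Plackett-Luce form commonly used in listwise learning to rank, the score is assumed to be `-alpha * ||q - x||^beta` with hypothetical hyperparameters `alpha` and `beta`, and the KL divergence is assumed to run from teacher to student. All function names are illustrative. The soft loss enumerates every permutation, so it is only practical for small lists.

```python
import itertools
import numpy as np

def scores(X, q, alpha=1.0, beta=1.0):
    """Assumed score S(x) = -alpha * ||q - x||_2 ** beta (closer to q = higher score)."""
    return -alpha * np.linalg.norm(X - q, axis=1) ** beta

def log_perm_prob(s, perm):
    """Log-probability of a permutation under an assumed Plackett-Luce model."""
    s = s[list(perm)]
    logp = 0.0
    for i in range(len(s)):
        tail = s[i:]                      # items not yet placed
        m = tail.max()                    # stabilise the log-sum-exp
        logp += s[i] - (m + np.log(np.exp(tail - m).sum()))
    return logp

def soft_transfer_loss(s_student, s_teacher):
    """KL(P_teacher || P_student) over all permutations (direction is an assumption)."""
    perms = list(itertools.permutations(range(len(s_teacher))))
    log_pt = np.array([log_perm_prob(s_teacher, p) for p in perms])
    log_ps = np.array([log_perm_prob(s_student, p) for p in perms])
    return float(np.sum(np.exp(log_pt) * (log_pt - log_ps)))

def hard_transfer_loss(s_student, s_teacher):
    """Negative log-likelihood, under the student, of the teacher's top ranking."""
    # Under Plackett-Luce, the most probable permutation sorts scores descending.
    pi_y = np.argsort(-s_teacher)
    return float(-log_perm_prob(s_student, pi_y))

# Usage: one query and four candidates, embedded by a teacher and a student.
rng = np.random.default_rng(0)
q = rng.normal(size=4)
X_t = rng.normal(size=(4, 4))   # teacher embeddings of the candidates
X_s = rng.normal(size=(4, 4))   # student embeddings of the candidates
print(soft_transfer_loss(scores(X_s, q), scores(X_t, q)))
print(hard_transfer_loss(scores(X_s, q), scores(X_t, q)))
```

The hard loss needs only one sort and one pass over the list, whereas the soft loss sums over n! permutations; that cost difference is the main practical trade-off between the two in this sketch.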

Experiment

References:

DarkRank: Accelerating Deep Metric Learning via Cross Sample Similarities Transfer, Yuntao Chen, 2017, arXiv