机器学习入门学习笔记

[跳转]《Kaggle教程 机器学习入门》系列课程目录

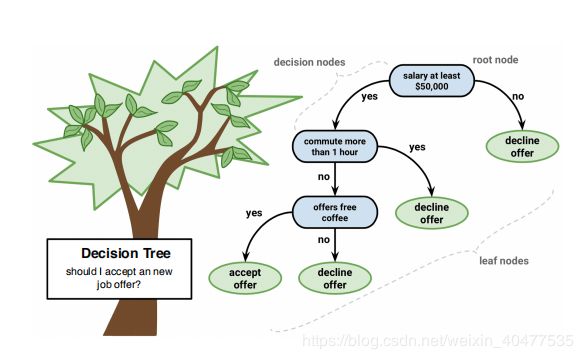

>> 决策树

- 简介:是在已知各种情况发生概率的基础上,通过构成决策树来求取净现值的期望值大于等于零的概率,评价项目风险,判断其可行性的决策分析方法,是直观运用概率分析的一种图解法。由于这种决策分支画成图形很像一棵树的枝干,故称决策树。

>> 举个例子,我们来看看澳大利亚墨尔本的房价数据。

import numpy as np

import pandas as pd

melbourne_file_path = 'data/melb_data.csv'

melbourne_data = pd.read_csv(melbourne_file_path, index_col="Date")

print(melbourne_data.shape)

melbourne_data.describe()

|

Rooms |

Price |

Distance |

Postcode |

Bedroom2 |

Bathroom |

Car |

Landsize |

BuildingArea |

YearBuilt |

Lattitude |

Longtitude |

Propertycount |

| count |

13580.000000 |

1.358000e+04 |

13580.000000 |

13580.000000 |

13580.000000 |

13580.000000 |

13518.000000 |

13580.000000 |

7130.000000 |

8205.000000 |

13580.000000 |

13580.000000 |

13580.000000 |

| mean |

2.937997 |

1.075684e+06 |

10.137776 |

3105.301915 |

2.914728 |

1.534242 |

1.610075 |

558.416127 |

151.967650 |

1964.684217 |

-37.809203 |

144.995216 |

7454.417378 |

| std |

0.955748 |

6.393107e+05 |

5.868725 |

90.676964 |

0.965921 |

0.691712 |

0.962634 |

3990.669241 |

541.014538 |

37.273762 |

0.079260 |

0.103916 |

4378.581772 |

| min |

1.000000 |

8.500000e+04 |

0.000000 |

3000.000000 |

0.000000 |

0.000000 |

0.000000 |

0.000000 |

0.000000 |

1196.000000 |

-38.182550 |

144.431810 |

249.000000 |

| 25% |

2.000000 |

6.500000e+05 |

6.100000 |

3044.000000 |

2.000000 |

1.000000 |

1.000000 |

177.000000 |

93.000000 |

1940.000000 |

-37.856822 |

144.929600 |

4380.000000 |

| 50% |

3.000000 |

9.030000e+05 |

9.200000 |

3084.000000 |

3.000000 |

1.000000 |

2.000000 |

440.000000 |

126.000000 |

1970.000000 |

-37.802355 |

145.000100 |

6555.000000 |

| 75% |

3.000000 |

1.330000e+06 |

13.000000 |

3148.000000 |

3.000000 |

2.000000 |

2.000000 |

651.000000 |

174.000000 |

1999.000000 |

-37.756400 |

145.058305 |

10331.000000 |

| max |

10.000000 |

9.000000e+06 |

48.100000 |

3977.000000 |

20.000000 |

8.000000 |

10.000000 |

433014.000000 |

44515.000000 |

2018.000000 |

-37.408530 |

145.526350 |

21650.000000 |

melbourne_data.head()

|

Suburb |

Address |

Rooms |

Type |

Price |

Method |

SellerG |

Distance |

Postcode |

Bedroom2 |

Bathroom |

Car |

Landsize |

BuildingArea |

YearBuilt |

CouncilArea |

Lattitude |

Longtitude |

Regionname |

Propertycount |

| Date |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3/12/2016 |

Abbotsford |

85 Turner St |

2 |

h |

1480000.0 |

S |

Biggin |

2.5 |

3067.0 |

2.0 |

1.0 |

1.0 |

202.0 |

NaN |

NaN |

Yarra |

-37.7996 |

144.9984 |

Northern Metropolitan |

4019.0 |

| 4/02/2016 |

Abbotsford |

25 Bloomburg St |

2 |

h |

1035000.0 |

S |

Biggin |

2.5 |

3067.0 |

2.0 |

1.0 |

0.0 |

156.0 |

79.0 |

1900.0 |

Yarra |

-37.8079 |

144.9934 |

Northern Metropolitan |

4019.0 |

| 4/03/2017 |

Abbotsford |

5 Charles St |

3 |

h |

1465000.0 |

SP |

Biggin |

2.5 |

3067.0 |

3.0 |

2.0 |

0.0 |

134.0 |

150.0 |

1900.0 |

Yarra |

-37.8093 |

144.9944 |

Northern Metropolitan |

4019.0 |

| 4/03/2017 |

Abbotsford |

40 Federation La |

3 |

h |

850000.0 |

PI |

Biggin |

2.5 |

3067.0 |

3.0 |

2.0 |

1.0 |

94.0 |

NaN |

NaN |

Yarra |

-37.7969 |

144.9969 |

Northern Metropolitan |

4019.0 |

| 4/06/2016 |

Abbotsford |

55a Park St |

4 |

h |

1600000.0 |

VB |

Nelson |

2.5 |

3067.0 |

3.0 |

1.0 |

2.0 |

120.0 |

142.0 |

2014.0 |

Yarra |

-37.8072 |

144.9941 |

Northern Metropolitan |

4019.0 |

1、选择建模数据

melbourne_data = melbourne_data.dropna(axis=0)

2、选择预测目标 选择特征值

y = melbourne_data.Price

melbourne_features = ['Rooms', 'Bathroom', 'Landsize', 'Lattitude', 'Longtitude']

X = melbourne_data[melbourne_features]

X.describe()

|

Rooms |

Bathroom |

Landsize |

Lattitude |

Longtitude |

| count |

13580.000000 |

13580.000000 |

13580.000000 |

13580.000000 |

13580.000000 |

| mean |

2.937997 |

1.534242 |

558.416127 |

-37.809203 |

144.995216 |

| std |

0.955748 |

0.691712 |

3990.669241 |

0.079260 |

0.103916 |

| min |

1.000000 |

0.000000 |

0.000000 |

-38.182550 |

144.431810 |

| 25% |

2.000000 |

1.000000 |

177.000000 |

-37.856822 |

144.929600 |

| 50% |

3.000000 |

1.000000 |

440.000000 |

-37.802355 |

145.000100 |

| 75% |

3.000000 |

2.000000 |

651.000000 |

-37.756400 |

145.058305 |

| max |

10.000000 |

8.000000 |

433014.000000 |

-37.408530 |

145.526350 |

X.head()

|

Rooms |

Bathroom |

Landsize |

Lattitude |

Longtitude |

| Date |

|

|

|

|

|

| 3/12/2016 |

2 |

1.0 |

202.0 |

-37.7996 |

144.9984 |

| 4/02/2016 |

2 |

1.0 |

156.0 |

-37.8079 |

144.9934 |

| 4/03/2017 |

3 |

2.0 |

134.0 |

-37.8093 |

144.9944 |

| 4/03/2017 |

3 |

2.0 |

94.0 |

-37.7969 |

144.9969 |

| 4/06/2016 |

4 |

1.0 |

120.0 |

-37.8072 |

144.9941 |

3、构建模型

from sklearn.tree import DecisionTreeRegressor

melbourne_model = DecisionTreeRegressor(random_state=1)

melbourne_model.fit(X, y)

'''

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features=None,

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1, splitter='best')

'''

4、预测

print("Making predictions for the following 5 houses:")

print("The predictions are")

print(melbourne_model.predict(X.head()))

print(y.head())

'''

The predictions are

[1480000. 1035000. 1465000. 850000. 1600000.]

Date

3/12/2016 1480000.0

4/02/2016 1035000.0

4/03/2017 1465000.0

4/03/2017 850000.0

4/06/2016 1600000.0

Name: Price, dtype: float64

'''

5、模型验证 MAE

- 从一个称为平均绝对误差(Mean Absolute Error,也称为MAE)的度量标准开始。

- MAE 越小越好

from sklearn.metrics import mean_absolute_error

predicted_home_prices = melbourne_model.predict(X)

mean_absolute_error(y, predicted_home_prices)

'''

MAE: 62509.0528227786

'''

6、数据分割为训练和验证数据 train_test_split

from sklearn.model_selection import train_test_split

train_X, val_X, train_y, val_y = train_test_split(X, y, random_state = 0)

melbourne_model = DecisionTreeRegressor()

melbourne_model.fit(train_X, train_y)

val_predictions = melbourne_model.predict(val_X)

print("MAE:", mean_absolute_error(val_y, val_predictions))

'''

MAE: 247974.12793323514

'''

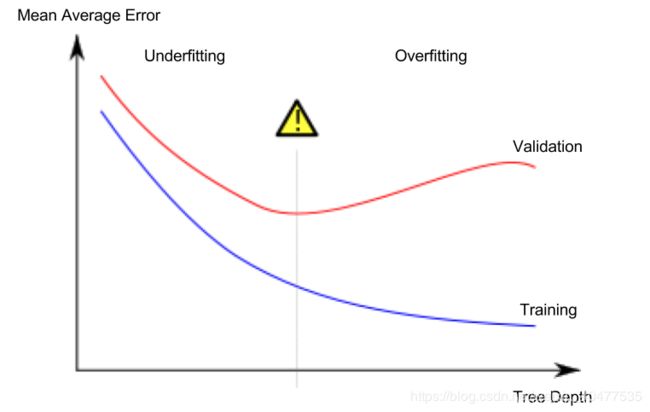

7. 尝试不同的模型

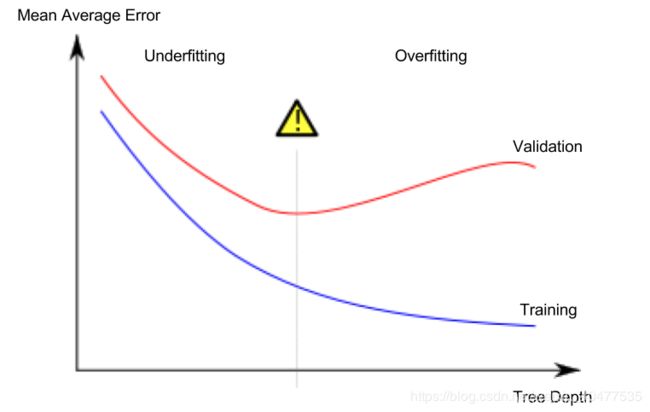

欠拟合与过拟合(overfitting underfitting)

- 过拟合: 树节点太多 模型与训练数据几乎完全匹配

- 欠拟合: 树节点太少 模型不能捕捉到数据中的重要特征和模式

from sklearn.metrics import mean_absolute_error

from sklearn.tree import DecisionTreeRegressor

def get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y):

model = DecisionTreeRegressor(max_leaf_nodes=max_leaf_nodes, random_state=0)

model.fit(train_X, train_y)

preds_val = model.predict(val_X)

mae = mean_absolute_error(val_y, preds_val)

return(mae)

for max_leaf_nodes in [5, 50, 500, 5000]:

my_mae = get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y)

print("Max leaf nodes: %d \t\t Mean Absolute Error: %d" %(max_leaf_nodes, my_mae))

'''

Max leaf nodes: 5 Mean Absolute Error: 354662

Max leaf nodes: 50 Mean Absolute Error: 266447

Max leaf nodes: 500 Mean Absolute Error: 231301

Max leaf nodes: 5000 Mean Absolute Error: 249163

'''

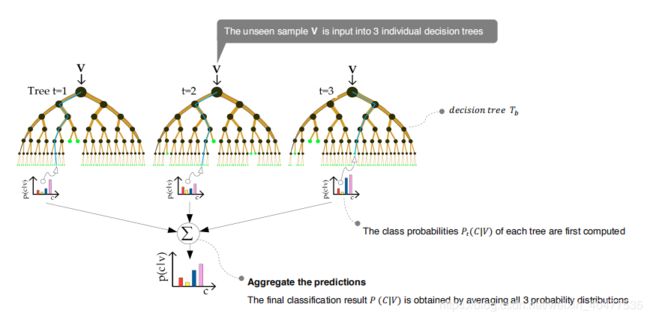

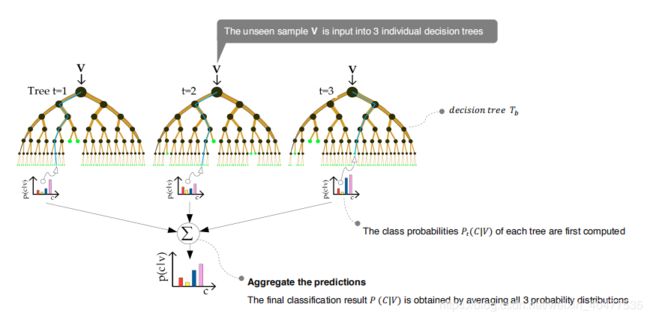

>>随机森林

- 决策树给你留下一个难题。一颗较深、叶子多的树将会过拟合,因为每一个预测都来自叶子上仅有的几个历史训练数据。一颗较浅、叶子少的树将会欠拟合,因为它不能在原始数据中捕捉到那么多的差异

- 随机森林使用了许多树,它通过对每棵成分树的预测进行平均来进行预测。它通常比单个决策树具有更好的预测精度,

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

forest_model = RandomForestRegressor(random_state=1)

forest_model.fit(train_X, train_y)

melb_preds = forest_model.predict(val_X)

print(mean_absolute_error(val_y, melb_preds))

'''

MAE: 191525.59192369733

'''

>>结论

- 可能还有进一步改进的空间,但是这比最佳决策树250,000的误差有很大的改进。

- 你可以修改一些参数来提升随机森林的性能,就像我们改变单个决策树的最大深度一样。

- 但是,随机森林模型的最佳特性之一是,即使没有这种调优,它们通常也可以正常工作。