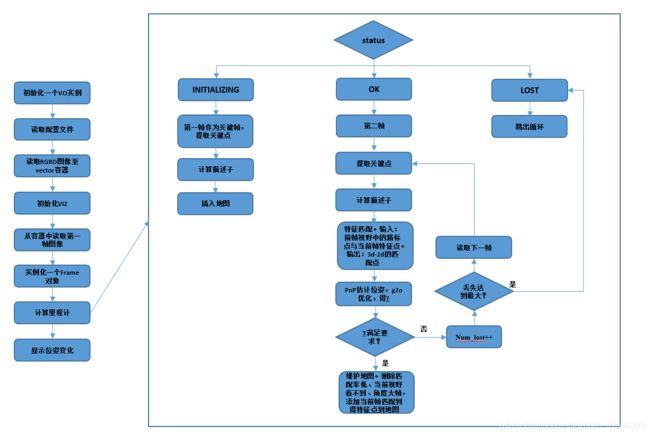

SLAM14讲ch9前端设计(二)

首先是整个VO的流程图

七、g2o类

g2o_types.h

g2o库中没有提供3D-3D的边,这里,我们需要自定义

#ifndef MYSLAM_G2O_TYPES_H

#define MYSLAM_G2O_TYPES_H

#include "myslam/common_include.h"

#include "camera.h"

#include

#include

#include

#include

#include

#include

#include

#include

namespace myslam

{

//3D-3D

//同时优化位姿和空间点

class EdgeProjectXYZRGBD : public g2o::BaseBinaryEdge<3, Eigen::Vector3d, g2o::VertexSBAPointXYZ, g2o::VertexSE3Expmap>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

virtual void computeError();

virtual void linearizeOplus();

virtual bool read( std::istream& in ){}

virtual bool write( std::ostream& out) const {}

};

//3D-3D

// 只优化位姿,不优化空间点

class EdgeProjectXYZRGBDPoseOnly: public g2o::BaseUnaryEdge<3, Eigen::Vector3d, g2o::VertexSE3Expmap >

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

// Error: measure = R*point+t

virtual void computeError();

virtual void linearizeOplus();

virtual bool read( std::istream& in ){}

virtual bool write( std::ostream& out) const {}

Vector3d point_;

};

//3D-2D

//只优化位姿,不优化空间点

class EdgeProjectXYZ2UVPoseOnly: public g2o::BaseUnaryEdge<2, Eigen::Vector2d, g2o::VertexSE3Expmap >

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

virtual void computeError();

virtual void linearizeOplus();

virtual bool read( std::istream& in ){}

virtual bool write(std::ostream& os) const {};

Vector3d point_;

Camera* camera_;

};

}

#endif

g2o_types.cpp

#include "myslam/g2o_types.h"

namespace myslam

{

void EdgeProjectXYZRGBD::computeError()

{

const g2o::VertexSBAPointXYZ* point = static_cast ( _vertices[0] );

const g2o::VertexSE3Expmap* pose = static_cast ( _vertices[1] );

_error = _measurement - pose->estimate().map ( point->estimate() );

}

void EdgeProjectXYZRGBD::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast ( _vertices[1] );

g2o::SE3Quat T ( pose->estimate() );

g2o::VertexSBAPointXYZ* point = static_cast ( _vertices[0] );

Eigen::Vector3d xyz = point->estimate();

Eigen::Vector3d xyz_trans = T.map ( xyz );

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

_jacobianOplusXi = - T.rotation().toRotationMatrix();

_jacobianOplusXj ( 0,0 ) = 0;

_jacobianOplusXj ( 0,1 ) = -z;

_jacobianOplusXj ( 0,2 ) = y;

_jacobianOplusXj ( 0,3 ) = -1;

_jacobianOplusXj ( 0,4 ) = 0;

_jacobianOplusXj ( 0,5 ) = 0;

_jacobianOplusXj ( 1,0 ) = z;

_jacobianOplusXj ( 1,1 ) = 0;

_jacobianOplusXj ( 1,2 ) = -x;

_jacobianOplusXj ( 1,3 ) = 0;

_jacobianOplusXj ( 1,4 ) = -1;

_jacobianOplusXj ( 1,5 ) = 0;

_jacobianOplusXj ( 2,0 ) = -y;

_jacobianOplusXj ( 2,1 ) = x;

_jacobianOplusXj ( 2,2 ) = 0;

_jacobianOplusXj ( 2,3 ) = 0;

_jacobianOplusXj ( 2,4 ) = 0;

_jacobianOplusXj ( 2,5 ) = -1;

}

void EdgeProjectXYZRGBDPoseOnly::computeError()

{

const g2o::VertexSE3Expmap* pose = static_cast ( _vertices[0] );

_error = _measurement - pose->estimate().map ( point_ );

}

void EdgeProjectXYZRGBDPoseOnly::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast ( _vertices[0] );

g2o::SE3Quat T ( pose->estimate() );

Vector3d xyz_trans = T.map ( point_ );

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

_jacobianOplusXi ( 0,0 ) = 0;

_jacobianOplusXi ( 0,1 ) = -z;

_jacobianOplusXi ( 0,2 ) = y;

_jacobianOplusXi ( 0,3 ) = -1;

_jacobianOplusXi ( 0,4 ) = 0;

_jacobianOplusXi ( 0,5 ) = 0;

_jacobianOplusXi ( 1,0 ) = z;

_jacobianOplusXi ( 1,1 ) = 0;

_jacobianOplusXi ( 1,2 ) = -x;

_jacobianOplusXi ( 1,3 ) = 0;

_jacobianOplusXi ( 1,4 ) = -1;

_jacobianOplusXi ( 1,5 ) = 0;

_jacobianOplusXi ( 2,0 ) = -y;

_jacobianOplusXi ( 2,1 ) = x;

_jacobianOplusXi ( 2,2 ) = 0;

_jacobianOplusXi ( 2,3 ) = 0;

_jacobianOplusXi ( 2,4 ) = 0;

_jacobianOplusXi ( 2,5 ) = -1;

}

void EdgeProjectXYZ2UVPoseOnly::computeError()

{

const g2o::VertexSE3Expmap* pose = static_cast ( _vertices[0] );

_error = _measurement - camera_->camera2pixel (

pose->estimate().map(point_) );

}

void EdgeProjectXYZ2UVPoseOnly::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast ( _vertices[0] );

g2o::SE3Quat T ( pose->estimate() );

Vector3d xyz_trans = T.map ( point_ );

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

double z_2 = z*z;

_jacobianOplusXi ( 0,0 ) = x*y/z_2 *camera_->fx_;

_jacobianOplusXi ( 0,1 ) = - ( 1+ ( x*x/z_2 ) ) *camera_->fx_;

_jacobianOplusXi ( 0,2 ) = y/z * camera_->fx_;

_jacobianOplusXi ( 0,3 ) = -1./z * camera_->fx_;

_jacobianOplusXi ( 0,4 ) = 0;

_jacobianOplusXi ( 0,5 ) = x/z_2 * camera_->fx_;

_jacobianOplusXi ( 1,0 ) = ( 1+y*y/z_2 ) *camera_->fy_;

_jacobianOplusXi ( 1,1 ) = -x*y/z_2 *camera_->fy_;

_jacobianOplusXi ( 1,2 ) = -x/z *camera_->fy_;

_jacobianOplusXi ( 1,3 ) = 0;

_jacobianOplusXi ( 1,4 ) = -1./z *camera_->fy_;

_jacobianOplusXi ( 1,5 ) = y/z_2 *camera_->fy_;

}

}

八、VisualOdometry.h

#ifndef VISUALODOMETRY_H

#define VISUALODOMETRY_H

#include "myslam/common_include.h"

#include "myslam/map.h"

#include

namespace myslam

{

class VisualOdometry

{

public:

typedef shared_ptr Ptr;

enum VOState { //枚举类型,VO的三种状态,初始化、正常、丢失

INITIALIZING=-1,

OK=0,

LOST

};

//VO状态、地图(关键帧和特征点)、参考帧、当前帧

VOState state_; // current VO status

Map::Ptr map_; // map with all frames and map points

Frame::Ptr ref_; // 参考帧

Frame::Ptr curr_; // 目前帧

//1、ORB部分去掉了参考帧3D点、描述子

cv::Ptr orb_; // orb detector and computer

vector keypoints_curr_; // 目前帧的关键点

Mat descriptors_curr_; // 目前帧的描述子

//2、匹配变成了地图点3D(match_3dpts_)和帧中关键点2d(match_2dkp_index_)

cv::FlannBasedMatcher matcher_flann_; // 快速最近邻匹配器,还记得前面一直用的是暴力匹配吗?

vector match_3dpts_; // matched 3d points

vector match_2dkp_index_; // matched 2d pixels (index of kp_curr)

//3、T变成了Tcw而不是之前的Tcr,当前帧直接和地图点区别绝对变化位姿,而非与参考帧之间找相对位姿变化

SE3 T_c_w_estimated_; // 目前帧的估计位姿

int num_inliers_; // number of inlier features in icp

int num_lost_; // number of lost times

// parameters

int num_of_features_; // number of features

double scale_factor_; // scale in image pyramid

int level_pyramid_; // number of pyramid levels

float match_ratio_; // ratio for selecting good matches

int max_num_lost_; // max number of continuous lost times

int min_inliers_; // minimum inliers

double key_frame_min_rot; // minimal rotation of two key-frames

double key_frame_min_trans; // minimal translation of two key-frames

double map_point_erase_ratio_; // remove map point ratio

public: // functions

VisualOdometry();

~VisualOdometry();

bool addFrame( Frame::Ptr frame ); // add a new frame

protected:

// inner operation

void extractKeyPoints();

void computeDescriptors();

void featureMatching();

void poseEstimationPnP();

//维护地图的函数

void optimizeMap();

void addKeyFrame();

//往地图中添加点的函数

void addMapPoints();

bool checkEstimatedPose();

bool checkKeyFrame();

//获取视角的函数

double getViewAngle( Frame::Ptr frame, MapPoint::Ptr point );

};

}

#endif

VisualOdometry.cpp

#include

#include

#include

#include

#include

#include "myslam/config.h"

#include "myslam/visual_odometry.h"

#include "myslam/g2o_types.h"

namespace myslam

{

VisualOdometry::VisualOdometry() :

state_ ( INITIALIZING ), ref_ ( nullptr ), curr_ ( nullptr ), map_ ( new Map ), num_lost_ ( 0 ), num_inliers_ ( 0 ), matcher_flann_ ( new cv::flann::LshIndexParams ( 5,10,2 ) )

{

num_of_features_ = Config::get ( "number_of_features" );

scale_factor_ = Config::get ( "scale_factor" );

level_pyramid_ = Config::get ( "level_pyramid" );

match_ratio_ = Config::get ( "match_ratio" );

max_num_lost_ = Config::get ( "max_num_lost" );

min_inliers_ = Config::get ( "min_inliers" );

key_frame_min_rot = Config::get ( "keyframe_rotation" );

key_frame_min_trans = Config::get ( "keyframe_translation" );

map_point_erase_ratio_ = Config::get ( "map_point_erase_ratio" );

orb_ = cv::ORB::create ( num_of_features_, scale_factor_, level_pyramid_ );

}

VisualOdometry::~VisualOdometry()

{

}

bool VisualOdometry::addFrame ( Frame::Ptr frame )

{

switch ( state_ )

{

case INITIALIZING:

{

state_ = OK;

curr_ = ref_ = frame;

// extract features from first frame and add them into map

extractKeyPoints();

computeDescriptors();

addKeyFrame(); // the first frame is a key-frame

break;

}

case OK:

{

curr_ = frame;

//T_c_w_ 为世界坐标系-相机坐标系的变换矩阵, 新帧先赋值为参考帧的位姿T

curr_->T_c_w_ = ref_->T_c_w_;

extractKeyPoints();

computeDescriptors();

featureMatching();

poseEstimationPnP();

if ( checkEstimatedPose() == true ) // a good estimation

{

//T_c_w_estimated_是通过solvePnPRansac粗估计、g2o优化后的当前帧相对于局部地图的变换矩阵

curr_->T_c_w_ = T_c_w_estimated_;

optimizeMap();

num_lost_ = 0;

if ( checkKeyFrame() == true ) // is a key-frame

{

addKeyFrame();

}

}

else // bad estimation due to various reasons

{

num_lost_++;

if ( num_lost_ > max_num_lost_ )

{

state_ = LOST;

}

return false;

}

break;

}

case LOST:

{

cout<<"vo has lost."<detect ( curr_->color_, keypoints_curr_ );

cout<<"extract keypoints cost time: "<compute ( curr_->color_, keypoints_curr_, descriptors_curr_ );

cout<<"descriptor computation cost time: "< matches;

//1. 选出视野内候选点

//建立装描述子的目标图,匹配需要的是描述子

Mat desp_map;

//建立候选地图点数组

vector candidate;

//检查地图点是否是匹配需要的(地图点在当前帧可观察到)

for ( auto& allpoints: map_->map_points_ )

{

//把路标点取出,赋值给p,map_里保存着两个数据,关键帧和路标点

MapPoint::Ptr& p = allpoints.second;

// check if p in curr frame image

if ( curr_->isInFrame(p->pos_) )

{

// add to candidate

p->visible_times_++;

candidate.push_back( p );

desp_map.push_back( p->descriptor_ );

}

}

// 2. 候选点描述子地图与当前帧进行匹配

matcher_flann_.match ( desp_map, descriptors_curr_, matches );

// 3. 这里返回最小距离

float min_dis = std::min_element (

matches.begin(), matches.end(),

[] ( const cv::DMatch& m1, const cv::DMatch& m2 )

{

return m1.distance < m2.distance;

} )->distance;

// 4. candidate只是中间存储,新帧会刷新

match_3dpts_.clear();

match_2dkp_index_.clear();

for ( cv::DMatch& m : matches )

{

if ( m.distance < max ( min_dis*match_ratio_, 30.0 ) )

{

//match_3dpts_对应good matches中候选3D路标点

match_3dpts_.push_back( candidate[m.queryIdx] );

//match_2dkp_index_对应good matches当前帧中特征点

match_2dkp_index_.push_back( m.trainIdx );

}

}

cout<<"good matches: "< pts3d;

vector pts2d;

//这里用的3d-2d点,是featureMatching()函数中匹配好的

for ( int index:match_2dkp_index_ )

{

pts2d.push_back ( keypoints_curr_[index].pt );

}

for ( MapPoint::Ptr pt:match_3dpts_ )

{

pts3d.push_back( pt->getPositionCV() );

}

Mat K = ( cv::Mat_ ( 3,3 ) <<

ref_->camera_->fx_, 0, ref_->camera_->cx_,

0, ref_->camera_->fy_, ref_->camera_->cy_,

0,0,1

);

Mat rvec, tvec, inliers;

//使用随机采样一致性算法求出R t的初值

cv::solvePnPRansac ( pts3d, pts2d, K, Mat(), rvec, tvec, false, 100, 4.0, 0.99, inliers );

num_inliers_ = inliers.rows;

cout<<"pnp inliers: "< ( 0,0 ), rvec.at ( 1,0 ), rvec.at ( 2,0 ) ),

Vector3d ( tvec.at ( 0,0 ), tvec.at ( 1,0 ), tvec.at ( 2,0 ) )

);

// using bundle adjustment to optimize the pose

typedef g2o::BlockSolver> Block;

Block::LinearSolverType* linearSolver = new g2o::LinearSolverDense();

Block* solver_ptr = new Block ( linearSolver );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr );

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm ( solver );

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();

pose->setId ( 0 );

pose->setEstimate ( g2o::SE3Quat (

T_c_w_estimated_.rotation_matrix(), T_c_w_estimated_.translation()

));

optimizer.addVertex ( pose );

// edges

for ( int i=0; i ( i,0 );

// 3D -> 2D projection

EdgeProjectXYZ2UVPoseOnly* edge = new EdgeProjectXYZ2UVPoseOnly();

edge->setId ( i );

edge->setVertex ( 0, pose );

edge->camera_ = curr_->camera_.get();

edge->point_ = Vector3d ( pts3d[index].x, pts3d[index].y, pts3d[index].z );

edge->setMeasurement ( Vector2d ( pts2d[index].x, pts2d[index].y ) );

edge->setInformation ( Eigen::Matrix2d::Identity() );

optimizer.addEdge ( edge );

// set the inlier map points

match_3dpts_[index]->matched_times_++;

}

optimizer.initializeOptimization();

optimizer.optimize ( 10 );

T_c_w_estimated_ = SE3 (

pose->estimate().rotation(),

pose->estimate().translation()

);

cout<<"T_c_w_estimated_: "<T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

if ( d.norm() > 5.0 )

{

cout<<"reject because motion is too large: "<T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

Vector3d trans = d.head<3>();

Vector3d rot = d.tail<3>();

if ( rot.norm() >key_frame_min_rot || trans.norm() >key_frame_min_trans )

return true;

return false;

}

void VisualOdometry::addKeyFrame()

{

if ( map_->keyframes_.empty() )

{

// 1、first key-frame, add all 3d points into map

for ( size_t i=0; ifindDepth ( keypoints_curr_[i] );

if ( d < 0 )

continue;

//先找深度d,像素转3D世界坐标

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ), curr_->T_c_w_, d

);

//世界坐标减去相机光心坐标,求得模长

Vector3d n = p_world - ref_->getCamCenter();//

n.normalize();

//构造一个地图点,3D点、模长、描述子、帧

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

//添加进地图

map_->insertMapPoint( map_point );

}

}

//2. 添加关键帧到地图中

map_->insertKeyFrame ( curr_ );

ref_ = curr_;

}

void VisualOdometry::addMapPoints()

{

// add the new map points into map

vector matched(keypoints_curr_.size(), false);

for ( int index:match_2dkp_index_ )

matched[index] = true;

for ( int i=0; ifindDepth ( keypoints_curr_[i] );

if ( d<0 )

continue;

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ),

curr_->T_c_w_, d

);

Vector3d n = p_world - ref_->getCamCenter();

n.normalize();

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

map_->insertMapPoint( map_point );

}

}

void VisualOdometry::optimizeMap()

{

// remove the hardly seen and no visible points

//1. 删除地图点

for ( auto iter = map_->map_points_.begin(); iter != map_->map_points_.end(); )

{

//1.1 当前帧看不见该点,删除

if ( !curr_->isInFrame(iter->second->pos_) )

{

iter = map_->map_points_.erase(iter);

continue;

}

//1.2 匹配率=匹配次数/可见次数,过低删除

float match_ratio = float(iter->second->matched_times_)/iter->second->visible_times_;

if ( match_ratio < map_point_erase_ratio_ )

{

iter = map_->map_points_.erase(iter);

continue;

}

// 1.3 角度大于

double angle = getViewAngle( curr_, iter->second );

if ( angle > M_PI/6. )

{

iter = map_->map_points_.erase(iter);

continue;

}

// 1.4 不是好点

if ( iter->second->good_ == false )

{

// TODO try triangulate this map point

}

iter++;

}

// 2. 增加点

// 2.1 当前帧与地图匹配点少于100个,

if ( match_2dkp_index_.size()<100 )

addMapPoints();

if ( map_->map_points_.size() > 1000 )

{

// TODO map is too large, remove some one

map_point_erase_ratio_ += 0.05;

}

// 2.2 点太多于1000,增加释放率,否则维持0.1

else

map_point_erase_ratio_ = 0.1;

cout<<"map points: "<map_points_.size()<pos_ - frame->getCamCenter();

//单位化

n.normalize();

//得到角度,反余弦

return acos( n.transpose()*point->norm_ );

}

}