Scikit-learn与Tensorflow_Aurelien——2017学习笔记 chapter1-2

一. 机器学习资源

1.机器学习code example 开源网站https://github.com/ageron/handson-ml

2.机器学习网上课程

吴恩达:https://www.coursera.org/learn/machine-learning/lecture/sHfVT/optimization-objective

Geoffrey Hinton: https://www.coursera.org/learn/neural-networks

5.python学习http://learnpython.org/,https://docs.python.org/3/tutorial/

python主要的科学库NumPy, Pandas ,Matplotlib

6. 相关书籍:

Data Science from Scratch

Machine Learning: An Algorithmic Perspective (Chapman and

Hall)

Python Machine Learning (Packt Publishing)

Learning from Data (AMLBook)

Artificial Intelligence: A Modern Approach, 3rd Edition (Pearson)

7. 加入机器学习竞赛的网站:https://www.kaggle.com/

8. 大牛解答tensorflow相关问题的网站:

Pete: https://petewarden.com/

Lukas: https://lukasbiewald.com/ ,

https://www.oreilly.com/learning/how-to-build-a-robot-that-sees-with-100-and-tensorflow

Justin: https://www.oreilly.com/people/justin-francis

David :http://www.david-andrzejewski.com/

二. 机器学习概念和类型

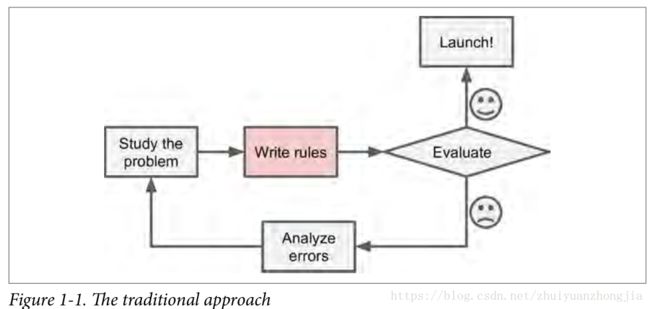

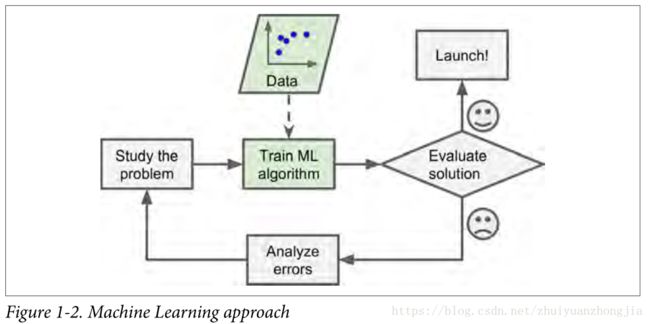

1.传统问题解决方式与机器学习方式

2.机器学习能解决什么问题

(1)Problems for which existing solutions require a lot of hand-tuning or long lists of

rules: one Machine Learning algorithm can often simplify code and perform bet‐

ter.

(2) Complex problems for which there is no good solution at all using a traditional

approach: the best Machine Learning techniques can find a solution.

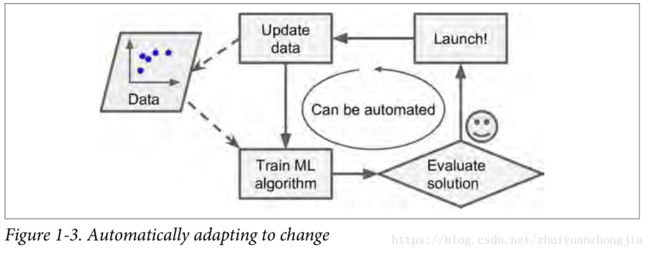

(3) Fluctuating environments: a Machine Learning system can adapt to new data.

(4)Getting insights about complex problems and large amounts of data.

3. 机器学习系统的类型

There are so many different types of Machine Learning systems that it is useful to

classify them in broad categories based on:

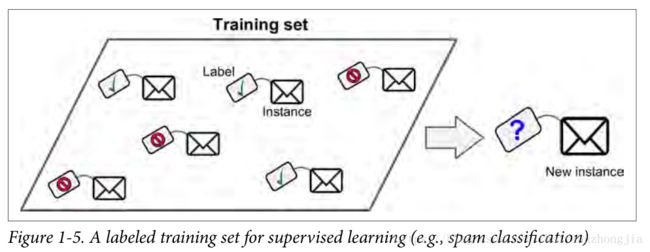

• Whether or not they are trained with human supervision (supervised, unsuper‐

vised, semisupervised, and Reinforcement Learning)

• Whether or not they can learn incrementally on the fly (online versus batch

learning)

• Whether they work by simply comparing new data points to known data points,

or instead detect patterns in the training data and build a predictive model, much

like scientists do (instance-based versus model-based learning)

• Linear Regression

• Logistic Regression

• Support Vector Machines (SVMs)

• Decision Trees and Random Forests

• Neural networks

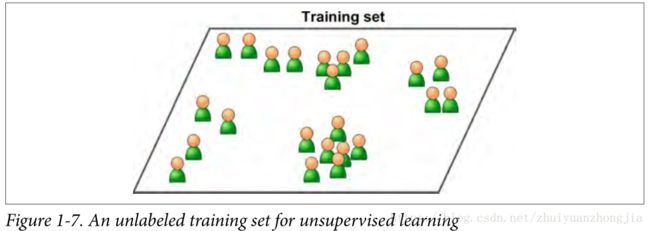

(2)非监督学习:

• Clustering

— k-Means

— Hierarchical Cluster Analysis (HCA)

— Expectation Maximization

• Visualization and dimensionality reduction

— Principal Component Analysis (PCA)

— Kernel PCA

— Locally-Linear Embedding (LLE)

— t-distributed Stochastic Neighbor Embedding (t-SNE)

• Association rule learning

— Apriori

— Eclat

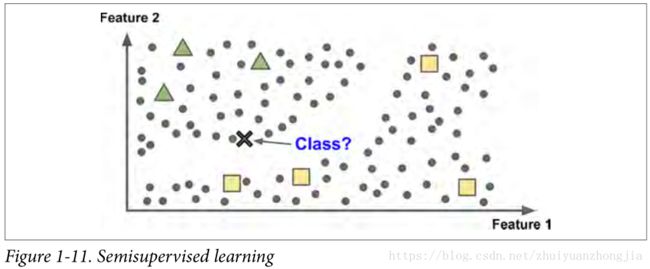

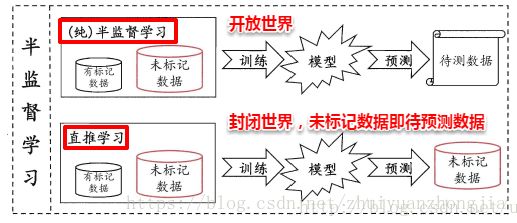

(3)半监督学习:

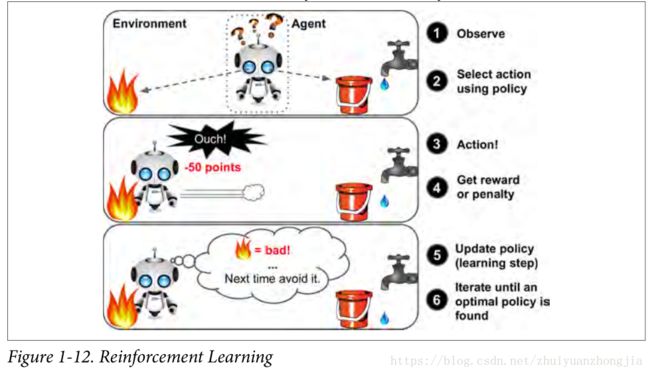

(4)强化学习:

Batch and Online Learning

(1)Batch learning(offline learning)

In batch learning, the system is incapable of learning incrementally: it must be trained using all the available data. This will generally take a lot of time and computing resources, so it is typically done offline. First the system is trained, and then it is launched into production and runs without learning anymore; it just applies what it has learned. This is called offline learning. If you want a batch learning system to know about new data (such as a new type of spam), you need to train a new version of the system from scratch on the full dataset (not just the new data, but also the old data), then stop the old system and replace it with the new one.

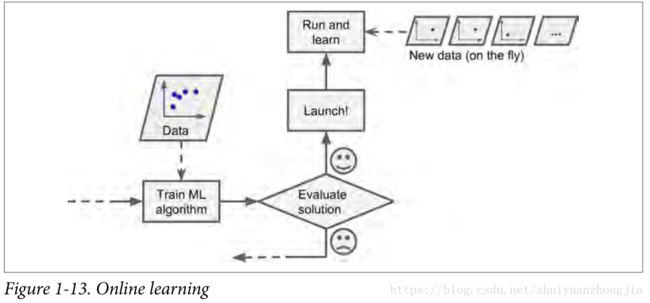

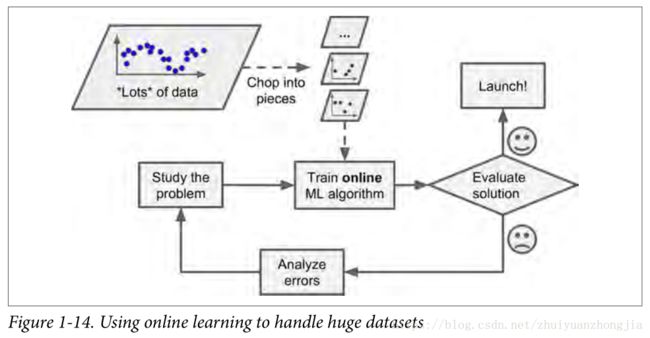

(2) Online learning(done offline,incremental learning)

In online learning, you train the system incrementally by feeding it data instances sequentially, either individually or by small groups called mini-batches. Each learningstep is fast and cheap, so the system can learn about new data on the fly, as it arrives.

Online learning is great for systems that receive data as a continuous flow (e.g., stock prices) and need to adapt to change rapidly or autonomously.once an online learning system has learned about new data instances, it does not need them anymore, so you can discard them

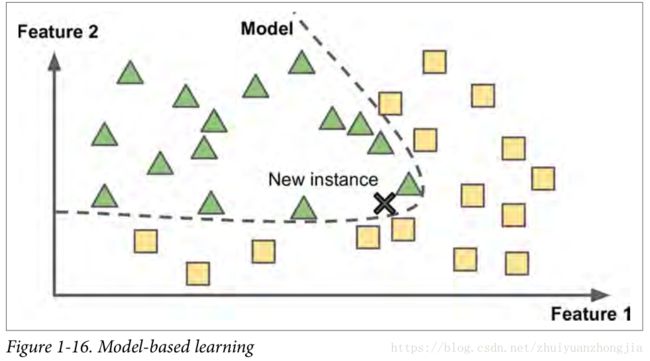

Instance-Based Versus Model-Based Learning

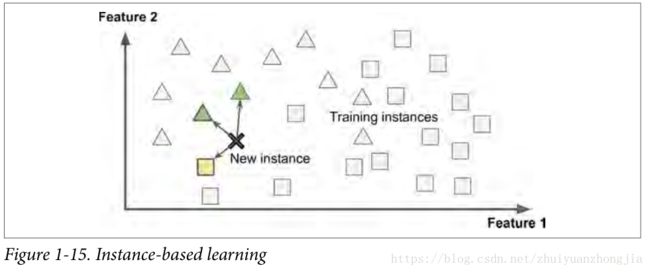

(1)Instance-based learning

the system learns the examples by heart, then generalizes to new cases using a similarity measure

(2)Model-based learning

Another way to generalize from a set of examples is to build a model of these exam‐ples, then use that model to make predictions.

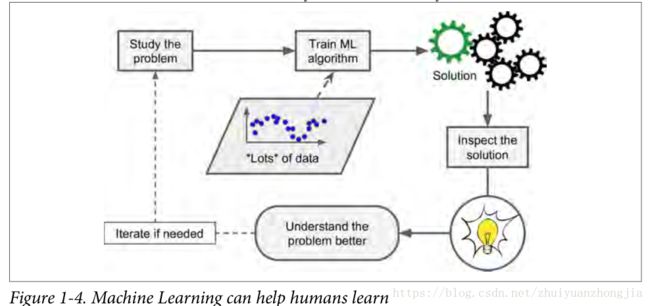

4. In summary,typical Machine Learning project

• You studied the data.

• You selected a model.

• You trained it on the training data (i.e., the learning algorithm searched for the

model parameter values that minimize a cost function).

• Finally, you applied the model to make predictions on new cases (this is called

inference), hoping that this model will generalize well.

(1).Insufficient Quantity of Training Data

it takes a lot of data for most Machine Learning algorithms to work properly.

(2).Nonrepresentative Training Data

In order to generalize well, it is crucial that your training data be representative of the new cases you want to generalize to. if the sample is too small, you will have sampling noise (i.e., nonrepresentative data as a result of chance), but even very large samples can be nonrepresentative if the sampling method is flawed.

(3).Poor-Quality Data

Obviously, if your training data is full of errors, outliers, and noise (e.g., due to poor-quality measurements), it will make it harder for the system to detect the underlying patterns, so your system is less likely to perform well. It is often well worth the effort to spend time cleaning up your training data. The truth is, most data scientists spend a significant part of their time doing just that.

For example:

• If some instances are clearly outliers, it may help to simply discard them or try to

fix the errors manually.

• If some instances are missing a few features (e.g., 5% of your customers did not

specify their age), you must decide whether you want to ignore this attribute alto‐

gether, ignore these instances, fill in the missing values (e.g., with the median

age), or train one model with the feature and one model without it, and so on.

(4).Irrelevant Features

A critical part of the success of a Machine Learning project is coming up with a

good set of features to train on. This process, called feature engineering, involves:

• Feature selection: selecting the most useful features to train on among existing

features.

• Feature extraction: combining existing features to produce a more useful one (as

we saw earlier, dimensionality reduction algorithms can help).

• Creating new features by gathering new data.

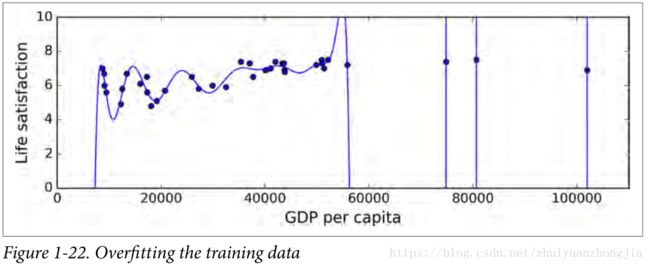

(5).Overfitting the Training Data

In Machine Learning this is called overfitting: it means that the model performs well on the training data, but it does not generalize well.

Overfitting happens when the model is too complex relative to the amount and noisiness of the training data. The possible solutions are:

• To simplify the model by selecting one with fewer parameters(e.g., a linear model rather than a high-degree polynomial model), by reducing the number of attributes in the training data or by constraining the model

• To gather more training data

• To reduce the noise in the training data (e.g., fix data errors and remove outliers)

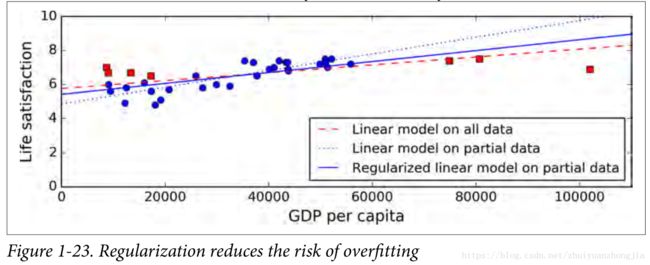

You want to find the right balance between fitting the data perfectly and keeping the model simple enough to ensure that it will generalize well.

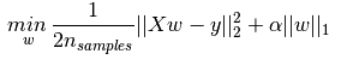

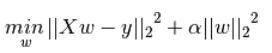

正则化:

正则化通俗讲可以削弱不重要的特征变量,正常来说正则化有L1和L2范数。

那添加L1和L2正则化有什么用?下面是L1正则化和L2正则化的作用

- L1正则化可以产生稀疏权值矩阵,即产生一个稀疏模型,可以用于特征选择

- L2正则化可以防止模型过拟合(overfitting);一定程度上,L1也可以防止过拟合

在原始代价函数后面加上一个L1/L2的正则项,由于参数更新是通过代价函数求导后计算更新得来,正则化项对b的更新没有影响,但是对于w的更新有影响,更新后效果就是让w往0靠,使网络中的权重尽可能为0,也就相当于减小了网络复杂度,防止过拟合.

为什么减小w能够防止过拟合呢?一种简单的解释是:更小的权值w,从某种意义来说,表示网络的复杂度更小,对数据的拟合刚刚好(此举也称作机器学习的奥卡姆剃刀)。当然,从更深层次角度看,过拟合的时候,拟合函数的系数往往非常大,过拟合就是拟合函数需要顾忌每一个点,最终形成的拟合函数波动很大。在某些很小的区间里,函数值的变化很剧烈。这就意味着函数在某些小区间里的导数值(绝对值)非常大,由于自变量值可大可小,所以只有系数足够大,才能保证导数值很大,而正则化是通过约束参数的范数使其不要太大,所以可以在一定程度上减少过拟合情况。

(6)Underfitting the Training Data

it occurs when your model is too simple to learn the underlying structure of the data.

The main options to fix this problem are:

• Selecting a more powerful model, with more parameters

• Feeding better features to the learning algorithm (feature engineering)

• Reducing the constraints on the model (e.g., reducing the regularization hyper‐parameter)

SUMMARY

• Machine Learning is about making machines get better at some task by learningfrom data, instead of having to explicitly code rules.

• There are many different types of ML systems: supervised or not, batch or online,instance-based or model-based, and so on.

• In a ML project you gather data in a training set, and you feed the training set to a learning algorithm. If the algorithm is model-based it tunes some parameters to fit the model to the training set (i.e., to make good predictions on the training set itself), and then hopefully it will be able to make good predictions on new cases as well. If the algorithm is instance-based, it just learns the examples by heart and uses a similarity measure to generalize to new instances.

• The system will not perform well if your training set is too small, or if the data is not representative, noisy, or polluted with irrelevant features (garbage in, garbage out). Lastly, your model needs to be neither too simple (in which case it will underfit) nor too complex (in which case it will overfit).

6.Testing and Validating

(1)Training set, validation set ,test set

(2)cross-validation: the training set is split into complementary subsets, and eachmodel is trained against a different combination of these subsets and validated against the remaining parts.

k-折交叉验证:将训练数据等分成k份(k通常的取值为3、5或10)

– 重复k次每次留出一份做校验,其余k-1份做训练

– k次校验集上的平均性能视为模型在测试集上性能的估计,该估计比train_test_split得到的估计方差更小

注意:如果每类样本不均衡或类别数较多,采用StratifiedKFold(有些类别少,有些类别多,不会每一份都属于同一个类别), 将数据集中每一类样本的数据等分.

三. End-to-End Machine Learning Project

1. Look at the big picture.

2. Get the data.

3. Discover and visualize the data to gain insights.

4. Prepare the data for Machine Learning algorithms.

5. Select a model and train it.

6. Fine-tune your model.

7. Present your solution.

8. Launch, monitor, and maintain your system.

1. Look at the Big Picture

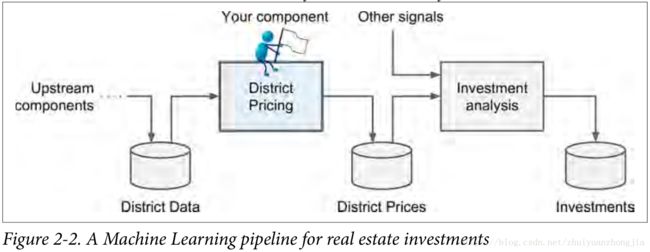

(1)Frame the Problem

what exactly is the business objective?How does the company expect to use and benefit from this model?The next question to ask is what the current solution looks like (if any).

First, you need to frame the problem: is it supervised, unsupervised, or Reinforce‐ment Learning? Is it a classification task, a regression task, or something else? Should you use batch learning or online learning techniques? Before you read on, pause and try to answer these questions for yourself.

(2)Select a Performance Measure

(3)Check the Assumptions

2. Get the Data

下载数据,查看数据结构

(1)测试集准备

注意data snooping bias, 随机选取20%,应用hash()

相关函数:os.path.join(), urllib. request.urlretrieve(), extractall() , random.permutation(),iloc[], loc[] ,hash() ,

hash.digets(), apply(), reset_index() , train_test_split(), split_train_test(),where(),StratifiedShuffleSplit(), split(),drop()

3. Discover and Visualize the Data to Gain Insights

函数:copy(),plot(),corr(),sort_values()

4.Prepare the Data for Machine Learning Algorithms

(1)Data cleaning:

• Get rid of the corresponding districts.

• Get rid of the whole attribute.

• Set the values to some value (zero, the mean, the median, etc.).

函数:dropna(),drop(),fillna(),median(),Imputer(),fit(),transform(),fit_transform()

Scikit-Learn Design:

可参考:https://www.jianshu.com/p/516f009c0875

(2)Handling Text and Categorical Attributes:

函数:LabelEncoder(),OneHotEncoder(),toarray(),LabelBinarizer()

(3)Custom Transformers

函数: get_params(), set_params()

(4)Feature Scaling

函数:MixMaxScaler(), StandardScaler()

(5) Transformation Pipelines

函数:Pipeline(), LabelBinarizer(),FeatureUnion(),list()

5.Select and Train a Model

(1)Training and Evaluating on the Training Set

函数:LinearRegression(),fit(),iloc(),predict(),mean_squared_error(),sqrt(),DecisionTreeRegressor()

(2)Better Evaluation Using Cross-Validation

函数:train_test_split(),cross_val_score(),RandomForestRegressor(),dump(),load()

6.Fine-Tune Your Model

Grid Search

函数:GridSearchCV()

Randomized Search

Ensemble Methods

Analyze the Best Models and Their Errors

Evaluate Your System on the Test Set

7. Launch, Monitor, and Maintain Your System