Android image and video programming: the Image class and YUV in detail

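The Image class was introduced in Android API 19, but it only became really useful in API 21, with the arrival of CameraDevice and the enhancements to MediaCodec. API 21 introduced Camera2 and deprecated Camera, establishing Image as the carrier for the raw frame data delivered by the camera; MediaCodec, the hardware codec class, gained full support for Image and for ImageReader, which wraps Image. It seems likely that Image will be used to unify the management of Android's messy intermediate image data (here, intermediate image data means things like the various YUV-format buffers that are created and destroyed during processing).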

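YUV describes a color space with three components, Y, U and V, where Y is the luminance and U and V are the chrominance. Unlike RGB, where every pixel has its own R, G and B values, YUV comes in variants such as YUV444, YUV422 and YUV420, depending on how many U and V samples are kept. In YUV420 every pixel has its own luminance value (the Y component), while each chrominance value (U and V) is shared by 4 pixels. For example, a 4x4 image in YUV420 has 16 Y values, 4 U values and 4 V values.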
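The sample counts above can be checked with a few lines of arithmetic. The sketch below is a plain-Java illustration; the class and method names are made up for this example and are not part of any Android API, and it assumes 8-bit samples stored without padding.

```java
/** Sample counts for an 8-bit YUV420 frame (illustrative helper, not an Android API). */
final class Yuv420Size {
    /** One luma (Y) sample per pixel. */
    static int lumaSamples(int width, int height) {
        return width * height;
    }

    /** One U sample (and one V sample) per 2x2 block of pixels; odd sizes round up. */
    static int chromaSamples(int width, int height) {
        return ((width + 1) / 2) * ((height + 1) / 2);
    }

    /** Total bytes when every sample is stored tightly as one byte. */
    static int totalBytes(int width, int height) {
        return lumaSamples(width, height) + 2 * chromaSamples(width, height);
    }
}
```

For the 4x4 example, `Yuv420Size.lumaSamples(4, 4)` is 16 and `Yuv420Size.chromaSamples(4, 4)` is 4, so the tightly packed frame takes 24 bytes, which is 1.5 bytes per pixel.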

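The idea behind YUV is to separate luminance (luma) from chrominance (chroma).

"Y" is the luminance, that is, the grayscale value.

"U" is the difference between the blue channel and the luminance.

"V" is the difference between the red channel and the luminance.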
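To make the three definitions concrete, here is a minimal sketch of an RGB to YUV conversion for one pixel. It assumes the full-range BT.601 coefficients used by JFIF/JPEG; the article does not say which matrix a given camera or codec uses, so treat the coefficients and the class name as assumptions made for illustration.

```java
/** RGB -> YUV for one pixel, full-range BT.601 (JFIF) coefficients; illustrative only. */
final class RgbToYuv {
    /** Rounds and clamps a value to the 0..255 range of an unsigned byte. */
    private static int clamp(double value) {
        return (int) Math.max(0, Math.min(255, Math.round(value)));
    }

    /** Returns {Y, U, V}, each in 0..255. U and V are offset by 128 so that gray maps to 128. */
    static int[] convert(int r, int g, int b) {
        double y = 0.299 * r + 0.587 * g + 0.114 * b;             // weighted brightness
        double u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128;  // scaled (B - Y)
        double v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128;   // scaled (R - Y)
        return new int[] { clamp(y), clamp(u), clamp(v) };
    }
}
```

With these coefficients any gray pixel gives U = V = 128, pure blue pushes U to its maximum and pure red pushes V to its maximum, which matches the description of U and V as blue and red differences.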

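An image converted with U or V missing sometimes still looks normal. Test it with a color chart, though, and you will find that blue or red is no longer reproduced accurately.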

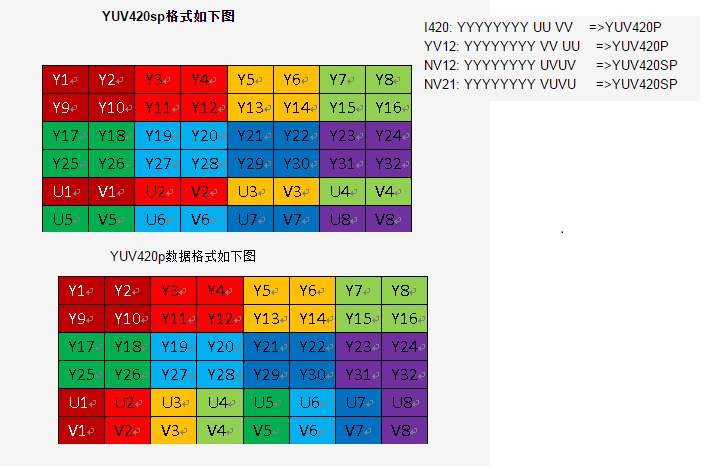

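YUV420 is a family of formats; the name alone does not determine the order in which the samples are stored. Depending on that order, YUV420 is further divided into formats such as YUV420Planar, YUV420PackedPlanar, YUV420SemiPlanar and YUV420PackedSemiPlanar, all of which carry exactly the same information. For a 4x4 image, every YUV420 format holds 16 Y values, 4 U values and 4 V values; the formats differ only in how Y, U and V are arranged. I420 (a kind of YUV420Planar) is YYYYYYYYYYYYYYYY UUUU VVVV, while NV21 (a kind of YUV420SemiPlanar) is YYYYYYYYYYYYYYYY VU VU VU VU.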
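Because the formats differ only in ordering, converting between them is a pure rearrangement of bytes. The following sketch turns a tightly packed I420 buffer into NV21; it assumes even width and height and no row padding, and the class name is hypothetical.

```java
/** Reorders a tightly packed I420 buffer (Y, U, V) into NV21 (Y, interleaved VU). */
final class I420ToNv21 {
    static byte[] convert(byte[] i420, int width, int height) {
        int ySize = width * height;
        int chromaSize = (width / 2) * (height / 2); // samples per chroma plane
        if (i420.length < ySize + 2 * chromaSize) {
            throw new IllegalArgumentException("input buffer too small");
        }
        byte[] nv21 = new byte[ySize + 2 * chromaSize];
        // The Y plane is identical in both layouts.
        System.arraycopy(i420, 0, nv21, 0, ySize);
        // I420 stores all U then all V; NV21 stores V and U alternately, V first.
        for (int i = 0; i < chromaSize; i++) {
            nv21[ySize + 2 * i] = i420[ySize + chromaSize + i]; // V
            nv21[ySize + 2 * i + 1] = i420[ySize + i];          // U
        }
        return nv21;
    }
}
```

Swapping the two assignments inside the loop would produce NV12 instead.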

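Camera2 delivers Image objects in YUV_420_888, whereas the old Camera API defaults to NV21, which is also a YUV420 format. (Android supports 4:2:2 encodings as well.) getFormat() reports the Camera2 Image type as YUV_420_888. A YUV_420_888 image exposes three planes through getPlanes(): plane 0 is Y, plane 1 is U and plane 2 is V. As with every YUV420 format, four Y samples correspond to one U and one V, that is, four pixels share four Y values, one U and one V. The main difference from the tightly packed YUV420 formats is that on many devices each U sample and each V sample occupies two bytes in its plane (pixelStride is 2), so the three planes together take roughly width x height x 2 bytes instead of width x height x 1.5. The extra byte looks like padding with a value near 127, but the byte dump later in this article shows that it is really the sample of the other chroma plane. Before converting to another YUV format you therefore either drop the extra bytes, or treat the extra bytes in the U plane as V (or the extra bytes in the V plane as U).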
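The logs below print `image format: 35`, which is the integer that getFormat() returns for YUV_420_888. A small lookup like the following makes such logs easier to read. The constants mirror values from android.graphics.ImageFormat and are copied here only so that the sketch compiles without the Android SDK; in an app you would compare against the ImageFormat constants directly. The class name is hypothetical.

```java
/** Maps a few android.graphics.ImageFormat integer values to readable names. */
final class ImageFormatNames {
    // Values copied from android.graphics.ImageFormat so the example runs off-device.
    static final int NV21 = 0x11;         // 17
    static final int YUV_420_888 = 0x23;  // 35
    static final int YUV_422_888 = 0x27;  // 39
    static final int JPEG = 0x100;        // 256
    static final int YV12 = 0x32315659;

    static String name(int format) {
        switch (format) {
            case NV21: return "NV21";
            case YUV_420_888: return "YUV_420_888";
            case YUV_422_888: return "YUV_422_888";
            case JPEG: return "JPEG";
            case YV12: return "YV12";
            default: return "unknown(" + format + ")";
        }
    }
}
```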

Planar

    image format: 35
    get data from 3 planes
    pixelStride 1
    rowStride 1920
    width 1920
    height 1080
    buffer size 2088960
    Finished reading data from plane 0
    pixelStride 1
    rowStride 960
    width 1920
    height 1080
    buffer size 522240
    Finished reading data from plane 1
    pixelStride 1
    rowStride 960
    width 1920
    height 1080
    buffer size 522240
    Finished reading data from plane 2

In the planar layout, a 4x4 image takes 16+4+4 bytes in three planes. Plane 0 holds Y and is as large as the image. Plane 1 holds U, one U per four Y, so it is a quarter of the size of Y. Plane 2 holds V, one V per four Y, so it is also a quarter of the size of Y, the same as U. The layout is as follows:

    YYYY
    YYYY
    YYYY
    YYYY
    UUUU
    VVVV

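SemiPlanar: this is the YUV_420_888 layout that an Android Image delivers by default

    image format: 35
    get data from 3 planes
    pixelStride 1
    rowStride 1920
    width 1920
    height 1080
    buffer size 2088960
    Finished reading data from plane 0
    pixelStride 2
    rowStride 1920
    width 1920
    height 1080
    buffer size 1044479
    Finished reading data from plane 1
    pixelStride 2
    rowStride 1920
    width 1920
    height 1080
    buffer size 1044479
    Finished reading data from plane 2

SemiPlanar is the layout that Android Camera2 usually delivers. Camera2 outputs to an ImageReader, and the image formats an ImageReader supports depend on the hardware: some phones support more formats and some fewer, but all of them support YUV_420_888. In this layout a 4x4 image takes 16+7+7 bytes, again in three planes. Plane 0 holds Y exactly as in any other YUV420 format, width x height bytes. Plane 1 is U, and its size is half of Y minus 1: every U byte is followed by one extra byte (shown as 0xff below), except that the extra byte after the very last U is omitted. The V plane is laid out the same way and is also half of Y minus 1. The layout is as follows:

    YYYY
    YYYY
    YYYY
    YYYY
    U 0xff U 0xff
    U 0xff U
    V 0xff V 0xff
    V 0xff V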

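The image format is still YUV_420_888 and the Y plane is the same as in the planar case above, but the U and V planes have changed: their buffer size is 1/2 of the Y plane. If the U plane held only U samples it should be 1/4, so there is an extra 1/4 of content, which we will look at closely in a moment. Note that rowStride for U is 1920, meaning every 1920 bytes of the U plane make up one row, while pixelStride is 2, meaning consecutive samples within a row are one byte apart; in other words only indices 0, 2, 4, 6, ... within a row hold U data. So every two pixels in a row still share one U value, and every two rows share one row of U values, which is exactly YUV420.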
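The "half of Y minus 1" size follows from the strides: a plane buffer only has to reach the last valid sample, so nothing is needed after it. The sketch below computes that minimum length. The formula is simple arithmetic rather than a documented Android guarantee, and the class and method names are hypothetical.

```java
/** Smallest buffer length that can hold a plane with the given strides (illustrative). */
final class PlaneSize {
    /**
     * @param rowStride   bytes between the starts of two consecutive rows
     * @param pixelStride bytes between two consecutive samples in a row
     * @param cols        samples per row
     * @param rows        number of rows
     */
    static int minBytes(int rowStride, int pixelStride, int cols, int rows) {
        // Offset of the last sample, plus one byte for the sample itself.
        return rowStride * (rows - 1) + pixelStride * (cols - 1) + 1;
    }
}
```

For the 4x4 example, `PlaneSize.minBytes(4, 2, 2, 2)` is 7, matching the 16+7+7 layout. The logged sizes (2088960, 522240 and 1044479) match the formula when the row count is taken as 1088 for Y and 544 for chroma rather than 1080 and 540, which suggests that this device pads the buffer height to 1088 rows. That is an inference from the numbers, not something the logs state.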

Both U and V have a pixelStride of 2. Let's pick 20 bytes at the same position from U and from V and see how they relate.

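    124 -127 124 -127 123 -127 122 -127 122 -127 123 -127 123 -127 123 -127 122 -127 123 -127
    -127 124 -127 123 -127 122 -127 122 -127 123 -127 123 -127 123 -127 122 -127 123 -127 123

The first row comes from U and the second from V; the first byte of each row has the same index, and that index is even. The U and V planes are clearly the same data shifted by one byte, and the shift guarantees that reading every other byte starting from index 0 always yields the plane's own samples. Put simply, the U and V planes are both copies of the same interleaved UV data.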

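So to read the U samples you just step through the plane in increments of pixelStride, and likewise for V. You could also recover both U and V from the U plane alone or from the V plane alone, but the official documentation does not guarantee this, so doing so carries some risk. For more detail, read the Android source code.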
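Stepping through a plane by pixelStride and rowStride can be sketched as follows. The example uses only java.nio.ByteBuffer so that it runs without Android; on a device the buffer and strides would come from Image.Plane's getBuffer(), getRowStride() and getPixelStride(). The class name is hypothetical.

```java
import java.nio.ByteBuffer;

/** Copies the valid samples of one plane into a tightly packed array (illustrative). */
final class PlaneExtractor {
    static byte[] extract(ByteBuffer plane, int rowStride, int pixelStride, int cols, int rows) {
        byte[] out = new byte[cols * rows];
        int dst = 0;
        for (int row = 0; row < rows; row++) {
            int rowStart = row * rowStride;
            for (int col = 0; col < cols; col++) {
                // Absolute get: reads by index and leaves the buffer position untouched.
                out[dst++] = plane.get(rowStart + col * pixelStride);
            }
        }
        return out;
    }
}
```

The same method handles the planar case (pixelStride 1) and the interleaved case (pixelStride 2), because it never reads the bytes between samples.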

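Common YUV420 storage formats

P (planar) means U and V are not interleaved; SP (semi-planar) means U and V are stored interleaved.

Non-interleaved: YV12 stores V first and YU12 stores U first. YU12 is also called I420 and corresponds to IYUV in OpenCV. Both are 420p.

Interleaved: NV21 stores V first and NV12 stores U first. The old Android Camera API uses NV21 and iOS uses NV12. Both are 420sp.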
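The four layouts can be summarised by printing the order of samples for a small image. This sketch is only a visual aid; the enum and method names are invented for the example.

```java
/** Prints the sample order of the four common YUV420 layouts (illustrative). */
final class Yuv420Layout {
    enum Format { I420, YV12, NV12, NV21 }

    private static void repeat(StringBuilder sb, String s, int count) {
        for (int i = 0; i < count; i++) {
            sb.append(s);
        }
    }

    /** Returns a string such as "YYYY UV" showing the storage order for the given size. */
    static String describe(Format format, int width, int height) {
        int chroma = (width / 2) * (height / 2);
        StringBuilder sb = new StringBuilder();
        repeat(sb, "Y", width * height);
        sb.append(' ');
        switch (format) {
            case I420: repeat(sb, "U", chroma); sb.append(' '); repeat(sb, "V", chroma); break;
            case YV12: repeat(sb, "V", chroma); sb.append(' '); repeat(sb, "U", chroma); break;
            case NV12: repeat(sb, "UV", chroma); break;
            case NV21: repeat(sb, "VU", chroma); break;
        }
        return sb.toString();
    }
}
```

For a 4x4 image, `Yuv420Layout.describe(Yuv420Layout.Format.NV21, 4, 4)` gives `YYYYYYYYYYYYYYYY VUVUVUVU`, and the I420 variant gives `YYYYYYYYYYYYYYYY UUUU VVVV`.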

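To sum up:

Converting an Image in YUV_420_888 to another format takes roughly three steps:

1. Get the Y, U and V plane data through getPlanes().

2. Strip the extra bytes from the U and V data to obtain tightly packed U and V.

3. Recombine Y, U and V into the layout you need.

Note: when 420sp output is needed, I first thought step 2 could be skipped by overwriting the extra bytes of U with V (or the extra bytes of V with U) and then appending the result to Y. This fails with an out-of-bounds error, because the last extra byte of the U and V planes is omitted. Allocating a new buffer and writing U and V into it interleaved does work.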
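The three steps, including the "allocate a new buffer and interleave" variant from the note, can be sketched in plain Java as below. The nested Plane class is a hypothetical stand-in for android.media.Image.Plane so that the example runs without Android; the sketch assumes even width and height and ignores the crop rectangle.

```java
import java.nio.ByteBuffer;

/** Packs three strided planes into a new NV21 array (illustrative sketch). */
final class Yuv420ToNv21 {
    /** Hypothetical stand-in for android.media.Image.Plane. */
    static final class Plane {
        final ByteBuffer buffer;
        final int rowStride;
        final int pixelStride;

        Plane(ByteBuffer buffer, int rowStride, int pixelStride) {
            this.buffer = buffer;
            this.rowStride = rowStride;
            this.pixelStride = pixelStride;
        }

        /** Reads the sample at (row, col) without touching the bytes in between. */
        byte sample(int row, int col) {
            return buffer.get(row * rowStride + col * pixelStride);
        }
    }

    static byte[] toNv21(Plane y, Plane u, Plane v, int width, int height) {
        byte[] out = new byte[width * height * 3 / 2];
        int dst = 0;
        // Steps 1 and 2 for Y: copy only the valid width of each row.
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                out[dst++] = y.sample(row, col);
            }
        }
        // Steps 2 and 3 for chroma: skip the extra bytes and interleave into the new array, V first.
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                out[dst++] = v.sample(row, col);
                out[dst++] = u.sample(row, col);
            }
        }
        return out;
    }
}
```

Because the output is a fresh array and every read goes through the strides, the missing last byte of the U and V planes is never accessed. Writing U before V in the inner loop would give NV12.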

Converting YUV_420_888 to NV21

private ByteBuffer imageToByteBuffer(final Image image) {

final Rect crop = image.getCropRect();

final int width = crop.width();

final int height = crop.height();

final Image.Plane[] planes = image.getPlanes();

final int bufferSize = width * height * ImageFormat.getBitsPerPixel(ImageFormat.YUV_420_888) / 8;

// Heap buffer: output.array() below needs a backing array that starts at offset 0
final ByteBuffer output = ByteBuffer.allocate(bufferSize);

int channelOffset = 0;

int outputStride = 0;

for (int planeIndex = 0; planeIndex < planes.length; planeIndex++) {

if (planeIndex == 0) {

channelOffset = 0;

outputStride = 1;

} else if (planeIndex == 1) {

channelOffset = width * height + 1;

outputStride = 2;

} else if (planeIndex == 2) {

channelOffset = width * height;

outputStride = 2;

}

final ByteBuffer buffer = planes[planeIndex].getBuffer();

final int rowStride = planes[planeIndex].getRowStride();

final int pixelStride = planes[planeIndex].getPixelStride();

byte[] rowData = new byte[rowStride];

final int shift = (planeIndex == 0) ? 0 : 1;

final int widthShifted = width >> shift;

final int heightShifted = height >> shift;

buffer.position(rowStride * (crop.top >> shift) + pixelStride * (crop.left >> shift));

for (int row = 0; row < heightShifted; row++) {

final int length;

if (pixelStride == 1 && outputStride == 1) {

length = widthShifted;

buffer.get(output.array(), channelOffset, length);

channelOffset += length;

} else {

length = (widthShifted - 1) * pixelStride + 1;

buffer.get(rowData, 0, length);

for (int col = 0; col < widthShifted; col++) {

output.array()[channelOffset] = rowData[col * pixelStride];

channelOffset += outputStride;

}

}

if (row < heightShifted - 1) {

buffer.position(buffer.position() + rowStride - length);

}

}

}

return output;

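}

Using OpenCV for the conversion is more efficient; in testing, its speed differed from the Java version by nearly an order of magnitude.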

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_HXAIEngineNative_RGBA2BGRA(JNIEnv *env, jclass type, jbyteArray rgba,

jint width, jint height, jintArray bgra) {

jbyte *_rgba = env->GetByteArrayElements(rgba, 0);

jint *_bgra = env->GetIntArrayElements(bgra, 0);

// RGBA input is one 4-channel pixel per element; cvtColor rejects a 1-channel Mat for RGBA2BGRA
cv::Mat mRgba(height, width, CV_8UC4, (unsigned char *) _rgba);

cv::Mat mBgra(height, width, CV_8UC4, (unsigned char *) _bgra);

cvtColor(mRgba, mBgra, CV_RGBA2BGRA, 4);

env->ReleaseIntArrayElements(bgra, _bgra, 0);

env->ReleaseByteArrayElements(rgba, _rgba, 0);

}

// 420sp, NV21: Y first, then interleaved VU: YYYYYYYY VU VU

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2rgbaNv21(JNIEnv *env, jclass type, jint width, jint height,

jbyteArray yuv, jintArray rgba) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgra = env->GetIntArrayElements(rgba, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mrgba(height, width, CV_8UC4, (uchar *) _bgra);

//cvtColor(myuv, mrgba, CV_YUV420sp2RGBA);

cvtColor(myuv, mrgba, CV_YUV2RGBA_NV21);

env->ReleaseIntArrayElements(rgba, _bgra, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

// 420p, I420: not interleaved, U then V: YYYYYYYY UU VV

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2rgbaI420(JNIEnv *env, jclass type, jint width, jint height,

jbyteArray yuv, jintArray rgba) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgra = env->GetIntArrayElements(rgba, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mrgba(height, width, CV_8UC4, (uchar *) _bgra);

cvtColor(myuv, mrgba, CV_YUV2RGBA_I420); //CV_YUV2RGBA_IYUV

env->ReleaseIntArrayElements(rgba, _bgra, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

// 420sp, NV12: interleaved, U then V: YYYYYYYY UV UV

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2rgbaNv12(JNIEnv *env, jclass type, jint width, jint height,

jbyteArray yuv, jintArray rgba) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgra = env->GetIntArrayElements(rgba, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mrgba(height, width, CV_8UC4, (uchar *) _bgra);

//cvtColor(myuv, mrgba, CV_YUV420sp2RGBA);

cvtColor(myuv, mrgba, CV_YUV2RGBA_NV12);

env->ReleaseIntArrayElements(rgba, _bgra, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

// Convert to BGRA

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2bgraNv12(JNIEnv *env, jclass type, jint width, jint height,

jbyteArray yuv, jintArray bgra) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgra = env->GetIntArrayElements(bgra, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mbgra(height, width, CV_8UC4, (uchar *) _bgra);

cvtColor(myuv, mbgra, CV_YUV2BGRA_NV12);

env->ReleaseIntArrayElements(bgra, _bgra, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

//bgr

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2bgrNv12(JNIEnv *env, jclass type, jint width, jint height,

jbyteArray yuv, jintArray bgr) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgr = env->GetIntArrayElements(bgr, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mbgr(height, width, CV_8UC3, (uchar *) _bgr);

cvtColor(myuv, mbgr, CV_YUV2BGR_NV12);

env->ReleaseIntArrayElements(bgr, _bgr, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

//bgra

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2bgraNv21(JNIEnv *env, jclass type, jint width,

jint height, jbyteArray yuv, jintArray bgra) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgra = env->GetIntArrayElements(bgra, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mbgra(height, width, CV_8UC4, (uchar *) _bgra);

cvtColor(myuv, mbgra, CV_YUV2BGRA_NV21);

env->ReleaseIntArrayElements(bgra, _bgra, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

//bgr

extern "C" JNIEXPORT void JNICALL

Java_com_hxdl_coco_ai_AIEngineNative_yuv2bgrNv21(JNIEnv *env, jclass type, jint width,

jint height, jbyteArray yuv, jintArray bgr) {

jbyte *_yuv = env->GetByteArrayElements(yuv, 0);

jint *_bgr = env->GetIntArrayElements(bgr, 0);

cv::Mat myuv(height + height / 2, width, CV_8UC1, (uchar *) _yuv);

cv::Mat mbgr(height, width, CV_8UC3, (uchar *) _bgr);

cvtColor(myuv, mbgr, CV_YUV2BGR_NV21);

env->ReleaseIntArrayElements(bgr, _bgr, 0);

env->ReleaseByteArrayElements(yuv, _yuv, 0);

}

Java conversion library

@SuppressWarnings("all")

public final class ImageUtil {

private static final String TAG = ImageUtil.class.getSimpleName();

/**

* YUV420p I420=YU12

*/

public static final int YUV420PYU12 = 0;

public static final int YUV420PI420 = YUV420PYU12;

public static final int YUV420PYV12 = 3;

/**

* YUV420SP NV12

*/

public static final int YUV420SPNV12 = 1;

/**

* YUV420SP NV21

*/

public static final int YUV420SPNV21 = 2;

/**

* Reused buffers for fast conversion: they reduce allocation overhead and guard against OOM

*/

private static byte[] Bytes_y = null;

private static byte[] uBytes = null;

private static byte[] vBytes = null;

private static byte[] Bytes_uv = null;

/**

* Converts an Image in YUV_420_888 to NV12, NV21, I420 (YU12) or YV12

* @param image

* @param type

* @param yuvBytes

*/

public static void getBytesFromImageAsType(Image image, int type, byte[] yuvBytes) {

try {

// Get the source planes; for YUV formats planes.length == 3

// The valid data in planes[i] may be shorter than the buffer capacity

final Image.Plane[] planes = image.getPlanes();

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = image.getWidth();

int height = image.getHeight();

// The destination holds the final YUV data and needs 1.5x the pixel count, because Y:U:V is 4:1:1

//byte[] yuvBytes = new byte[width * height * ImageFormat.getBitsPerPixel(ImageFormat.YUV_420_888) / 8];

// Current write position in the destination array

int dstIndex = 0;

// Temporary storage for the U and V samples

//byte[] uBytes = new byte[width * height / 4];

//byte[] vBytes = new byte[width * height / 4];

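// Reallocate when the frame size changes, otherwise a cached buffer would be the wrong length
if (uBytes == null || uBytes.length != width * height / 4) {

uBytes = new byte[width * height / 4];

}

if (vBytes == null || vBytes.length != width * height / 4) {

vBytes = new byte[width * height / 4];

}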

int uIndex = 0;

int vIndex = 0;

LogUtil.d("jiaAAA", "planes.length="+ planes.length);

LogUtil.d("jiaAAA", "buffer="+ width * height);

LogUtil.d("jiaAAA", "buffer="+ width);

LogUtil.d("jiaAAA", "buffer="+ height);

LogUtil.d("jiaAAA", "buffer="+ image.getCropRect());

int pixelsStride, rowStride;

for (int i = 0; i < planes.length; i++) {

pixelsStride = planes[i].getPixelStride();

rowStride = planes[i].getRowStride();

ByteBuffer buffer = planes[i].getBuffer();

LogUtil.d("jiaAAA", "i="+i);

LogUtil.d("jiaAAA", "pixelsStride="+pixelsStride);

LogUtil.d("jiaAAA", "rowStride="+rowStride);

LogUtil.d("jiaAAA", "buffer="+buffer.capacity());

//int cap = buffer.capacity();

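// With pixelsStride == 2, the Y buffer length is typically 640*480 and the U and V buffers are each 640*480/2 - 1

// Source index: Y samples are contiguous in the buffer; the U data is the V data shifted left by one byte, and in both only the even indices hold valid samples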

LogUtil.d("malloc_buffer", "===" + buffer.capacity());

byte[] bytes;

if (buffer.capacity() >=(width*height)) {

if (Bytes_y == null){

Bytes_y = new byte[buffer.capacity()];

}

bytes = Bytes_y;

LogUtil.d("malloc1","1" +"===" + buffer.capacity());

}else if(buffer.capacity() >= (((width*height)/2) - 1)){

if (Bytes_uv == null){

Bytes_uv = new byte[buffer.capacity()];

}

bytes = Bytes_uv;

LogUtil.d("malloc2","2" +"===" + buffer.capacity());

}else{

bytes = new byte[buffer.capacity()];

LogUtil.d("malloc3","3"+"==="+ buffer.capacity());

}

buffer.get(bytes);

int srcIndex = 0;

if (i == 0) {

// Copy the whole valid Y region directly; it could also go into a temporary array and be copied in the next step

for (int j = 0; j < height; j++) {

System.arraycopy(bytes, srcIndex, yuvBytes, dstIndex, width);

srcIndex += rowStride;

dstIndex += width;

}

} else if (i == 1) {

// Pick out the U samples according to pixelsStride

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width / 2; k++) {

uBytes[uIndex++] = bytes[srcIndex];

srcIndex += pixelsStride;

}

if (pixelsStride == 2) {

srcIndex += rowStride - width;

} else if (pixelsStride == 1) {

srcIndex += rowStride - width / 2;

}

}

} else if (i == 2) {

// Pick out the V samples according to pixelsStride

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width / 2; k++) {

vBytes[vIndex++] = bytes[srcIndex];

srcIndex += pixelsStride;

}

if (pixelsStride == 2) {

srcIndex += rowStride - width;

} else if (pixelsStride == 1) {

srcIndex += rowStride - width / 2;

}

}

}

}

// image.close();

// Fill the output according to the requested type

switch (type) {

case YUV420PI420:

System.arraycopy(uBytes, 0, yuvBytes, dstIndex, uBytes.length);

System.arraycopy(vBytes, 0, yuvBytes, dstIndex + uBytes.length, vBytes.length);

break;

case YUV420SPNV12:

for (int i = 0; i < vBytes.length; i++) {

yuvBytes[dstIndex++] = uBytes[i];

yuvBytes[dstIndex++] = vBytes[i];

}

break;

case YUV420SPNV21:

for (int i = 0; i < vBytes.length; i++) {

yuvBytes[dstIndex++] = vBytes[i];

yuvBytes[dstIndex++] = uBytes[i];

}

break;

case YUV420PYV12:

System.arraycopy(vBytes, 0, yuvBytes, dstIndex, vBytes.length);

System.arraycopy(uBytes, 0, yuvBytes, dstIndex + vBytes.length, uBytes.length);

break;

}

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

public static void getBytesFromImageAsTypeImagePlanes(ImagePlanes imagePlanes, int type, byte[] yuvBytes) {

try {

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = imagePlanes.getWidth();

int height = imagePlanes.getHeight();

// The destination holds the final YUV data and needs 1.5x the pixel count, because Y:U:V is 4:1:1

//byte[] yuvBytes = new byte[width * height * ImageFormat.getBitsPerPixel(ImageFormat.YUV_420_888) / 8];

// Current write position in the destination array

int dstIndex = 0;

// Temporary storage for the U and V samples

//byte[] uBytes = new byte[width * height / 4];

//byte[] vBytes = new byte[width * height / 4];

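// Reallocate when the frame size changes, otherwise a cached buffer would be the wrong length
if (uBytes == null || uBytes.length != width * height / 4) {

uBytes = new byte[width * height / 4];

}

if (vBytes == null || vBytes.length != width * height / 4) {

vBytes = new byte[width * height / 4];

}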

int uIndex = 0;

int vIndex = 0;

int pixelsStride, rowStride;

//Y

int srcIndex = 0;

pixelsStride = imagePlanes.getYPixelsStride();

rowStride = imagePlanes.getYRowStride();

// Copy the whole valid Y region directly; it could also go into a temporary array and be copied in the next step

for (int j = 0; j < height; j++) {

System.arraycopy(imagePlanes.getYByte(), srcIndex, yuvBytes, dstIndex, width);

srcIndex += rowStride;

dstIndex += width;

}

//U

// Pick out the U samples according to pixelsStride

srcIndex = 0;

pixelsStride = imagePlanes.getUPixelsStride();

rowStride = imagePlanes.getURowStride();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width / 2; k++) {

uBytes[uIndex++] = imagePlanes.getUByte()[srcIndex];

srcIndex += pixelsStride;

}

if (pixelsStride == 2) {

srcIndex += rowStride - width;

} else if (pixelsStride == 1) {

srcIndex += rowStride - width / 2;

}

}

//V

// Pick out the V samples according to pixelsStride

pixelsStride = imagePlanes.getVPixelsStride();

rowStride = imagePlanes.getVRowStride();

srcIndex = 0;

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width / 2; k++) {

vBytes[vIndex++] = imagePlanes.getVByte()[srcIndex];

srcIndex += pixelsStride;

}

if (pixelsStride == 2) {

srcIndex += rowStride - width;

} else if (pixelsStride == 1) {

srcIndex += rowStride - width / 2;

}

}

// image.close();

// Fill the output according to the requested type

switch (type) {

case YUV420PI420:

System.arraycopy(uBytes, 0, yuvBytes, dstIndex, uBytes.length);

System.arraycopy(vBytes, 0, yuvBytes, dstIndex + uBytes.length, vBytes.length);

break;

case YUV420SPNV12:

for (int i = 0; i < vBytes.length; i++) {

yuvBytes[dstIndex++] = uBytes[i];

yuvBytes[dstIndex++] = vBytes[i];

}

break;

case YUV420SPNV21:

for (int i = 0; i < vBytes.length; i++) {

yuvBytes[dstIndex++] = vBytes[i];

yuvBytes[dstIndex++] = uBytes[i];

}

break;

case YUV420PYV12:

System.arraycopy(vBytes, 0, yuvBytes, dstIndex, vBytes.length);

System.arraycopy(uBytes, 0, yuvBytes, dstIndex + vBytes.length, uBytes.length);

break;

}

} catch (final Exception e) {

Log.i(TAG, e.toString());

}

}

/**

* Converts an Image in YUV_420_888 to YU12

* @param image Image

* @param yuvBytes data

* Also called I420: Y is stored first, then U, then V; four Y samples share one U and one V. For a 4x4 image:

* YYYY

* YYYY

* YYYY

* YYYY

* UUUU

* VVVV

*/

@SuppressWarnings("unused")

public static void getBytes420PYu12(Image image, byte[] yuvBytes) {

try {

// Get the source planes; for YUV formats planes.length == 3

// The valid data in planes[i] may be shorter than the buffer capacity

final Image.Plane[] planes = image.getPlanes();

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[1].getPixelStride();

int U_rowStride = planes[1].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[1].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+U_dstIndex] = tt2.get(U_srcIndex);

U_srcIndex += U_pixelsStride;

U_dstIndex += 1;

}

if (U_pixelsStride == 2) {

U_srcIndex += U_rowStride - width;

} else if (U_pixelsStride == 1) {

U_srcIndex += U_rowStride - width / 2;

}

}

int V_pixelsStride = planes[2].getPixelStride();

int V_rowStride = planes[2].getRowStride();

int V_srcIndex = 0;

int V_dstIndex = 0;

ByteBuffer tt3 = planes[2].getBuffer();

tt3.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+U_dstIndex+V_dstIndex] = tt3.get(V_srcIndex);

V_srcIndex += V_pixelsStride;

V_dstIndex += 1;

}

if (V_pixelsStride == 2) {

V_srcIndex += V_rowStride - width;

} else if (V_pixelsStride == 1) {

V_srcIndex += V_rowStride - width / 2;

}

}

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

/**

* Converts an Image in YUV_420_888 to YV12

* @param image Image

* @param yuvBytes data

* Y is stored first, then V, then U; four Y samples share one U and one V. For a 4x4 image:

* YYYY

* YYYY

* YYYY

* YYYY

* VVVV

* UUUU

*/

@SuppressWarnings("unused")

public static void getBytes420PYv12(Image image, byte[] yuvBytes) {

try {

// Get the source planes; for YUV formats planes.length == 3

// The valid data in planes[i] may be shorter than the buffer capacity

final Image.Plane[] planes = image.getPlanes();

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[2].getPixelStride();

int U_rowStride = planes[2].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[2].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+U_dstIndex] = tt2.get(U_srcIndex);

U_srcIndex += U_pixelsStride;

U_dstIndex += 1;

}

if (U_pixelsStride == 2) {

U_srcIndex += U_rowStride - width;

} else if (U_pixelsStride == 1) {

U_srcIndex += U_rowStride - width / 2;

}

}

int V_pixelsStride = planes[1].getPixelStride();

int V_rowStride = planes[1].getRowStride();

int V_srcIndex = 0;

int V_dstIndex = 0;

ByteBuffer tt3 = planes[1].getBuffer();

tt3.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+U_dstIndex+V_dstIndex] = tt3.get(V_srcIndex);

V_srcIndex += V_pixelsStride;

V_dstIndex += 1;

}

if (V_pixelsStride == 2) {

V_srcIndex += V_rowStride - width;

} else if (V_pixelsStride == 1) {

V_srcIndex += V_rowStride - width / 2;

}

}

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

/**

* Converts an Image in YUV_420_888 to NV12

* @param image Image

* @param yuvBytes data

* Y is stored first, then U and V interleaved with U first; four Y samples share one U and one V. For a 4x4 image:

* YYYY

* YYYY

* YYYY

* YYYY

* UVUV

* UVUV

*/

public static void getBytes420SPNv12(Image image, byte[] yuvBytes) {

try {

// Get the source planes; for YUV formats planes.length == 3

// The valid data in planes[i] may be shorter than the buffer capacity

final Image.Plane[] planes = image.getPlanes();

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

//System.arraycopy(planes[0].getBuffer().array(), Y_srcIndex, yuvBytes, Y_dstIndex, width);

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[1].getPixelStride();

int U_rowStride = planes[1].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[1].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

//System.arraycopy(planes[1].getBuffer().array(), U_srcIndex, yuvBytes, Y_dstIndex+U_dstIndex, width);

if (j == (height /2 -1)){

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width-1);

}else {

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width);

}

U_srcIndex += U_rowStride;

U_dstIndex += width;

if (U_srcIndex < tt2.capacity()) {

tt2.position(U_srcIndex);

}

}

//System.arraycopy(planes[0].getBuffer().array(), 0, yuvBytes, 0, width*height);

//System.arraycopy(planes[2].getBuffer().array(), 0, yuvBytes, width*height, width*height/2);

int V_pixelsStride = planes[2].getPixelStride();

int V_rowStride = planes[2].getRowStride();

int V_srcIndex = 0;

int V_dstIndex = 0;

ByteBuffer tt3 = planes[2].getBuffer();

tt3.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+V_dstIndex+1] = tt3.get(V_srcIndex);

V_srcIndex += V_pixelsStride;

V_dstIndex += V_pixelsStride;

}

if (V_pixelsStride == 2) {

V_srcIndex += V_rowStride - width;

} else if (V_pixelsStride == 1) {

V_srcIndex += V_rowStride - width / 2;

}

}

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

/**

* Converts an Image in YUV_420_888 to NV21

* @param image Image

* @param yuvBytes data

* Y is stored first, then V and U interleaved with V first; four Y samples share one U and one V. For a 4x4 image:

* YYYY

* YYYY

* YYYY

* YYYY

* VUVU

* VUVU

*/

@SuppressWarnings("unused")

public static void getBytes420SPNv21(Image image, byte[] yuvBytes) {

try {

// Get the source planes; for YUV formats planes.length == 3

// The valid data in planes[i] may be shorter than the buffer capacity

final Image.Plane[] planes = image.getPlanes();

// Valid row width: in general width <= rowStride, which is why the valid data can be shorter than the capacity,

// so only the first width bytes of each row are used

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

//System.arraycopy(planes[0].getBuffer().array(), Y_srcIndex, yuvBytes, Y_dstIndex, width);

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[2].getPixelStride();

int U_rowStride = planes[2].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[2].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

//System.arraycopy(planes[1].getBuffer().array(), U_srcIndex, yuvBytes, Y_dstIndex+U_dstIndex, width);

if (j == (height /2 -1)){

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width-1);

}else {

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width);

}

U_srcIndex += U_rowStride;

U_dstIndex += width;

if (U_srcIndex < tt2.capacity()) {

tt2.position(U_srcIndex);

}

}

int V_pixelsStride = planes[1].getPixelStride();

int V_rowStride = planes[1].getRowStride();

int V_srcIndex = 0;

int V_dstIndex = 0;

ByteBuffer tt3 = planes[1].getBuffer();

tt3.clear();

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+V_dstIndex+1] = tt3.get(V_srcIndex);

V_srcIndex += V_pixelsStride;

V_dstIndex += V_pixelsStride;

}

if (V_pixelsStride == 2) {

V_srcIndex += V_rowStride - width;

} else if (V_pixelsStride == 1) {

V_srcIndex += V_rowStride - width / 2;

}

}

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

public static void getBytes420SPNv21_ImagePlanes(ImagePlanes imagePlanes, byte[] yuvBytes) {

try {

// Only the first width bytes of each row are used

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes);

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes.getWidth());

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes.getHeight());

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes.getYByte().length);

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes.getUByte().length);

LogUtil.e("jiaUUU", "imagePlanes="+imagePlanes.getVByte().length);

int width = imagePlanes.getWidth();

int height = imagePlanes.getHeight();

int Y_pixelsStride = imagePlanes.getYPixelsStride();

int Y_rowStride = imagePlanes.getYRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

for (int j = 0; j < height; j++) {

System.arraycopy(imagePlanes.getYByte(), Y_srcIndex, yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

}

int U_pixelsStride = imagePlanes.getVPixelsStride();

int U_rowStride = imagePlanes.getVRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

for (int j = 0; j < height / 2; j++) {

if (j == (height /2 -1)){

System.arraycopy(imagePlanes.getVByte(), U_srcIndex, yuvBytes, Y_dstIndex+U_dstIndex, width-1);

}else {

System.arraycopy(imagePlanes.getVByte(), U_srcIndex, yuvBytes, Y_dstIndex+U_dstIndex, width);

}

U_srcIndex += U_rowStride;

U_dstIndex += width;

}

int V_pixelsStride = imagePlanes.getUPixelsStride();

int V_rowStride = imagePlanes.getURowStride();

int V_srcIndex = 0;

int V_dstIndex = 0;

for (int j = 0; j < height / 2; j++) {

for (int k = 0; k < width/2 ; k++) {

yuvBytes[Y_dstIndex+V_dstIndex+1] = imagePlanes.getUByte()[V_srcIndex];

V_srcIndex += V_pixelsStride;

V_dstIndex += V_pixelsStride;

}

if (V_pixelsStride == 2) {

V_srcIndex += V_rowStride - width;

} else if (V_pixelsStride == 1) {

V_srcIndex += V_rowStride - width / 2;

}

}

} catch (final Exception e) {

Log.i(TAG, "jiaUUU"+e.toString());

}

}

@SuppressWarnings("unused")

public static byte[] NV21toJPEG(byte[] nv21, int width, int height, int quality) {

ByteStreamWrapper out = ByteStreamPool.get();

YuvImage yuv = new YuvImage(nv21, ImageFormat.NV21, width, height, null);

yuv.compressToJpeg(new Rect(0, 0, width, height), quality, out);

byte[] result = out.toByteArray();

ByteStreamPool.ret2pool(out);

return result;

}

@SuppressWarnings("unused")

public static void Rgba2Bgr(byte[] src, byte[] dest, boolean isAlpha){

if (isAlpha) {

//RGBA TO BGRA

if (src != null && src.length == dest.length) {

for (int i = 0; i < src.length / 4; i++) {

dest[i * 4] = src[i * 4 + 2]; //B

dest[i * 4 + 1] = src[i * 4 + 1]; //G

dest[i * 4 + 2] = src[i * 4]; //R

dest[i * 4 + 3] = src[i * 4 + 3]; //a

}

}else{

LogUtil.d(TAG, "RGBA2BGR error, dest length too short");

}

}else{

//RGBA TO BGR

// src holds 4 bytes per pixel (RGBA), dest holds 3 bytes per pixel (BGR)
if (src != null && dest.length == (src.length / 4) * 3) {

for (int i = 0; i < src.length / 4; i++) {

dest[i * 3] = src[i * 4 + 2]; //B

dest[i * 3 + 1] = src[i * 4 + 1]; //G

dest[i * 3 + 2] = src[i * 4]; //R

}

}else{

LogUtil.d(TAG, "RGBA2BGR error, dest length too short");

}

}

}

//bitmap

@SuppressWarnings("unused")

public static Bitmap getOriBitmap(byte[] jpgArray){

return BitmapFactory.decodeByteArray(jpgArray,

0, jpgArray.length);

}

//RGBA

public static byte[] getOriBitmapRgba(byte[] jpgArray){

Bitmap bitmap = BitmapFactory.decodeByteArray(jpgArray,

0, jpgArray.length);

int bytes = bitmap.getByteCount();

ByteBuffer buffer = ByteBuffer.allocate(bytes);

bitmap.copyPixelsToBuffer(buffer);

return buffer.array();

}

@SuppressWarnings("unused")

public static byte[] getJpegByte(byte[] rgba, int w, int h){

Bitmap bm = Bitmap.createBitmap(w, h, Bitmap.Config.ARGB_8888);

ByteBuffer buf = ByteBuffer.wrap(rgba);

bm.copyPixelsFromBuffer(buf);

ByteArrayOutputStream fos = new ByteArrayOutputStream();

bm.compress(Bitmap.CompressFormat.JPEG, 100, fos);

return fos.toByteArray();

}

@SuppressWarnings("unused")

public static int[] byte2int(byte[] byteArray){

IntBuffer intBuf =

ByteBuffer.wrap(byteArray)

.order(ByteOrder.LITTLE_ENDIAN)

.asIntBuffer();

int[] array = new int[intBuf.remaining()];

intBuf.get(array);

return array;

}

@SuppressWarnings("unused")

public byte[] int2byte(int[] intArray){

ByteBuffer byteBuffer = ByteBuffer.allocate(intArray.length * 4);

IntBuffer intBuffer = byteBuffer.asIntBuffer();

intBuffer.put(intArray);

byte[] byteConverted = byteBuffer.array();

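// Debug dump of the first bytes, bounded so that short arrays do not overflow
for (int i = 0; i < Math.min(840, byteConverted.length); i++) {

Log.d("Bytes after Insert", ""+byteConverted[i]);

}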

return byteConverted;

}

@SuppressWarnings("unused")

public void testXXX(String path){

Bitmap bm = BitmapFactory.decodeFile(path);

ByteStreamWrapper baos = ByteStreamPool.get();

bm.compress(Bitmap.CompressFormat.JPEG, 100, baos);

byte[] bytes = baos.toByteArray();

ByteStreamPool.ret2pool(baos);

long x = System.currentTimeMillis();

System.out.println("jiaXXX"+ "testXXX");

getOriBitmapRgba(bytes);

System.out.println("jiaXXX"+ "testXXX"+(System.currentTimeMillis()-x));

}

//Image to nv21

@SuppressWarnings("unused")

private ByteBuffer imageToByteBuffer(final Image image) {

final Rect crop = image.getCropRect();

final int width = crop.width();

final int height = crop.height();

final Image.Plane[] planes = image.getPlanes();

final int bufferSize = width * height * ImageFormat.getBitsPerPixel(ImageFormat.YUV_420_888) / 8;

// Heap buffer: output.array() below needs a backing array that starts at offset 0
final ByteBuffer output = ByteBuffer.allocate(bufferSize);

int channelOffset = 0;

int outputStride = 0;

for (int planeIndex = 0; planeIndex < planes.length; planeIndex++) {

if (planeIndex == 0) {

channelOffset = 0;

outputStride = 1;

} else if (planeIndex == 1) {

channelOffset = width * height + 1;

outputStride = 2;

} else if (planeIndex == 2) {

channelOffset = width * height;

outputStride = 2;

}

final ByteBuffer buffer = planes[planeIndex].getBuffer();

final int rowStride = planes[planeIndex].getRowStride();

final int pixelStride = planes[planeIndex].getPixelStride();

byte[] rowData = new byte[rowStride];

final int shift = (planeIndex == 0) ? 0 : 1;

final int widthShifted = width >> shift;

final int heightShifted = height >> shift;

buffer.position(rowStride * (crop.top >> shift) + pixelStride * (crop.left >> shift));

for (int row = 0; row < heightShifted; row++) {

final int length;

if (pixelStride == 1 && outputStride == 1) {

length = widthShifted;

buffer.get(output.array(), channelOffset, length);

channelOffset += length;

} else {

length = (widthShifted - 1) * pixelStride + 1;

buffer.get(rowData, 0, length);

for (int col = 0; col < widthShifted; col++) {

output.array()[channelOffset] = rowData[col * pixelStride];

channelOffset += outputStride;

}

}

if (row < heightShifted - 1) {

buffer.position(buffer.position() + rowStride - length);

}

}

}

return output;

}

@SuppressWarnings("unused")

private static byte[] NV21toJPEG(byte[] nv21, int width, int height) {

ByteStreamWrapper out = ByteStreamPool.get();

try {

YuvImage yuv = new YuvImage(nv21, ImageFormat.NV21, width, height, null);

yuv.compressToJpeg(new Rect(0, 0, width, height), 100, out);

return out.toByteArray();

}finally{

ByteStreamPool.ret2pool(out);

}

}

@SuppressWarnings("unused")

private static byte[] NV21toRgba(byte[] nv21, int width, int height) {

ByteStreamWrapper out = ByteStreamPool.get();

try {

YuvImage yuv = new YuvImage(nv21, ImageFormat.NV21, width, height, null);

yuv.compressToJpeg(new Rect(0, 0, width, height), 100, out);

final Bitmap bitmap = BitmapFactory.decodeStream(out.getInputStream());

int bytes = bitmap.getByteCount();

ByteBuffer buffer = ByteBuffer.allocate(bytes);

bitmap.copyPixelsToBuffer(buffer); //Move the byte data to the buffer

return buffer.array();

}finally{

ByteStreamPool.ret2pool(out);

}

}

@SuppressWarnings("unused")

private static Bitmap getBitmapFromByte(byte[] rgba, int w, int h){

ByteBuffer buffer = ByteBuffer.wrap(rgba);

Bitmap bm = Bitmap.createBitmap(w, h, Bitmap.Config.ARGB_8888);

bm.copyPixelsFromBuffer(buffer);

return bm;

}

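// Shortcut path: assumes interleaved chroma (pixelStride == 2), so the V plane's buffer

// already reads V,U,V,U... and can be copied row by row as the NV21 chroma block.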

public static void getBytes420SPNv21_New(Image image, byte[] yuvBytes) {

try {

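// Get the source planes; for YUV data, planes.length == 3.

// The valid data in plane[i] may be smaller than the buffer's capacity (its total size).

final Image.Plane[] planes = image.getPlanes();

// Valid data width: in general width <= rowStride, which is why the valid data can be smaller than the capacity,

// so only the first width bytes of each row are copied.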

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

//System.arraycopy(planes[0].getBuffer().array(), Y_srcIndex, yuvBytes, Y_dstIndex, width);

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[2].getPixelStride();

int U_rowStride = planes[2].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[2].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

//System.arraycopy(planes[1].getBuffer().array(), U_srcIndex, yuvBytes, Y_dstIndex+U_dstIndex, width);

if (j == (height /2 -1)){

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width-1);

}else {

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width);

}

U_srcIndex += U_rowStride;

U_dstIndex += width;

if (U_srcIndex < tt2.capacity()) {

tt2.position(U_srcIndex);

}

}

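// The V buffer may end one byte before the last U sample, so that sample is read from the U plane.

// get(capacity()) is always out of bounds; index the last U sample explicitly (assumes pixelStride == 2).

yuvBytes[width * height * 3 / 2 - 1] = planes[1].getBuffer().get((height / 2 - 1) * planes[1].getRowStride() + width - 2);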

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

// Shortcut path (NV12 variant).

// In practice, many phones lay the planes out like this:

/*

Y plane

YYYY

YYYY

YYYY

YYYY

//U plane

UVUV

UVU

//V plane

VUVU

VUV

*/

public static void getBytes420SPNv12_New(Image image, byte[] yuvBytes) {

try {

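// Get the source planes; for YUV data, planes.length == 3.

// The valid data in plane[i] may be smaller than the buffer's capacity (its total size).

final Image.Plane[] planes = image.getPlanes();

// Valid data width: in general width <= rowStride, which is why the valid data can be smaller than the capacity,

// so only the first width bytes of each row are copied.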

int width = image.getWidth();

int height = image.getHeight();

int Y_pixelsStride = planes[0].getPixelStride();

int Y_rowStride = planes[0].getRowStride();

int Y_srcIndex = 0;

int Y_dstIndex = 0;

ByteBuffer tt = planes[0].getBuffer();

tt.clear();

for (int j = 0; j < height; j++) {

//System.arraycopy(planes[0].getBuffer().array(), Y_srcIndex, yuvBytes, Y_dstIndex, width);

tt.get(yuvBytes, Y_dstIndex, width);

Y_srcIndex += Y_rowStride;

Y_dstIndex += width;

if (Y_srcIndex < tt.capacity()) {

tt.position(Y_srcIndex);

}

}

int U_pixelsStride = planes[1].getPixelStride();

int U_rowStride = planes[1].getRowStride();

int U_srcIndex = 0;

int U_dstIndex = 0;

ByteBuffer tt2 = planes[1].getBuffer();

tt2.clear();

for (int j = 0; j < height / 2; j++) {

if (j == (height /2 -1)){

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width-1);

}else {

tt2.get(yuvBytes, Y_dstIndex + U_dstIndex, width);

}

U_srcIndex += U_rowStride;

U_dstIndex += width;

if (U_srcIndex < tt2.capacity()) {

tt2.position(U_srcIndex);

}

}

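// The U buffer may end one byte before the last V sample, so that sample is read from the V plane.

// get(capacity()) is always out of bounds; index the last V sample explicitly (assumes pixelStride == 2).

yuvBytes[width * height * 3 / 2 - 1] = planes[2].getBuffer().get((height / 2 - 1) * planes[2].getRowStride() + width - 2);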

} catch (final Exception e) {

if (image != null) {

image.close();

}

Log.i(TAG, e.toString());

}

}

}
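
The two shortcut methods above only work when the chroma planes are interleaved (pixelStride == 2). A more general approach reads every sample through rowStride and pixelStride, so the same loop covers both planar and semi-planar layouts. The following is a minimal sketch of that idea, not the original author's code: `Yuv420Packer`, `copyPlane` and `toNv21` are hypothetical names, it assumes even width and height, it ignores the crop rectangle, and it takes plain `ByteBuffer` arguments so that it can be run off-device.

```java
import java.nio.ByteBuffer;

/** Hypothetical helper (not part of the original class): packs three YUV 4:2:0 planes into NV21. */
final class Yuv420Packer {

    private Yuv420Packer() {}

    /**
     * Copies one plane into dst, honoring rowStride and pixelStride.
     * Uses absolute reads, so the position of src is left untouched.
     *
     * @param dstStep distance between consecutive output samples (1 for Y, 2 for interleaved chroma)
     */
    static void copyPlane(ByteBuffer src, int rowStride, int pixelStride,
                          int planeWidth, int planeHeight,
                          byte[] dst, int dstOffset, int dstStep) {
        for (int row = 0; row < planeHeight; row++) {
            int srcIndex = row * rowStride;                        // start of this source row
            int dstIndex = dstOffset + row * planeWidth * dstStep; // start of this output row
            for (int col = 0; col < planeWidth; col++) {
                dst[dstIndex] = src.get(srcIndex);
                srcIndex += pixelStride;
                dstIndex += dstStep;
            }
        }
    }

    /**
     * Builds an NV21 array: all Y samples, followed by interleaved V,U pairs.
     * Assumes even width and height, a Y pixel stride of 1, and that the U and V planes
     * share the same row stride and pixel stride.
     */
    static byte[] toNv21(ByteBuffer y, int yRowStride,
                         ByteBuffer u, ByteBuffer v, int uvRowStride, int uvPixelStride,
                         int width, int height) {
        byte[] nv21 = new byte[width * height * 3 / 2];
        int ySize = width * height;
        copyPlane(y, yRowStride, 1, width, height, nv21, 0, 1);
        // NV21 stores V first, then U, so V goes to the even chroma offsets.
        copyPlane(v, uvRowStride, uvPixelStride, width / 2, height / 2, nv21, ySize, 2);
        copyPlane(u, uvRowStride, uvPixelStride, width / 2, height / 2, nv21, ySize + 1, 2);
        return nv21;
    }
}
```

On a device the arguments would come from `image.getPlanes()`, using each plane's `getBuffer()`, `getRowStride()` and `getPixelStride()`. The per-sample loop is slower than the bulk row copies used by the shortcut methods, but it does not depend on how a particular vendor lays out the chroma buffers.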

https://blog.csdn.net/bd_zengxinxin/article/details/37388325

https://www.polarxiong.com/archives/Android-Image%E7%B1%BB%E6%B5%85%E6%9E%90-%E7%BB%93%E5%90%88YUV_420_888.html