python+word2vec+随机森林 微博文本情感极性分析(一)

数据源:36万条微博文本,已标注情感。源数据中label0:开心,label1-3:低落或忧伤。本文只考虑情感正负极性,所以1-3都划为负样本。

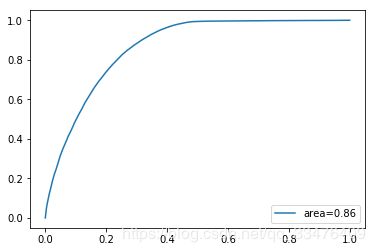

项目思路:分词后利用gensim.models.word2vec训练词向量,词向量表示训练集文本,sklearn训练随机森林模型,auc=0.86。

加载相关python包:

import jieba

import re

import pandas as pd

from gensim.models import word2vec

import numpy as np

from sklearn.decomposition import PCA

from sklearn.ensemble import RandomForestClassifier as RF

import matplotlib.pyplot as plt

from sklearn.metrics import roc_curve,auc

from sklearn.cross_validation import train_test_split

word2vec训练词向量

利用这36万微博数据训练词向量,word2vec需要语料分词。

data = pd.read_csv('F:/weibo_4_moods.csv',delimiter=',',header=0,encoding='utf-8')

file_train = 'F:/word_train.txt'

def get_word_train(filename):

with open(filename,'w',encoding='utf-8') as f:

for line in data['review']:

word_l = ' '.join(jieba.cut(line,cut_all=False))

word_l.replace(u',',u'').replace(u'。',u'').replace(u';',u'').replace(u'!',u'').replace(u'~',u'').replace(u'【',u'').replace(u'】',u'').replace(u'.',u'').replace(u'-',u'')

f.write(word_l)

f.write(u'\n')

f.close()

get_word_train(file_train)

分词后文本word_train.txt:

word2vec训练得到词向量保存在corpus.model。

sent = word2vec.Text8Corpus(file_train)

model = word2vec.Word2Vec(sent,size=200)

model.save('corpus.model')

model = word2vec.Word2Vec.load('corpus.model')

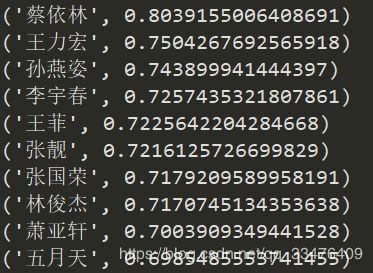

indexes = model.most_similar(u'周杰伦',topn=10)

for index in indexes:

print(index)

训练集句子用word2vec词向量表示

加载停用词:

file1 = 'F:/xiaoyan/study/data/text class/stopword.txt'

def stopw(file):

stopword = []

with open(file1,'r',encoding='utf-8') as text:

s = re.split(u'\n',text.read())

for word in s:

stopword.append(word)

return stopword

stopword = stopw(file1)

句子的词向量是每个词的词向量的均值:

def getvec(sent):

senvec = []

word_l = jieba.cut(sent)

for word in word_l:

if word in stopword:

continue

else:

try:

senvec.append(model[word])

except:

pass

return np.array(senvec,dtype='float')

def build_vec(data):

Input = []

for line in data['review']:

vec = getvec(line)

if len(vec)!=0:

res = sum(vec)/len(vec)

Input.append(res)

return Input

得到正负样本及数据标签y,1为正:

pos = build_vec(data[data.label==0])

neg = build_vec(data[data.label>0])

y = np.concatenate((np.ones(len(pos)),np.zeros(len(neg))))

X = pos[:]

for n in neg:

X.append(n)

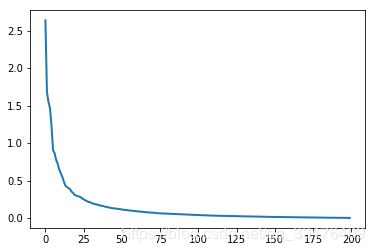

PCA

根据训练集维度与方差的关系图,对X降维到100维。

pca = PCA().fit(X)

plt.plot(pca.explained_variance_,linewidth=2)

X_reduced = PCA(n_components=100).fit_transform(X)

随机森林弱分类器训练200次

X_train,X_test,y_train,y_test = train_test_split(X_reduced,y,test_size=0.3)

clf = RF(n_estimators=200)

clf.fit(X_train,y_train)

print("准确率:%s" % clf.score(X_test,y_test))

ROC曲线

from sklearn.metrics import roc_curve,auc

y_pred = clf.predict_proba(X_test)[:,1]

fpr,tpr,_ = roc_curve(y_test,y_pred)

roc_auc = auc(fpr,tpr)

plt.plot(fpr,tpr,label ='area=%.2f' %roc_auc)

plt.legend(loc='lower right')