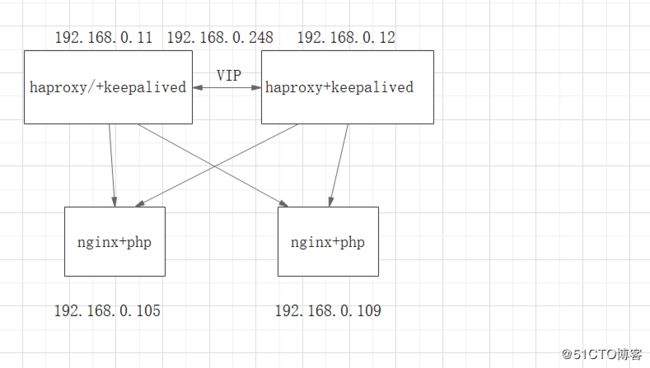

I. haproxy+keepalived

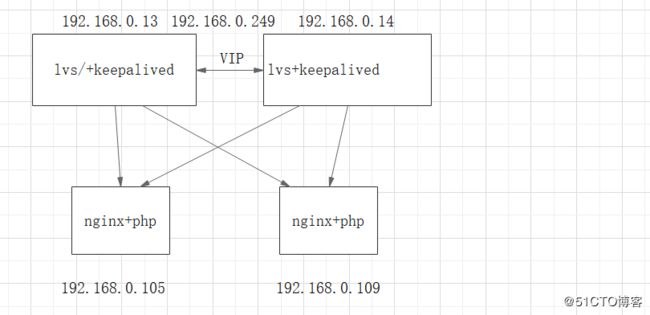

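The architecture implemented in this article is shown in the figure. The article mainly records the core configuration for haproxy+keepalived and for lvs+keepalived; installation and basic configuration are not covered here.

Four virtual machines are required.

IP plan

keepalived VIP 192.168.0.248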

haproxy1 192.168.0.11

haproxy2 192.168.0.12

nginx1 192.168.0.105

nginx2 192.168.0.109

1. Core configuration on both haproxy hosts

listen WEB_PORT_80

bind 192.168.0.248:80 # must listen on the keepalived VIP

mode tcp

balance roundrobin

server web1 192.168.0.109:80 weight 1 check inter 3000 fall 3 rise 5

server web2 192.168.0.105:80 weight 1 check inter 3000 fall 3 rise 5
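Because both haproxy instances bind to the VIP, the host that does not currently hold the VIP has to start haproxy on an address that is not configured locally. On Linux this normally requires `net.ipv4.ip_nonlocal_bind=1`. The following is a minimal sketch rather than part of the original setup; the function name `nonlocal_bind_enabled` and its optional file argument are illustrative assumptions.

```bash
#!/bin/bash
# Sketch: report whether the kernel allows binding to an address that is
# not currently configured on this host (needed on the haproxy host that
# does not hold the VIP).
# The function name and the optional path argument are illustrative.
nonlocal_bind_enabled() {
    local file="${1:-/proc/sys/net/ipv4/ip_nonlocal_bind}"
    [ -r "$file" ] && [ "$(cat "$file")" = "1" ]
}

# Enable it at runtime (root required), only if it is not already set:
#   nonlocal_bind_enabled || sysctl -w net.ipv4.ip_nonlocal_bind=1
```

The function only reads the setting; the commented line shows how it might be switched on.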

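2. Master keepalived configuration, 192.168.0.11

keepalived calls a helper script to monitor the local haproxy process. There are many ways to do this; here the script sends signal 0, which returns 0 if the process exists and 1 if it does not.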

vi /etc/keepalived/chk_haproxy.sh

#!/bin/bash

/usr/bin/killall -0 haproxy
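The check relies on signal 0: nothing is delivered to the process, only the existence test is performed, so the exit status tells whether a matching process is running. Below is a small sketch of the same idea using the shell builtin `kill -0` on a single PID; the helper name `is_alive` is an assumption made for illustration.

```bash
#!/bin/bash
# is_alive PID: succeed if a process with this PID exists.
# Signal 0 sends nothing; it only performs the existence check.
is_alive() {
    kill -0 "$1" 2>/dev/null
}

sleep 30 &                          # throwaway process standing in for haproxy
pid=$!
is_alive "$pid" && echo "running"
kill "$pid"                         # stop it, as if haproxy had died
wait "$pid" 2>/dev/null || true     # reap it so the PID really disappears
is_alive "$pid" || echo "stopped"
```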

vi /etc/keepalived/keepalived.conf

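vrrp_script chk_haproxy { # defined at the top level, before the vrrp_instance that tracks it

script "/etc/keepalived/chk_haproxy.sh"

interval 1

weight -80 # while the check fails, priority drops by 80 (100 - 80 = 20, below the backup's 80)

fall 3

rise 5

timeout 2

}

vrrp_instance VIP1 { # VRRP instance that holds the virtual IP

state MASTER # master

interface ens33 # network interface; check the name, on some hosts it is eth0

virtual_router_id 51 # must match the backup and must not clash with other keepalived clusters

priority 100 # must be higher than the backup's

advert_int 2 # VRRP advertisement interval in seconds; the master announces that it holds the VIP

unicast_src_ip 192.168.0.11 # switch to unicast and comment out vrrp_strict in the global configuration, so adverts are not sent to the multicast address and do not waste bandwidth

unicast_peer {

192.168.0.12

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.0.248 dev ens33 label ens33:1

}

track_script {

chk_haproxy

}

}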
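With a negative `weight`, keepalived lowers the instance's priority by that amount while the tracked script is failing. With the values used in this article the master drops from 100 to 20, below the backup's 80, which is what lets the VIP move. The sketch below only reproduces that arithmetic for a single tracked script; the function name `effective_priority` is hypothetical.

```bash
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_STATUS
# SCRIPT_STATUS is "ok" or "fail". Models a negative weight only:
# the weight is added to the base priority while the script is failing.
effective_priority() {
    local base=$1 weight=$2 status=$3
    if [ "$status" = "fail" ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

master=$(effective_priority 100 -80 fail)   # haproxy down on the master
backup=$(effective_priority 80 -80 ok)      # haproxy healthy on the backup
echo "master=$master backup=$backup"
if [ "$backup" -gt "$master" ]; then
    echo "backup takes over the VIP"
fi
```

If the weight were smaller in magnitude than the gap between the two priorities (for example -10), the master would stay above the backup and the VIP would not move.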

3. Backup keepalived configuration, 192.168.0.12

Script that monitors the local haproxy process

vi /etc/keepalived/chk_haproxy.sh

#!/bin/bash

/usr/bin/killall -0 haproxy

vi /etc/keepalived/keepalived.conf

vrrp_script chk_haproxy { # defined at the top level, before the vrrp_instance that tracks it

script "/etc/keepalived/chk_haproxy.sh"

interval 1

weight -80

fall 3

rise 5

timeout 2

}

vrrp_instance VIP1 {

state BACKUP # backup

interface ens33

virtual_router_id 51

priority 80 # lower than the MASTER's

advert_int 2

unicast_src_ip 192.168.0.12

unicast_peer {

192.168.0.11

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.0.248 dev ens33 label ens33:1

}

track_script {

chk_haproxy

}

}

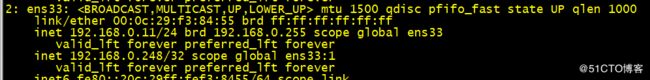

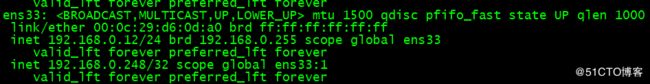

4. Testing haproxy monitoring and keepalived VIP failover

When the haproxy service on the master keepalived host is stopped, the VIP moves to the backup keepalived host.
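One way to confirm the failover is to check on each host whether the VIP is present on the interface. The following is a minimal sketch, assuming the `ip` tool from iproute2 is installed; the helper name `holds_vip` is illustrative, and it reads `ip -o -4 addr show` output from standard input so that it can be tried on sample text. The sample line is only an illustration of the output format, not captured output.

```bash
#!/bin/bash
# holds_vip VIP: read `ip -o -4 addr show` output on stdin and succeed
# if the given address is listed.
holds_vip() {
    grep -qF "inet $1/"
}

# Illustrative line in the format printed by `ip -o -4 addr show dev ens33`
sample='2: ens33    inet 192.168.0.248/32 scope global ens33:1'

if printf '%s\n' "$sample" | holds_vip 192.168.0.248; then
    echo "VIP is on this host"
fi

# On a real host (run on both haproxy machines before and after stopping haproxy):
#   ip -o -4 addr show dev ens33 | holds_vip 192.168.0.248 && echo "VIP is on this host"
```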

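II. lvs+keepalived

1. The architecture is shown in the figure. LVS is usually run in DR mode. First bind the VIP on both web servers using the script below, then install the ipvsadm tool to make testing easier.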

#!/bin/bash

vip=192.168.0.249

mask='255.255.255.255'

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

sysctl -p > /dev/null 2>&1

ifconfig lo:0 $vip netmask $mask broadcast $vip up

route add -host $vip dev lo:0

;;

stop)

ifconfig lo:0 down

route del $vip >/dev/null 2>&1

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage $(basename $0) start|stop"

exit 1

;;

esac
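The `echo` lines in the script change the ARP settings only until the next reboot. If they should persist, the same four values can be expressed in sysctl format. The sketch below just prints them; the function name `lvs_rs_sysctl` and the file name in the usage comment are assumptions, not part of the original setup.

```bash
#!/bin/bash
# Print the ARP-related kernel settings used by the real-server script
# in sysctl.conf syntax. Nothing is changed on the system.
lvs_rs_sysctl() {
    local iface
    for iface in all lo; do
        echo "net.ipv4.conf.${iface}.arp_ignore = 1"
        echo "net.ipv4.conf.${iface}.arp_announce = 2"
    done
}

lvs_rs_sysctl
# To persist (root required; the file name is only an example):
#   lvs_rs_sysctl > /etc/sysctl.d/90-lvs-rs.conf && sysctl --system
```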

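2. Master keepalived configuration. It is basically the same as for keepalived+haproxy, but the LVS monitoring script is different; I use the simplest approach, pinging the gateway.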

vi /etc/keepalived/chk_lvs.sh

#!/bin/bash

ping -c 2 192.168.0.1 &>/dev/null

if [ $? -eq 0 ];then

exit 0

else

exit 2

fi

vrrp_script chk_lvs {

script "/etc/keepalived/chk_lvs.sh"

interval 1

weight -80

fall 3

rise 5

timeout 2

}

vrrp_instance VIP1 {

state MASTER

interface ens33

virtual_router_id 52

priority 100

advert_int 2 # VRRP advertisement interval in seconds

unicast_src_ip 192.168.0.13

unicast_peer {

192.168.0.14

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.0.249 dev ens33 label ens33:0

}

track_script {

chk_lvs

}

}

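3. Backup keepalived configuration, 192.168.0.14. It uses the same chk_lvs.sh script and the same vrrp_script chk_lvs block as the master, placed before the vrrp_instance.

vrrp_instance VIP1 {

state BACKUP # backup

interface ens33

virtual_router_id 52

priority 80 # lower than the MASTER's

advert_int 2 # VRRP advertisement interval in seconds

unicast_src_ip 192.168.0.14

unicast_peer {

192.168.0.13

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.0.249 dev ens33 label ens33:0

}

track_script {

chk_lvs

}

}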

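4. Add the following LVS configuration on both keepalived hosts. Backend health checks can use either HTTP or TCP.

virtual_server 192.168.0.249 80 { # virtual server IP address and port

delay_loop 6 # interval between backend health checks, in seconds

lb_algo wrr # scheduling algorithm

lb_kind DR # forwarding mode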

protocol TCP

real_server 192.168.0.109 80 {

weight 1

HTTP_GET { # HTTP check; catches a hung service whose port is still open

url {

path /monitor/index.html # define this page yourself

status_code 200 # response code that counts as healthy

}

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

}

#TCP_CHECK { # TCP check of the backend server

#connect_timeout 5 # timeout in seconds

#nb_get_retry 3 # number of retries

#delay_before_retry 3 # delay before each retry

#connect_port 80 # port to check

#}

}

real_server 192.168.0.105 80 {

weight 1

HTTP_GET {

url {

path /monitor/index.html

status_code 200

}

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

}

#TCP_CHECK {

#connect_timeout 5

#nb_get_retry 3

#delay_before_retry 3

#connect_port 80

#}

}

}
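Once keepalived is running, the health-check URL and the virtual server table can be checked by hand. This is a hedged sketch: `health_url` and `check_real_server` are hypothetical helper names, the path is the one used in the HTTP_GET blocks, and `curl` is assumed to be installed.

```bash
#!/bin/bash
# health_url HOST [PORT]: build the URL that HTTP_GET requests on a real server.
health_url() {
    echo "http://$1:${2:-80}/monitor/index.html"
}

# check_real_server HOST: succeed if the health page returns HTTP 200
# within 5 seconds.
check_real_server() {
    local code
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$(health_url "$1")")
    [ "$code" = "200" ]
}

# Usage on a keepalived host (network access to the real servers required):
#   for rs in 192.168.0.109 192.168.0.105; do
#       check_real_server "$rs" && echo "$rs healthy" || echo "$rs failed"
#   done
#   ipvsadm -Ln    # list the virtual server and its real servers
```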