Xception Reading Notes

The Hypothesis Behind Inception

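A regular convolutional layer tries to learn filters in a 3D space spanned by width, height, and channels, so a single convolution kernel has to map cross-channel correlations and spatial correlations at the same time. The idea behind the Inception module is to factor this into a series of explicit steps that map cross-channel correlations and spatial correlations independently. Concretely, an Inception module first looks at cross-channel correlations through a set of 1x1 convolutions, mapping the input into 3 or 4 separate spaces that are smaller than the original input space, and then maps all correlations within these smaller 3D spaces using convolutions of different kernel sizes, such as 3x3 or 5x5 (Figure 1). In effect, the fundamental hypothesis behind Inception is that cross-channel correlations and spatial correlations are sufficiently decoupled that it is preferable not to map them jointly.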

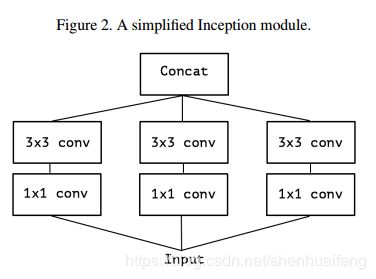

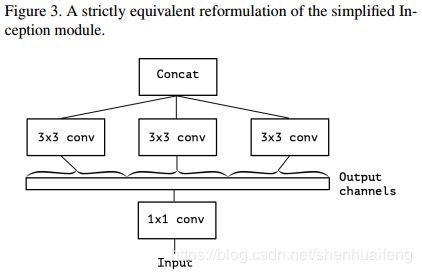

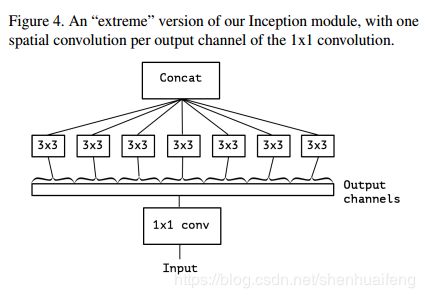

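Consider a simplified Inception module (Figure 2). It can be reformulated by replacing its three 1x1 convolutions with one large 1x1 convolution, followed by spatial convolutions that operate on non-overlapping segments of the output channels (Figure 3). This reformulation raises a question: how does the number of segments affect the result, and can every channel be convolved entirely on its own, so that cross-channel and spatial correlations are fully decoupled? Following this hypothesis leads to an "extreme" version of the Inception module (Figure 4): a 1x1 convolution first maps the cross-channel correlations, and then a separate spatial convolution is applied to each output channel.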
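The effect of the segment count can be made concrete by counting weights. The sketch below is only an illustration: it assumes a 3x3 spatial convolution with equal input and output width, ignores biases, and uses a hypothetical width of 768 channels chosen because it divides evenly by every segment count tried. The helper name `grouped_conv_params` is made up for this note.

```python
def grouped_conv_params(in_channels, out_channels, kernel_size, segments):
    """Weight count of a spatial convolution split into `segments` channel groups."""
    if in_channels % segments or out_channels % segments:
        raise ValueError("channel counts must be divisible by the number of segments")
    # Each segment only connects its own slice of inputs to its own slice of outputs.
    per_segment = (kernel_size * kernel_size
                   * (in_channels // segments) * (out_channels // segments))
    return per_segment * segments


channels = 768  # hypothetical width, not taken from the paper
params_by_segments = {
    segments: grouped_conv_params(channels, channels, 3, segments)
    for segments in (1, 3, 4, channels)
}
for segments, params in params_by_segments.items():
    print(f"{segments:>3} segment(s): {params:>9,} weights")
```

One segment corresponds to a regular convolution, 3 or 4 segments to an Inception-like module, and one segment per channel to the extreme case in which the spatial convolution no longer mixes channels at all.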

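Figure 4 can be seen as the prototype of the Xception module. The two main differences between Figure 4 and the depthwise separable convolution used in Xception are:

- Order of operations: Xception applies the per-channel spatial (depthwise) convolution first and the 1x1 convolution second, whereas the Inception module applies the 1x1 convolution first.

- Non-linearity: in the Inception module both convolutions are followed by a ReLU, whereas a depthwise separable convolution usually has no non-linearity between its depthwise and 1x1 steps.

Of these two differences, the second is the more important one and has a noticeable effect on the experimental results.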
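The two differences are easy to see side by side in code. The following is a minimal NumPy sketch, not the paper's or any library's implementation: it handles a single image, uses 'valid' padding and stride 1, and all function names and tensor sizes are assumptions made for illustration.

```python
import numpy as np


def depthwise_conv(x, kernels):
    """Filter each channel with its own k x k kernel ('valid' padding, stride 1).

    x: (height, width, channels); kernels: (k, k, channels).
    """
    k = kernels.shape[0]
    out_h, out_w = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.zeros((out_h, out_w, x.shape[2]))
    for i in range(out_h):
        for j in range(out_w):
            # Sum over the spatial window only; channels never mix here.
            out[i, j] = np.sum(x[i:i + k, j:j + k] * kernels, axis=(0, 1))
    return out


def pointwise_conv(x, weights):
    """1x1 convolution: mixes channels at every pixel. weights: (c_in, c_out)."""
    return x @ weights


def relu(x):
    return np.maximum(x, 0.0)


def extreme_inception(x, weights, kernels):
    """1x1 first, then per-channel spatial conv, with a ReLU after both steps."""
    return relu(depthwise_conv(relu(pointwise_conv(x, weights)), kernels))


def separable_conv(x, kernels, weights):
    """Per-channel spatial conv first, then 1x1, no non-linearity in between."""
    return pointwise_conv(depthwise_conv(x, kernels), weights)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 4))
inception_out = extreme_inception(
    x, rng.standard_normal((4, 6)), rng.standard_normal((3, 3, 6)))
separable_out = separable_conv(
    x, rng.standard_normal((3, 3, 4)), rng.standard_normal((4, 6)))
print(inception_out.shape, separable_out.shape)
```

Both variants map the 4-channel input to 6 channels on a 6x6 grid. What changes is which step sees the input first and whether a ReLU sits between the two steps.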

Xception Architecture

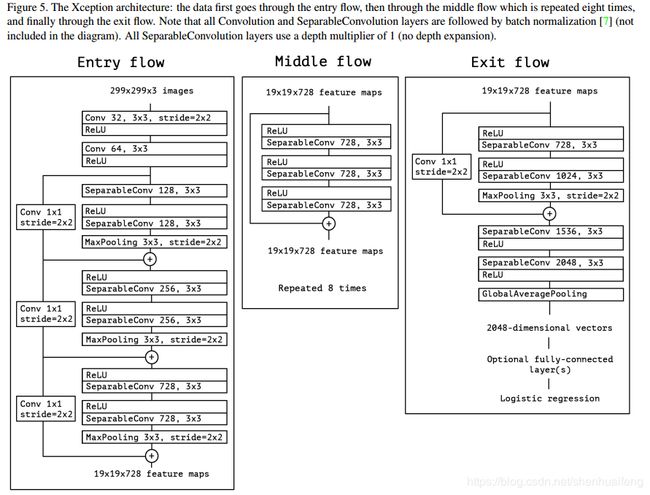

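Xception uses 36 convolutional layers as its feature extractor. These 36 layers are organized into 14 modules, and every module except the first and the last has a residual connection around it.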
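The figures of 36 layers and 14 modules can be checked against the TensorFlow code in this note. The tally below is a sketch under one assumption: the 1x1 stride-2 convolutions on the shortcut branches are not counted as part of the 36 layers. The stage labels are informal names, not identifiers from the code.

```python
# (number of modules, convolutions per module) for each stage of the network.
stages = {
    "entry flow, module 1 (regular convs)": (1, 2),
    "entry flow, modules 2-4 (separable convs)": (3, 2),
    "middle flow, modules 5-12 (separable convs)": (8, 3),
    "exit flow, modules 13-14 (separable convs)": (2, 2),
}

total_modules = sum(count for count, _ in stages.values())
total_convs = sum(count * convs for count, convs in stages.values())
# Every module except the first and the last is wrapped in a residual connection.
residual_modules = total_modules - 2

print(total_modules, total_convs, residual_modules)
```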

TensorFlow Implementation

Reference: https://github.com/kwotsin/TensorFlow-Xception/blob/master/xception.py

    import tensorflow as tf

    slim = tf.contrib.slim  # TF-Slim is only available in TensorFlow 1.x


    def xception(inputs,
                 num_classes=1001,
                 is_training=True,
                 scope='xception'):
        '''
        The Xception Model!

        Note:
        The padding is included by default in slim.conv2d to preserve spatial dimensions.

        INPUTS:
        - inputs(Tensor): a 4D Tensor input of shape [batch_size, height, width, num_channels]
        - num_classes(int): the number of classes to predict
        - is_training(bool): Whether or not to train

        OUTPUTS:
        - logits (Tensor): raw, unactivated outputs of the final layer
        - end_points(dict): dictionary containing the outputs for each layer, including the 'Predictions'
                            containing the probabilities of each output.
        '''
        # NOTE: the `scope` argument is unused; variables always live under 'Xception'.
        with tf.variable_scope('Xception') as sc:
            end_points_collection = sc.name + '_end_points'

            with slim.arg_scope([slim.separable_conv2d], depth_multiplier=1),\
                 slim.arg_scope([slim.separable_conv2d, slim.conv2d, slim.avg_pool2d], outputs_collections=[end_points_collection]),\
                 slim.arg_scope([slim.batch_norm], is_training=is_training):

                #===========ENTRY FLOW==============
                #Block 1
                net = slim.conv2d(inputs, 32, [3,3], stride=2, padding='valid', scope='block1_conv1')
                net = slim.batch_norm(net, scope='block1_bn1')
                net = tf.nn.relu(net, name='block1_relu1')
                net = slim.conv2d(net, 64, [3,3], padding='valid', scope='block1_conv2')
                net = slim.batch_norm(net, scope='block1_bn2')
                net = tf.nn.relu(net, name='block1_relu2')
                # 1x1 stride-2 shortcut branch, added back at the end of the next block
                residual = slim.conv2d(net, 128, [1,1], stride=2, scope='block1_res_conv')
                residual = slim.batch_norm(residual, scope='block1_res_bn')

                #Block 2
                net = slim.separable_conv2d(net, 128, [3,3], scope='block2_dws_conv1')
                net = slim.batch_norm(net, scope='block2_bn1')
                net = tf.nn.relu(net, name='block2_relu1')
                net = slim.separable_conv2d(net, 128, [3,3], scope='block2_dws_conv2')
                net = slim.batch_norm(net, scope='block2_bn2')
                net = slim.max_pool2d(net, [3,3], stride=2, padding='same', scope='block2_max_pool')
                net = tf.add(net, residual, name='block2_add')
                residual = slim.conv2d(net, 256, [1,1], stride=2, scope='block2_res_conv')
                residual = slim.batch_norm(residual, scope='block2_res_bn')

                #Block 3
                net = tf.nn.relu(net, name='block3_relu1')
                net = slim.separable_conv2d(net, 256, [3,3], scope='block3_dws_conv1')
                net = slim.batch_norm(net, scope='block3_bn1')
                net = tf.nn.relu(net, name='block3_relu2')
                net = slim.separable_conv2d(net, 256, [3,3], scope='block3_dws_conv2')
                net = slim.batch_norm(net, scope='block3_bn2')
                net = slim.max_pool2d(net, [3,3], stride=2, padding='same', scope='block3_max_pool')
                net = tf.add(net, residual, name='block3_add')
                residual = slim.conv2d(net, 728, [1,1], stride=2, scope='block3_res_conv')
                residual = slim.batch_norm(residual, scope='block3_res_bn')

                #Block 4
                net = tf.nn.relu(net, name='block4_relu1')
                net = slim.separable_conv2d(net, 728, [3,3], scope='block4_dws_conv1')
                net = slim.batch_norm(net, scope='block4_bn1')
                net = tf.nn.relu(net, name='block4_relu2')
                net = slim.separable_conv2d(net, 728, [3,3], scope='block4_dws_conv2')
                net = slim.batch_norm(net, scope='block4_bn2')
                net = slim.max_pool2d(net, [3,3], stride=2, padding='same', scope='block4_max_pool')
                net = tf.add(net, residual, name='block4_add')

                #===========MIDDLE FLOW===============
                # Eight identical blocks (block5 .. block12) with identity shortcuts
                for i in range(8):
                    block_prefix = 'block%s_' % (str(i + 5))

                    residual = net
                    net = tf.nn.relu(net, name=block_prefix+'relu1')
                    net = slim.separable_conv2d(net, 728, [3,3], scope=block_prefix+'dws_conv1')
                    net = slim.batch_norm(net, scope=block_prefix+'bn1')
                    net = tf.nn.relu(net, name=block_prefix+'relu2')
                    net = slim.separable_conv2d(net, 728, [3,3], scope=block_prefix+'dws_conv2')
                    net = slim.batch_norm(net, scope=block_prefix+'bn2')
                    net = tf.nn.relu(net, name=block_prefix+'relu3')
                    net = slim.separable_conv2d(net, 728, [3,3], scope=block_prefix+'dws_conv3')
                    net = slim.batch_norm(net, scope=block_prefix+'bn3')
                    net = tf.add(net, residual, name=block_prefix+'add')

                #========EXIT FLOW============
                residual = slim.conv2d(net, 1024, [1,1], stride=2, scope='block12_res_conv')
                residual = slim.batch_norm(residual, scope='block12_res_bn')
                net = tf.nn.relu(net, name='block13_relu1')
                net = slim.separable_conv2d(net, 728, [3,3], scope='block13_dws_conv1')
                net = slim.batch_norm(net, scope='block13_bn1')
                net = tf.nn.relu(net, name='block13_relu2')
                net = slim.separable_conv2d(net, 1024, [3,3], scope='block13_dws_conv2')
                net = slim.batch_norm(net, scope='block13_bn2')
                net = slim.max_pool2d(net, [3,3], stride=2, padding='same', scope='block13_max_pool')
                net = tf.add(net, residual, name='block13_add')

                net = slim.separable_conv2d(net, 1536, [3,3], scope='block14_dws_conv1')
                net = slim.batch_norm(net, scope='block14_bn1')
                net = tf.nn.relu(net, name='block14_relu1')
                net = slim.separable_conv2d(net, 2048, [3,3], scope='block14_dws_conv2')
                net = slim.batch_norm(net, scope='block14_bn2')
                net = tf.nn.relu(net, name='block14_relu2')

                # The 10x10 window covers the whole feature map only for 299x299 inputs
                net = slim.avg_pool2d(net, [10,10], scope='block15_avg_pool')
                #Replace FC layer with conv layer instead
                net = slim.conv2d(net, 2048, [1,1], scope='block15_conv1')
                logits = slim.conv2d(net, num_classes, [1,1], activation_fn=None, scope='block15_conv2')
                logits = tf.squeeze(logits, [1,2], name='block15_logits') #Squeeze height and width only
                predictions = slim.softmax(logits, scope='Predictions')

                end_points = slim.utils.convert_collection_to_dict(end_points_collection)
                end_points['Logits'] = logits
                end_points['Predictions'] = predictions

            return logits, end_points
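
The fixed [10,10] average-pooling window only reduces the feature map to 1x1 for one particular input resolution. The sketch below traces the spatial size through the strides and paddings used in the code above, assuming a 299x299 input (an assumption; the code itself does not state the input size). The helper names are made up for this note.

```python
import math


def valid_out(size, kernel, stride=1):
    """Output size along one axis for 'VALID' padding."""
    return (size - kernel) // stride + 1


def same_out(size, stride=1):
    """Output size along one axis for 'SAME' padding."""
    return math.ceil(size / stride)


def trace_spatial_size(size):
    sizes = {}
    size = valid_out(size, 3, stride=2)   # block1_conv1
    size = valid_out(size, 3)             # block1_conv2
    sizes["block1"] = size
    for block in (2, 3, 4):               # a stride-2 max pool closes each block
        size = same_out(size, stride=2)
        sizes[f"block{block}"] = size
    sizes["middle"] = size                # blocks 5-12 keep the resolution
    size = same_out(size, stride=2)       # block13_max_pool
    sizes["block13"] = size
    sizes["block14"] = size               # stride-1 separable convs only
    return sizes


sizes = trace_spatial_size(299)  # assumed input resolution
print(sizes)
```

Under that assumption the last block produces a 10x10 map, so the 10x10 pooling window yields a single spatial position and the later `tf.squeeze` over axes 1 and 2 succeeds. Other input sizes would need a different pooling window or a global mean.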