Hive 实现脱敏以及ETL 过程(开启kerberos)

一. 业务场景及实现原理:

- 源数据存储在Hbase中,需要将源数据中敏感部分(如身份证、电话号码等)进行脱敏再供用户使用。

- 只需要脱敏少量数据供页面呈现便可(这个呈现主要是给用户看样例数据,便于用户确定是否需要订阅资料)。

- 为了防止请求被非法模仿,因而编写了一个访问Ip 鉴权类,也就是设置了访问ip白名单,只有在白名单上的ip才可以访问接口。具体实现见如下链接:

http://blog.csdn.net/u013850277/article/details/77900765

- 注:运用该方法脱敏后的数据将存在Hive中而不是Hbase中。

实现原理:

-

通过 自定义Hive 函数,然后运用自定义函数结合Hive sql 对Hive 数据进行脱敏并入到脱敏库(这里的脱敏库与贴源库保存在同一个集群上)

-

本人为了方便部署与对外提供接口采用的是Spring boot 方式来部署

二. 执行过程简述

-

创建Hive 自定义函数

-

原先脱敏备份的表若存在则将其删除掉

-

如果存在该脱敏表则重命名备份

-

执行ETL及脱敏过程

-

创建表及脱敏成功则将备份表删除掉

测试:一百万条数据完成上述步骤用时1分钟之内;集群机器节点数量为3,内存分别为16G

三. 功能部署成功后的访问接口

- 请求URL:

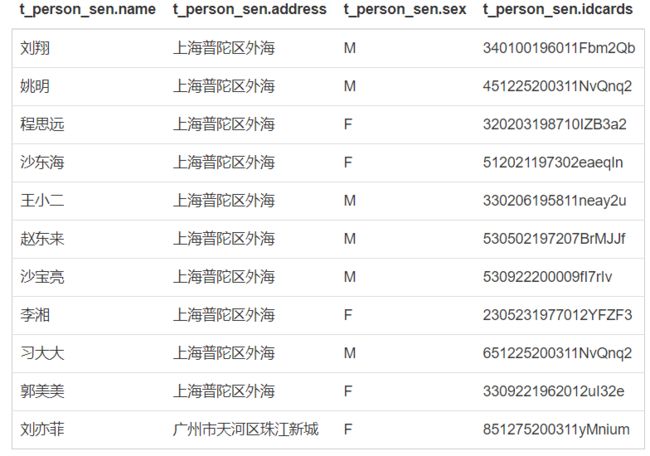

http://localhost:8003/hive_data_sensitive?tableName=sensitive.t_person_sen&sourceDataTable=admin.t_person1¶m=name_0,address_2,sex_1,birthday_3,idcards_4

注:如果源数据表有字段不想出现在脱敏后的表中,则可以不拼接到请求参数中。

- 请求方式:

GET

- 请求参数:

| 参数名 | 必选 | 说明 |

| --------------- | :–: | :--------------------------------------: |

| tableName | 是 | 脱敏后的表名,eg:sensitive.t_person_sen 其中 sensitive脱敏库,t_person_sen为脱敏后的表名 |

| sourceDataTable | 是 | 源数据,eg: admin.t_person1,admin为脱敏源库,t_person1为源数据 |

| param | 是 | 脱敏参数,字段及其脱敏规则,多个用英文符逗号分割,eg:name_0,address_2,sex_1,birthday_3,idcards_4 |

脱敏规则说明

| 参数 | 规则 | 说明 |

|---|---|---|

| 0 | 不做处理 | 原样输出 |

| 1 | 替换算法 | 默认将后4位替换成*号,如果少于4位大于1位将只保留前一位后几位用星号代替,对性别进行特殊处理,男替换成"M",女替换成"F",电话号码将中间五位替换成星号,eg: 134*****2152。 |

| 2 | 截取 | 默认将后4位载掉,少于4位大于1位则只保留一位,eg:上海普陀区外海110号,脱敏后是上海普陀区外海 |

| 3 | 加密 | 目前只简单实现MD5加密,eg:1983/7/15 脱敏后:70d7d532bd97bb0e5c7edbf128257f07 |

| 4 | 身份证脱敏 | 将后6位通过短网址替换,短网址替换后同样保证其唯一性,eg: 530502197207131215 脱敏后:5305021972079356h7 |

-

返回参数

参数名 说明 布尔类型 true : 成功; false:失败 -

示例

请求:

http://localhost:8003/hive_data_sensitive?tableName=sensitive.t_person_sen&sourceDataTable=admin.t_person1¶m=name_0,address_2,sex_1,birthday_3,idcards_4

返回:true

http://localhost:8003/hive_data_sensitive?tableName=sensitive.t_person_sen&sourceDataTable=admin.t_person1¶m=name_0,address_2,sex_1,idcards_4

返回:true

四. 功能实现

1、 Main (Spring boot 启动类)

package com.bms;

import org.jboss.logging.Logger;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.boot.context.embedded.ConfigurableEmbeddedServletContainer;

import org.springframework.boot.context.embedded.EmbeddedServletContainerCustomizer;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import com.bms.service.HiveDataEtl;

/**

* @author YeChunBo

* @time 2017年8月2日

*

* 类说明 :项目启动类,启动该项目只需启动该类便可

*/

@SpringBootApplication

@RestController

public class Main implements EmbeddedServletContainerCustomizer{

private static Logger log = Logger.getLogger(HiveDataEtl.class);

@RequestMapping("/")

public String getHello() {

log.info("Hello Spring Boot .....启动项目成功");

return "Hello Spring Boot .....启动项目成功";

}

// 修改访问的默认端口

public void customize(ConfigurableEmbeddedServletContainer configurableEmbeddedServletContainer) {

configurableEmbeddedServletContainer.setPort(Integer.parseInt("8003"));

}

public static void main(String[] args) {

SpringApplication.run(Main.class, args);

}

}

2、HiveDataEtlController(Spring boot 对应的controller类)

package com.bms.controller;

import java.util.ArrayList;

import org.jboss.logging.Logger;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.RestController;

import com.bms.service.HiveDataEtl;

/**

* @author YeChunBo

* @time 2017年8月15日

*

* 类说明 Hive 数据库脱敏接口控制类

*/

@SpringBootApplication

@RestController

public class HiveDataEtlController {

private static Logger log = Logger.getLogger(HiveDataEtlController.class);

HiveDataEtl hiveDataEtl = new HiveDataEtl();

/**

* 请求样例:http://localhost:8003/hive_data_sensitive?tableName=sensitive.t_person_sen&sourceDataTable=admin.t_person1¶m=name_0,address_2,sex_1,birthday_3,idcards_4

* @param tableName

* @param sourceDataTable

* @param param : 字段及其要进行的脱敏规则:eg:name_1,address_2,birthday,idcards_4,其中0:不脱敏;1:替换算法,2:截取;3:加密;4:身份证脱敏

* @return

*/

@RequestMapping("/hive_data_sensitive")

@ResponseBody

Boolean hiveDataSensitive(@RequestParam(value = "tableName", required = true) String tableName,

@RequestParam(value = "sourceDataTable", required = true) String sourceDataTable,

@RequestParam(value = "param", required = true) String param) { // 带上脱敏规则的字段字符串,eg:param=name_1,address_2,birthday,idcards_4,其中1:替换算法,2:截取;3:加密;4:身份证脱敏

log.info("HiveDataSensitive req tableName=" + tableName + ",sourceDataTable=" + sourceDataTable + ",param=" + param);

return HiveDataEtl.hiveDataSen2OtherTable(tableName, sourceDataTable, param);

}

/**

* 查看脱敏后数据的样例数据,带上库的表名,eg:dbName.tableName,http://localhost:8003/query_table?tableName=sensitive.t_person_sen

* @param tableName

* @return

*/

@RequestMapping("/query_table")

@ResponseBody

ArrayList queryTable(@RequestParam(value = "tableName", required = true) String tableName) {

log.info("Query table req tableName=" + tableName);

return HiveDataEtl.queryTable(tableName);

}

}

3、Hive 实现脱敏过程的业务类

package com.bms.service;

import org.apache.commons.lang3.StringUtils;

/**

* @author YeChunBo

* @time 2017年8月11日

*

* 类说明

*/

import org.apache.hadoop.security.UserGroupInformation;

import org.jboss.logging.Logger;

import java.io.IOException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

public class HiveDataEtl {

/**

* 用于连接Hive所需的一些参数设置 driverName:用于连接hive的JDBC驱动名 When connecting to

* HiveServer2 with Kerberos authentication, the URL format is:

* jdbc:hive2://:/;principal=

*/

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

// 注意:这里的principal是固定不变的,其指的hive服务所对应的principal

private static String url = "jdbc:hive2://bigdata40:10000/admin;principal=hive/[email protected]";

private static Logger log = Logger.getLogger(HiveDataEtl.class);

// 其中 0:不处理,1:替换算法,2:截取;3:加密;4:身份证脱敏

public static final String NO_SEN = "0";

public static final String REPLACE_SEN = "1";

public static final String CUT_SEN = "2";

public static final String MD5_SEN = "3";

public static final String IDCARDS_SEN = "4";

public static Connection get_conn() throws SQLException, ClassNotFoundException {

org.apache.hadoop.conf.Configuration conf = new org.apache.hadoop.conf.Configuration();

conf.set("hadoop.security.authentication", "Kerberos");

if (System.getProperty("os.name").toLowerCase().startsWith("win")) {

System.setProperty("java.security.krb5.conf", "C:/Windows/krbconf/krb5.ini");

}

try {

UserGroupInformation.setConfiguration(conf);

UserGroupInformation.loginUserFromKeytab("hive/[email protected]", "./conf/hive.service.keytab"); //

} catch (IOException e1) {

e1.printStackTrace();

}

Class.forName(driverName);

Connection conn = DriverManager.getConnection(url);

return conn;

}

/**

* Hive数据脱敏到另一张表

*

* @param statement

* @param tableName

* @param sourceDataTable

* @return

*/

public static boolean hiveDataSen2OtherTable(String tableName, String sourceDataTable, String param) {

String sqlAdd = "ADD jar /usr/bigdata/2.5.3.0-37/hive/auxlib/HiveEtl-0.0.1-SNAPSHOT.jar"; // Server_Principal_of_HiveServer2 对应的位置

String cutSql = "CREATE TEMPORARY FUNCTION cut_sensitive as 'com.bms.udf.CutOfSensitive'";

String md5Sql = "CREATE TEMPORARY FUNCTION md5_sensitive as 'com.bms.udf.EncAlgorithmSensitive'";

String replaceSql = "CREATE TEMPORARY FUNCTION replace_sensitive as 'com.bms.udf.ReplaceOfSensitive'";

String idCardsSql = "CREATE TEMPORARY FUNCTION idcards_sensitive as 'com.bms.udf.IDCardsOfSensitive'";

String dropBakTableSql = "DROP TABLE IF EXISTS " + tableName + "_bak "; // 先删除原先的备份数据

String tableBakSql = "ALTER TABLE " + tableName + " RENAME TO " + tableName + "_bak";// 将要修改的表进行备份

String paramSql = tranReqparamToSql(param); // 将请求参数转换成sql语句

String createTableSql = "CREATE TABLE " + tableName

+ " AS select " + paramSql + " from " + sourceDataTable; // 进行ETL 过程的sql 语句

Connection conn = null;

Statement statement = null;

try {

conn = get_conn();

statement = conn.createStatement();

// 将jar 包加入到HIVE类路径下

statement.execute(sqlAdd);

log.info(sqlAdd + " is successed....");

// 将自定义的函数类加入到classpath下

statement.execute(cutSql);

log.info(cutSql + " is successed....");

statement.execute(md5Sql);

log.info(md5Sql + " is successed....");

statement.execute(replaceSql);

log.info(replaceSql + " is successed....");

statement.execute(idCardsSql);

log.info(idCardsSql + " is successed....");

statement.execute(dropBakTableSql); // 原先脱敏备份的表若存在则将其删除掉

log.info(dropBakTableSql + " is successed....");

if (isExistTable(statement, tableName.substring(tableName.indexOf(".") + 1 ), tableName.substring(0, tableName.indexOf(".")))) {

statement.execute(tableBakSql);// 如果存在该脱敏表则重命名备份

log.info(tableBakSql + " is successed....");

}

statement.execute(createTableSql); // 执行ETL及脱敏过程

log.info(createTableSql + " is successed....");

statement.execute(dropBakTableSql); // 创建表及脱敏成功则将备份表删除掉

log.info(dropBakTableSql + " is successed....");

return true;

} catch (Exception e) {

log.error("hiveDataSen2OtherTable is failed , the errorMessage is " + e.getMessage());

e.printStackTrace();

return false;

} finally {

if(statement != null) {

try {

statement.close();

} catch (SQLException e) {

log.error("close statement is failed , the errorMessage is " + e.getMessage());

e.printStackTrace();

}

}

if (conn != null) {

try {

conn.close();

} catch (SQLException e1) {

log.error("close conn is failed , the errorMessage is " + e1.getMessage());

e1.printStackTrace();

}

}

}

}

/**

* 将请求参数(字段及其要进行的脱敏规则)

* @param param 字段与脱敏参数:eg:name_0,address_2,sex=1,birthday=3,idcards_4

* @return 转换成sql, eg:name,cut_sensitive(address) as address,replace_sensitive(sex) as sex,md5_sensitive(birthday) as birthday,idcards_sensitive(idcards) as idcards

*/

public static String tranReqparamToSql(String param) {

StringBuffer paramSql = new StringBuffer();

String col = null;

String sensiType = null;

if (StringUtils.isNotBlank(param)) {

String[] colArr = param.split(",");

for (int i = 0; i < colArr.length; i++) {

col = colArr[i].substring(0, colArr[i].indexOf("_"));

sensiType = colArr[i].substring(colArr[i].indexOf("_") + 1);

if (NO_SEN.equals(sensiType))

paramSql.append(col).append(",");

if (REPLACE_SEN.equals(sensiType))

paramSql.append(" replace_sensitive" + "(" + col + ") ").append(" AS " + col).append(",");

if (CUT_SEN.equals(sensiType))

paramSql.append(" cut_sensitive" + "(" + col + ") ").append(" AS " + col).append(",");

if (MD5_SEN.equals(sensiType))

paramSql.append(" md5_sensitive" + "(" + col + ") ").append(" AS " + col).append(",");

if (IDCARDS_SEN.equals(sensiType))

paramSql.append(" idcards_sensitive" + "(" + col + ") ").append(" AS " + col).append(",");

}

return paramSql.toString().substring(0, paramSql.toString().lastIndexOf(","));

} else

return param;

}

public static ArrayList queryTable(String tableName) {

String querySql = "Select * from " + tableName + " limit 20 ";

log.info("Query sql is " + querySql);

Connection conn;

ArrayList arrayList = new ArrayList();

try {

conn = get_conn();

Statement statement = conn.createStatement();

ResultSet rs = statement.executeQuery(querySql);

ResultSetMetaData m = rs.getMetaData();

int columns = m.getColumnCount();

// 显示列,表格的表头

for (int i = 1; i <= columns; i++) {

arrayList.add(m.getColumnName(i));

}

System.out.println();

// 显示表格内容

while (rs.next()) {

for (int i = 1; i <= columns; i++) {

arrayList.add(rs.getString(i));

}

}

} catch (Exception e) {

e.printStackTrace();

}

return arrayList;

}

/**

* 判断表是否存在对应的库中

* @param statement

* @param tableName

* @param dbName

* @return

*/

public static boolean isExistTable(Statement statement, String tableName, String dbName) {

ResultSet rs;

try {

statement.execute(" use " + dbName);

rs = statement.executeQuery(" show tables ");

while(rs.next()) {

if (tableName.equalsIgnoreCase(rs.getString(1))) // 不区分大小写,因为Hive查出来的表名就没有区分大小写

return true;

}

} catch (SQLException e) {

e.printStackTrace();

}

return false;

}

public static void main(String[] args) throws Exception {

// Connection conn = get_conn();

// Statement statement = conn.createStatement();

// System.out.println(isExistTable(statement, "t_person1_sen", "sensitive"));

// hiveDataSen2OtherTable("sensitive.t_person1_sen","t_person1", "");

// tranReqparamToSql("name_0,address_2,sex_1,birthday_3,idcards_4");

/*

* // 创建的表名 String tableName = "sensitive.t_person_100_sen"; String

* sourceDataTable = "t_person_100"; createTable( tableName,

* sourceDataTable); // 将t_person_100脱敏后存入default库的t_person_100_sen表

* System.out.println("...........End........");

*/

}

}

4、Hive UDF 定义类(身份证处理算法)

package com.bms.udf;

import java.util.ArrayList;

import java.util.List;

import java.util.Random;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.ql.exec.UDF;

import com.bms.utils.TokenUtils;

/**

* @author YeChunBo

* @time 2017年8月11日

* 将身份证后6位使用短网址的方式进行脱敏生成

* 类说明 :脱敏替换的UDF

*/

public class IDCardsOfSensitive extends UDF {

public String evaluate(String str) {

return idcarts(str);

}

/**

* 身份证后6位用短网址的token代替,保证了它的唯一性

*

* @param idcards

* @return

*/

public static String idcarts(String idcards) {

if (StringUtils.isBlank(idcards)) {

return idcards;

}

// 用正则判断传进来的是否为身份号再处理

boolean isIdcardsFlag = idcards.matches(

"(^[1-9]\\d{5}(18|19|([23]\\d))\\d{2}((0[1-9])|(10|11|12))(([0-2][1-9])|10|20|30|31)\\d{3}[0-9Xx]$)|(^[1-9]\\d{5}\\d{2}((0[1-9])|(10|11|12))(([0-2][1-9])|10|20|30|31)\\d{2}[0-9Xx]$)");

if(isIdcardsFlag) {

// return idcards.replace(idcards.substring(idcards.length() - 6), genCodes(6,1).get(0));

return idcards.replace(idcards.substring(idcards.length() - 6), TokenUtils.getShortUrlToken(idcards.substring(idcards.length() - 6)));

}

else

return idcards;

}

/**

* 随机生成6位字符串

*

* @param length

* @param num

* 生成字符串的组数

* @return

*/

public static List genCodes(int length, long num) {

List results = new ArrayList();

for (int j = 0; j < num; j++) {

String val = "";

Random random = new Random();

for (int i = 0; i < length; i++) {

String charOrNum = random.nextInt(2) % 2 == 0 ? "char" : "num"; // 输出字母还是数字

if ("char".equalsIgnoreCase(charOrNum)) // 字符串

{

int choice = random.nextInt(2) % 2 == 0 ? 65 : 97; // 取得大写字母还是小写字母

val += (char) (choice + random.nextInt(26));

} else if ("num".equalsIgnoreCase(charOrNum)) // 数字

{

val += String.valueOf(random.nextInt(10));

}

}

val = val.toLowerCase();

if (results.contains(val)) {

continue;

} else {

results.add(val);

}

}

return results;

}

public static void main(String[] args) {

System.out.println(idcarts("130503670401001"));//15位:13为河北,05为邢台,03为桥西区,出生日期为1967年4月1日,顺序号为001

System.out.println(idcarts("340100196011010134"));//18位

}

}

5、Hive UDF 简单替换算法

package com.bms.udf;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.ql.exec.UDF;

/**

* @author YeChunBo

* @time 2017年8月11日

*

* 类说明 :替换的 hive 自定义函数

*/

public class ReplaceOfSensitive extends UDF {

public String evaluate(String str) {

return replace(str, 4);

}

/**

* 替换算法 如果字段值为性别,男替换成M,女替换成F,电话号码将中间5位替换成*, 地址将后四位替换成*

* <例子:北京市海淀区****>

*

* @param address

* @param sensitiveSize

* 敏感信息长度

* @return

*/

public static String replace(String inputStr, int sensitiveSize) {

int length = StringUtils.length(inputStr);

if (StringUtils.isBlank(inputStr))

return inputStr;

else if(inputStr.matches("^((13[0-9])|(14[5|7])|(15([0-3]|[5-9]))|(18[0,5-9]))\\d{8}$")) // 手机号特殊处理,eg:处理后138******1234

return StringUtils.left(inputStr, 3).concat(StringUtils.removeStart(StringUtils.leftPad(StringUtils.right(inputStr, 4), StringUtils.length(inputStr), "*"), "***"));

else if ("男".equals(inputStr))

return "M";

else if ("女".equals(inputStr))

return "F";

else if (StringUtils.isBlank(inputStr) || length == 1) // 如果为空或只有一位则不做任何处理

return inputStr;

else if (length == 2)

return StringUtils.rightPad(StringUtils.left(inputStr, length - 1), length, "*");

else if (length == 3)

return StringUtils.rightPad(StringUtils.left(inputStr, length - 2), length, "*");

else if (length == 4)

return StringUtils.rightPad(StringUtils.left(inputStr, length - 3), length, "*");

else {

return StringUtils.rightPad(StringUtils.left(inputStr, length - sensitiveSize), length, "*");

}

}

public static void main(String[] args) {

System.out.println(replace("男", 5));

System.out.println(replace("女", 5));

System.out.println(replace("叶问", 5));

System.out.println(replace("迪力热巴", 5));

System.out.println(replace("上海普陀区外海110号", 4));

System.out.println(replace("13428282152", 4));

}

}

// M

// F

// 叶*

// 迪***

//上海普陀区外海****

// 134****2152

5、 短网址实现算法

// 字符表

private static String[] chars = new String[] { "a", "b", "c", "d", "e",

"f", "g", "h", "i", "j", "k", "l", "m", "n", "o", "p", "q", "r",

"s", "t", "u", "v", "w", "x", "y", "z", "0", "1", "2", "3", "4",

"5", "6", "7", "8", "9", "A", "B", "C", "D", "E", "F", "G", "H",

"I", "J", "K", "L", "M", "N", "O", "P", "Q", "R", "S", "T", "U",

"V", "W", "X", "Y", "Z" };

/**

* 生成短网址的token

* @param data 数据

* @return 6位长度的token字符串

*/

public static String getShortUrlToken(String data) {

if (data == null)

return null;

// 将数据进行md5加密生成32位签名串

String md5Hex = new String(DigestUtils.md5Hex(data));

// 将32位签名串,分为4段,每段8位,进行求和

int hexLen = md5Hex.length();

int subHexLen = hexLen / 8;

long subHexSum = 0;

for (int i = 0; i < subHexLen; i++) {

int j = i + 1;

String subHex = md5Hex.substring(i * 8, j * 8);

subHexSum += Long.valueOf(subHex, 16);

}

// 生成token

long idx = Long.valueOf("3FFFFFFF", 16) & subHexSum;

StringBuffer token = new StringBuffer();

for (int k = 0; k < 6; k++) {

int index = (int) (Long.valueOf("0000003D", 16) & idx);

token.append(chars[index]);

idx = idx >> 5;

}

return token.toString();

}

6、pom.xml

4.0.0

HiveEtl

HiveEtl

0.0.1-SNAPSHOT

org.springframework.boot

spring-boot-starter-parent

1.5.4.RELEASE

org.springframework.boot

spring-boot-starter-web

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-starter-log4j

1.3.0.RELEASE

org.apache.hive

hive-jdbc

1.2.1

org.eclipse.jetty.aggregate

jetty-all

org.apache.hive

hive-shims

org.apache.hadoop

hadoop-common

2.7.1

org.apache.hive

hive-exec

1.2.1

org.apache.hive

hive-metastore

1.2.1

org.apache.hive

hive-common

1.2.1

org.apache.hive

hive-service

1.2.1

org.eclipse.jetty.aggregate

jetty-all

org.apache.hive

hive-shims

jdk.tools

jdk.tools

1.8

system

${JAVA_HOME}/lib/tools.jar

org.springframework.boot

spring-boot-maven-plugin

true

${start-class}

repackage

maven-assembly-plugin

2.2-beta-5

true

com.bms.Main

jar-with-dependencies

assemble-all

package

single

五. linux 部署过程

1. 部署Hive UDF 环境

用普通的maven 打包成 jar包(大小只有几十K),上传的Hive服务器上(目录是:/usr/bigdata/2.5.3.0-37/hive/auxlib), 这个 jar 的主要作用是 创建 Hive自定义函数用的。之所以打普通包,是因为普通包Hive加载起来快。

-

注:/usr/bigdata/2.5.3.0-37/hive/auxlib 这个目录一般是不存在的,需要新建,主要作用是因为将 Hive包放到这个目录,hive会自动加载。

-

特别提醒:这个jar 包是要放到Hive的安装目录下。例如,公司测试环境Hive安装在bigdata40,则将其上传到bigdata40。

-

打成普通包时需要先将pom.xml文件中加载启动类的打包方式注释掉,也就下面这段代码

org.springframework.boot

spring-boot-maven-plugin

true

${start-class}

repackage

maven-assembly-plugin

2.2-beta-5

true

com.bms.Main

jar-with-dependencies

assemble-all

package

single

2. 部署HiveETL 项目

运用Maven 打包(注意将上一步注释掉的pom.xml文件加上,因为这个要生成的是运行Jar包,需要指定项目的运行Main方法)

将打包后的jar包上传到部署服务器,编写启停脚本

检查安装的服务器,是否有/etc/krb5.conf 文件存在,没有则到安装kerberos的服务复制一份

在创建部署目录下创建 conf 目录并上传对应的keytab上去(注意:这个用户所对应的权限是必须拥有创建 Hive UDF权限的)

启动脚本,查看日志输出

其中脚本如下

- start.sh

#!/bin/bash

PROJECTNAME=HiveEtl

pid=`ps -ef |grep $PROJECTNAME |grep -v "grep" |awk '{print $2}'`

if [ $pid ]; then

echo "$PROJECTNAME is running and pid=$pid"

else

echo "Start success to start $PROJECTNAME ...."

nohup java -jar HiveEtl-0.0.1-SNAPSHOT.jar >> catalina.out 2>&1 &

fi

- stop.sh

#!/bin/bash

PROJECTNAME=HiveEtl

pid=`ps -ef |grep $PROJECTNAME |grep -v "grep" |awk '{print $2}' `

if [ $pid ]; then

echo "$PROJECTNAME is running and pid=$pid"

kill -9 $pid

if [[ $? -eq 0 ]];then

echo "success to stop $PROJECTNAME "

else

echo "fail to stop $PROJECTNAME "

fi

fi

五. 遇到的问题

- 使用spring boot整合Hive,在启动Spring boot项目时,报出异常:

java.lang.NoSuchMethodError: org.eclipse.jetty.servlet.ServletMapping.setDefault(Z)V

经过排查,是maven的包冲突引起的,具体做法,排除:jetty-all、hive-shims依赖包。对应的pom配置如下:

org.apache.hive

hive-jdbc

1.2.1

org.eclipse.jetty.aggregate

jetty-all

org.apache.hive

hive-shims