Kubernetes (K8S) (Part 7): Using the flannel and calico network plugins for cross-host communication

Table of Contents

- 1. Network plugin: flannel

- 1.1 Flannel: cross-host communication in vxlan mode

- 1.2 Flannel: cross-host communication in host-gw mode (pure layer 3)

- 1.3 Flannel: vxlan + DirectRouting mode

- 2. Network plugin: calico

1. Network plugin: flannel

One solution for cross-host communication is Flannel, released by CoreOS. It supports three backends: UDP, VXLAN and host-gw.

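udp mode: packets are encapsulated and decapsulated through the flannel0 device. The kernel does not support this natively. The work is done by the user-space flanneld process, which causes heavy context switching between user and kernel space, so performance is very poor.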

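vxlan mode: packets are encapsulated and decapsulated through the flannel.1 device. The kernel supports this natively, and performance is good.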

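host-gw mode: no intermediate device such as flannel.1 is needed. The host itself is used directly as the next hop for a subnet, which gives the best performance.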

host-gw loses roughly 10% of performance. All other network solutions based on VXLAN "tunnels" lose roughly 20% to 30%.
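Part of that cost is per-packet processing. Part of it is simply the extra header bytes each tunnel adds. The following Python sketch works through the header arithmetic. It assumes a 1500-byte host MTU, IPv4 without options and no VLAN tags. The constants are standard header sizes, not values read from flannel's code.

```python
# Header bytes each flannel backend adds in front of a container's IP packet.
# Assumptions: 1500-byte host MTU, IPv4 without options, no VLAN tags.
LINK_MTU = 1500

INNER_ETH = 14  # inner Ethernet header that VXLAN carries inside the tunnel
IPV4 = 20       # outer IPv4 header
UDP = 8         # outer UDP header
VXLAN_HDR = 8   # VXLAN header (RFC 7348)

OVERHEAD = {
    "host-gw": 0,                                 # plain routing, nothing added
    "udp": IPV4 + UDP,                            # flanneld wraps the IP packet in UDP
    "vxlan": INNER_ETH + VXLAN_HDR + UDP + IPV4,  # kernel wraps a whole L2 frame
}


def inner_mtu(backend: str, link_mtu: int = LINK_MTU) -> int:
    """Largest container IP packet that fits into one host packet unfragmented."""
    return link_mtu - OVERHEAD[backend]


if __name__ == "__main__":
    for name in ("host-gw", "udp", "vxlan"):
        share = OVERHEAD[name] / LINK_MTU
        print(f"{name:8s} +{OVERHEAD[name]:2d} bytes  inner MTU {inner_mtu(name)}  ({share:.1%} of a full packet)")
```

For vxlan this gives 1500 - 50 = 1450 bytes, and for udp 1472 bytes. These are the MTUs typically seen on flannel.1 and flannel0. host-gw keeps the full 1500.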

1.1 Flannel: cross-host communication in vxlan mode

What is VXLAN?

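VXLAN (Virtual Extensible LAN) is a network virtualization technology that Linux supports natively. VXLAN can do all of its encapsulation and decapsulation in kernel space. It uses this "tunnel" mechanism to build an overlay network.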

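The design idea of VXLAN is as follows:

On top of the existing layer-3 network, VXLAN "overlays" a virtual layer-2 network maintained by the kernel's VXLAN module. "Hosts" attached to this VXLAN layer-2 network (virtual machines or containers) can then communicate freely, as if they were on the same local area network (LAN).

To open a "tunnel" across this layer-2 network, VXLAN sets up a special network device on each host to act as an end of the tunnel. This device is called a VTEP (VXLAN Tunnel End Point).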

How cross-host communication works in flannel vxlan mode

The flannel.1 device is the VXLAN VTEP. It has both an IP address and a MAC address.

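As in UDP mode, a container sends a request to address 10.1.16.3, a container on the other host. The resulting IP packet first appears on the docker bridge. It is then routed to the local flannel.1 device for processing, which is where it enters the tunnel.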

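To encapsulate the "original IP packet" and send it to the correct host, VXLAN has to find the tunnel's exit: the VTEP device on the destination host. The flanneld process on each host maintains this device information.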

VTEP devices communicate with each other using layer-2 data frames.

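When the source VTEP device receives the original IP packet, it adds a destination MAC address and encapsulates the packet into a layer-2 data frame. It then sends the frame to the destination VTEP device. Getting that MAC address requires a lookup by layer-3 IP address, which is the job of the ARP table.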

This encapsulation only adds a layer-2 header. It does not change the contents of the "original IP packet".

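The MAC addresses of these VTEP devices mean nothing to the host network. This frame, called the inner data frame, therefore cannot travel over the host's layer-2 network as is. The Linux kernel must wrap it again into an ordinary data frame of the host network, which can then carry the inner data frame out through the host's eth0. To do so, Linux prepends a special VXLAN header to the inner data frame. The VXLAN header contains an important field called the VNI. A VTEP uses the VNI to decide whether a given data frame is meant for it.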

In Flannel the VNI defaults to 1. This is why the VTEP device on every host is named flannel.1.

A flannel.1 device only knows the MAC address of the flannel.1 device at the other end. It does not know the address of the host behind that device.

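In the Linux kernel, network devices forward frames according to the FDB (forwarding database). The flanneld process maintains the FDB entries for the flannel.1 "bridge". These entries map the remote VTEP's MAC address to the IP address of the host behind it. With that host IP, the kernel wraps the inner data frame in an outer UDP/IP packet. It then adds a layer-2 frame header in front and fills in Node2's MAC address. Node1's own ARP table learns that MAC address over the host network, so Flannel does not need to maintain it. The "outer data frame" that Linux builds has the following format: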
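The three lookups in this paragraph (route, ARP, FDB) can be modelled as a chain of dictionaries. This is a hypothetical Python sketch. The MAC addresses and the underlay IP 172.25.1.3 for server3 are invented. The route format (remote subnet via its `.0` address on flannel.1) reflects what flannel's vxlan backend usually installs, not anything shown in this article.

```python
import ipaddress

# Hypothetical state on server1 in vxlan mode; MACs and the underlay IP are invented.
# 1) Route: remote pod subnet -> next hop (remote flannel.1 IP) on device flannel.1
ROUTES = {ipaddress.ip_network("10.244.2.0/24"): ("10.244.2.0", "flannel.1")}
# 2) ARP/neighbor entry on flannel.1: remote VTEP IP -> remote VTEP MAC
ARP = {"10.244.2.0": "aa:bb:cc:00:00:03"}
# 3) FDB entry on flannel.1: remote VTEP MAC -> underlay IP of the host behind it
FDB = {"aa:bb:cc:00:00:03": "172.25.1.3"}


def resolve(dst_pod_ip: str) -> tuple[str, str, str]:
    """Return (device, inner dst MAC, outer dst IP) for a packet to dst_pod_ip."""
    ip = ipaddress.ip_address(dst_pod_ip)
    for net, (gateway, dev) in ROUTES.items():
        if ip in net:
            inner_mac = ARP[gateway]   # written into the inner Ethernet header
            outer_ip = FDB[inner_mac]  # written into the outer IP header (UDP/VXLAN)
            return dev, inner_mac, outer_ip
    raise LookupError(f"no route to {dst_pod_ip}")


if __name__ == "__main__":
    # 10.244.2.76 is a pod IP that appears on server3 later in this article.
    print(resolve("10.244.2.76"))
```

The design point is that flanneld only has to keep the ARP and FDB entries for remote VTEPs up to date. The kernel does every per-packet lookup.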

Node1's flannel.1 device can then send this data frame out through eth0. It crosses the host network and arrives at Node2's eth0.

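Node2's kernel network stack finds that this data frame carries a VXLAN header with VNI 1. The kernel decapsulates it and extracts the inner data frame. Based on the VNI value, it hands the inner frame to Node2's flannel.1 device.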
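The VXLAN header itself is only 8 bytes. The Python sketch below (using only `struct`) shows how Node1 could build it with flannel's default VNI of 1. It also shows how Node2 reads the VNI back to pick the matching VTEP. The layout follows RFC 7348. The `deliver` helper is hypothetical.

```python
import struct

VXLAN_I_FLAG = 0x08  # "I" flag: the VNI field is valid (RFC 7348)
FLANNEL_VNI = 1      # flannel's default VNI, hence the device name flannel.1


def build_vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags(1) | reserved(3) | VNI(3) | reserved(1)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # The VNI occupies the upper 24 bits of the last 4-byte word.
    return struct.pack("!B3xI", VXLAN_I_FLAG, vni << 8)


def parse_vni(header: bytes) -> int:
    """Read the VNI back, as the receiving kernel does before choosing a VTEP."""
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & VXLAN_I_FLAG:
        raise ValueError("I flag not set, VNI is not valid")
    return word >> 8


def deliver(header: bytes, vteps: dict[int, str]) -> str:
    """Hypothetical helper: pick the local VTEP device whose VNI matches."""
    return vteps[parse_vni(header)]


if __name__ == "__main__":
    hdr = build_vxlan_header(FLANNEL_VNI)
    print(hdr.hex())
    print(deliver(hdr, {1: "flannel.1"}))
```

Running it prints `0800000000000100` and then `flannel.1`. The leading `08` byte is the I flag, and the `000001` bytes are the VNI.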

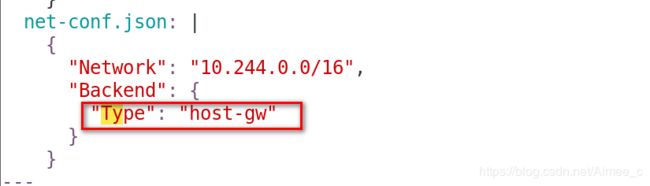

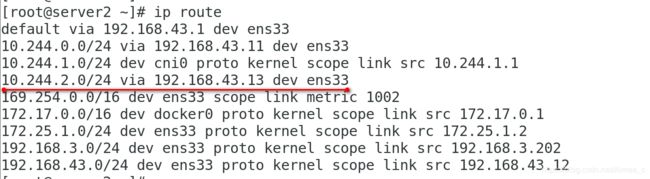

1.2 Flannel: cross-host communication in host-gw mode (pure layer 3)

This is a pure layer-3 network solution, and it has the highest performance.

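host-gw mode works by setting the next hop of each Flannel subnet to the IP address of the host that owns that subnet. In other words, the host acts as the "gateway" on the container's communication path, which is exactly what host-gw means.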

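All subnet and host information is stored in etcd, so flanneld only needs to watch this data for changes and update the routing table in real time. With the `--kube-subnet-mgr` flag used in kube-flannel.yml, flanneld reads this data through the Kubernetes API, which in turn stores it in etcd.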
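The "watch and update the routing table" step amounts to reconciling the current routes with the subnet leases. The following Python sketch is hypothetical. The lease map, the subnet for server1, the node IPs for server2 and server3, and the interface name `ens33` are all assumptions for illustration. It does not reproduce flanneld's actual implementation.

```python
# Hypothetical host-gw reconcile step: leases map each node's pod subnet to that node's IP.
def desired_routes(leases: dict[str, str], local_subnet: str) -> set[tuple[str, str]]:
    """One (subnet, via) route for every subnet owned by another node."""
    return {(subnet, node_ip) for subnet, node_ip in leases.items() if subnet != local_subnet}


def reconcile(current: set[tuple[str, str]], leases: dict[str, str], local_subnet: str):
    """Return (routes to add, routes to delete) so the table matches the leases."""
    want = desired_routes(leases, local_subnet)
    return sorted(want - current), sorted(current - want)


if __name__ == "__main__":
    leases = {
        "10.244.0.0/24": "172.25.1.1",  # server1, this node (assumed subnet)
        "10.244.1.0/24": "172.25.1.2",  # server2 (assumed IP)
        "10.244.2.0/24": "172.25.1.3",  # server3 (assumed IP)
    }
    to_add, to_delete = reconcile(set(), leases, "10.244.0.0/24")
    for subnet, via in to_add:
        print(f"ip route add {subnet} via {via} dev ens33")
```

Whenever a lease appears or disappears, running `reconcile` again yields exactly the routes that have to change.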

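The key point is this: when the IP packet is encapsulated into a frame, the kernel uses the MAC address of the "next hop" from the routing table. The frame can therefore reach the destination host over the layer-2 network. This is also why host-gw requires all hosts to be on the same layer-2 network.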
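The next hop's MAC address is resolved by ARP and written straight into the frame, so the next hop has to be on the local layer-2 segment. The following Python sketch models that lookup. The routes, the node IPs and the segment `172.25.1.0/24` are assumptions.

```python
import ipaddress

# Hypothetical host-gw routes on server1; node IPs are assumptions.
ROUTES = [
    ("10.244.1.0/24", "172.25.1.2"),  # pods on server2
    ("10.244.2.0/24", "172.25.1.3"),  # pods on server3
]
LOCAL_L2 = "172.25.1.0/24"  # segment that server1's NIC is attached to (assumed)


def next_hop(dst: str, routes=ROUTES, local_l2: str = LOCAL_L2) -> str:
    """Longest-prefix match, then require the gateway to be on the local L2 segment,
    because its MAC must be resolved by ARP and written into the outgoing frame."""
    ip = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(net), via) for net, via in routes
               if ip in ipaddress.ip_network(net)]
    if not matches:
        raise LookupError(f"no route to {dst}")
    _, via = max(matches, key=lambda m: m[0].prefixlen)
    if ipaddress.ip_address(via) not in ipaddress.ip_network(local_l2):
        raise ValueError(f"next hop {via} is not on the local L2 network; host-gw cannot reach it")
    return via


if __name__ == "__main__":
    print(next_hop("10.244.2.76"))  # pod on server3 in this article
```
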

[kubeadm@server1 mainfest]$ cp /home/kubeadm/kube-flannel.yml .

[kubeadm@server1 mainfest]$ ls

cronjob.yml daemonset.yml deployment.yml init.yml job.yml kube-flannel.yml pod2.yml pod.yml rs.yml service.yml

[kubeadm@server1 mainfest]$ kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

[kubeadm@server1 mainfest]$ vim kube-flannel.yml
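The transcript does not show what was changed in kube-flannel.yml. Assuming the stock manifest, switching to host-gw would mean changing the `Backend` section of `net-conf.json` in the `kube-flannel-cfg` ConfigMap from `vxlan` to `host-gw`. The Python sketch below makes just that one change to the JSON document. The helper name `with_backend` is hypothetical.

```python
import json

# Default net-conf.json from the kube-flannel-cfg ConfigMap in the stock kube-flannel.yml
# (its Network matches the 10.244.x.x pod IPs seen in this cluster).
DEFAULT_NET_CONF = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'


def with_backend(net_conf: str, backend: dict) -> str:
    """Return net-conf.json with only the Backend section replaced."""
    conf = json.loads(net_conf)
    conf["Backend"] = backend
    return json.dumps(conf, indent=2)


if __name__ == "__main__":
    print(with_backend(DEFAULT_NET_CONF, {"Type": "host-gw"}))
```

The printed JSON is what would go back into the ConfigMap. The `Network` value stays untouched, so existing pod subnets keep their addresses.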

[kubeadm@server1 mainfest]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

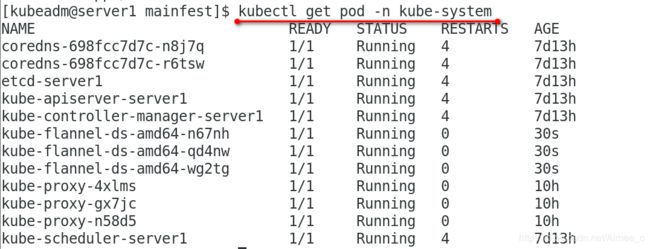

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME                              READY   STATUS    RESTARTS   AGE
coredns-698fcc7d7c-n8j7q          1/1     Running   4          7d13h
coredns-698fcc7d7c-r6tsw          1/1     Running   4          7d13h
etcd-server1                      1/1     Running   4          7d13h
kube-apiserver-server1            1/1     Running   4          7d13h
kube-controller-manager-server1   1/1     Running   4          7d13h
kube-flannel-ds-amd64-n67nh       1/1     Running   0          30s
kube-flannel-ds-amd64-qd4nw       1/1     Running   0          30s
kube-flannel-ds-amd64-wg2tg       1/1     Running   0          30s
kube-proxy-4xlms                  1/1     Running   0          10h
kube-proxy-gx7jc                  1/1     Running   0          10h
kube-proxy-n58d5                  1/1     Running   0          10h
kube-scheduler-server1            1/1     Running   4          7d13h

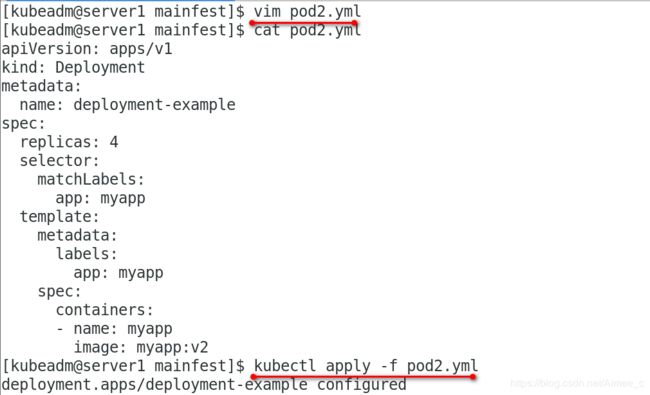

[kubeadm@server1 mainfest]$ vim pod2.yml

[kubeadm@server1 mainfest]$ cat pod2.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  replicas: 4
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v2

[kubeadm@server1 mainfest]$ kubectl apply -f pod2.yml

deployment.apps/deployment-example configured

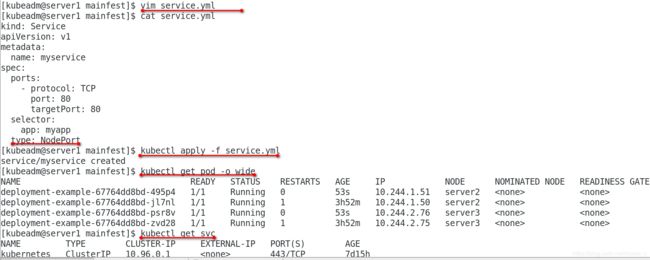

[kubeadm@server1 mainfest]$ vim service.yml

[kubeadm@server1 mainfest]$ cat service.yml

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
  type: NodePort

[kubeadm@server1 mainfest]$ kubectl apply -f service.yml

service/myservice created

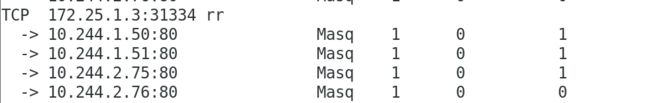

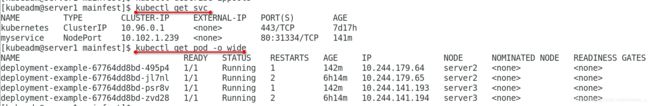

[kubeadm@server1 mainfest]$ kubectl get pod -o wide

NAME                                  READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
deployment-example-67764dd8bd-495p4   1/1     Running   0          53s     10.244.1.51   server2   <none>           <none>
deployment-example-67764dd8bd-jl7nl   1/1     Running   1          3h52m   10.244.1.50   server2   <none>           <none>
deployment-example-67764dd8bd-psr8v   1/1     Running   0          53s     10.244.2.76   server3   <none>           <none>
deployment-example-67764dd8bd-zvd28   1/1     Running   1          3h52m   10.244.2.75   server3   <none>           <none>

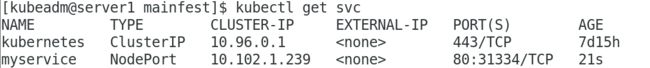

[kubeadm@server1 mainfest]$ kubectl get svc

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        7d15h
myservice    NodePort    10.102.1.239   <none>        80:31334/TCP   21s

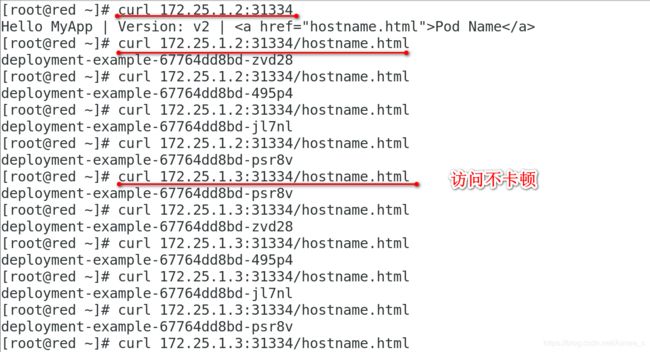

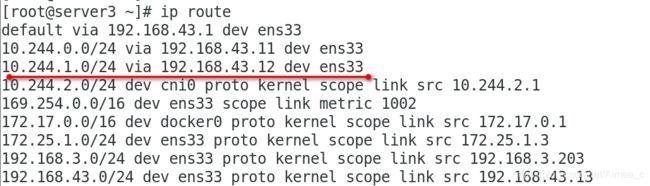

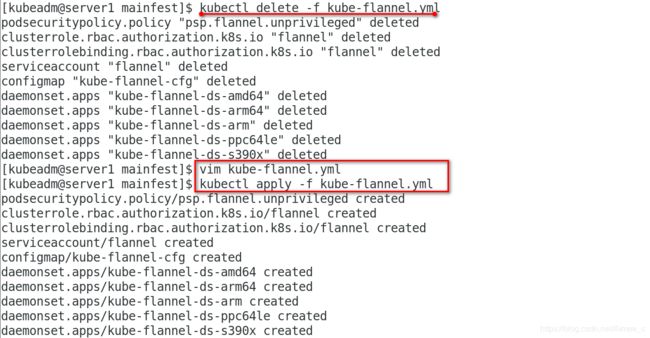

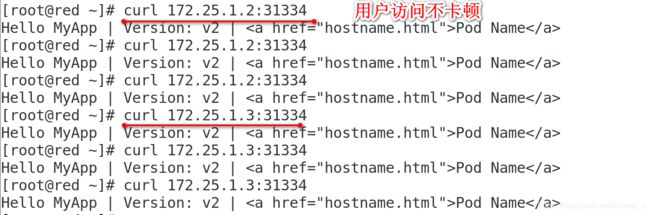

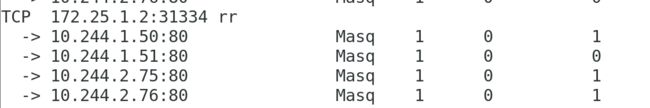

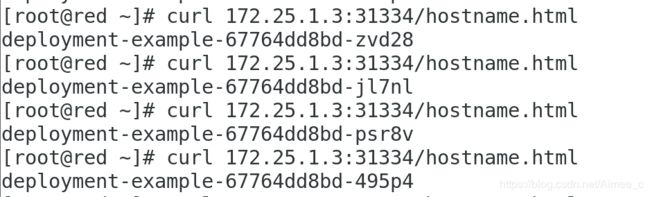

1.3 Flannel: vxlan + DirectRouting mode

[kubeadm@server1 mainfest]$ kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

[kubeadm@server1 mainfest]$ vim kube-flannel.yml
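The edit is again not shown. For this mode, flannel's vxlan backend has a `DirectRouting` option, set as `"Backend": {"Type": "vxlan", "DirectRouting": true}` in `net-conf.json`. This is assumed to be the change made here. With the option on, hosts on the same subnet get host-gw style direct routes, and vxlan is used only for hosts on other subnets. The following Python sketch is a simplified model of that choice. The function name and the interface address are hypothetical.

```python
import ipaddress

# Assumed net-conf.json Backend for this mode (flannel's vxlan DirectRouting option).
VXLAN_DIRECT = {"Type": "vxlan", "DirectRouting": True}


def path_to_peer(local_if: str, peer_ip: str, backend: dict = VXLAN_DIRECT) -> str:
    """Simplified model: with DirectRouting, peers on the same subnet as the local
    interface get a direct host-gw style route; all others go through flannel.1."""
    same_subnet = ipaddress.ip_address(peer_ip) in ipaddress.ip_interface(local_if).network
    if backend.get("DirectRouting") and same_subnet:
        return "direct"
    return "vxlan"


if __name__ == "__main__":
    print(path_to_peer("172.25.1.1/24", "172.25.1.3"))  # assumed server3 address
    print(path_to_peer("172.25.1.1/24", "10.0.0.5"))    # a host on another subnet
```

If all three nodes share one subnet, the pod routes would be expected to point at the physical NIC instead of flannel.1 once this mode is active.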

[kubeadm@server1 mainfest]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

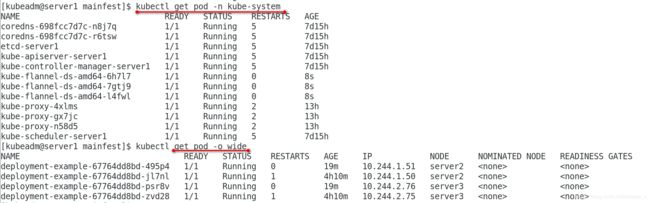

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME                              READY   STATUS    RESTARTS   AGE
coredns-698fcc7d7c-n8j7q          1/1     Running   5          7d15h
coredns-698fcc7d7c-r6tsw          1/1     Running   5          7d15h
etcd-server1                      1/1     Running   5          7d15h
kube-apiserver-server1            1/1     Running   5          7d15h
kube-controller-manager-server1   1/1     Running   5          7d15h
kube-flannel-ds-amd64-6h7l7       1/1     Running   0          8s
kube-flannel-ds-amd64-7gtj9       1/1     Running   0          8s
kube-flannel-ds-amd64-l4fwl       1/1     Running   0          8s
kube-proxy-4xlms                  1/1     Running   2          13h
kube-proxy-gx7jc                  1/1     Running   2          13h
kube-proxy-n58d5                  1/1     Running   2          13h
kube-scheduler-server1            1/1     Running   5          7d15h

[kubeadm@server1 mainfest]$ kubectl get pod -o wide

NAME                                  READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
deployment-example-67764dd8bd-495p4   1/1     Running   0          19m     10.244.1.51   server2   <none>           <none>
deployment-example-67764dd8bd-jl7nl   1/1     Running   1          4h10m   10.244.1.50   server2   <none>           <none>
deployment-example-67764dd8bd-psr8v   1/1     Running   0          19m     10.244.2.76   server3   <none>           <none>
deployment-example-67764dd8bd-zvd28   1/1     Running   1          4h10m   10.244.2.75   server3   <none>           <none>

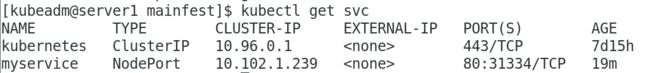

[kubeadm@server1 mainfest]$ kubectl get svc

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        7d15h
myservice    NodePort    10.102.1.239   <none>        80:31334/TCP   19m

2. Network plugin: calico

Reference (official documentation): https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

Besides network communication, calico also provides network policy.

Create a new project named calico in the private registry to hold the calico images.

Pull the images that calico needs:

[root@server1 harbor]# docker pull calico/cni:v3.14.1

[root@server1 harbor]# docker pull calico/pod2daemon-flexvol:v3.14.1

[root@server1 harbor]# docker pull calico/node:v3.14.1

[root@server1 harbor]# docker pull calico/kube-controllers:v3.14.1

[root@server1 harbor]# docker images| grep calico|awk '{print $1":"$2}'

calico/node:v3.14.1

calico/pod2daemon-flexvol:v3.14.1

calico/cni:v3.14.1

calico/kube-controllers:v3.14.1

[root@server1 harbor]# for i in `docker images| grep calico|awk '{print $1":"$2}'`;do docker tag $i reg.red.org/$i;done

[root@server1 harbor]# docker images| grep reg.red.org\/calico|awk '{print $1":"$2}'

reg.red.org/calico/node:v3.14.1

reg.red.org/calico/pod2daemon-flexvol:v3.14.1

reg.red.org/calico/cni:v3.14.1

reg.red.org/calico/kube-controllers:v3.14.1

[root@server1 harbor]# for i in `docker images| grep reg.red.org\/calico|awk '{print $1":"$2}'`;do docker push $i;done

Before installing the calico network plugin, delete the flannel network plugin so that the two plugins do not conflict at runtime.

[root@server2 mainfest]# kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

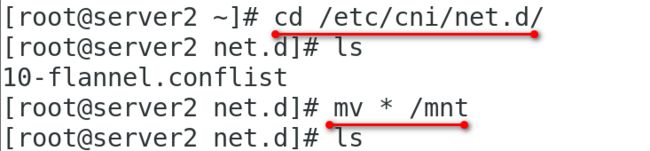

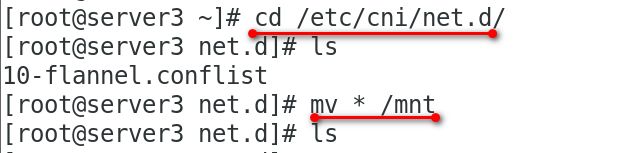

[root@server2 mainfest]# cd /etc/cni/net.d/

[root@server2 net.d]# ls

10-flannel.conflist

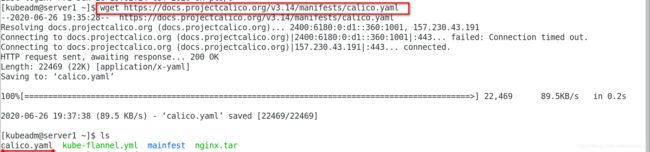
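The old flannel file is moved out of /etc/cni/net.d for a reason. In the kubelet/dockershim setup of this Kubernetes era, the network configuration comes from the first config file, sorted by name, in that directory. The following Python sketch is a simplified model of that selection. The extension list and the behavior are stated here as assumptions, not copied from the kubelet code.

```python
from typing import Optional

# Assumed set of extensions treated as CNI network configs.
CNI_EXTENSIONS = (".conf", ".conflist", ".json")


def active_cni_config(filenames: list[str]) -> Optional[str]:
    """Simplified model: the lexically first network config file in /etc/cni/net.d wins."""
    candidates = sorted(f for f in filenames if f.endswith(CNI_EXTENSIONS))
    return candidates[0] if candidates else None


if __name__ == "__main__":
    print(active_cni_config(["10-flannel.conflist"]))
    # "00-leftover.conf" is a hypothetical stale file that would silently win.
    print(active_cni_config(["00-leftover.conf", "10-calico.conflist", "calico-kubeconfig"]))
```

A stale file that happens to sort first would silently override the new plugin. Moving the old file away on every node removes that ambiguity.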

[root@server2 net.d]# mv 10-flannel.conflist /mnt

Run the steps above on all nodes. Then download the calico manifest:

[kubeadm@server1 ~]$ wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml

[kubeadm@server1 mainfest]$ cp /home/kubeadm/calico.yaml .

[kubeadm@server1 mainfest]$ ls

calico.yaml cronjob.yml daemonset.yml deployment.yml init.yml job.yml kube-flannel.yml pod2.yml pod.yml rs.yml service.yml

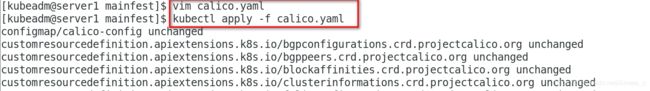

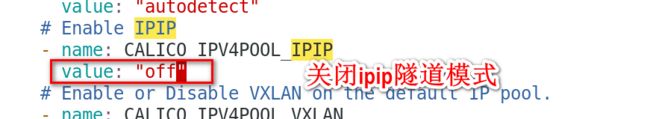

[kubeadm@server1 mainfest]$ vim calico.yaml

# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "off"
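Calico's IP pool setting `ipipMode` takes `Always`, `CrossSubnet` or `Never`. The IPPool shown later in this article reports `Ipip Mode: Never` after this edit. The sketch below therefore treats `"off"` like `Never`, an assumption drawn from that output. It is a Python model of when traffic to another node would go through the `tunl0` IPIP device.

```python
import ipaddress


def needs_ipip(mode: str, local_if: str, peer_ip: str) -> bool:
    """Would traffic from this node to peer_ip be IPIP-encapsulated (sent via tunl0)?

    local_if is this node's address with prefix, e.g. "172.25.1.1/24".
    """
    mode = mode.lower()
    if mode in ("never", "off"):  # "off" treated like Never (assumption)
        return False
    if mode == "always":
        return True
    if mode == "crosssubnet":
        # Encapsulate only when the peer is outside this node's own subnet.
        return ipaddress.ip_address(peer_ip) not in ipaddress.ip_interface(local_if).network
    raise ValueError(f"unknown IPIP mode: {mode!r}")


if __name__ == "__main__":
    for m in ("off", "Always", "CrossSubnet"):
        print(m, needs_ipip(m, "172.25.1.1/24", "172.25.1.3"))
```
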

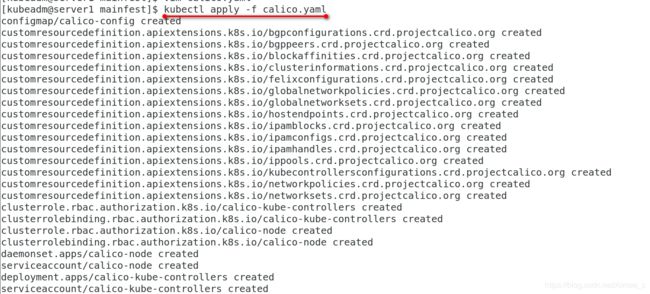

[kubeadm@server1 mainfest]$ kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

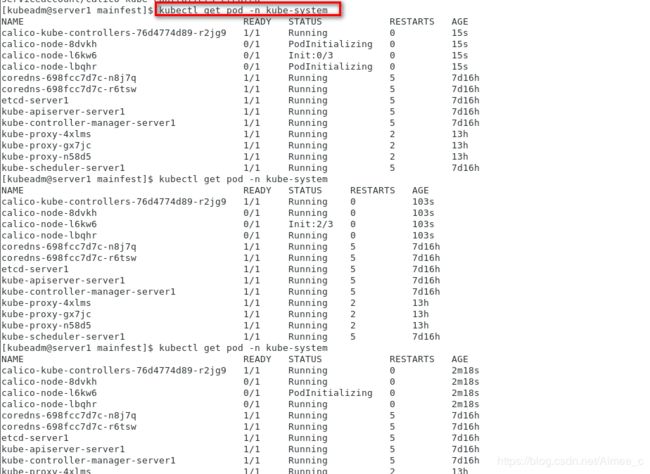

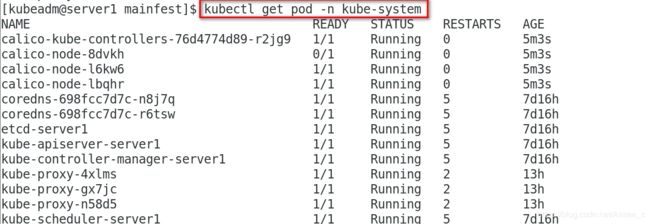

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME                                       READY   STATUS            RESTARTS   AGE
calico-kube-controllers-76d4774d89-r2jg9   1/1     Running           0          15s
calico-node-8dvkh                          0/1     PodInitializing   0          15s
calico-node-l6kw6                          0/1     Init:0/3          0          15s
calico-node-lbqhr                          0/1     PodInitializing   0          15s
coredns-698fcc7d7c-n8j7q                   1/1     Running           5          7d16h
coredns-698fcc7d7c-r6tsw                   1/1     Running           5          7d16h
etcd-server1                               1/1     Running           5          7d16h
kube-apiserver-server1                     1/1     Running           5          7d16h
kube-controller-manager-server1            1/1     Running           5          7d16h
kube-proxy-4xlms                           1/1     Running           2          13h
kube-proxy-gx7jc                           1/1     Running           2          13h
kube-proxy-n58d5                           1/1     Running           2          13h
kube-scheduler-server1                     1/1     Running           5          7d16h

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME                                       READY   STATUS     RESTARTS   AGE
calico-kube-controllers-76d4774d89-r2jg9   1/1     Running    0          103s
calico-node-8dvkh                          0/1     Running    0          103s
calico-node-l6kw6                          0/1     Init:2/3   0          103s
calico-node-lbqhr                          0/1     Running    0          103s
coredns-698fcc7d7c-n8j7q                   1/1     Running    5          7d16h
coredns-698fcc7d7c-r6tsw                   1/1     Running    5          7d16h
etcd-server1                               1/1     Running    5          7d16h
kube-apiserver-server1                     1/1     Running    5          7d16h
kube-controller-manager-server1            1/1     Running    5          7d16h
kube-proxy-4xlms                           1/1     Running    2          13h
kube-proxy-gx7jc                           1/1     Running    2          13h
kube-proxy-n58d5                           1/1     Running    2          13h
kube-scheduler-server1                     1/1     Running    5          7d16h

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME                                       READY   STATUS            RESTARTS   AGE
calico-kube-controllers-76d4774d89-r2jg9   1/1     Running           0          2m18s
calico-node-8dvkh                          0/1     Running           0          2m18s
calico-node-l6kw6                          0/1     PodInitializing   0          2m18s
calico-node-lbqhr                          0/1     Running           0          2m18s
coredns-698fcc7d7c-n8j7q                   1/1     Running           5          7d16h
coredns-698fcc7d7c-r6tsw                   1/1     Running           5          7d16h
etcd-server1                               1/1     Running           5          7d16h
kube-apiserver-server1                     1/1     Running           5          7d16h
kube-controller-manager-server1            1/1     Running           5          7d16h
kube-proxy-4xlms                           1/1     Running           2          13h
kube-proxy-gx7jc                           1/1     Running           2          13h
kube-proxy-n58d5                           1/1     Running           2          13h
kube-scheduler-server1                     1/1     Running           5          7d16h

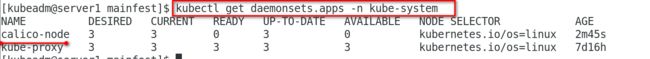

[kubeadm@server1 mainfest]$ kubectl get daemonsets.apps -n kube-system

NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
calico-node   3         3         0       3            0           kubernetes.io/os=linux   2m45s
kube-proxy    3         3         3       3            3           kubernetes.io/os=linux   7d16h

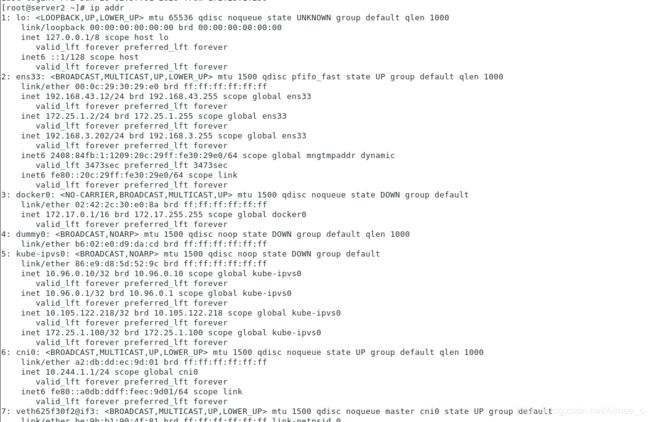

[kubeadm@server1 mainfest]$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:bb:3e:1d brd ff:ff:ff:ff:ff:ff
    inet 192.168.43.11/24 brd 192.168.43.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet 172.25.1.1/24 brd 172.25.1.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet 192.168.3.201/24 brd 192.168.3.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 2408:84fb:1:1209:20c:29ff:febb:3e1d/64 scope global mngtmpaddr dynamic
       valid_lft 3505sec preferred_lft 3505sec
    inet6 fe80::20c:29ff:febb:3e1d/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:e9:5e:cd:d0 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 5a:94:ba:ba:c0:07 brd ff:ff:ff:ff:ff:ff
5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether 0a:6f:17:7d:e9:a8 brd ff:ff:ff:ff:ff:ff
    inet 10.96.0.10/32 brd 10.96.0.10 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.96.0.1/32 brd 10.96.0.1 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.102.1.239/32 brd 10.102.1.239 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
6: calic3d023fea71@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
7: cali8e3712e48ff@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever

[kubeadm@server1 mainfest]$

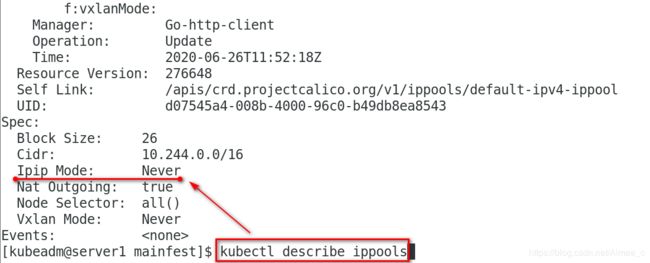

[kubeadm@server1 mainfest]$ kubectl describe ippools

Name:         default-ipv4-ippool
Namespace:
Labels:       <none>
Annotations:  projectcalico.org/metadata: {"uid":"e086a226-81ff-4cf9-923d-d5f75956a6f4","creationTimestamp":"2020-06-26T11:52:17Z"}
API Version:  crd.projectcalico.org/v1
Kind:         IPPool
Metadata:
  Creation Timestamp:  2020-06-26T11:52:18Z
  Generation:          1
  Managed Fields:
    API Version:  crd.projectcalico.org/v1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:projectcalico.org/metadata:
      f:spec:
        .:
        f:blockSize:
        f:cidr:
        f:ipipMode:
        f:natOutgoing:
        f:nodeSelector:
        f:vxlanMode:
    Manager:         Go-http-client
    Operation:       Update
    Time:            2020-06-26T11:52:18Z
  Resource Version:  276648
  Self Link:         /apis/crd.projectcalico.org/v1/ippools/default-ipv4-ippool
  UID:               d07545a4-008b-4000-96c0-b49db8ea8543
Spec:
  Block Size:     26
  Cidr:           10.244.0.0/16
  Ipip Mode:      Never
  Nat Outgoing:   true
  Node Selector:  all()
  Vxlan Mode:     Never

Events: <none>
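The pool above has `Block Size: 26` and `Cidr: 10.244.0.0/16`, so Calico's IPAM hands pod addresses out to nodes in /26 blocks. The following Python sketch works through the arithmetic with the `ipaddress` module. The function name is illustrative.

```python
import ipaddress


def block_stats(pool_cidr: str, block_size: int) -> tuple[int, int, str]:
    """Return (number of blocks, addresses per block, first block) for an IP pool."""
    pool = ipaddress.ip_network(pool_cidr)
    if block_size < pool.prefixlen:
        raise ValueError("block must be smaller than the pool")
    n_blocks = 2 ** (block_size - pool.prefixlen)
    first = next(pool.subnets(new_prefix=block_size))
    return n_blocks, first.num_addresses, str(first)


if __name__ == "__main__":
    print(block_stats("10.244.0.0/16", 26))
```

This prints `(1024, 64, '10.244.0.0/26')`: the pool splits into 1024 blocks of 64 addresses each. Routes to pods on other nodes are typically installed per block, which is why they show up as /26 routes.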

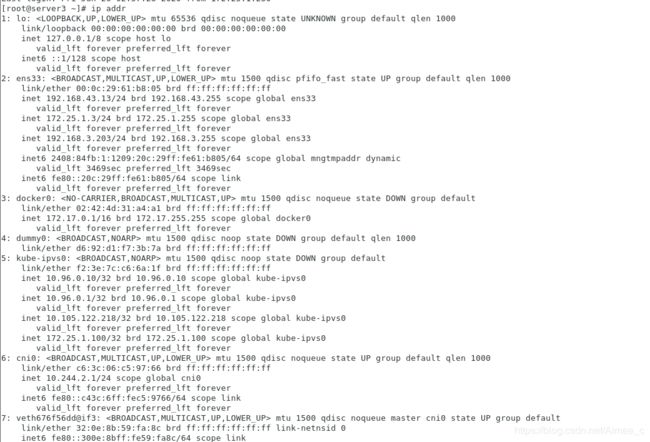

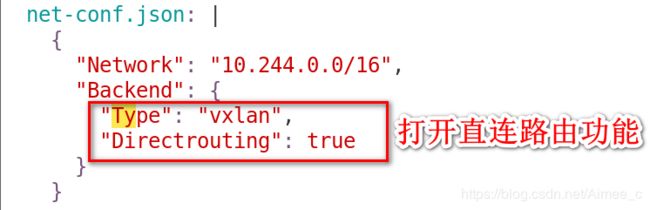

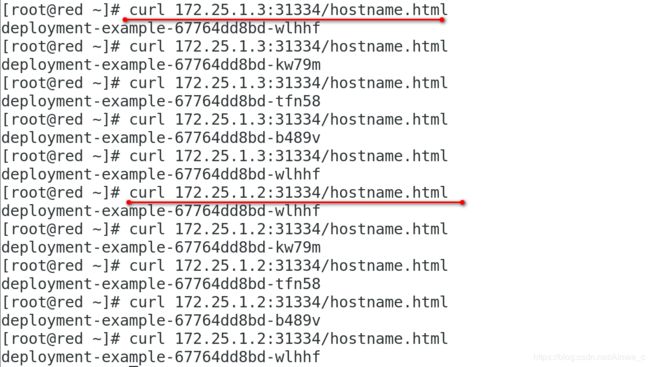

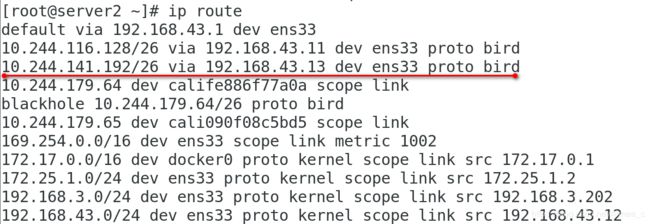

Checking the routes shows that traffic between nodes is sent directly through ens33:
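To tell direct routes from tunnelled ones in `ip route` output, look at the device. With IPIP off, routes to other nodes' blocks go via the node IP on the physical NIC (ens33 here). IPIP mode uses `tunl0` instead. The Python sketch below classifies route lines. The sample lines are hypothetical, written in the style Calico's BIRD agent installs (`proto bird`). The exception is the `calic3d023fea71` interface name, which comes from the `ip addr` output above.

```python
# Hypothetical `ip route` lines; addresses are invented for illustration.
SAMPLE = [
    "10.244.22.0/26 via 172.25.1.2 dev ens33 proto bird",
    "10.244.33.0/26 via 172.25.1.3 dev tunl0 proto bird onlink",
    "blackhole 10.244.11.0/26 proto bird",
    "10.244.11.1 dev calic3d023fea71 scope link",
]


def classify(route: str) -> str:
    """Label one `ip route` line by how the traffic leaves this node."""
    fields = route.split()
    dev = fields[fields.index("dev") + 1] if "dev" in fields else None
    if fields[0] == "blackhole":
        return "local block"   # this node's own /26; addresses without a pod are dropped
    if dev == "tunl0":
        return "ipip tunnel"   # encapsulated (IPIP mode)
    if dev is not None and dev.startswith("cali"):
        return "local pod"     # veth leading to a pod on this node
    if "via" in fields:
        return "direct route"  # plain routing over the host NIC
    return "other"


if __name__ == "__main__":
    for line in SAMPLE:
        print(f"{classify(line):12s} {line}")
```
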

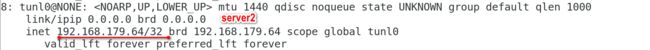

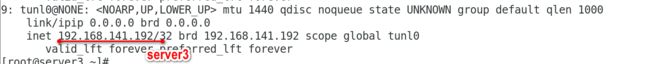

Edit calico.yaml to enable tunnel (IPIP) mode and assign a fixed pod IP range:

[kubeadm@server1 mainfest]$ vim calico.yaml

# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "Always"
- name: CALICO_IPV4POOL_CIDR
  value: "192.168.0.0/16"
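The pool chosen here, 192.168.0.0/16, contains two of the addresses that the `ip addr` output shows on server1's ens33: 192.168.43.11/24 and 192.168.3.201/24. A pod pool that overlaps host networks can make pod routes conflict with host routes, so it may be worth checking before applying. The following Python sketch runs that check with the `ipaddress` module. The helper name is hypothetical.

```python
import ipaddress

# IPv4 addresses configured on server1's ens33, copied from the `ip addr` output.
HOST_ADDRS = ["192.168.43.11/24", "172.25.1.1/24", "192.168.3.201/24"]


def overlapping(pool_cidr: str, host_addrs: list[str]) -> list[str]:
    """Return the host addresses whose networks overlap the pod IP pool."""
    pool = ipaddress.ip_network(pool_cidr)
    return [a for a in host_addrs if ipaddress.ip_interface(a).network.overlaps(pool)]


if __name__ == "__main__":
    for cidr in ("10.244.0.0/16", "192.168.0.0/16"):
        print(cidr, "overlaps:", overlapping(cidr, HOST_ADDRS) or "none")
```

The 10.244.0.0/16 pool used earlier has no overlap. 192.168.0.0/16 overlaps both 192.168.x.x host networks.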

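[kubeadm@server1 mainfest]$ kubectl apply -f calico.yaml

Re-apply the flannel network plugin. First delete calico and move its CNI configuration out of /etc/cni/net.d, then apply kube-flannel.yml again: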

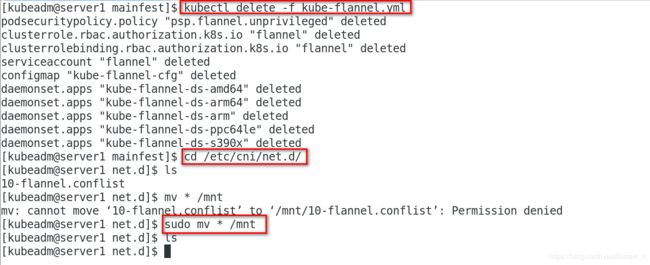

[kubeadm@server1 mainfest]$ kubectl delete -f calico.yaml

[kubeadm@server1 ~]$ cd /etc/cni/net.d/

[kubeadm@server1 net.d]$ sudo mv * /mnt

[kubeadm@server1 mainfest]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created