Boosting blog view counts with Python 3 (two approaches)

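(I am writing this post with some trepidation.)

As a beginner who knows little about web scraping but finds it fascinating, I keep exploring what scrapers can be used for. This post shares how to raise the view count of a given CSDN blog with Python. I have tested two approaches myself; each has its pros and cons, described below.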

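1. Approach 1

This approach first scrapes IP addresses from http://www.xicidaili.com/ and then uses them to visit the target blog posts. Its advantage is that the visits happen without opening a browser. Its drawback is that it only raises each article's read count, not the blog's overall visit count. The code is as follows: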

import re
import time
import urllib.request

import requests
from bs4 import BeautifulSoup

# Browser-like User-Agent sent with every request.
firefoxHead = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:61.0) Gecko/20100101 Firefox/61.0"}
# Dotted-quad IPv4 pattern. The dot is escaped so that it only matches a literal ".".
IPRegular = r"(([1-9]?\d|1\d{2}|2[0-4]\d|25[0-5])\.){3}([1-9]?\d|1\d{2}|2[0-4]\d|25[0-5])"
host = "https://blog.csdn.net"
url = "https://blog.csdn.net/weixin_41783077/article/details/{}"
# IDs of the articles to visit.
codes = ["82983127", "82990355", "82990200", "82658116", "82020121", "82990050", "82982895", "82982662", "82658116",
         "82982393", "82872754", "82726306", "82222074", "82019494", "80894466", "80895722",
         "80895400", "80895382", "80895361", "80895331", "80895117"]

def parseIPList(url="http://www.xicidaili.com/"):
    """Scrape the proxy listing page and return the IP addresses found in its table cells."""
    IPs = []
    request = urllib.request.Request(url, headers=firefoxHead)
    response = urllib.request.urlopen(request)
    soup = BeautifulSoup(response, "lxml")
    tds = soup.find_all("td")
    for td in tds:
        string = str(td.string)
        # Keep only the cells whose text contains an IP address.
        if re.search(IPRegular, string):
            IPs.append(string)
    return IPs

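def PV(IP):
    """Visit every article once in a session configured with the given proxy IP."""
    s = requests.Session()
    s.headers.update(firefoxHead)
    # Port 8080 is assumed for every proxy. Note that requests only applies a proxy
    # to URLs whose scheme has an entry in this dict, so with just an "http" entry
    # the https:// URLs below are not routed through the proxy.
    s.proxies = {"http": "{}:8080".format(IP)}
    for i in range(len(codes)):
        print("No.{}\t".format(i), end="\t")
        s.get(host)
        r = s.get(url.format(codes[i]))
        html = r.text
        soup = BeautifulSoup(html, "html.parser")
        spans = soup.find_all("span")
        # Print the text of the third <span> on the page as a progress indicator.
        print(spans[2].string)
        time.sleep(2)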

def main():

IPs = parseIPList()

for i in range(len(IPs)):

print("正在进行第{}次访问\t".format(i),end="\t")

PV(IPs[i])

if __name__ == "__main__":

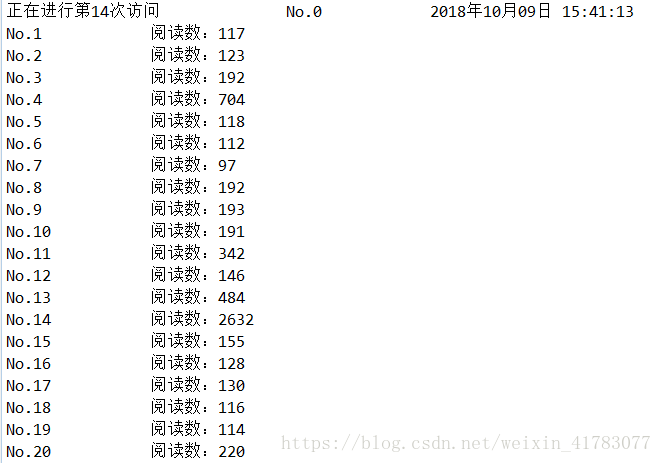

main()运行界面如下:
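The IP filter in parseIPList depends on the regular expression stored in IPRegular. As a minimal sketch (the helper name is_ipv4, the two compiled patterns, and the sample strings are my own illustrations, not part of the original script), the snippet below shows why the dots in such a pattern should be escaped: with an unescaped ".", a string like "1a2b3c4" would also pass as an IP address.

```python
import re

# One IPv4 octet: 0-255.
OCTET = r"(?:25[0-5]|2[0-4]\d|1\d{2}|[1-9]?\d)"

# STRICT escapes the dot, LOOSE leaves it as "any character".
STRICT = re.compile(r"(?:{0}\.){{3}}{0}".format(OCTET))
LOOSE = re.compile(r"(?:{0}.){{3}}{0}".format(OCTET))


def is_ipv4(text, pattern=STRICT):
    """Return True if the whole string matches the given IPv4 pattern."""
    return pattern.fullmatch(text) is not None


print(is_ipv4("192.168.1.1"))     # True
print(is_ipv4("256.1.1.1"))       # False
print(is_ipv4("1a2b3c4"))         # False
print(is_ipv4("1a2b3c4", LOOSE))  # True: the unescaped dot accepts any character
```

Using fullmatch here rejects any cell that contains extra text around the address. Whether that is desirable depends on how the listing page formats its table cells.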

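2. Approach 2

This approach visits the posts from your local IP and needs a browser to open the target pages. Its advantage is stability: it raises both the article read count and the overall visit count. Its drawback is that your browser keeps opening and closing windows, so it is best used when you are not doing anything else on the computer. The code is as follows: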

# -*- coding: utf-8 -*-
import webbrowser as web
import time
import os

urllist = [
    'https://blog.csdn.net/weixin_41783077/article/details/82983127',
    'https://blog.csdn.net/weixin_41783077/article/details/82990355',
    'https://blog.csdn.net/weixin_41783077/article/details/82990200',
    'https://blog.csdn.net/weixin_41783077/article/details/82658116',
    'https://blog.csdn.net/weixin_41783077/article/details/82020121',
    'https://blog.csdn.net/weixin_41783077/article/details/82990050',
    'https://blog.csdn.net/weixin_41783077/article/details/82982895',
    'https://blog.csdn.net/weixin_41783077/article/details/82982662',
    'https://blog.csdn.net/weixin_41783077/article/details/82658116',
    'https://blog.csdn.net/weixin_41783077/article/details/82982393',
    'https://blog.csdn.net/weixin_41783077/article/details/82872754',
    'https://blog.csdn.net/weixin_41783077/article/details/82726306',
    'https://blog.csdn.net/weixin_41783077/article/details/82222074',
    'https://blog.csdn.net/weixin_41783077/article/details/82019494',
    'https://blog.csdn.net/weixin_41783077/article/details/80894466',
    'https://blog.csdn.net/weixin_41783077/article/details/80895722',
    'https://blog.csdn.net/weixin_41783077/article/details/80895400',
    'https://blog.csdn.net/weixin_41783077/article/details/80895382',
    'https://blog.csdn.net/weixin_41783077/article/details/80895361',
    'https://blog.csdn.net/weixin_41783077/article/details/80895331',
    'https://blog.csdn.net/weixin_41783077/article/details/80895117'
]

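for j in range(0, 100000):  # total number of rounds
    i = 0
    while i < 1:  # number of passes per browser session
        for url in urllist:
            # Open the URL in the default browser. Signature: webbrowser.open(url, new=0, autoraise=True).
            # new=0 reuses the same window if possible, new=1 opens a new window, new=2 opens a new tab.
            web.open(url)
            i = i + 1
            time.sleep(2)  # wait after opening each page
    else:
        time.sleep(5)  # wait before closing the browser
        # Windows only: close your default browser (Chrome here); change the process name for another browser.
        os.system('taskkill /IM chrome.exe')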

When the code runs, your browser opens and closes automatically.
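Both approaches target the same articles, so the long urllist could also be generated from the article IDs instead of being typed out by hand. A minimal sketch, assuming a hypothetical helper build_urls (not in the original script) and using only a few of the IDs for brevity; as a design choice of this sketch, repeated IDs are dropped while the original order is kept:

```python
# URL pattern taken from approach 1; "{}" is replaced by the article ID.
TEMPLATE = "https://blog.csdn.net/weixin_41783077/article/details/{}"


def build_urls(ids, template=TEMPLATE):
    """Return one URL per distinct article ID, preserving first-seen order."""
    unique_ids = list(dict.fromkeys(ids))  # dicts keep insertion order
    return [template.format(article_id) for article_id in unique_ids]


# Sample input with one deliberate repeat.
sample_ids = ["82983127", "82658116", "82658116"]
urllist = build_urls(sample_ids)
for u in urllist:
    print(u)
```

If the repeated ID in the original list is intentional, the dict.fromkeys step can simply be removed.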

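Addendum: some readers may wonder whether shortening the wait times would speed things up. It does not: if the visits come too quickly, the server does not increase the article's read count. As for the best interval, my own testing suggests roughly one visit per minute, but feel free to experiment yourselves.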
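To relate this observation to the loop in approach 2, the time one full pass takes can be estimated from the sleep values. A rough sketch, assuming the 2-second sleep runs once per URL and the 5-second sleep once per pass; the function names are hypothetical, and the time the browser itself needs to load pages is ignored:

```python
def pass_duration(n_urls, per_url_delay=2.0, close_delay=5.0):
    """Seconds spent sleeping during one pass over all URLs."""
    return n_urls * per_url_delay + close_delay


def extra_wait(n_urls, target_interval=60.0, per_url_delay=2.0, close_delay=5.0):
    """Additional seconds per pass needed so each URL is revisited no sooner than target_interval."""
    return max(0.0, target_interval - pass_duration(n_urls, per_url_delay, close_delay))


print(pass_duration(21))  # 47.0
print(extra_wait(21))     # 13.0
```

With the 21 URLs listed above, one pass therefore takes about 47 seconds of sleeping, a little under the one-minute interval mentioned in the addendum.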