Flink on zeppelin 实时写入hive

概述

随着Flink1.11.0版本的发布,一个很重要的特性就是支持了流数据直接写入到hive中,用户可以非常方便的用SQL的方式把kafka的数据直接写入到hive里面.这篇文章会给出Flink on zeppelin里面实现流式写入hive的简单示例以及遇到问题的解决方案

Streaming Writing

先来看一下官网对hive streaming writing的介绍.

streaming writing是基于Filesystem streaming sink实现了,当然也就支持端到端exactly-once语义了.从1.11开始,用户可以在 Table API/SQL 和 SQL Client 中使用 Hive 语法(HiveQL)来编写 SQL 语句。为了支持这一特性,Flink 引入了一种新的 SQL 方言,用户可以动态的为每一条语句选择使用Flink(default)或Hive(hive)方法。可以通过SET table.sql-dialect=hive;或者SET table.sql-dialect=default; 来设置.

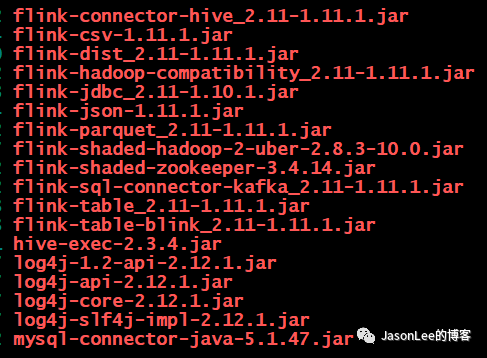

添加依赖

这里还是要强调一下,用不到的jar包不要随便添加,很容易会造成jar包的冲突,报各种乱七八糟的错.只添加需要用的即可.

创建表

1,创建kafka的流表

%flink.ssql

DROP TABLE IF EXISTS kafka_table;

CREATE TABLE kafka_table (

name VARCHAR COMMENT '姓名',

age int COMMENT '年龄',

city VARCHAR,

borth VARCHAR,

ts BIGINT COMMENT '时间戳',

t as TO_TIMESTAMP(FROM_UNIXTIME(ts/1000,'yyyy-MM-dd HH:mm:ss')),

proctime as PROCTIME(),

WATERMARK FOR t AS t - INTERVAL '5' SECOND

)

WITH (

'connector' = 'kafka', -- 使用 kafka connector

'topic' = 'jason_flink', -- kafka topic

'properties.bootstrap.servers' = 'master:9092,storm1:9092,storm2:9092', -- broker连接信息

'properties.group.id' = 'jason_flink_test',

'scan.startup.mode' = 'latest-offset', -- 读取数据的位置

'format' = 'json' -- 数据源格式为 json

);

2,创建hive表

在创建hive表之前需要先把方言设置成hive的,也就是先执行

SET table.sql-dialect=hive; 但是目前zeppelin应该是还不支持直接执SQL语句,还需要写scala的代码. 但是Flink的sql-client里面是可以直接执行的.

%flink

stenv.getConfig().setSqlDialect(SqlDialect.HIVE)

这里我就只创建了一个天级别的分区,分区提交策略使用的是默认的

%flink.ssql

DROP TABLE IF EXISTS fs_table;

CREATE TABLE fs_table (

name STRING,

age int,

dt STRING

) PARTITIONED BY (dt STRING) STORED AS PARQUET TBLPROPERTIES (

'sink.partition-commit.delay'='1s',

'sink.partition-commit.policy.kind'='metastore,success-file',

'sink.rolling-policy.check-interval'='1min'

);

hive streaming sink还引入了新的机制:Partition commit。

一个合理的数仓的数据导入,它不止包含数据文件的写入,也包含了 Partition 的可见性提交。当某个 Partition 完成写入时,需要通知 Hive metastore 或者在文件夹内添加 SUCCESS 文件。Flink 1.11 的 Partition commit 机制可以让你:

-

Trigger:控制Partition提交的时机,可以根据Watermark加上从Partition中提取的时间来判断,也可以通过Processing time来判断。你可以控制:是想先尽快看到没写完的Partition;还是保证写完Partition之后,再让下游看到它。

-

Policy:提交策略,内置支持SUCCESS文件和Metastore的提交,你也可以扩展提交的实现,比如在提交阶段触发Hive的analysis来生成统计信息,或者进行小文件的合并等等。

这里还有几个参数需要说明一下:

sink.partition-commit.trigger:触发分区提交的时间特征。默认为 processing-time,即处理时间,但是在有延迟的情况下,可能会造成数据分区错乱。所以你可以使用 partition-time,即按照分区时间戳(使用partition中抽取时间,加上watermark决定partiton commit的时机 即分区内数据对应的事件时间)来提交。

partition.time-extractor.timestamp-pattern:分区时间戳的抽取格式。需要写成 yyyy-MM-dd HH:mm:ss 的形式,并用 Hive 表中相应的分区字段做占位符替换。显然,Hive 表的分区字段值来自流表中定义好的事件时间,timestamp-pattern 会从你定义的partition 里面推断出完整的timestamp

sink.partition-commit.delay:触发分区提交的延迟。在时间特征设为 partition-time 的情况下,当水印时间戳大于分区创建时间加上此延迟时(当 watermark > partition时间 + 1小时,会commit这个partition),分区才会真正提交。此值最好与分区粒度相同,例如若 Hive 表按1小时分区,此参数可设为 1 h,若按 10 分钟分区,可设为 10 min。

sink.partition-commit.policy.kind:分区提交策略,可以理解为使分区对下游可见的附加操作。metastore 表示更新 Hive Metastore 中的表元数据, success-file 则表示在分区内创建 _SUCCESS 标记文件(先更新metastore(addPartition),再写SUCCESS文件)。只有创建了success文件标识,hive里面的数据才真正可见.

执行插入hive表SQL

%flink.ssql(type=update,parallelism=8)

INSERT INTO fs_table SELECT name, age, FROM_UNIXTIME(ts/1000,'yyyy-MM-dd') FROM kafka_table where name = 'jason';

然后来看下Flink的WEB UI上任务的DAG如下图所示

从UI上可以看到有数据进来了,然后来看一下HDFS对应的路径下是否有文件产生.

这里产生了success文件,就是上面说的分区提交策略,这个时候hive里面的数据就是可见的了.

为了保险起见我们再到hive里面查一下数据验证一下,看看能不能查到数据

hive> show tables;

OK

fs_table

kafka_table

Time taken: 1.648 seconds, Fetched: 2 row(s)

hive> select * from fs_table ;

OK

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

jason 1 2020-07-26

jason 1 2020-07-26

jason 1 2020-07-26

jason 1 2020-07-26

jason 1 2020-07-26

Time taken: 3.965 seconds, Fetched: 5 row(s)

数据是没有问题的,我往kafka里面写入了10条数据,但是上面的SQL里面只过滤了name是jason的,所以只有5条.

遇到问题总结

遇到的第一个问题是关于hive的metastore的问题.

Caused by: java.lang.IllegalArgumentException: Embedded metastore is not allowed. Make sure you have set a valid value for hive.metastore.uris

at org.apache.flink.util.Preconditions.checkArgument(Preconditions.java:139) ~[flink-dist_2.11-1.11.1.jar:1.11.1]

at org.apache.flink.table.catalog.hive.HiveCatalog.(HiveCatalog.java:171) ~[flink-connector-hive_2.11-1.11.1.jar:1.11.1]

at org.apache.flink.table.catalog.hive.HiveCatalog.(HiveCatalog.java:157) ~[flink-connector-hive_2.11-1.11.1.jar:1.11.1]

at flink.hive.FlinkHiveDemo$.main(FlinkHiveDemo.scala:40) ~[?:?]

at flink.hive.FlinkHiveDemo.main(FlinkHiveDemo.scala) ~[?:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_111]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_111]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_111]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_111]

at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:288) ~[flink-dist_2.11-1.11.1.jar:1.11.1]

... 13 more

这个报错是因为在Flink1.11.0版本中HiveCatalog已经不允许embedded模式了,所以需要我们自己启动一个独立的metastore server.

下面再简单的说一下hive metastore的配置.

hive server端的配置

javax.jdo.option.ConnectionURL

jdbc:mysql://master:3306/hive?useSSL=false&createDatabaseIfNotExist=true&useUnicode=true&characterEncoding=UTF-8

JDBC connect string for a JDBC metastore

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

Driver class name for a JDBC metastore

javax.jdo.option.ConnectionUserName

****

username to use against metastore database

javax.jdo.option.ConnectionPassword

****

password to use against metastore database

datanucleus.schema.autoCreateAll

true

hive.metastore.warehouse.dir

/hive/warehouse

hive.metastore.schema.verification

false

hive client端的配置

hive.metastore.local

false

hive.metastore.uris

thrift://master:9083

配置完成后启动任务的时候可能还会遇到下面的报错

org.apache.zeppelin.interpreter.InterpreterException: org.apache.zeppelin.interpreter.InterpreterException: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:76)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:760)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:668)

at org.apache.zeppelin.scheduler.Job.run(Job.java:172)

at org.apache.zeppelin.scheduler.AbstractScheduler.runJob(AbstractScheduler.java:130)

at org.apache.zeppelin.scheduler.ParallelScheduler.lambda$runJobInScheduler$0(ParallelScheduler.java:39)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.zeppelin.interpreter.InterpreterException: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:76)

at org.apache.zeppelin.interpreter.Interpreter.getInterpreterInTheSameSessionByClassName(Interpreter.java:355)

at org.apache.zeppelin.interpreter.Interpreter.getInterpreterInTheSameSessionByClassName(Interpreter.java:366)

at org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.open(FlinkStreamSqlInterpreter.java:47)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:70)

... 8 more

Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

at org.apache.flink.table.catalog.hive.client.HiveShimV230.getHiveMetastoreClient(HiveShimV230.java:52)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:240)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.(HiveMetastoreClientWrapper.java:71)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:35)

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:223)

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:191)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.registerCatalog(TableEnvironmentImpl.java:337)

at org.apache.zeppelin.flink.FlinkScalaInterpreter.registerHiveCatalog(FlinkScalaInterpreter.scala:458)

at org.apache.zeppelin.flink.FlinkScalaInterpreter.open(FlinkScalaInterpreter.scala:133)

at org.apache.zeppelin.flink.FlinkInterpreter.open(FlinkInterpreter.java:67)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:70)

... 12 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.flink.table.catalog.hive.client.HiveShimV230.getHiveMetastoreClient(HiveShimV230.java:50)

... 22 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1708)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.(RetryingMetaStoreClient.java:83)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:133)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:89)

... 27 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1706)

... 30 more

Caused by: MetaException(message:Could not connect to meta store using any of the URIs provided. Most recent failure: org.apache.thrift.transport.TTransportException: java.net.ConnectException: Connection refused (Connection refused)

at org.apache.thrift.transport.TSocket.open(TSocket.java:226)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:480)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.(HiveMetaStoreClient.java:247)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1706)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.(RetryingMetaStoreClient.java:83)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:133)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:89)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.flink.table.catalog.hive.client.HiveShimV230.getHiveMetastoreClient(HiveShimV230.java:50)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:240)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.(HiveMetastoreClientWrapper.java:71)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:35)

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:223)

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:191)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.registerCatalog(TableEnvironmentImpl.java:337)

at org.apache.zeppelin.flink.FlinkScalaInterpreter.registerHiveCatalog(FlinkScalaInterpreter.scala:458)

at org.apache.zeppelin.flink.FlinkScalaInterpreter.open(FlinkScalaInterpreter.scala:133)

at org.apache.zeppelin.flink.FlinkInterpreter.open(FlinkInterpreter.java:67)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:70)

at org.apache.zeppelin.interpreter.Interpreter.getInterpreterInTheSameSessionByClassName(Interpreter.java:355)

at org.apache.zeppelin.interpreter.Interpreter.getInterpreterInTheSameSessionByClassName(Interpreter.java:366)

at org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.open(FlinkStreamSqlInterpreter.java:47)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:70)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:760)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:668)

at org.apache.zeppelin.scheduler.Job.run(Job.java:172)

at org.apache.zeppelin.scheduler.AbstractScheduler.runJob(AbstractScheduler.java:130)

at org.apache.zeppelin.scheduler.ParallelScheduler.lambda$runJobInScheduler$0(ParallelScheduler.java:39)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at org.apache.thrift.transport.TSocket.open(TSocket.java:221)

... 37 more

)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:529)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.(HiveMetaStoreClient.java:247)

... 35 more

这个报错是因为你虽然配置了hive的metastore,但是没有启动服务.在你配置的服务端执行hive --service metastore 启动就可以了.

这篇文章主要是介绍了在Flink on zeppelin里面流式的写入数据到hive里,以及中间遇到问题的解决方法,同样的你也可以在IDEA里面写代码或者用sql-client去提交这样的任务如果你没有装zeppelin的话.Flink还支持了流式读取hive表,这个暂时就先不介绍了.

推荐阅读

Flink on zeppelin第三弹UDF的使用

JasonLee,公众号:JasonLee的博客Flink on zeppelin第三弹UDF的使用

Flink on zeppelin 实时计算pv,uv结果写入mysql

JasonLee,公众号:JasonLee的博客Flink on zeppelin体验第二弹

Flink on zepplien的安装配置

JasonLee,公众号:JasonLee的博客Flink on zepplien的使用体验

更多spark和flink的内容可以关注下面的公众号

![]()