Python计算机视觉——SIFT描述子

目录

- 1 SIFT描述子

- 1.1SIFT描述子简介

- 1.2 SIFT算法实现步骤简述

- 1.3 SIFT算法可以解决的问题

- 2 关键点检测

- 2.1SIFT要查找的关键点

- 2.2关键点检测的相关概念

- 2.2.1尺度空间

- 2.2.2高斯模糊

- 2.2.3高斯金子塔

- 2.3关键点检测——DOG

- 2.4关键点方向分配

- 2.5关键点匹配

- 2.6代码实现

- 2.6.1关键点检测

- 2.6.2 描述子匹配

- 2.7实验结果分析

- 2.8实现数据集中查找匹配数高的图片

- 2.9 地理标记图像匹配

- 2.9.1 用局部描述子进行匹配

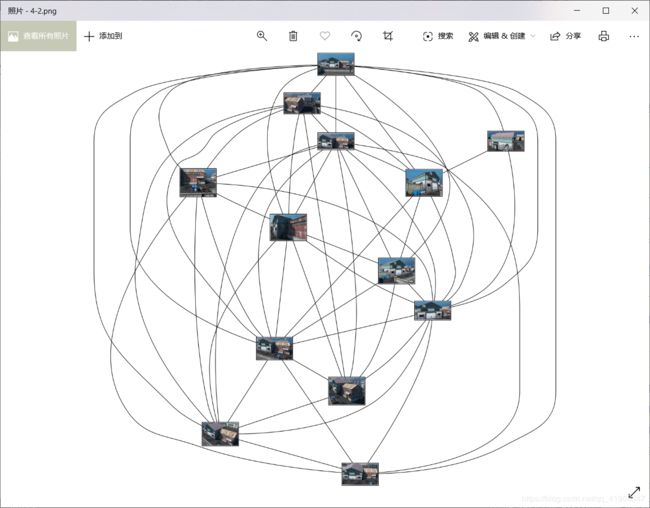

- 2.9.2可视化接连的图片

- 2.9.3完整可视化输出

- 2.9.4实验结果分析

- 3 RANSAC算法筛选SIFT特征匹配

- 3.1RANSAC算法简介

- 3.2RANSAC算法原理

- 3.3RANSAC算法流程在SIFT特征筛选中的主要流程

- 3.4代码实现

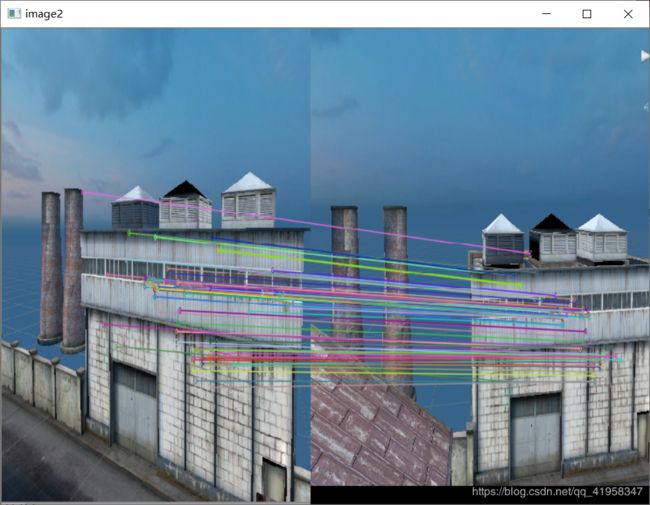

- 3.5实验结果

- 3.6实验结果分析

1 SIFT描述子

1.1SIFT描述子简介

SIFT,即尺度不变特征变换(Scale-invariant feature transform,SIFT),是用于图像处理领域的一种描述。这种描述具有尺度不变性,可在图像中检测出关键点,是一种局部特征描述子。

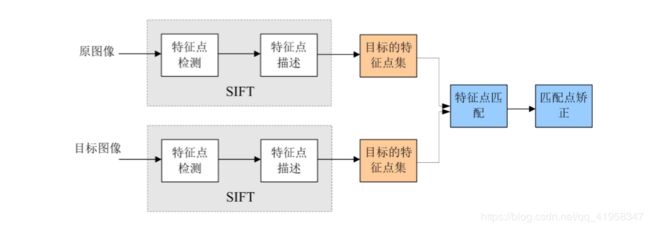

1.2 SIFT算法实现步骤简述

SIFT算法实现特征匹配主要有三个流程,1、提取关键点;2、对关键点附加 详细的信息(局部特征),即描述符;3、通过特征点(附带上特征向量的关 键点)的两两比较找出相互匹配的若干对特征点,建立景物间的对应关系。

SIFT算法实现特征匹配主要有三个流程,1、提取关键点;2、对关键点附加 详细的信息(局部特征),即描述符;3、通过特征点(附带上特征向量的关 键点)的两两比较找出相互匹配的若干对特征点,建立景物间的对应关系。

1.3 SIFT算法可以解决的问题

1 目标的旋转、缩放、平移(RST)

2 图像仿射/投影变换(视点viewpoint)

3 弱光照影响(illumination)

4 部分目标遮挡(occlusion)

5 杂物场景(clutter)

6 噪声

2 关键点检测

2.1SIFT要查找的关键点

是一些十分突出的点不会因光照、尺度、旋转等因素的改变而消失,比如角点、边缘点、暗区域的亮点以及亮区域的暗点。既然两幅图像中有相同的景物,那么使用某种方法分别提取各自的稳定点,这些点之间会有相互对应的匹配点。

2.2关键点检测的相关概念

2.2.1尺度空间

主要思想是通过 对原始图像进行尺度变换,获得图像多尺度下的空间表示。从而实现边缘、角点检测和不同分辨率上的特征提取,以满足特征点的尺度不变性。

尺度空间中各尺度图像的 模糊程度逐渐变大,能够模拟人在距离目标由近到远时目标在视网膜上的形成过程尺度越大图像越模糊。

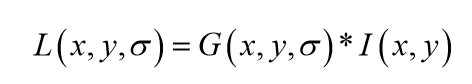

2.2.2高斯模糊

高斯模糊是在Adobe Photoshop等图像处理软件中广泛使用的处理 效果,通常用它来减小图像噪声以及降低细节层次。这种模糊技术生成 的图像的视觉效果是好像经过一个半透明的屏幕观察图像。可以用高斯模糊来处理图像,得到图像的边缘信息与整体信息。

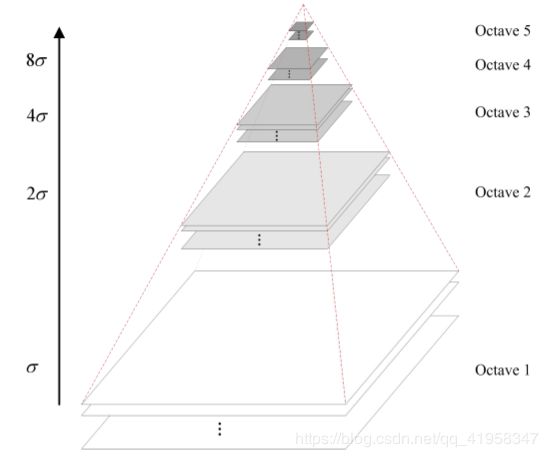

2.2.3高斯金子塔

高斯金子塔的构建过程可分为两步:

(1)对图像做高斯平滑;

(2)对图像做降采样。

为了让尺度体现其连续性,在简单

下采样的基础上加上了高斯滤波。

一幅图像可以产生几组(octave)

图像,一组图像包括几层

(interval)图像。

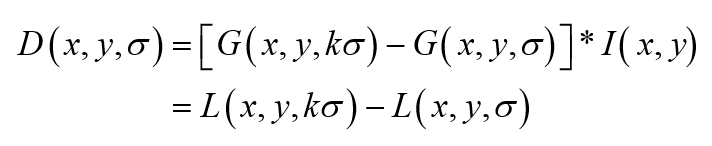

2.3关键点检测——DOG

DoG(Difference of Gaussian)函数

DoG在计算上只需相邻高斯平滑后图像相减,因此简化了计算!

DOG局部极值检测

特征点是由DOG空间的局部极值点组成的。为了寻找DoG函数的极值点, 每一个像素点要和它所有的相邻点比较,看其是否比它的图像域和尺度域 的相邻点大或者小

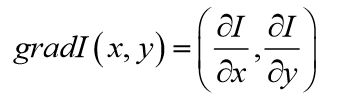

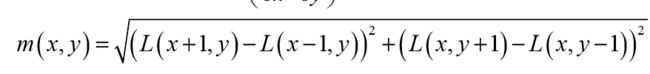

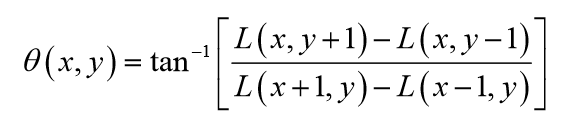

2.4关键点方向分配

通过尺度不变性求极值点,可以使其具有缩放不变的性质。而利 用关键点邻域像素的梯度方向分布特性,可以为每个关键点指定方向参数 方向,从而使描述子对图像旋转具有不变性。

通过求每个极值点的梯度来为极值点赋予方向。

像素点的梯度表示

梯度幅值:

梯度幅值: 梯度方向:

梯度方向:

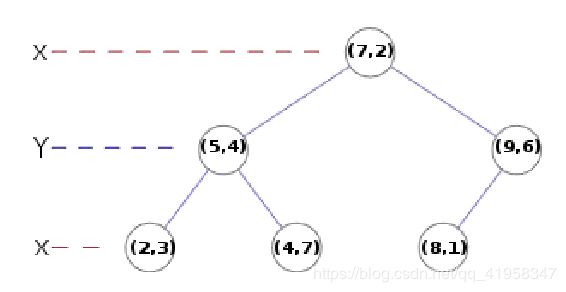

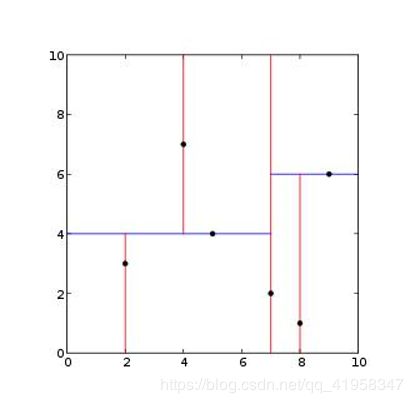

2.5关键点匹配

关键点的匹配可以采用穷举法来完成,但是这样耗费的时间太多,一 般都采用kd树的数据结构来完成搜索。搜索的内容是以目标图像的关 键点为基准,搜索与目标图像的特征点最邻近的原图像特征点和次邻 近的原图像特征点。Kd树是一个平衡二叉树

2.6代码实现

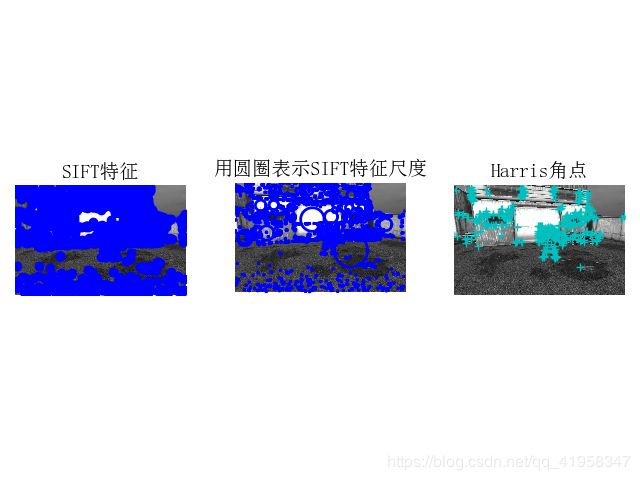

2.6.1关键点检测

# -*- coding: utf-8 -*-

from PIL import Image

from pylab import *

from PCV.localdescriptors import sift

from PCV.localdescriptors import harris

# 添加中文字体支持

from matplotlib.font_manager import FontProperties

font = FontProperties(fname=r"c:\windows\fonts\SimSun.ttc", size=14)

imname = 'E:/picture/02/2.png'

im = array(Image.open(imname).convert('L'))

sift.process_image(imname, 'empire.sift')

l1, d1 = sift.read_features_from_file('empire.sift')

figure()

gray()

subplot(131)

sift.plot_features(im, l1, circle=False)

title(u'SIFT特征',fontproperties=font)

subplot(132)

sift.plot_features(im, l1, circle=True)

title(u'用圆圈表示SIFT特征尺度',fontproperties=font)

# 检测harris角点

harrisim = harris.compute_harris_response(im)

subplot(133)

filtered_coords = harris.get_harris_points(harrisim, 6, 0.1)

imshow(im)

plot([p[1] for p in filtered_coords], [p[0] for p in filtered_coords], '+c')

axis('off')

title(u'Harris角点',fontproperties=font)

show()

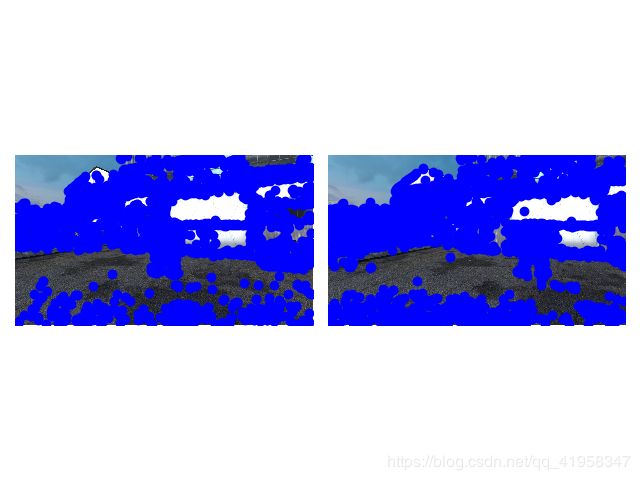

2.6.2 描述子匹配

from PIL import Image

from pylab import *

import sys

from PCV.localdescriptors import sift

if len(sys.argv) >= 3:

im1f, im2f = sys.argv[1], sys.argv[2]

else:

# im1f = '../data/sf_view1.jpg'

# im2f = '../data/sf_view2.jpg'

im1f = 'E:/picture/02/1-1.png'

im2f = 'E:/picture/02/1-3.png'

# im1f = '../data/climbing_1_small.jpg'

# im2f = '../data/climbing_2_small.jpg'

im1 = array(Image.open(im1f))

im2 = array(Image.open(im2f))

sift.process_image(im1f, 'out_sift_1.txt')

l1, d1 = sift.read_features_from_file('out_sift_1.txt')

figure()

gray()

subplot(121)

sift.plot_features(im1, l1, circle=False)

sift.process_image(im2f, 'out_sift_2.txt')

l2, d2 = sift.read_features_from_file('out_sift_2.txt')

subplot(122)

sift.plot_features(im2, l2, circle=False)

#matches = sift.match(d1, d2)

matches = sift.match_twosided(d1, d2)

print ('{} matches'.format(len(matches.nonzero()[0])))

figure()

gray()

sift.plot_matches(im1, im2, l1, l2, matches, show_below=True)

show()

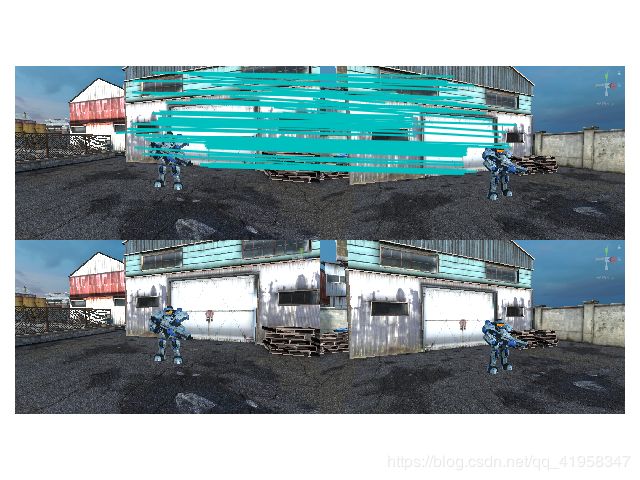

2.7实验结果分析

由实验结果可看出,SIFT点的特点为

1.视角和旋转变化不变性

2.光照不变性

3.尺度不变性

但是在实验过程中发现对模糊的图像和边缘平滑的图像,检测出的特征点过少,对圆更是无能为力

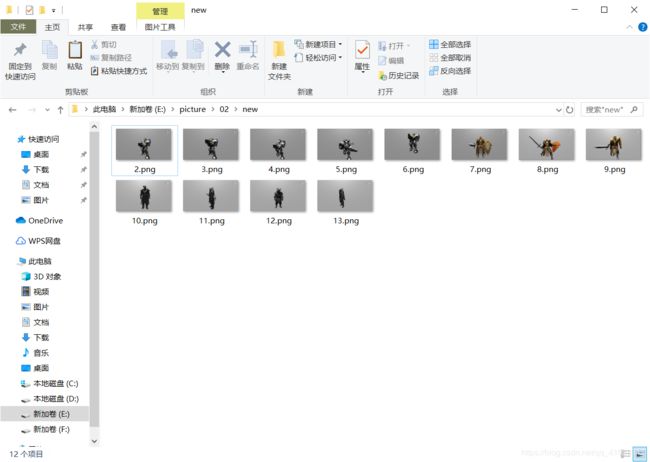

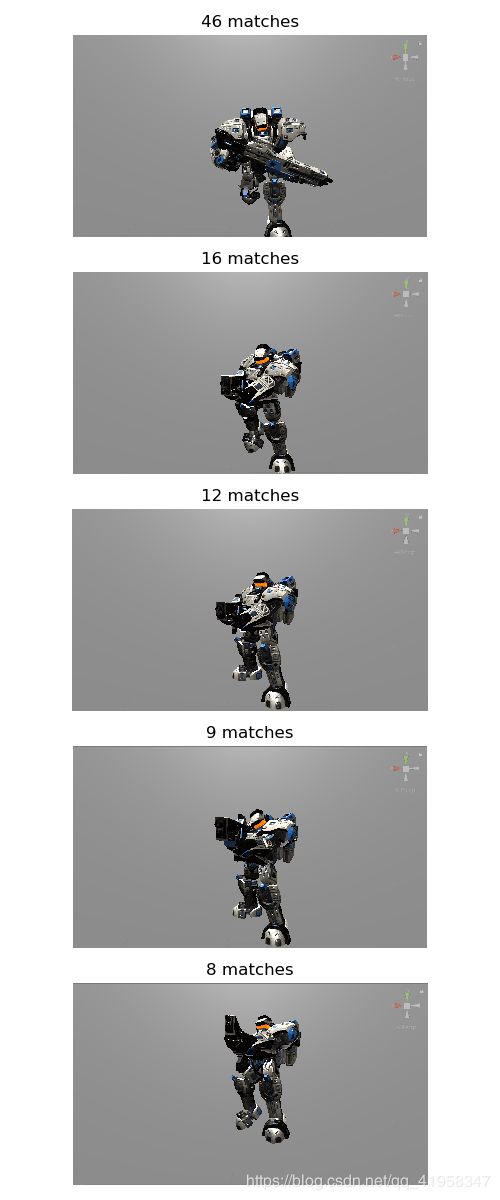

2.8实现数据集中查找匹配数高的图片

代码

from PIL import Image

from pylab import *

from PCV.localdescriptors import sift

import matplotlib.pyplot as plt # plt 用于显示图片

im1f = 'E:/picture/02/new/1.png'

im1 = array(Image.open(im1f))

sift.process_image(im1f, 'out_sift_1.txt')

l1, d1 = sift.read_features_from_file('out_sift_1.txt')

arr=[]#单维链表数组

arrHash = {}#字典型数组

for i in range(2,7):

im2f = 'E:/picture/02/new/'+str(i)+'.png'

im2 = array(Image.open(im2f))

sift.process_image(im2f, 'out_sift_2.txt')

l2, d2 = sift.read_features_from_file('out_sift_2.txt')

matches = sift.match_twosided(d1, d2)

length=len(matches.nonzero()[0])

length=int(length)

arr.append(length)#添加新的值

arrHash[length]=im2f#添加新的值

arr.sort()#数组排序

arr=arr[::-1]#数组反转

arr=arr[:5]#截取数组元素到第五个

i=0

plt.figure(figsize=(5,12))#设置输出图像的大小

for item in arr:

if(arrHash.get(item)!=None):

img=arrHash.get(item)

im1 = array(Image.open(img))

ax=plt.subplot(511 + i)#设置子团位置

ax.set_title('{} matches'.format(item))#设置子图标题

plt.axis('off')#不显示坐标轴

imshow(im1)

i = i + 1

plt.show()

2.9 地理标记图像匹配

2.9.1 用局部描述子进行匹配

# -*- coding: utf-8 -*-

from pylab import *

from PIL import Image

from PCV.localdescriptors import sift

from PCV.tools import imtools

import pydot

""" This is the example graph illustration of matching images from Figure 2-10.

To download the images, see ch2_download_panoramio.py."""

#download_path = "panoimages" # set this to the path where you downloaded the panoramio images

#path = "/FULLPATH/panoimages/" # path to save thumbnails (pydot needs the full system path)

download_path = "E:/picture/04" # set this to the path where you downloaded the panoramio images

path = "E:/picture/date/" # path to save thumbnails (pydot needs the full system path)

# list of downloaded filenames

imlist = imtools.get_imlist(download_path)

print(imlist)

nbr_images = len(imlist)

# extract features

featlist = [imname[:-3] + 'sift' for imname in imlist]

for i, imname in enumerate(imlist):

sift.process_image(imname, featlist[i])

matchscores = zeros((nbr_images, nbr_images))

for i in range(nbr_images):

for j in range(i, nbr_images): # only compute upper triangle

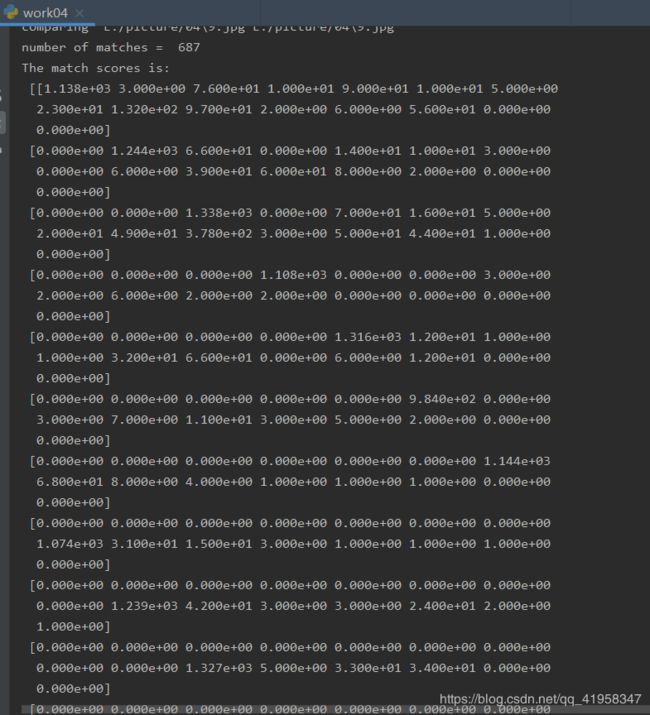

print('comparing ', imlist[i], imlist[j])

l1, d1 = sift.read_features_from_file(featlist[i])

l2, d2 = sift.read_features_from_file(featlist[j])

matches = sift.match_twosided(d1, d2)

nbr_matches = sum(matches > 0)

print('number of matches = ', nbr_matches)

matchscores[i, j] = nbr_matches

print("The match scores is: \n", matchscores)

# copy values

for i in range(nbr_images):

for j in range(i + 1, nbr_images): # no need to copy diagonal

matchscores[j, i] = matchscores[i, j]

2.9.2可视化接连的图片

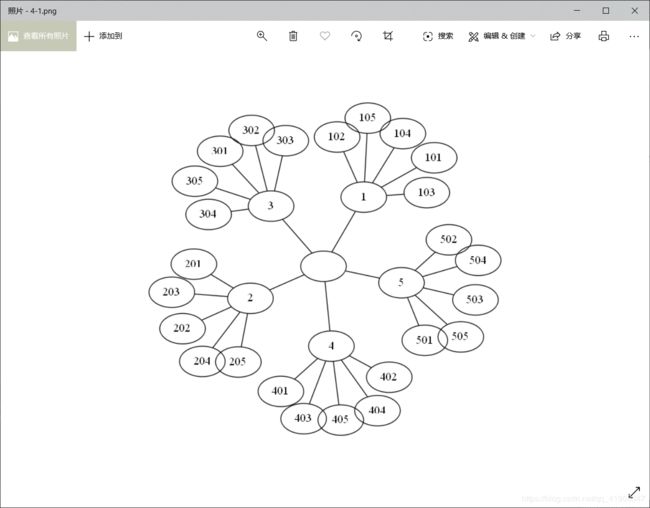

g = pydot.Dot(graph_type='graph')

g.add_node(pydot.Node(str(0), fontcolor='transparent'))

for i in range(5):

g.add_node(pydot.Node(str(i + 1)))

g.add_edge(pydot.Edge(str(0), str(i + 1)))

for j in range(5):

g.add_node(pydot.Node(str(j + 1) + '0' + str(i + 1)))

g.add_edge(pydot.Edge(str(j + 1) + '0' + str(i + 1), str(j + 1)))

g.write_png('E:/picture/date/4-1.png', prog='neato')

2.9.3完整可视化输出

# -*- coding: utf-8 -*-

from pylab import *

from PIL import Image

from PCV.localdescriptors import sift

from PCV.tools import imtools

import pydot

""" This is the example graph illustration of matching images from Figure 2-10.

To download the images, see ch2_download_panoramio.py."""

# download_path = "panoimages" # set this to the path where you downloaded the panoramio images

# path = "/FULLPATH/panoimages/" # path to save thumbnails (pydot needs the full system path)

# download_path = "F:\\dropbox\\Dropbox\\translation\\pcv-notebook\\data\\panoimages" # set this to the path where you downloaded the panoramio images

# path = "F:\\dropbox\\Dropbox\\translation\\pcv-notebook\\data\\panoimages\\" # path to save thumbnails (pydot needs the full system path)

download_path = "E:/picture/04"

path = "E:/picture/04/"

# list of downloaded filenames

imlist = imtools.get_imlist(download_path)

nbr_images = len(imlist)

# extract features

featlist = [imname[:-3] + 'sift' for imname in imlist]

for i, imname in enumerate(imlist):

sift.process_image(imname, featlist[i])

matchscores = zeros((nbr_images, nbr_images))

for i in range(nbr_images):

for j in range(i, nbr_images): # only compute upper triangle

print('comparing ', imlist[i], imlist[j])

l1, d1 = sift.read_features_from_file(featlist[i])

l2, d2 = sift.read_features_from_file(featlist[j])

matches = sift.match_twosided(d1, d2)

nbr_matches = sum(matches > 0)

print('number of matches = ', nbr_matches)

matchscores[i, j] = nbr_matches

print("The match scores is: \n", matchscores)

# np.savetxt(("../data/panoimages/panoramio_matches.txt",matchscores)

# copy values

for i in range(nbr_images):

for j in range(i + 1, nbr_images): # no need to copy diagonal

matchscores[j, i] = matchscores[i, j]

threshold = 20 # min number of matches needed to create link

g = pydot.Dot(graph_type='graph') # don't want the default directed graph

for i in range(nbr_images):

for j in range(i + 1, nbr_images):

if matchscores[i, j] > threshold:

# first image in pair

im = Image.open(imlist[i])

im.thumbnail((100, 100))

filename = path + str(i) + '.png'

im.save(filename) # need temporary files of the right size

g.add_node(pydot.Node(str(i), fontcolor='transparent', shape='rectangle', image=filename))

# second image in pair

im = Image.open(imlist[j])

im.thumbnail((100, 100))

filename = path + str(j) + '.png'

im.save(filename) # need temporary files of the right size

g.add_node(pydot.Node(str(j), fontcolor='transparent', shape='rectangle', image=filename))

g.add_edge(pydot.Edge(str(i), str(j)))

g.write_png('E:/picture/date/4-2.png')

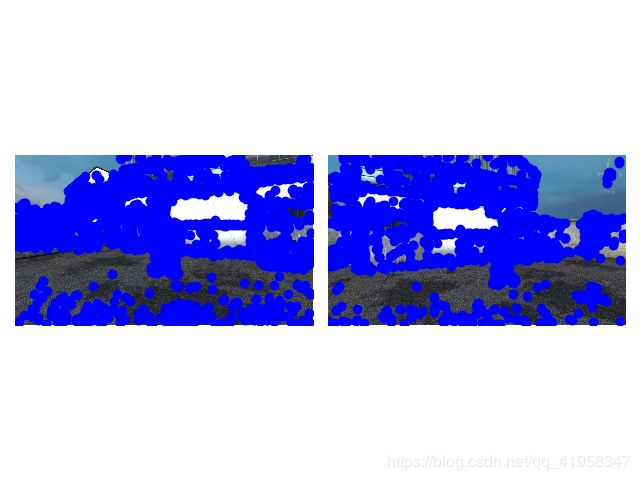

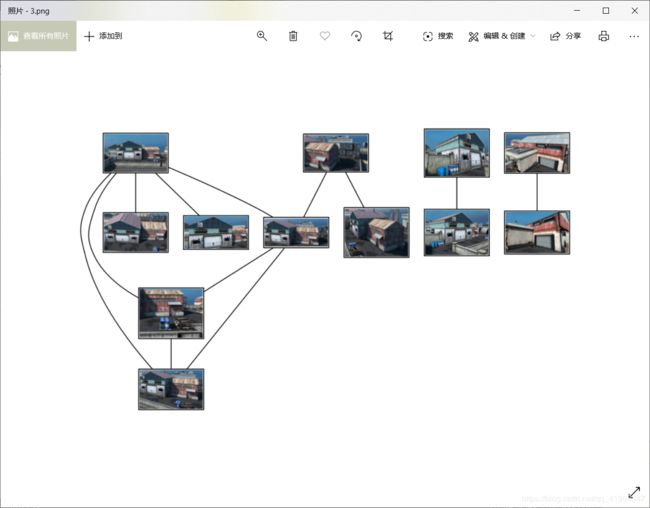

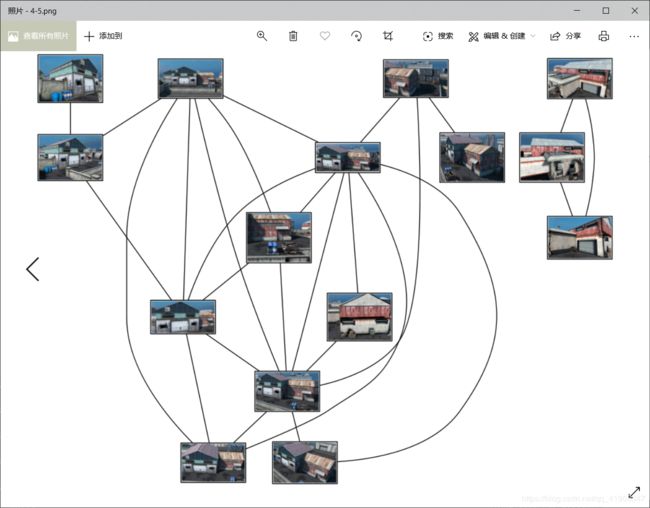

2.9.4实验结果分析

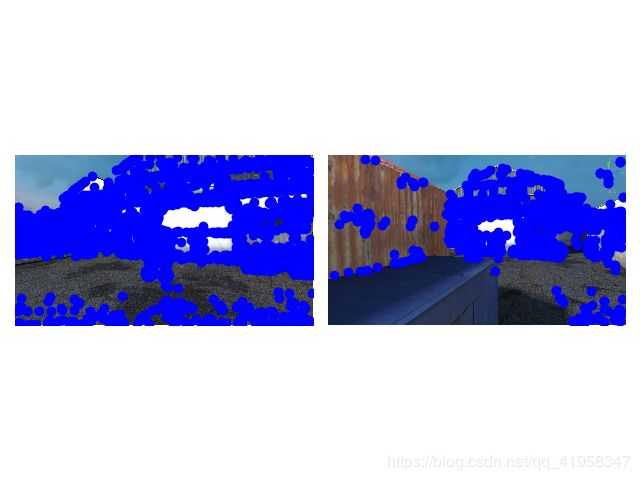

由该图可看出连线较多的都是正面,或者建筑物比较完整的,而局部的建筑物,或者不全的,匹配值mathches就小,所以连线就少。

threshold=50

threshold=20

这是threshold=2

这是threshold=2

相同角度,只是尺度不同,旋转不同,更容易匹配。随着threshold的提高,排除掉了因为少量相似而被认为是相同建筑物的误差。再threshold=50时,发现建筑物侧面的图片不被认为是同一建筑物,所以特征点的匹配数的多少也与角度有关

3 RANSAC算法筛选SIFT特征匹配

3.1RANSAC算法简介

RANSAC(RANdom SAmple Consensus)随机抽样一致算法,是一种在包含离群点在内的数据集里,通过迭代的方式估计模型的参数。举个例子,我们计算单应性矩阵时,初始匹配有很多的误匹配即是一个有离群点的数据集,然后我们估计出单应性矩阵。

RANSAC是一种算法的思路,在计算机视觉中应用较多。它是一种不确定的算法,即有一定的概率得出一个合理的结果,当然也会出现错误的结果。如果要提高概率,一个要提高迭代的次数,在一个就是减少数据集离群点的比例。

RANSAC 在视觉中有很多的应用,比如2D特征点匹配,3D点云匹配,在图片或者点云中识别直线,识别可以参数化的形状。RANSAC还可以用来拟合函数等。

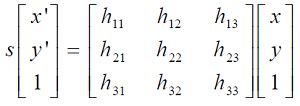

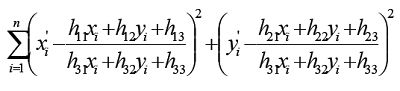

3.2RANSAC算法原理

OpenCV中滤除误匹配对采用RANSAC算法寻找一个最佳单应性矩阵H,矩阵大小为3×3。RANSAC目的是找到最优的参数矩阵使得满足该矩阵的数据点个数最多,通常令h33=1来归一化矩阵。由于单应性矩阵有8个未知参数,至少需要8个线性方程求解,对应到点位置信息上,一组点对可以列出两个方程,则至少包含4组匹配点对。

RANSAC算法从匹配数据集中随机抽出4个样本并保证这4个样本之间不共线,计算出单应性矩阵,然后利用这个模型测试所有数据,并计算满足这个模型数据点的个数与投影误差(即代价函数),若此模型为最优模型,则对应的代价函数最小。

3.3RANSAC算法流程在SIFT特征筛选中的主要流程

RANSAC算法在SIFT特征筛选中的主要流程是:

(1) 从样本集中随机抽选一个RANSAC样本,即4个匹配点对

(2) 根据这4个匹配点对计算变换矩阵M

(3) 根据样本集,变换矩阵M,和误差度量函数计算满足当前变换矩阵的一致集consensus,并返回一致集中元素个数

(4) 根据当前一致集中元素个数判断是否最优(最大)一致集,若是则更新当前最优一致集

(5) 更新当前错误概率p,若p大于允许的最小错误概率则重复(1)至(4)继续迭代,直到当前错误概率p小于最小错误概率

3.4代码实现

# -*- coding: utf-8 -*-

import cv2

import numpy as np

import random

def compute_fundamental(x1, x2):

n = x1.shape[1]

if x2.shape[1] != n:

raise ValueError("Number of points don't match.")

# build matrix for equations

A = np.zeros((n, 9))

for i in range(n):

A[i] = [x1[0, i] * x2[0, i], x1[0, i] * x2[1, i], x1[0, i] * x2[2, i],

x1[1, i] * x2[0, i], x1[1, i] * x2[1, i], x1[1, i] * x2[2, i],

x1[2, i] * x2[0, i], x1[2, i] * x2[1, i], x1[2, i] * x2[2, i]]

# compute linear least square solution

U, S, V = np.linalg.svd(A)

F = V[-1].reshape(3, 3)

# constrain F

# make rank 2 by zeroing out last singular value

U, S, V = np.linalg.svd(F)

S[2] = 0

F = np.dot(U, np.dot(np.diag(S), V))

return F / F[2, 2]

def compute_fundamental_normalized(x1, x2):

""" Computes the fundamental matrix from corresponding points

(x1,x2 3*n arrays) using the normalized 8 point algorithm. """

n = x1.shape[1]

if x2.shape[1] != n:

raise ValueError("Number of points don't match.")

# normalize image coordinates

x1 = x1 / x1[2]

mean_1 = np.mean(x1[:2], axis=1)

S1 = np.sqrt(2) / np.std(x1[:2])

T1 = np.array([[S1, 0, -S1 * mean_1[0]], [0, S1, -S1 * mean_1[1]], [0, 0, 1]])

x1 = np.dot(T1, x1)

x2 = x2 / x2[2]

mean_2 = np.mean(x2[:2], axis=1)

S2 = np.sqrt(2) / np.std(x2[:2])

T2 = np.array([[S2, 0, -S2 * mean_2[0]], [0, S2, -S2 * mean_2[1]], [0, 0, 1]])

x2 = np.dot(T2, x2)

# compute F with the normalized coordinates

F = compute_fundamental(x1, x2)

# print (F)

# reverse normalization

F = np.dot(T1.T, np.dot(F, T2))

return F / F[2, 2]

def randSeed(good, num = 8):

'''

:param good: 初始的匹配点对

:param num: 选择随机选取的点对数量

:return: 8个点对list

'''

eight_point = random.sample(good, num)

return eight_point

def PointCoordinates(eight_points, keypoints1, keypoints2):

'''

:param eight_points: 随机八点

:param keypoints1: 点坐标

:param keypoints2: 点坐标

:return:8个点

'''

x1 = []

x2 = []

tuple_dim = (1.,)

for i in eight_points:

tuple_x1 = keypoints1[i[0].queryIdx].pt + tuple_dim

tuple_x2 = keypoints2[i[0].trainIdx].pt + tuple_dim

x1.append(tuple_x1)

x2.append(tuple_x2)

return np.array(x1, dtype=float), np.array(x2, dtype=float)

def ransac(good, keypoints1, keypoints2, confidence,iter_num):

Max_num = 0

good_F = np.zeros([3,3])

inlier_points = []

for i in range(iter_num):

eight_points = randSeed(good)

x1,x2 = PointCoordinates(eight_points, keypoints1, keypoints2)

F = compute_fundamental_normalized(x1.T, x2.T)

num, ransac_good = inlier(F, good, keypoints1, keypoints2, confidence)

if num > Max_num:

Max_num = num

good_F = F

inlier_points = ransac_good

print(Max_num, good_F)

return Max_num, good_F, inlier_points

def computeReprojError(x1, x2, F):

"""

计算投影误差

"""

ww = 1.0/(F[2,0]*x1[0]+F[2,1]*x1[1]+F[2,2])

dx = (F[0,0]*x1[0]+F[0,1]*x1[1]+F[0,2])*ww - x2[0]

dy = (F[1,0]*x1[0]+F[1,1]*x1[1]+F[1,2])*ww - x2[1]

return dx*dx + dy*dy

def inlier(F,good, keypoints1,keypoints2,confidence):

num = 0

ransac_good = []

x1, x2 = PointCoordinates(good, keypoints1, keypoints2)

for i in range(len(x2)):

line = F.dot(x1[i].T)

#在对极几何中极线表达式为[A B C],Ax+By+C=0, 方向向量可以表示为[-B,A]

line_v = np.array([-line[1], line[0]])

err = h = np.linalg.norm(np.cross(x2[i,:2], line_v)/np.linalg.norm(line_v))

# err = computeReprojError(x1[i], x2[i], F)

if abs(err) < confidence:

ransac_good.append(good[i])

num += 1

return num, ransac_good

if __name__ =='__main__':

im1 = 'E:/picture/9/1.jpg'

im2 = 'E:/picture/9/2.jpg'

print(cv2.__version__)

psd_img_1 = cv2.imread(im1, cv2.IMREAD_COLOR)

psd_img_2 = cv2.imread(im2, cv2.IMREAD_COLOR)

# 3) SIFT特征计算

sift = cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(psd_img_1, None)

kp2, des2 = sift.detectAndCompute(psd_img_2, None)

# FLANN 参数设计

match = cv2.BFMatcher()

matches = match.knnMatch(des1, des2, k=2)

# Apply ratio test

# 比值测试,首先获取与 A距离最近的点 B (最近)和 C (次近),

# 只有当 B/C 小于阀值时(0.75)才被认为是匹配,

# 因为假设匹配是一一对应的,真正的匹配的理想距离为0

good = []

for m, n in matches:

if m.distance < 0.75 * n.distance:

good.append([m])

print(good[0][0])

print("number of feature points:",len(kp1), len(kp2))

print(type(kp1[good[0][0].queryIdx].pt))

print("good match num:{} good match points:".format(len(good)))

for i in good:

print(i[0].queryIdx, i[0].trainIdx)

Max_num, good_F, inlier_points = ransac(good, kp1, kp2, confidence=30, iter_num=500)

# cv2.drawMatchesKnn expects list of lists as matches.

# img3 = np.ndarray([2, 2])

# img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good[:10], img3, flags=2)

# cv2.drawMatchesKnn expects list of lists as matches.

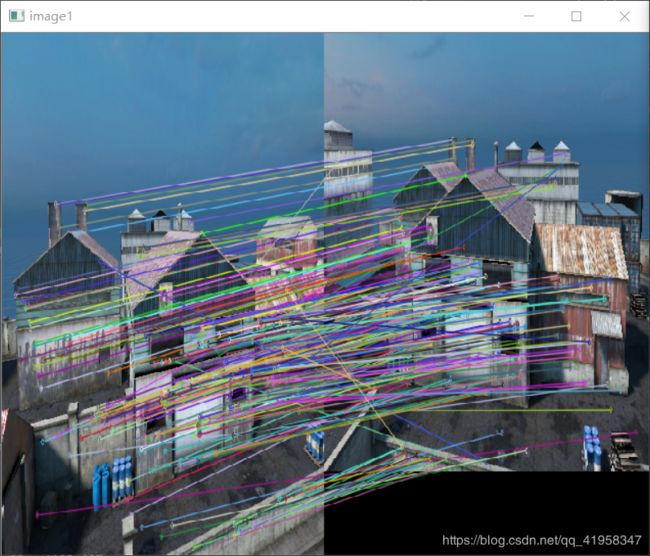

img3 = cv2.drawMatchesKnn(psd_img_1,kp1,psd_img_2,kp2,good,None,flags=2)

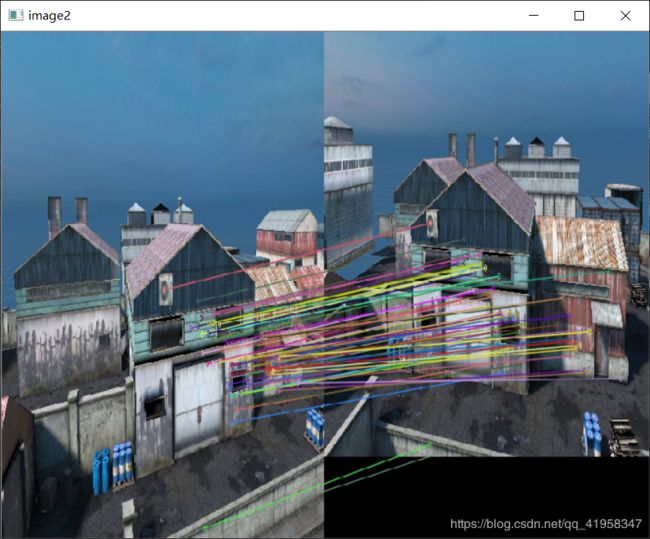

img4 = cv2.drawMatchesKnn(psd_img_1,kp1,psd_img_2,kp2,inlier_points,None,flags=2)

cv2.namedWindow('image1', cv2.WINDOW_NORMAL)

cv2.namedWindow('image2', cv2.WINDOW_NORMAL)

cv2.imshow("image1",img3)

cv2.imshow("image2",img4)

cv2.waitKey(0)#等待按键按下

cv2.destroyAllWindows()#清除所有窗口

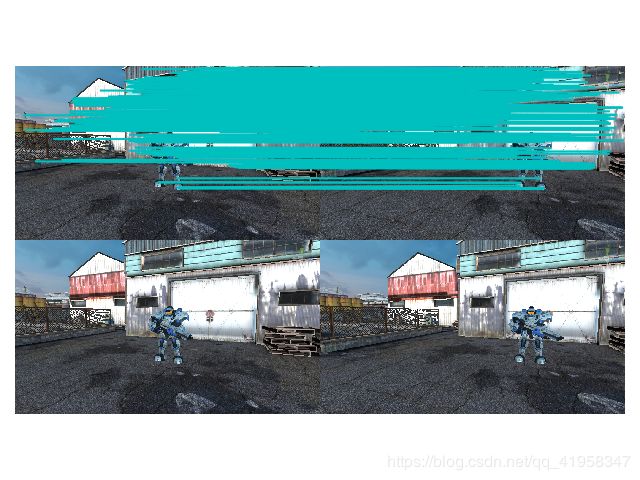

3.5实验结果

3.6实验结果分析

从以上实验结果可看出,RANSAC算法可以剔除大部分的错误点的信息,但是也可以出去,一些正确点的信息也被RANSAC所剔除。并发现RANSAC算法还可以用于图像搜索时的纠错与物体识别定位,对图片的定位效果好