芝加哥犯罪率数据集(数据分析与特征处理)

参照:SF-Crime Analysis & Prediction

Crime Scene Exploration and Model Fit

Random Forest Crime Classification(特征工程和预测)

主要是因为这个数据集包含了时间序列和坐标点。练习一下特征处理。

数据分析

导入库

#%%

%matplotlib inline

import numpy as np

import pandas as pd

import math

import seaborn as sns

import matplotlib.pyplot as plt

import matplotlib.ticker as ticker

from datetime import tzinfo,timedelta,datetime

datetime库概述

导入数据

data_train = pd.read_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\kaggle\\sf-crime\\train.csv")

print(data_train.dtypes)

Dates object

Category object

Descript object

DayOfWeek object

PdDistrict object

Resolution object

Address object

X float64

Y float64

dtype: object

print(data_train.head())

Dates Category Descript \

0 2015-05-13 23:53:00 WARRANTS WARRANT ARREST

1 2015-05-13 23:53:00 OTHER OFFENSES TRAFFIC VIOLATION ARREST

2 2015-05-13 23:33:00 OTHER OFFENSES TRAFFIC VIOLATION ARREST

3 2015-05-13 23:30:00 LARCENY/THEFT GRAND THEFT FROM LOCKED AUTO

4 2015-05-13 23:30:00 LARCENY/THEFT GRAND THEFT FROM LOCKED AUTO

DayOfWeek PdDistrict Resolution Address \

0 Wednesday NORTHERN ARREST, BOOKED OAK ST / LAGUNA ST

1 Wednesday NORTHERN ARREST, BOOKED OAK ST / LAGUNA ST

2 Wednesday NORTHERN ARREST, BOOKED VANNESS AV / GREENWICH ST

3 Wednesday NORTHERN NONE 1500 Block of LOMBARD ST

4 Wednesday PARK NONE 100 Block of BRODERICK ST

X Y

0 -122.425892 37.774599

1 -122.425892 37.774599

2 -122.424363 37.800414

3 -122.426995 37.800873

4 -122.438738 37.771541

print(data_train.columns.values)

['Dates' 'Category' 'Descript' 'DayOfWeek' 'PdDistrict' 'Resolution'

'Address' 'X' 'Y']

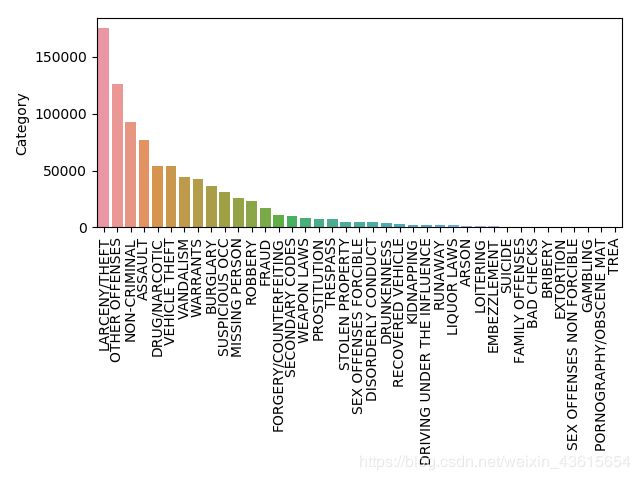

单一特征分析

Category

类型:类别特征

有哪些特征?

print('number of crime categories is {}'.format(len(data_train.Category.unique())))

print('Type:')

print(data_train.Category.value_counts().index)

统计频次还可以用:

print('number of crime categories is {}'.format(data_train.Category.nunique()))

number of crime categories is 39

number of crime categories is 39

Type

Index(['LARCENY/THEFT', 'OTHER OFFENSES', 'NON-CRIMINAL', 'ASSAULT',

'DRUG/NARCOTIC', 'VEHICLE THEFT', 'VANDALISM', 'WARRANTS', 'BURGLARY',

'SUSPICIOUS OCC', 'MISSING PERSON', 'ROBBERY', 'FRAUD',

'FORGERY/COUNTERFEITING', 'SECONDARY CODES', 'WEAPON LAWS',

'PROSTITUTION', 'TRESPASS', 'STOLEN PROPERTY', 'SEX OFFENSES FORCIBLE',

'DISORDERLY CONDUCT', 'DRUNKENNESS', 'RECOVERED VEHICLE', 'KIDNAPPING',

'DRIVING UNDER THE INFLUENCE', 'RUNAWAY', 'LIQUOR LAWS', 'ARSON',

'LOITERING', 'EMBEZZLEMENT', 'SUICIDE', 'FAMILY OFFENSES', 'BAD CHECKS',

'BRIBERY', 'EXTORTION', 'SEX OFFENSES NON FORCIBLE', 'GAMBLING',

'PORNOGRAPHY/OBSCENE MAT', 'TREA'],

dtype='object')

作图:每个类型出现的频次

number_of_crimes = data_train.Category.value_counts()

ax = sns.barplot(x=number_of_crimes.index,y=number_of_crimes)

ax.set_xticklabels(number_of_crimes.index,rotation=90)

时间属性

类别:时间序列

type(数据类型):object(需要转换)

统计各个属性的频次

print(data_train.DayOfWeek.value_counts())

Friday 133734

Wednesday 129211

Saturday 126810

Thursday 125038

Tuesday 124965

Monday 121584

Sunday 116707

Name: DayOfWeek, dtype: int64

crimeTime = data_train.DayOfWeek.value_counts()

ax = sns.barplot(x=crimeTime.index,y=crimeTime)

ax.set_xticklabels(crimeTime.index,rotation=90)

转换日期格式

data_train.Dates = pd.to_datetime(data_train.Dates,format='%Y/%m/%d %H:%M:%S')

print(data_train.Dates.dtypes)

datetime64[ns]

- 如何只留下年月日,去掉分秒

data_train['Dates'] = pd.to_datetime(data_train['Dates'])

data_train['Date'] = data_train['Dates'].dt.date

print(data_train.Date)

0 2015-05-13

1 2015-05-13

2 2015-05-13

3 2015-05-13

4 2015-05-13

Name: Date, dtype: object

写一个函数,方便转换测试集和训练集

def transformTimeDataset(dataset):

dataset['Dates'] = pd.to_datetime(dataset['Dates'])

dataset['Date'] = dataset['Dates'].dt.date

dataset['n_days'] = (dataset['Date']-dataset['Date'].min()).apply(lambda x:x.days)

dataset['Year'] = dataset['Dates'].dt.year

dataset['DayOfWeek'] = dataset['Dates'].dt.dayofweek

dataset['WeekOfYear'] = dataset['Dates'].dt.weekofyear

dataset['Month'] = dataset['Dates'].dt.month

dataset['Hour'] = dataset['Dates'].dt.hour

dataset = dataset.drop('Dates',1)

return dataset

转换

data_train = transformTimeDataset(data_train)

data_test = transformTimeDataset(data_test)

print(data_train.Date.head())

0 2015-05-13

1 2015-05-13

2 2015-05-13

3 2015-05-13

4 2015-05-13

Name: Date, dtype: object

地理信息

有几个街区,街区类别

print('number of PdDistrict is {}'.format(data_train.PdDistrict.nunique()))

print('Type:')

print(data_train.PdDistrict.value_counts().index)

number of PdDistrict is 10

Type:

Index(['SOUTHERN', 'MISSION', 'NORTHERN', 'BAYVIEW', 'CENTRAL', 'TENDERLOIN',

'INGLESIDE', 'TARAVAL', 'PARK', 'RICHMOND'],

dtype='object')

给街区编热独码。

dataset = pd.get_dummies(data=dataset, columns=[ 'PdDistrict'], drop_first = True)

print(dataset)

PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION \

0 0 0 0

1 0 0 0

2 0 0 0

3 0 0 0

4 0 0 0

PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND \

0 1 0 0

1 1 0 0

2 1 0 0

3 1 0 0

4 0 1 0

PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN

0 0 0 0

1 0 0 0

2 0 0 0

3 0 0 0

4 0 0 0

地址

print('number of Address is {}'.format(data_train.Address.nunique()))

print('Type:')

print(data_train.Address.value_counts())

number of Address is 23228

Type:

800 Block of BRYANT ST 26533

800 Block of MARKET ST 6581

2000 Block of MISSION ST 5097

1000 Block of POTRERO AV 4063

900 Block of MARKET ST 3251

0 Block of TURK ST 3228

0 Block of 6TH ST 2884

300 Block of ELLIS ST 2703

400 Block of ELLIS ST 2590

16TH ST / MISSION ST 2504

1000 Block of MARKET ST 2489

1100 Block of MARKET ST 2319

2000 Block of MARKET ST 2168

100 Block of OFARRELL ST 2140

700 Block of MARKET ST 2081

3200 Block of 20TH AV 2035

100 Block of 6TH ST 1887

500 Block of JOHNFKENNEDY DR 1824

TURK ST / TAYLOR ST 1810

200 Block of TURK ST 1800

0 Block of PHELAN AV 1791

0 Block of UNITEDNATIONS PZ 1789

0 Block of POWELL ST 1717

100 Block of EDDY ST 1681

1400 Block of PHELPS ST 1629

300 Block of EDDY ST 1589

100 Block of GOLDEN GATE AV 1353

100 Block of POWELL ST 1333

200 Block of INTERSTATE80 HY 1316

MISSION ST / 16TH ST 1300

...

CRISP RD / QUESADA AV 1

AGUA WY / TERESITA BL 1

MUNICH ST / NAYLOR ST 1

SPEAR ST / THE EMBARCADERO SOUTH ST 1

SENECA AV / BERTITA ST 1

600 Block of ARTHUR AV 1

MAJESTIC AV / SUMMIT ST 1

FERNWOOD DR / BRENTWOOD AV 1

PACHECO ST / GREAT HWY 1

CLAREMONT BL / DORCHESTER WY 1

ARBOR ST / HILIRITAS AV 1

23RD ST / SEVERN ST 1

CERVANTES BL / BAY ST 1

TELEGRAPH HILL BL / LOMBARD ST 1

300 Block of MATEO ST 1

CABRILLO ST / 22ND AV 1

WAWONA ST / 33RD AV 1

CONRAD ST / POPPY LN 1

16TH ST / SPENCER AL 1

MARSILY ST / ST MARYS AV 1

MOULTRIE ST / OGDEN AV 1

1500 Block of BURROWS ST 1

OGDEN AV / FOLSOM ST 1

LAWTON ST / LOWER GREAT HY 1

CHENERY ST / MIZPAH ST 1

3RD ST / JAMES LICK FREEWAY HY 1

5THSTNORTH ST / ELLIS ST 1

PARADISE AV / BURNSIDE AV 1

PORTOLA DR / 15TH AV 1

DE HARO ST / ALAMEDA ST 1

Name: Address, Length: 23228, dtype: int64

这个操作太有意思了,小本本记下来

把文本里含有某个关键词的赋值1,其余赋值为0。

dataset['Block'] = dataset['Address'].str.contains('block', case=False)

dataset['Block'] = dataset['Block'].map(lambda x: 1 if x == True else 0)

print(dataset.Block)

0 0

1 0

2 0

3 1

4 1

Name: Block, dtype: int64

为了方便测试集和训练集的转换,写一个函数(其实可以把时间和地址的转换写一起,我只是为了方便看明白所以分开了)

def transformdGeoDataset(dataset):

dataset['Block'] = dataset['Address'].str.contains('block', case=False)

dataset['Block'] = dataset['Block'].map(lambda x: 1 if x == True else 0)

dataset.drop('Address', 1)

dataset = pd.get_dummies(data=dataset,columns='PdDistrict',drop_first=True)

return dataset

转换测试集与训练集

data_train = transformdGeoDataset(data_train)

data_test = transformdGeoDataset(data_test)

print(data_train.head())

输出结果

Category Descript DayOfWeek Resolution Address X Y Date n_days Year WeekOfYear Month Hour Block PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN

0 WARRANTS WARRANT ARREST 2 ARREST, BOOKED OAK ST / LAGUNA ST -122.425892 37.774599 2015-05-13 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

1 OTHER OFFENSES TRAFFIC VIOLATION ARREST 2 ARREST, BOOKED OAK ST / LAGUNA ST -122.425892 37.774599 2015-05-13 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

2 OTHER OFFENSES TRAFFIC VIOLATION ARREST 2 ARREST, BOOKED VANNESS AV / GREENWICH ST -122.424363 37.800414 2015-05-13 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

3 LARCENY/THEFT GRAND THEFT FROM LOCKED AUTO 2 NONE 1500 Block of LOMBARD ST -122.426995 37.800873 2015-05-13 4510 2015 20 5 23 1 0 0 0 1 0 0 0 0 0

4 LARCENY/THEFT GRAND THEFT FROM LOCKED AUTO 2 NONE 100 Block of BRODERICK ST -122.438738 37.771541 2015-05-13 4510 2015 20 5 23 1 0 0 0 0 1 0 0 0 0

X,Y坐标点

写了一下午的又没了,一脸血泪,记得保存。

sns.pairplot(data_train[["X", "Y"]])

从图中可看出,y点有80的值较为离群。

sns.boxplot(data_train[["Y"]])

data_train = data_train[train_data["Y"] < 80]

sns.distplot(data_train[["X"]])

去掉一些属性

data_train = data_train.drop(["Descript", "Resolution","Address","Dates","Date"], axis = 1)

data_test = data_test.drop(["Address","Dates","Date"], axis = 1)

对Target(Category)进行编码

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

data_train.Category = le.fit_transform(data_train.Category)

X与y

X = data_train.drop("Category",axis=1).values

y = data_train['Category'].values

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.1,random_state=42)

训练模型采用的数据均来自train.csv,把Train里的数据分为训练集和测试集。

决策树模型

from sklearn.tree import DecisionTreeClassifier

dtree = DecisionTreeClassifier()

dtree.fit(X_train,y_train)

预测与评估

from sklearn.metrics import classification_report,confusion_matrix

cm = confusion_matrix(y_test,predictions)

fig,ax = plt.subplots(figsize=(20,20))

sns.heatmap(cm,annot=False,ax = ax)

ax.set_xlabel('Predicted labels')

ax.set_ylabel('True labels')

ax.set_title('Confusion Matrix')

plt.show()

precision,recall,f1-score,support

print(classification_report(y_test,predictions))

precision recall f1-score support

0 0.04 0.04 0.04 168

1 0.21 0.27 0.23 7632

2 0.00 0.00 0.00 41

3 0.00 0.00 0.00 28

4 0.14 0.14 0.14 3762

5 0.02 0.04 0.03 409

6 0.02 0.03 0.02 212

7 0.35 0.51 0.41 5437

8 0.01 0.01 0.01 421

9 0.00 0.00 0.00 91

10 0.00 0.00 0.00 29

11 0.02 0.02 0.02 44

12 0.11 0.13 0.12 1043

13 0.07 0.08 0.07 1621

14 0.07 0.07 0.07 14

15 0.04 0.04 0.04 232

16 0.38 0.34 0.36 17455

17 0.06 0.07 0.06 192

18 0.26 0.23 0.25 138

19 0.49 0.57 0.53 2562

20 0.21 0.19 0.20 9311

21 0.25 0.23 0.24 12464

22 0.00 0.00 0.00 1

23 0.59 0.55 0.57 779

24 0.03 0.03 0.03 311

25 0.08 0.07 0.07 2281

26 0.19 0.17 0.18 204

27 0.00 0.00 0.00 1031

28 0.15 0.13 0.14 478

29 0.00 0.00 0.00 14

30 0.02 0.01 0.02 470

31 0.03 0.02 0.02 45

32 0.07 0.06 0.06 3197

33 0.00 0.00 0.00 0

34 0.03 0.02 0.03 761

35 0.13 0.11 0.11 4540

36 0.43 0.46 0.44 5296

37 0.12 0.09 0.11 4216

38 0.10 0.08 0.09 875

accuracy 0.25 87805

macro avg 0.12 0.12 0.12 87805

weighted avg 0.25 0.25 0.25 87805

随机森林

from sklearn.ensemble import RandomForestClassifier

rfc = RandomForestClassifier(n_estimators=40,min_samples_split=100)

rfc.fit(X_train,y_train)

print(rfc)

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=100,

min_weight_fraction_leaf=0.0, n_estimators=40,

n_jobs=None, oob_score=False, random_state=None,

verbose=0, warm_start=False)

验证

rfc_pred = rfc.predict(X_test)

print(classification_report(y_test,rfc_pred))

precision recall f1-score support

0 0.00 0.00 0.00 168

1 0.20 0.18 0.19 7632

2 0.00 0.00 0.00 41

3 0.00 0.00 0.00 28

4 0.23 0.03 0.06 3762

5 0.18 0.02 0.04 409

6 0.00 0.00 0.00 212

7 0.34 0.43 0.38 5437

8 0.00 0.00 0.00 421

9 0.00 0.00 0.00 91

10 0.00 0.00 0.00 29

11 0.00 0.00 0.00 44

12 0.29 0.00 0.01 1043

13 0.28 0.01 0.01 1621

14 0.00 0.00 0.00 14

15 0.00 0.00 0.00 232

16 0.30 0.77 0.43 17455

17 0.75 0.02 0.03 192

18 1.00 0.04 0.08 138

19 0.53 0.29 0.38 2562

20 0.24 0.15 0.18 9311

21 0.28 0.37 0.32 12464

22 0.00 0.00 0.00 1

23 0.56 0.68 0.62 779

24 0.00 0.00 0.00 311

25 0.00 0.00 0.00 2281

26 0.77 0.11 0.20 204

27 0.33 0.00 0.00 1031

28 0.00 0.00 0.00 478

29 0.00 0.00 0.00 14

30 0.00 0.00 0.00 470

31 0.00 0.00 0.00 45

32 0.00 0.00 0.00 3197

34 0.26 0.02 0.03 761

35 0.24 0.01 0.02 4540

36 0.28 0.24 0.26 5296

37 0.28 0.00 0.01 4216

38 1.00 0.00 0.00 875

accuracy 0.29 87805

macro avg 0.22 0.09 0.09 87805

weighted avg 0.27 0.29 0.23 87805

特征重要度

n_features = X.shape[1]#列

plt.barh(range(n_features),rfc.feature_importances_)

plt.yticks(np.arange(n_features),data_train.columns[1:])

plt.show()

n_features = X.shape[1]

print(n_features)

18

Submission(保存并且输出)

1.把Target的类型作为keys,其标签作为values

rfc_pred = rfc.predict(X_test)

keys = le.classes_

values = le.transform(le.classes_)

print(keys)

2.将Target的类别及进行特征工程后的值保存为字典

dictionary = dict(zip(keys,values))

print(dictionary)

{'ARSON': 0, 'ASSAULT': 1, 'BAD CHECKS': 2, 'BRIBERY': 3, 'BURGLARY': 4, 'DISORDERLY CONDUCT': 5, 'DRIVING UNDER THE INFLUENCE': 6, 'DRUG/NARCOTIC': 7, 'DRUNKENNESS': 8, 'EMBEZZLEMENT': 9, 'EXTORTION': 10, 'FAMILY OFFENSES': 11, 'FORGERY/COUNTERFEITING': 12, 'FRAUD': 13, 'GAMBLING': 14, 'KIDNAPPING': 15, 'LARCENY/THEFT': 16, 'LIQUOR LAWS': 17, 'LOITERING': 18, 'MISSING PERSON': 19, 'NON-CRIMINAL': 20, 'OTHER OFFENSES': 21, 'PORNOGRAPHY/OBSCENE MAT': 22, 'PROSTITUTION': 23, 'RECOVERED VEHICLE': 24, 'ROBBERY': 25, 'RUNAWAY': 26, 'SECONDARY CODES': 27, 'SEX OFFENSES FORCIBLE': 28, 'SEX OFFENSES NON FORCIBLE': 29, 'STOLEN PROPERTY': 30, 'SUICIDE': 31, 'SUSPICIOUS OCC': 32, 'TREA': 33, 'TRESPASS': 34, 'VANDALISM': 35, 'VEHICLE THEFT': 36, 'WARRANTS': 37, 'WEAPON LAWS': 38}

3.对测试集进行处理

查看测试集的信息

print(data_test.head())

Id DayOfWeek X Y n_days Year WeekOfYear Month Hour Block PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN

0 0 6 -122.399588 37.735051 4512 2015 19 5 23 1 0 0 0 0 0 0 0 0 0

1 1 6 -122.391523 37.732432 4512 2015 19 5 23 0 0 0 0 0 0 0 0 0 0

2 2 6 -122.426002 37.792212 4512 2015 19 5 23 1 0 0 0 1 0 0 0 0 0

3 3 6 -122.437394 37.721412 4512 2015 19 5 23 1 0 1 0 0 0 0 0 0 0

4 4 6 -122.437394 37.721412 4512 2015 19 5 23 1 0 1 0 0 0 0 0 0 0

去掉多余特征‘Id’

data_test = data_test.drop('Id',axis=1)

用前面训练好的模型rfc对测试集进行预测

y_pred_prob = rfc.predict_proba(data_test)

print(y_pred_prob)

[[0.00220038 0.14332224 0. ... 0.12182985 0.04372455 0.02692804]

[0.0003572 0.0505187 0. ... 0.07283049 0.06742673 0.03087049]

[0.0040157 0.08378697 0. ... 0.06877263 0.02119305 0.00594818]

...

[0.00373092 0.12110726 0.00178571 ... 0.22416137 0.03237321 0.00850585]

[0.0169558 0.15710357 0.00148783 ... 0.12023433 0.05170328 0.00645309]

[0.00309621 0.06249053 0.015625 ... 0.17716719 0.03481506 0.00728016]]

好气啊又没有了。

封装结果

results = pd.DataFrame(y_pred_prob)

results.columns = keys

results.to_csv(path_or_buf="rfc_predict_4.csv",index=True, index_label = 'Id')