SVM-SVC分类

SVM优点:

- 用于二元和多元分类器、回归和新奇性检测

- 良好的预测生成器,提供了鲁棒的过拟合、噪声数据和异常点处理

- 成功处理了涉及到很多变量的场景

- 当变量比样本还多是依旧有效

- 快速,即使样本量大于1万

- 自动检测数据的非线性,不用做变量变换

SVM缺点:

- 应用在二元分类表现最好,其他预测问题表现不是太好

- 变量比样例多很多的时候,有效性降低,需要使用其他方案,例如SGD方案

- 只提供预测结果,如果想要获取预测概率,需要额外方法去获取

- 如果想要最优结果,需要调参。

使用SVM预测模型的通用步骤

- 选择使用的SVM类

- 用数据训练模型

- 检查验证误差并作为基准线

- 为SVM参数尝试不同的值

- 检查验证误差是否改进

- 再次使用最优参数的数据来训练模型

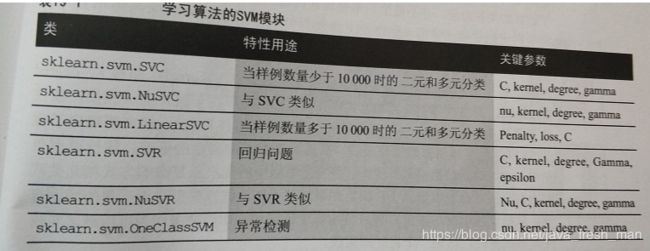

SVM种类,用途和关键参数表

主要分为三类:1、分类 2、回归 3、异常检测

TIPs:SVM模块包含2个库:libsvm和liblinear,拟合模型时,python和这两个库有数据流,会消耗一部分内存。

如果内存足够,最好能够把SVM的cashe_size参数设置大于200M,例如1000M。

参数问题:

- C参数表明算法要训练数据点需要作出多少调整适应,C小,SVM对数据点调整少,多半取平均,仅用少量的数据点和可用变量,C值增大,会使学习过程遵循大部分的可用训练数据点并牵涉较多变量。C值过大,有过拟合问题,C值过小,预测粗糙,不准确。

- kernel

- degree

- gamma

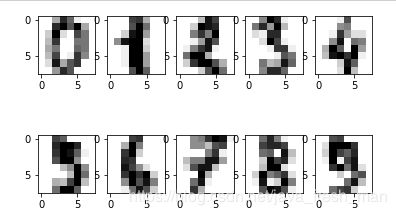

一、SVC分类(手写字体识别问题)

#载入数据

from sklearn import datasets

digits = datasets.load_digits()

X,y = digits.data, digits.target

#画图展示

import matplotlib.pyplot as plt

#此处注意,k,img代表的数字在1-10之间,故enmerate()需要增加一个start=1的参数

for k,img in enumerate(range(10),start=1):

plt.subplot(2, 5, k)

plt.imshow(digits.images[img],cmap='binary',interpolation='none')

plt.show()

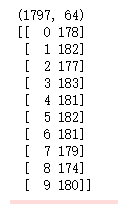

print(X.shape)

#X[0]

from scipy.stats import itemfreq

print(itemfreq(y))

TIPs如果遇到类不平衡的问题:

1、保持不平衡,偏向于评率高的类做预测

2、使用权重在类间建立平等,允许对观察点多次计数

3、删减拥有过多样例的勒种的一些样例

解决类不平衡问题的参数方法:

- sklearn.svm.SVC中的class_weight='auto'

- fit中的sample_weight

#数据区分测试集和训练集,并将数据标准化

from sklearn.cross_validation import train_test_split, cross_val_score

from sklearn.preprocessing import MinMaxScaler

# We keep 30% random examples for test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

# We scale the data in the range [-1,1]

scaling = MinMaxScaler(feature_range=(-1, 1)).fit(X_train)

X_train = scaling.transform(X_train)

X_test = scaling.transform(X_test)

from sklearn.svm import SVC

# We balance the clasess so you can see how it works

learning_algo = SVC(kernel='linear', class_weight='balanced')

cv_performance = cross_val_score(learning_algo, X_train, y_train, cv=5)

test_performance = learning_algo.fit(X_train, y_train).score(X_test, y_test)

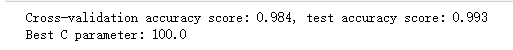

print ('Cross-validation accuracy score: %0.3f, test accuracy score: %0.3f' % (np.mean(cv_performance),test_performance))

数据结果显示精度很好

C参数默认1,下面使用网格搜索来选择最优的C参数

from sklearn.grid_search import GridSearchCV

learning_algo = SVC(class_weight='balanced', random_state=101)

#定义了C的一个列表,包含7个值,从10^-3到10^3

search_space = {'C': np.logspace(-3, 3, 7)}

gridsearch = GridSearchCV(learning_algo, param_grid=search_space, scoring='accuracy', refit=True, cv=10, n_jobs=-1)

gridsearch.fit(X_train,y_train)

cv_performance = gridsearch.best_score_

test_performance = gridsearch.score(X_test, y_test)

print ('Cross-validation accuracy score: %0.3f, test accuracy score: %0.3f' % (cv_performance,test_performance))

print ('Best C parameter: %0.1f' % gridsearch.best_params_['C'])

C取值为100的时候,模型最优。