A simple Baidu Tieba crawler, a Baidu search crawler, and a simulated login: a beginner's notes on the pitfalls (requests, lxml)

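I have been learning web scraping for the past few days and tried a few simple exercises. None of them use the scrapy framework; the libraries are lxml and requests on Python 3, and nothing here interacts with the page UI.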

1. Baidu Tieba crawler

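The crawler collects thread titles and information about each poster (gender, followed tiebas, and so on). Afterwards I built word clouds from the data. The code and the word clouds are below: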

import csv
import json
import re
import time

import requests
from lxml import etree


def baidu_tieba(url, begin_page, end_page):
    """Download list pages begin_page..end_page (inclusive) and parse each one."""
    for i in range(begin_page, end_page + 1):
        print('Downloading page', i)
        time.sleep(1)  # pause between list pages
        # Each list page holds 50 threads; pn is the offset of the first one.
        if i > 1:
            suffix = '&pn=' + str(50 * (i - 1))
        else:
            suffix = ''
        html = etree.HTML(requests.get(url + suffix).text)
        parse_html(html)

def parse_html(html):
    """Write one row per thread to the module-level CSV writers f_tieba and f_author."""
    for e in html.xpath("//li[@class=' j_thread_list clearfix']"):
        # Thread metadata is stored as JSON in the data-field attribute.
        info = json.loads(e.get('data-field'))
        reply_num = info['reply_num']
        author = info['author_name']
        is_top = 1 if info['is_top'] else 0
        is_good = 1 if info['is_good'] else 0

        # Title link of the thread.
        temp = e.xpath(
            ".//div[@class='col2_right j_threadlist_li_right ']"
            "//div[contains(@class,'threadlist_title pull_left j_th_tit ')]//a")[0]
        title = temp.get('title')
        content_url = 'http://tieba.baidu.com' + temp.get('href')
        content = etree.HTML(requests.get(content_url).text)
        print(content_url)
        try:
            content_text = content.xpath(
                "//div[@class='d_post_content_main d_post_content_firstfloor']//cc/div")[1].text
            print(content_text)
        except Exception:
            # Skip threads whose first post does not match the expected layout.
            continue

        try:
            temp = e.xpath(".//span[@class='frs-author-name-wrap']//a")[0]
            author_url = 'http://tieba.baidu.com' + temp.get('href')
            print(author_url)

            # Fetch the author's profile page for the detailed fields.
            author_page = etree.HTML(requests.get(author_url).text)
            temp = author_page.xpath("//div[@class='userinfo_userdata']//span")
            # The class of the first span encodes the gender: 1 = female, 0 = otherwise.
            gender = 1 if re.match('.*female', temp[0].get('class')) else 0
            temp = temp[1].xpath("./span/span")
            online_time = re.findall('吧龄:(.*)', temp[1].text)
            post_num = re.findall('发贴:(.*)', temp[3].text)

            # Names of the tiebas the author follows.
            temp = author_page.xpath(".//div[@class='ihome_forum_group ihome_section clearfix']")
            follow = []
            if temp:
                for t in temp[0].getchildren()[1].xpath('.//a'):
                    follow.append(t.xpath("./span")[0].text)
        except Exception:
            # Profile missing or laid out differently: record empty author details.
            gender = None
            online_time = None
            follow = []
            post_num = None
            author_url = None

        f_tieba.writerow([title, author, reply_num, is_top, is_good, content_url, content_text])
        f_author.writerow([author, gender, online_time, post_num, follow, author_url])

def createWordCloud(f_1, f_2):
    import pandas as pd
    import jieba.analyse
    import matplotlib.pyplot as plt
    from wordcloud import WordCloud

    title = pd.read_csv(f_1)
    author = pd.read_csv(f_2)

    # online_time was written as the text of a list; pull the value back out.
    author['online_time'] = author['online_time'].apply(lambda x: re.findall(r"\['(.*)'\]", str(x)))
    author['online_time'] = author['online_time'].apply(lambda x: None if len(x) < 1 else x[0])
    author['online_time'] = author['online_time'].fillna(0)
    # Collapse duplicate rows.
    author = author.groupby(['author', 'gender', 'online_time']).first().reset_index()
    title = title.groupby(['title', 'author', 'url']).first().reset_index()

    # Use the post body when it is longer than the title, otherwise the title.
    title['content'] = title['content'].fillna('')
    title['sents'] = title.apply(
        lambda x: x['content'] if len(x['content'].strip()) > len(x['title'].strip()) else x['title'], axis=1)
    sents = list(title['sents'])

    # Extract keywords from every sentence.
    jieba.analyse.set_stop_words('../stopword/stopwords.txt')
    tags = []
    for s in sents:
        tags.extend(jieba.analyse.extract_tags(s, topK=100, withWeight=False))
    text = " ".join(tags)

    wc = WordCloud(font_path='/Users/czw/Library/Fonts/PingFang.ttc',
                   background_color="white", max_words=100, width=1600, height=800,
                   max_font_size=500, random_state=42, min_font_size=40)
    print(text)
    wc.generate(text)
    # wc.to_file('word.jpg')

    # Keyword word cloud.
    plt.imshow(wc)
    plt.axis("off")
    plt.show()
    print(title)
    print(author)

    # follow was also written as the text of a list; split it back into tieba names.
    temp = list(author['follow'].apply(lambda x: re.findall(r"\[(.*)\]", x)[0]))
    temp = [x.split(',') for x in temp if x]
    follow_word = []
    for f in temp:
        if len(f) > 0:
            # Drop the surrounding quotes and append the 吧 suffix.
            f = [i.strip()[1:-1] + '吧' for i in f]
            follow_word.extend(f)

    wc = WordCloud(font_path='/Users/czw/Library/Fonts/PingFang.ttc',
                   background_color="white", max_words=100, width=200, height=1200,
                   max_font_size=400, random_state=42, min_font_size=40)
    wc.generate(" ".join(follow_word))

    # Word cloud of the tiebas the posters follow.
    plt.axis('off')
    plt.imshow(wc)
    plt.savefig('word_2.jpg', dpi=150)
    plt.show()

if __name__ == '__main__':
    url = 'http://tieba.baidu.com/f?kw=%E5%8D%8E%E4%B8%AD%E7%A7%91%E6%8A%80%E5%A4%A7%E5%AD%A6&ie=utf-8'

    # newline='' keeps the csv module from writing blank rows on Windows.
    f_1 = open('title_try.csv', 'w', encoding='utf-8-sig', newline='')
    f_2 = open('author_try.csv', 'w', encoding='utf-8-sig', newline='')
    f_tieba = csv.writer(f_1, dialect='excel')
    f_author = csv.writer(f_2, dialect='excel')
    f_tieba.writerow(["title", "author", "reply_num", "is_top", "is_good", "url", "content"])
    f_author.writerow(["author", "gender", "online_time", "post_num", "follow", "author_url"])

    # Crawl list pages 1 to 2.
    baidu_tieba(url, 1, 2)
    f_1.close()
    f_2.close()

    createWordCloud('title_try.csv', 'author_try.csv')
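
To make the paging and metadata steps easier to follow in isolation, here is a minimal sketch that uses only the standard library. It assumes, as the crawler above does, that each list page holds 50 threads selected by the `pn` offset, and that thread metadata is a JSON string in the `data-field` attribute. The helper names `page_url` and `thread_info`, the forum keyword, and the sample JSON are made up for illustration.

```python
import json


def page_url(base_url, page, per_page=50):
    """Return the list-page URL for a 1-based page number.

    Assumes base_url already carries a query string (e.g. '...?kw=...').
    """
    if page <= 1:
        return base_url
    return '{}&pn={}'.format(base_url, per_page * (page - 1))


def thread_info(data_field):
    """Decode a data-field JSON string into the fields the crawler keeps."""
    info = json.loads(data_field)
    return {
        'author': info.get('author_name'),
        'reply_num': info.get('reply_num'),
        'is_top': 1 if info.get('is_top') else 0,
        'is_good': 1 if info.get('is_good') else 0,
    }


if __name__ == '__main__':
    base = 'http://tieba.baidu.com/f?kw=python&ie=utf-8'  # hypothetical forum
    print(page_url(base, 3))
    # Made-up sample; a real data-field carries more keys.
    sample = '{"author_name": "someone", "reply_num": 12, "is_top": false, "is_good": null}'
    print(thread_info(sample))
```

Using `info.get(...)` instead of `info[...]` is a deliberate choice in this sketch: a thread with a missing key yields `None` rather than stopping the whole crawl.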

These are the word clouds for the HUST tieba:

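I did not crawl much data; this was thrown together casually. The first image shows the keywords and the second shows the tiebas that the posters follow. just for fun :-)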
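
The `follow` column ends up in the CSV as the text of a Python list, and the code above takes it apart again with a regular expression. A possible alternative, sketched here with only the standard library, is to parse each cell with `ast.literal_eval` and count the names with `collections.Counter`. The function name `follow_counts` and the sample rows are invented for illustration; the resulting counts could then be passed to `WordCloud.generate_from_frequencies`.

```python
import ast
from collections import Counter


def follow_counts(rows):
    """Count followed tiebas from cells that hold the text of a Python list."""
    counts = Counter()
    for raw in rows:
        try:
            names = ast.literal_eval(raw)
        except (ValueError, SyntaxError, TypeError):
            continue  # skip cells that are not a valid literal
        if not isinstance(names, list):
            continue
        # Append the 吧 suffix, as the word-cloud code does.
        counts.update(str(name) + '吧' for name in names if name)
    return counts


if __name__ == '__main__':
    rows = ["['python', 'linux']", "['python']", "[]", "not a list"]  # made-up cells
    print(follow_counts(rows).most_common())
```

Counting first also makes it easy to inspect the most common names before drawing anything.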

2. Baidu search crawler

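There is no page interaction here either; the search is done purely through the URL, so I do not use selenium or other approaches that search via url_open. If you want to know about those, look out for the next post (hehe) orz.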

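This code is a little better written than the previous one. Since there is not much to crawl, I did not bother with a queue or a database connection.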

import lxml.html
import requests


class Downloader():
    """Fetch one Baidu results page; calling the instance returns the n-th result."""

    def __init__(self, url):
        self.url = url
        s = requests.session()
        page = s.get(self.url)
        self.html = lxml.html.fromstring(page.text)
        # Each search result sits in a div whose class contains c-container.
        self.contents = self.html.xpath("//div[contains(@class,'c-container')]")

    def __call__(self, number):
        content = self.contents[number]
        # Matched keywords are assumed to be wrapped in <em> tags;
        # strip them so that .text returns the whole title.
        title = lxml.html.tostring(content.xpath(".//h3[contains(@class,'t')]")[0], encoding='unicode')
        title = title.replace('<em>', '')
        title = title.replace('</em>', '')
        title = lxml.html.fromstring(title).xpath(".//a")[0].text
        content_url = content.xpath(".//h3[contains(@class,'t')]/a")[0].get('href')
        try:
            content = content.xpath(".//div[contains(@class,'c-abstract')]")[0]
            content = lxml.html.tostring(content, encoding='unicode', with_tail=False)
            content = content.replace('<em>', '')
            content = content.replace('</em>', '')
            # fromstring returns the summary <div> itself; .text only covers the
            # text before the first nested tag, so some summaries come back empty.
            content = lxml.html.fromstring(content).text
        except Exception:
            content = None
        return {'title': title, 'content': content, 'url': content_url}

import urllib.request as up

if __name__ == '__main__':

keyword = input('the keyword you want search: \n')

url = 'http://www.baidu.com/s?wd=' + keyword + '&rsv_bp=0&rsv_spt=3&rsv_n=2&inputT=6391'

# print(url)

# url = 'http://www.baidu.com/s?wd=ff&rsv_bp=0&rsv_spt=3&rsv_n=2&inputT=6391'

# url = 'http://www.baidu.com'

D = Downloader(url)

import tkinter as tk

import tkinter.messagebox

text = ''

for i in range(6):

d = D(i)

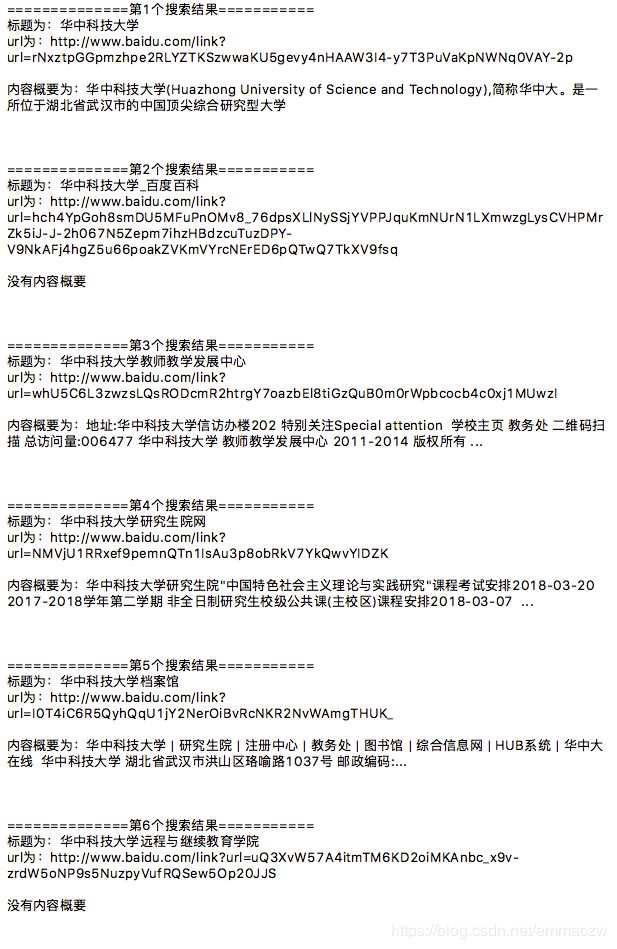

text += '==============第{}个搜索结果===========\n'.format(i+1)

text += '标题为:{}\n\n'.format(d['title'])

# text += 'url为:{}\n\n'.format(d['url'])

print('==============第{}个搜索结果==========='.format(i+1))

print('标题为:',d['title'])

print('url为:',d['url'])

if d['content']:

text += '内容概要为:{}\n\n'.format(d['content'])

print('内容概要为:',d['content'])

else:

text += '没有内容概要\n\n'

print('没有内容概要')

text += '\n\n'

# print(d['content'])

root = tk.Tk()

root.title('GUI')

root.geometry('800x600')

tkinter.Label(root, text=text, justify ='left',wraplength=450).pack()

root.mainloop()结果大概就是

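As for the results that show no summary: that is because the text inside that part of the HTML is awkward to extract. It can be extracted; I was just a bit lazy. (There were a few pitfalls, for example the Baidu URL here uses http rather than https. I have forgotten the others, so see the code.)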
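
On the missing summaries: an element's `.text` stops at the first nested tag, so a summary that contains other markup is cut short or comes back empty. One way around this, sketched roughly here with only the standard library's `html.parser`, is to collect every text node inside the element. The names `TextCollector` and `extract_text` and the sample HTML are hypothetical; with lxml, calling `text_content()` on the element plays the same role.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Gather all text nodes, ignoring the tags around them."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)

    def text(self):
        # Join the pieces and collapse runs of whitespace into single spaces.
        return ' '.join(''.join(self.parts).split())


def extract_text(fragment):
    """Return the visible text of an HTML fragment as one string."""
    collector = TextCollector()
    collector.feed(fragment)
    collector.close()  # flush any buffered text
    return collector.text()


if __name__ == '__main__':
    # Made-up fragment shaped like a search-result summary.
    sample = '<div class="c-abstract"><span>2018</span> A <em>python</em> crawler\n tutorial</div>'
    print(extract_text(sample))
```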

3. Simulated login

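Here I only use one small site, the one from the book 《用python写网络爬虫》 (Web Scraping with Python). It involves no CAPTCHA or complicated encrypted login. If you want to know about those, look out for the next post as well~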

import lxml.html
import requests

login_url = 'http://example.webscraping.com/places/default/edit/Algeria-4'
# The address appears obscured in this listing; substitute the account's real email.
login_email = '[email protected]'
login_password = 'example'


def parse_form(html, content='//input'):
    """Return {name: value} for every named <input>, hidden fields included."""
    tree = lxml.html.fromstring(html)
    data = {}
    for e in tree.xpath(content):
        if e.get('name'):
            data[e.get('name')] = e.get('value')
    return data


# One Session object keeps the cookies across all the requests below.
r_session = requests.Session()
# Request the edit page; page.url is then used as the address of the login form.
page = r_session.get(login_url)
data = parse_form(page.content)
print(data)
login_url = page.url
data['email'] = login_email
data['password'] = login_password
print(data)
resp = r_session.post(login_url, data=data)
print(resp.url)

# Parse the form on the page we land on and raise the population by one.
data = parse_form(resp.text)
print(data)
print(resp)
data['population'] = int(data['population']) + 1
resp = r_session.post(resp.url, data=data)
html = lxml.html.fromstring(resp.text)
print(html.xpath("//table//tr[@id='places_population__row']/td[@class='w2p_fw']")[0].text)

The pitfall is that the cookies have to be carried along with every request, and creating a session object takes care of that~ It is very simple; the code says it all~
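
To show what `parse_form` gathers without touching the network, here is a standard-library sketch run against a made-up login form. The hidden field names `_next` and `_formkey` and their values are assumptions for illustration, not taken from the real site. The point is only that hidden inputs are picked up too, and they need to be posted back together with the email and password over the same session.

```python
from html.parser import HTMLParser


class InputCollector(HTMLParser):
    """Collect the name/value pair of every <input> that has a name."""

    def __init__(self):
        super().__init__()
        self.data = {}

    def handle_starttag(self, tag, attrs):
        if tag == 'input':
            attrs = dict(attrs)
            if attrs.get('name'):
                self.data[attrs['name']] = attrs.get('value')


def parse_form_stdlib(html):
    """Standard-library counterpart of parse_form for this illustration."""
    collector = InputCollector()
    collector.feed(html)
    collector.close()
    return collector.data


# Hypothetical form; the field names and values are invented.
SAMPLE_FORM = '''
<form method="post">
  <input name="email" type="text" value="">
  <input name="password" type="password">
  <input name="_next" type="hidden" value="/edit/1">
  <input name="_formkey" type="hidden" value="abc123">
  <input type="submit" value="Login">
</form>
'''

if __name__ == '__main__':
    data = parse_form_stdlib(SAMPLE_FORM)
    data['email'] = 'user@example.com'  # placeholder credentials
    data['password'] = 'secret'
    print(data)
```

The submit button has no `name`, so it is skipped, which matches the `if e.get('name')` check in `parse_form`.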

bye~