OpenCV Learning Notes 34: The watershed Algorithm

1. watershed

void watershed( InputArray image, InputOutputArray markers );

The first parameter, image, must be an 8-bit, 3-channel color image. There is not much more to say about it.

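The key is the second parameter, markers. It must be prepared before watershed is called: it is a 32-bit single-channel (CV_32SC1) matrix of the same size as image that contains the outlines of the different regions, each with its own unique positive number, while pixels whose region is still unknown are 0. The outlines can be located with OpenCV's findContours. This is the precondition for running the watershed.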

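What happens when the watershed runs? The algorithm uses the outlines passed in through markers as seeds (the so-called flooding points), decides for every other pixel which region it belongs to according to the watershed rules, and continues until all pixels of the image have been processed. Pixels on the boundary between two regions are set to -1 to tell them apart.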
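To make the flooding behaviour concrete, here is a minimal sketch in plain C++ (no OpenCV). It is a simplified priority flood on a tiny grid, not OpenCV's actual implementation; the function name floodFromSeeds, the 4-connectivity and the tie-breaking by insertion order are all assumptions made for illustration.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <tuple>
#include <vector>

// Simplified seeded flooding on a rows x cols grid (4-connectivity).
// height: gray level per pixel; labels: >0 for seeds, 0 for unknown pixels.
// Returns the labels after flooding: seed labels spread outward, and a pixel
// where two different labels meet becomes -1.
std::vector<int> floodFromSeeds(const std::vector<int>& height,
                                std::vector<int> labels,
                                int rows, int cols) {
    assert(static_cast<int>(height.size()) == rows * cols);
    assert(labels.size() == height.size());

    const int IN_QUEUE = -2;  // temporary state for pixels waiting in the queue
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};

    // Min-heap ordered by (gray level, insertion order, pixel index).
    using Item = std::tuple<int, long, int>;
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pending;
    long order = 0;

    // Queue every still-unknown neighbour of pixel idx.
    auto pushNeighbours = [&](int idx) {
        int r = idx / cols, c = idx % cols;
        for (int k = 0; k < 4; ++k) {
            int nr = r + dr[k], nc = c + dc[k];
            if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
            int n = nr * cols + nc;
            if (labels[n] == 0) {
                labels[n] = IN_QUEUE;
                pending.emplace(height[n], order++, n);
            }
        }
    };

    for (int i = 0; i < rows * cols; ++i)
        if (labels[i] > 0) pushNeighbours(i);

    // Always process the lowest pending pixel first ("water rises").
    while (!pending.empty()) {
        int idx = std::get<2>(pending.top());
        pending.pop();
        int r = idx / cols, c = idx % cols;
        int found = 0;
        bool conflict = false;
        for (int k = 0; k < 4; ++k) {
            int nr = r + dr[k], nc = c + dc[k];
            if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
            int l = labels[nr * cols + nc];
            if (l <= 0) continue;  // unknown, queued, or boundary
            if (found == 0) found = l;
            else if (found != l) conflict = true;
        }
        labels[idx] = conflict ? -1 : found;
        if (!conflict) pushNeighbours(idx);
    }
    return labels;
}
```

For a single row with gray levels 0 1 2 9 2 1 0 and seeds 1 and 2 at the two ends, this sketch spreads both labels inward, and the ridge pixel (gray level 9) becomes -1.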

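In short, the second argument markers must carry the seed information. The official OpenCV sample marks the seeds by drawing lines with the mouse, which is just a manual way of defining them, whereas findContours can mark the seeds automatically. The watershed call itself does not produce a segmented image; the result still needs further processing for display. Seen this way, watershed is not as simple as its two parameters suggest.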
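As an illustration of what "carrying the seed information" means, the following plain C++ sketch (no OpenCV) gives every connected blob of a binary mask its own positive label and leaves everything else at 0. It uses a simple flood fill instead of findContours and drawContours, so it fills whole blobs rather than drawing outlines; the name labelSeeds and the 4-connectivity are assumptions for this example.

```cpp
#include <cassert>
#include <vector>

// Gives every 4-connected blob of non-zero pixels in `mask` its own label
// 1, 2, 3, ... in `markers`; all other pixels stay 0 ("unknown").
// Returns the number of blobs found.
int labelSeeds(const std::vector<unsigned char>& mask, int rows, int cols,
               std::vector<int>& markers) {
    assert(static_cast<int>(mask.size()) == rows * cols);
    markers.assign(mask.size(), 0);

    int next = 0;
    std::vector<int> stack;
    for (int start = 0; start < rows * cols; ++start) {
        if (mask[start] == 0 || markers[start] != 0) continue;

        // New blob: flood-fill it with the next free label.
        ++next;
        markers[start] = next;
        stack.push_back(start);
        while (!stack.empty()) {
            int idx = stack.back();
            stack.pop_back();
            int r = idx / cols, c = idx % cols;
            const int nb[4][2] = {{r - 1, c}, {r + 1, c}, {r, c - 1}, {r, c + 1}};
            for (const auto& p : nb) {
                if (p[0] < 0 || p[0] >= rows || p[1] < 0 || p[1] >= cols) continue;
                int n = p[0] * cols + p[1];
                if (mask[n] != 0 && markers[n] == 0) {
                    markers[n] = next;
                    stack.push_back(n);
                }
            }
        }
    }
    return next;
}
```

The resulting layout (positive labels on the seeds, 0 elsewhere) is the shape of input that the markers parameter expects, here stored in a flat vector rather than a CV_32SC1 matrix.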

2. Steps

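- 1. Turn the white background into a black one, in preparation for the later transforms

- 2. Raise the image contrast with filter2D and a Laplacian kernel (sharpen)

- 3. Convert to a binary image with threshold

- 4. Distance transform

- 5. Normalize the distance transform result to [0, 1]

- 6. Threshold again to binarize and obtain the markers

- 7. Erode each peak with erode

- 8. Find contours with findContours

- 9. Draw contours with drawContours

- 10. Watershed transform with watershed

- 11. Color each segmented region and output the result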
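Steps 4 to 6 can be sketched without OpenCV to show what they compute. The helpers below (distanceL1, normalizeMinMax and thresholdAbove are hypothetical names) are rough plain C++ stand-ins for distanceTransform with an L1 metric, normalize with NORM_MINMAX and a binary threshold; they are a sketch under these assumptions, not the library's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// City-block (L1) distance from each non-zero pixel to the nearest zero pixel,
// computed with the classic two-pass scan. Zero pixels get distance 0.
std::vector<float> distanceL1(const std::vector<unsigned char>& binary,
                              int rows, int cols) {
    assert(static_cast<int>(binary.size()) == rows * cols);
    const float INF = static_cast<float>(rows + cols);  // above any L1 distance in the grid
    std::vector<float> d(binary.size());
    for (size_t i = 0; i < d.size(); ++i) d[i] = binary[i] ? INF : 0.0f;

    // Forward pass: propagate distances from the top and left neighbours.
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c) {
            int i = r * cols + c;
            if (r > 0) d[i] = std::min(d[i], d[i - cols] + 1.0f);
            if (c > 0) d[i] = std::min(d[i], d[i - 1] + 1.0f);
        }
    // Backward pass: propagate from the bottom and right neighbours.
    for (int r = rows - 1; r >= 0; --r)
        for (int c = cols - 1; c >= 0; --c) {
            int i = r * cols + c;
            if (r < rows - 1) d[i] = std::min(d[i], d[i + cols] + 1.0f);
            if (c < cols - 1) d[i] = std::min(d[i], d[i + 1] + 1.0f);
        }
    return d;
}

// Linearly rescales the values so that the minimum maps to 0 and the maximum to 1.
void normalizeMinMax(std::vector<float>& v) {
    if (v.empty()) return;
    auto mm = std::minmax_element(v.begin(), v.end());
    const float lo = *mm.first, hi = *mm.second;
    if (hi == lo) return;  // flat input: left unchanged in this sketch
    for (float& x : v) x = (x - lo) / (hi - lo);
}

// Keeps only the pixels strictly above t (1), everything else becomes 0.
std::vector<unsigned char> thresholdAbove(const std::vector<float>& v, float t) {
    std::vector<unsigned char> out(v.size());
    for (size_t i = 0; i < v.size(); ++i) out[i] = v[i] > t ? 1 : 0;
    return out;
}
```

On the one-row mask 0 1 1 1 0 the distances are 0 1 2 1 0, which normalize to 0 0.5 1 0.5 0; thresholding at 0.5 keeps only the centre pixel. This is why the threshold isolates one peak per object, which then serves as a seed.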

I find the tutorial's approach a bit long-winded.

3. Code and results

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char* argv[]){

char input_win[] = "input image";

char watershed_win[] = "watershed segmentation demo";

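// IMREAD_COLOR guarantees the 8-bit 3-channel image that watershed requires

Mat src = imread("10.jpg", IMREAD_COLOR);

// check that loading succeeded before touching the image

if (src.empty()){

puts("could not load images");

return -1; }

resize(src, src, Size(), 0.25, 0.25, INTER_LINEAR);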

namedWindow(input_win, WINDOW_AUTOSIZE);

imshow(input_win, src);

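//1. Turn the white background into a black one, in preparation for the later transforms

for (int row=0; row<src.rows; row++){

for (int col=0; col<src.cols; col++){

if (src.at<Vec3b>(row, col) == Vec3b(255, 255, 255)){

// My image differs from the one in the video tutorial, so this step is skipped here

// src.at<Vec3b>(row, col)[0] = 0;

// src.at<Vec3b>(row, col)[1] = 0;

// src.at<Vec3b>(row, col)[2] = 0;

}

}

}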

namedWindow("black background", WINDOW_AUTOSIZE);

imshow("black background", src);

imwrite("black background.jpg", src);

//2. Raise the image contrast with filter2D and a Laplacian kernel - sharpen

Mat kernel1 = (Mat_<float>(3, 3)<<1,1,1, 1,-8, 1, 1,1,1) ;

Mat imgLaplance;

Mat imgSharpen;

filter2D(src, imgLaplance, CV_32F, kernel1, Point(-1,-1), 0, BORDER_DEFAULT);

src.convertTo(imgSharpen, CV_32F);

Mat imgResult = imgSharpen - imgLaplance;

imgResult.convertTo(imgResult, CV_8UC3);

imgLaplance.convertTo(imgLaplance, CV_8UC3);

imshow("sharpen img", imgResult);

imwrite("sharpen img.jpg", imgResult);

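//3. Convert to a binary image with threshold

Mat imgBinary;

cvtColor(imgResult, imgResult, COLOR_BGR2GRAY);

// with THRESH_OTSU the threshold is chosen automatically and the value 40 is ignored

threshold(imgResult, imgBinary, 40, 255, THRESH_BINARY | THRESH_OTSU);

Mat temp;

imgBinary.copyTo(temp, Mat());

Mat kernel2 = getStructuringElement(MORPH_RECT, Size(2,2), Point(-1, -1));

morphologyEx(temp, temp, MORPH_TOPHAT, kernel2, Point(-1, -1), 1);

// subtract the top-hat result (small bright details) from the binary image

for (int row=0; row<imgBinary.rows; row++){

for (int col=0; col<imgBinary.cols; col++){

imgBinary.at<uchar>(row, col) = saturate_cast<uchar>(imgBinary.at<uchar>(row, col) - temp.at<uchar>(row, col));

}

}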

imshow("sharpen img", imgResult);

imshow("binary img", imgBinary);

imwrite("sharpen img2.jpg", imgResult);

imwrite("binary.jpg", imgBinary);

//4. Distance transform

Mat imgDist;

distanceTransform(imgBinary, imgDist, DIST_L1, 3);

//5. Normalize the distance transform result to [0, 1]

normalize(imgDist, imgDist, 0, 1, NORM_MINMAX);

imshow("distance result normalize", imgDist);

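// JPEG needs 8-bit data, so scale [0, 1] up to [0, 255] before saving

Mat imgDistNorm8U;

imgDist.convertTo(imgDistNorm8U, CV_8U, 255.0);

imwrite("distance result normalize.jpg", imgDistNorm8U);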

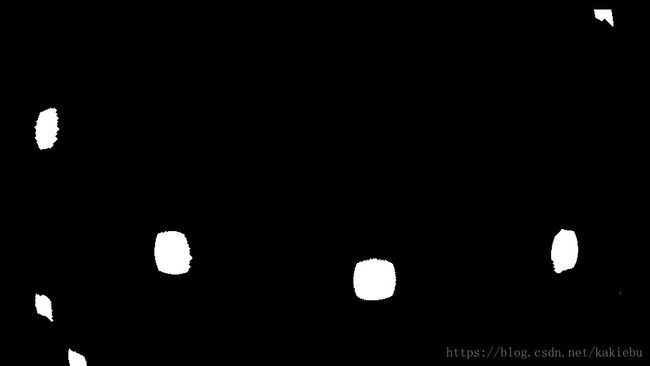

//6. Threshold again to binarize and obtain the markers

threshold(imgDist, imgDist, 0.5, 1, THRESH_BINARY);

imshow("distance result threshold", imgDist);

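// JPEG needs 8-bit data, so scale [0, 1] up to [0, 255] before saving

Mat imgDistThresh8U;

imgDist.convertTo(imgDistThresh8U, CV_8U, 255.0);

imwrite("distance result threshold.jpg", imgDistThresh8U);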

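//7. Erode each peak with erode

// an all-zero structuring element selects no neighbours, so use Mat::ones for a real erosion

Mat kernel3 = Mat::ones(15, 15, CV_8UC1);

erode(imgDist, imgDist, kernel3, Point(-1,-1), 2);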

imshow("distance result erode", imgDist);

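// JPEG needs 8-bit data, so scale [0, 1] up to [0, 255] before saving

Mat imgDistErode8U;

imgDist.convertTo(imgDistErode8U, CV_8U, 255.0);

imwrite("distance result erode.jpg", imgDistErode8U);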

//8. Find contours with findContours

Mat imgDist8U;

imgDist.convertTo(imgDist8U, CV_8U);

vector<vector<Point>> contour;

findContours(imgDist8U, contour, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

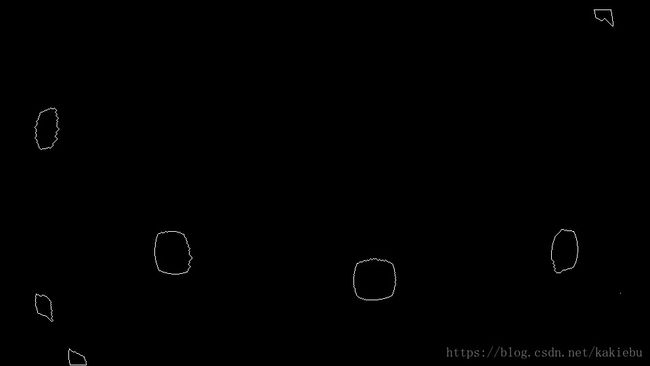

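//9. Draw contours with drawContours

Mat maskers = Mat::zeros(imgDist8U.size(), CV_32SC1);

// give contour i the label i + 1, so that 0 still means "unknown"

for (size_t i=0; i<contour.size(); i++){ drawContours(maskers, contour, static_cast<int>(i), Scalar::all(static_cast<int>(i) + 1)); }

// maskers holds 32-bit labels; scale them into 8 bits for display and saving

Mat maskers8U;

maskers.convertTo(maskers8U, CV_8U, contour.empty() ? 1.0 : 255.0 / contour.size());

imshow("maskers", maskers8U);

imwrite("maskers.jpg", maskers8U);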

//10. Watershed transform with watershed

watershed(src, maskers);

Mat mark = Mat::zeros(maskers.size(), CV_8UC1);

maskers.convertTo(mark, CV_8UC1);

bitwise_not(mark, mark, Mat());

imshow("watershed", mark);

imwrite("watershed.jpg", mark);

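//11. Color each segmented region and output the result

vector<Vec3b> colors;

// one random color per seed

for (size_t i=0; i<contour.size(); i++){

int b = theRNG().uniform(0, 256);

int g = theRNG().uniform(0, 256);

int r = theRNG().uniform(0, 256);

colors.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));

}

Mat dst = Mat::zeros(maskers.size(), CV_8UC3);

for (int row=0; row<maskers.rows; row++){

for (int col=0; col<maskers.cols; col++){

// label written by watershed: -1 on boundaries, otherwise the region number

int index = maskers.at<int>(row, col);

if (index>0 && index <= static_cast<int>(contour.size())){

dst.at<Vec3b>(row,col) = colors[index-1];

} else {

dst.at<Vec3b>(row,col) = Vec3b(0,0,0);

}

}

}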

imshow("dst", dst);

imwrite("dst.jpg", dst);

waitKey();

return 0;

}

black background.jpg
