Aspect-level classification, paper 2: Attention-based LSTM for Aspect-level Sentiment Classification (EMNLP 2016)

Part 2 of the series: Attention-based LSTM for Aspect-level Sentiment Classification

A related code implementation

Source: EMNLP 2016 (as the earlier posts in this series show, many aspect-level papers appeared at this venue).

Problem: aspect-level sentiment classification

I. Aspect-level sentiment analysis

Explanation: given a sentence and an aspect word (target word), aspect-level sentiment classification determines the sentiment polarity the sentence expresses toward that aspect/target.

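Key point: the core problem in aspect-level sentiment analysis is to capture how important each context word is for a specific aspect, and to use this information when building the semantic representation of the sentence.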

The previous paper in this series, Effective LSTMs for Target-Dependent Sentiment Classification, proposes TD-LSTM and TC-LSTM.

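Building on that work, this paper examines the role of the LSTM in detail, introduces an attention mechanism that assigns different importance to the context depending on the aspect, and proposes AE-LSTM, AT-LSTM, and ATAE-LSTM, comparing their performance experimentally.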

II. Introduction and contributions

In this paper, we deal with aspect-level sentiment classification and we find that the sentiment polarity of a sentence is highly dependent on both content and aspect. For example, the sentiment polarity of “Staffs are not that friendly, but the taste covers all.” will be positive if the aspect is food but negative when considering the aspect service. Polarity could be opposite when different aspects are considered.

We explore the potential correlation of aspect and sentiment polarity in aspect-level sentiment classification. In order to capture important information in response to a given aspect, we design an attention-based LSTM.

Contributions:

• We propose attention-based Long Short-Term Memory for aspect-level sentiment classification. The models are able to attend to different parts of a sentence when different aspects are concerned. Results show that the attention mechanism is effective.

• Since aspect plays a key role in this task, we propose two ways to take into account aspect information during attention: one way is to concatenate the aspect vector into the sentence hidden representations for computing attention weights, and another way is to additionally append the aspect vector into the input word vectors.

• Experimental results indicate that our approach can improve the performance compared with several baselines, and further examples demonstrate that the attention mechanism works well for aspect-level sentiment classification.

III. Models:

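The paper uses an attention mechanism to capture how important different parts of the context are for a given aspect, and combines attention with an LSTM to model sentence semantics, thereby addressing aspect-level sentiment analysis.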

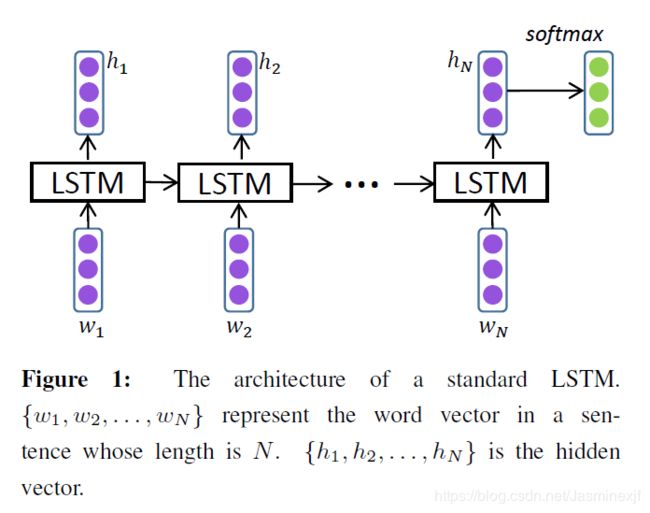

1. Standard LSTM

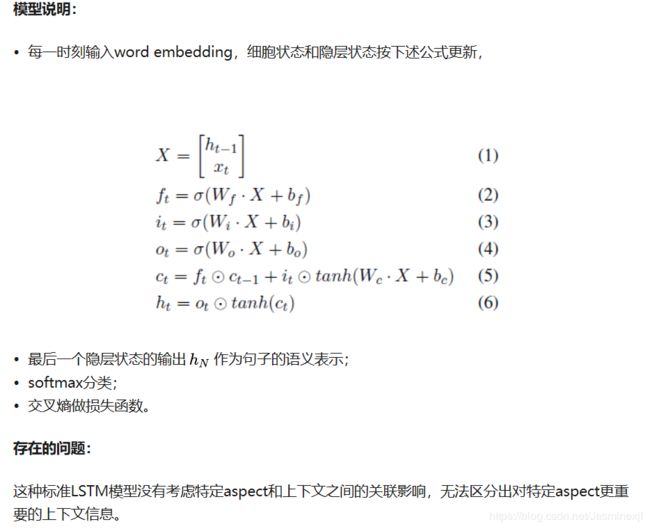
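For reference, the standard LSTM step in the figure can be sketched in NumPy as below. This is a minimal sketch assuming the common formulation in which every gate reads the concatenation [h_{t-1}; x_t]; the function names `lstm_step` and `lstm_forward` and the gate stacking order inside `W` are our own illustrative choices, not taken from the paper's code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step on the concatenated input [h_{t-1}; x_t].

    W has shape (4*d, d + d_in) and b has shape (4*d,); the four row blocks
    hold the forget, input, output and candidate pre-activations (this
    stacking order is an implementation choice, not taken from the paper).
    """
    d = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[:d])            # forget gate f_t
    i = sigmoid(z[d:2 * d])       # input gate i_t
    o = sigmoid(z[2 * d:3 * d])   # output gate o_t
    c_hat = np.tanh(z[3 * d:])    # candidate cell state
    c = f * c_prev + i * c_hat    # new cell state c_t
    h = o * np.tanh(c)            # new hidden state h_t
    return h, c

def lstm_forward(X, W, b, d):
    """Run the LSTM over X of shape (N, d_in); return H = [h_1, ..., h_N] of shape (d, N)."""
    h, c = np.zeros(d), np.zeros(d)
    states = []
    for x_t in X:
        h, c = lstm_step(x_t, h, c, W, b)
        states.append(h)
    return np.stack(states, axis=1)

# Toy usage with random weights drawn from U(-0.01, 0.01).
rng = np.random.default_rng(0)
N, d_in, d = 5, 8, 4
W = rng.uniform(-0.01, 0.01, size=(4 * d, d + d_in))
b = np.zeros(4 * d)
H = lstm_forward(rng.normal(size=(N, d_in)), W, b, d)
print(H.shape)  # (4, 5)
```

Stacking the hidden states column-wise as a d × N matrix H matches how the attention layers below consume them.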

2. Attention-based LSTM (AT-LSTM)

The architecture of AT-LSTM is shown in the figure below.

Model description:

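At each time step a word embedding is fed in and the LSTM state is updated. The hidden states are then combined with the aspect embedding (which is trained jointly as a model parameter) to compute attention weights, giving r, the weighted representation of the sentence for the given aspect. The formulas are as follows: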

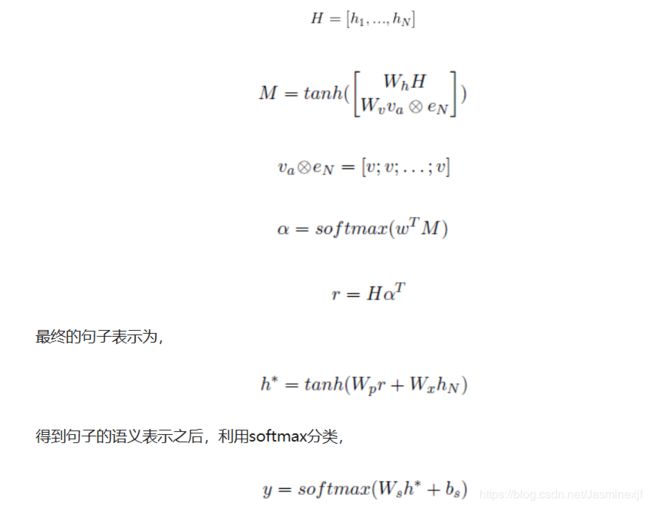
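A minimal NumPy sketch of the AT-LSTM attention step, assuming the formulation as we read it from the paper: M = tanh([W_h H; W_v v_a ⊗ e_N]), α = softmax(wᵀM), r = Hαᵀ, h* = tanh(W_p r + W_x h_N), followed by a softmax over polarity classes. It takes the LSTM hidden states H (shape d × N) as given; `at_lstm_head` and the parameter dictionary `P` are hypothetical names, and the random weights serve only to check shapes.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # shift for numerical stability
    return e / e.sum()

def at_lstm_head(H, v_a, P):
    """AT-LSTM attention and output layer, applied to precomputed LSTM states.

    H:   (d, N) hidden states [h_1, ..., h_N]
    v_a: (d_a,) aspect embedding
    P:   dict of weights W_h (d, d), W_v (d_a, d_a), w (d + d_a,),
         W_p (d, d), W_x (d, d), W_s (C, d), b_s (C,)
    """
    N = H.shape[1]
    V = np.tile(v_a[:, None], (1, N))                     # v_a ⊗ e_N: v_a repeated N times
    M = np.tanh(np.vstack([P["W_h"] @ H, P["W_v"] @ V]))  # (d + d_a, N)
    alpha = softmax(P["w"] @ M)                           # (N,) attention weights
    r = H @ alpha                                         # (d,) weighted representation r = H α^T
    h_star = np.tanh(P["W_p"] @ r + P["W_x"] @ H[:, -1])  # combine with last hidden state h_N
    y = softmax(P["W_s"] @ h_star + P["b_s"])             # (C,) polarity distribution
    return alpha, r, y

rng = np.random.default_rng(0)
d, d_a, N, C = 4, 3, 5, 3
shapes = {"W_h": (d, d), "W_v": (d_a, d_a), "w": (d + d_a,),
          "W_p": (d, d), "W_x": (d, d), "W_s": (C, d), "b_s": (C,)}
P = {k: rng.uniform(-0.1, 0.1, size=s) for k, s in shapes.items()}
H = np.tanh(rng.normal(size=(d, N)))   # stand-in for LSTM outputs
alpha, r, y = at_lstm_head(H, rng.normal(size=d_a), P)
print(alpha.round(3), y.sum())
```

Setting `w` to zeros makes every α_t equal to 1/N, so r reduces to the mean hidden state; this is a quick sanity check that the attention only re-weights the hidden states.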

3. Attention-based LSTM with Aspect Embedding (ATAE-LSTM)

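AT-LSTM brings in aspect information only when computing the attention weights. To make fuller use of the aspect, the authors build ATAE-LSTM on top of AT-LSTM: the aspect embedding is combined with the word embeddings at the input, and aspect information is still used when computing the attention weights.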

Model description:

Building on AT-LSTM, the aspect embedding is appended to each word embedding to form the input; the aspect embedding is still trained jointly as a model parameter.
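The input-side change that ATAE-LSTM makes can be sketched as follows, assuming word vectors are stored row-wise in an N × d_w matrix; `atae_inputs` is a hypothetical helper name. The aspect embedding is appended to every word vector before the sequence enters the LSTM, while the attention layer on top stays as in AT-LSTM.

```python
import numpy as np

def atae_inputs(word_embs, v_a):
    """Append the aspect embedding v_a to every word embedding: w_t -> [w_t; v_a].

    word_embs: (N, d_w) word vectors of the sentence; v_a: (d_a,) aspect embedding.
    Returns an (N, d_w + d_a) matrix that is fed to the LSTM instead of word_embs.
    """
    N = word_embs.shape[0]
    return np.hstack([word_embs, np.tile(v_a, (N, 1))])

rng = np.random.default_rng(0)
words = rng.normal(size=(5, 4))   # 5 tokens, d_w = 4
v_a = rng.normal(size=3)          # d_a = 3
X = atae_inputs(words, v_a)
print(X.shape)  # (5, 7)
```

With 300-dimensional word vectors and aspect embeddings, as in the experiments, the LSTM input becomes 600-dimensional, so the input-to-gate weight matrices grow accordingly.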

IV. Model training:

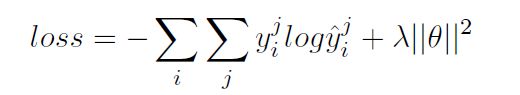

The model is trained with the AdaGrad optimizer by minimizing a cross-entropy loss with an L2 regularization term.
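A sketch of the training objective and optimizer, assuming the usual form: cross-entropy over one-hot polarity labels plus an L2 penalty λ‖θ‖² (the experiments use λ = 0.001). `loss_with_l2` and `AdaGrad` are hypothetical names written for illustration, not the authors' implementation, and the default learning rate of 0.01 is an assumption. Gradients are taken as given; in practice an autodiff framework would compute them.

```python
import numpy as np

def loss_with_l2(y_true, y_pred, params, lam):
    """Batch cross-entropy plus an L2 penalty lam * (sum of squared parameters).

    y_true: (B, C) one-hot gold labels; y_pred: (B, C) predicted probabilities;
    params: list of weight arrays theta.
    """
    ce = -np.sum(y_true * np.log(y_pred + 1e-12))   # small constant guards against log(0)
    l2 = lam * sum(np.sum(p ** 2) for p in params)
    return ce + l2

class AdaGrad:
    """Plain AdaGrad: each coordinate's step is scaled by 1 / sqrt(sum of its past squared gradients)."""

    def __init__(self, lr=0.01, eps=1e-8):
        self.lr, self.eps = lr, eps
        self.sq_sums = {}   # parameter name -> accumulated squared gradients

    def step(self, name, param, grad):
        acc = self.sq_sums.get(name, np.zeros_like(param)) + grad ** 2
        self.sq_sums[name] = acc
        return param - self.lr * grad / (np.sqrt(acc) + self.eps)

y_true = np.array([[1.0, 0.0, 0.0]])
y_pred = np.array([[0.5, 0.25, 0.25]])
print(loss_with_l2(y_true, y_pred, [np.array([1.0, 2.0])], lam=0.001))
opt = AdaGrad(lr=0.01)
print(opt.step("w", np.array([1.0]), np.array([2.0])))
```

Because AdaGrad's accumulator only grows, the effective step for each weight shrinks over training; repeated identical gradients produce steps of lr/√1, lr/√2, lr/√3, and so on.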

V. Experiments

1. Data

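The experiments use the customer-review datasets from SemEval 2014 Task 4; their statistics are shown in the table below.

Each review comes with a list of aspects and a corresponding list of sentiment polarities, one per aspect.

Example: The restaurant was too expensive. aspect: {price}, polarity: {negative}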
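Since the models process one aspect at a time, a review with several aspects is typically split into one instance per (sentence, aspect) pair. A minimal sketch, assuming each review is stored as a (sentence, aspects, polarities) tuple; this record layout and `expand_by_aspect` are hypothetical and not the official SemEval file format.

```python
def expand_by_aspect(reviews):
    """Split (sentence, aspects, polarities) records into one (sentence, aspect, polarity) instance per aspect."""
    instances = []
    for sentence, aspects, polarities in reviews:
        if len(aspects) != len(polarities):
            raise ValueError(f"aspect/polarity count mismatch in: {sentence!r}")
        for aspect, polarity in zip(aspects, polarities):
            instances.append((sentence, aspect, polarity))
    return instances

reviews = [
    ("The restaurant was too expensive.", ["price"], ["negative"]),
    ("Staffs are not that friendly, but the taste covers all.",
     ["food", "service"], ["positive", "negative"]),
]
for inst in expand_by_aspect(reviews):
    print(inst)
```

The second review shows why the split matters: the same sentence becomes a positive example for food and a negative example for service.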

2. Hyperparameter settings:

Word embeddings are initialized with GloVe; all other parameters are initialized by sampling from a uniform distribution U(-ε, ε).

The dimensions of the word vectors and the aspect embeddings, and the size of the hidden layer, are all 300.

batch_size = 25; the L2 regularization weight is 0.001; learning rate is 0.0;
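The settings above can be collected into a small config object. This is a sketch under stated assumptions: the value of ε in U(-ε, ε) is not given in these notes and the learning rate listed above is incomplete, so `init_eps = 0.01` and `learning_rate = 0.01` are placeholders, not confirmed values. `ATAEConfig` and `init_uniform` are hypothetical names.

```python
from dataclasses import dataclass
import numpy as np

@dataclass(frozen=True)
class ATAEConfig:
    word_dim: int = 300          # GloVe-initialized word vectors
    aspect_dim: int = 300        # aspect embeddings
    hidden_dim: int = 300        # LSTM hidden size
    batch_size: int = 25
    l2_weight: float = 0.001
    init_eps: float = 0.01       # ASSUMED value of eps in U(-eps, eps)
    learning_rate: float = 0.01  # ASSUMED AdaGrad initial learning rate

    @property
    def atae_input_dim(self) -> int:
        # ATAE-LSTM feeds [w_t; v_a] into the LSTM.
        return self.word_dim + self.aspect_dim

def init_uniform(shape, eps, rng):
    """Sample a non-embedding parameter from U(-eps, eps)."""
    return rng.uniform(-eps, eps, size=shape)

cfg = ATAEConfig()
rng = np.random.default_rng(0)
W_h = init_uniform((cfg.hidden_dim, cfg.hidden_dim), cfg.init_eps, rng)
print(cfg.atae_input_dim, W_h.shape)  # 600 (300, 300)
```

Freezing the dataclass keeps a run's hyperparameters from being mutated mid-training; the derived `atae_input_dim` makes the 600-dimensional ATAE-LSTM input explicit.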

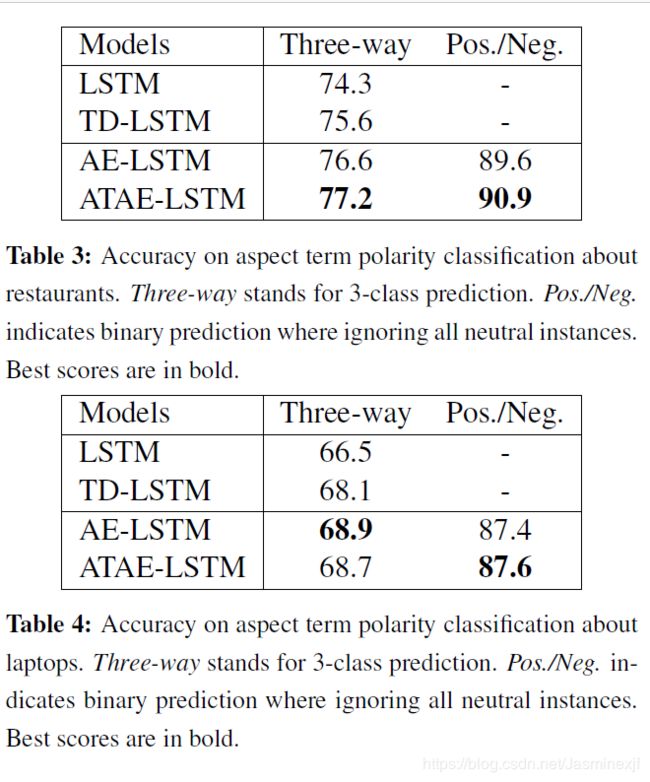

3. Results:

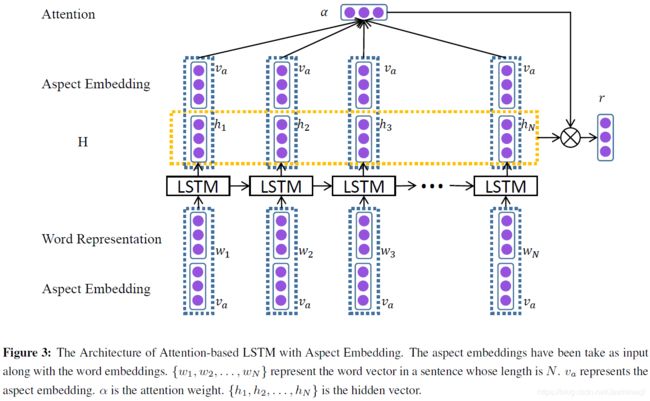

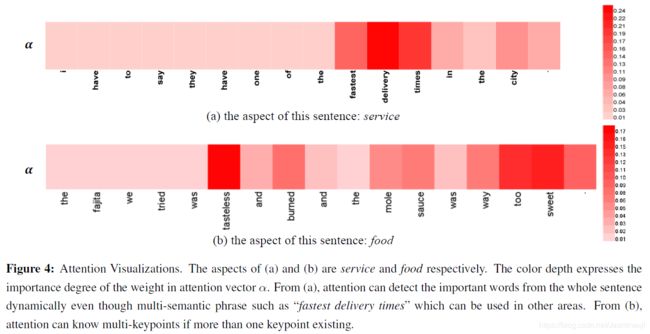

Attention visualization (qualitative analysis): the attention weights are visualized with the tool Heml.

Observations:

In panel (a), the input sentence is "I have to say they have one of the fastest delivery times in the city ." and the aspect is "service"; "fastest delivery times" receives high weight.

In panel (b), the input sentence is "The fajita we tried was tasteless and burned and the mole sauce was way too sweet." and the aspect is "food"; "tasteless" and "too sweet" receive high weight.

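VI. Conclusions:

1. The paper combines attention with an LSTM, using attention to capture the context that matters most for each aspect, and achieves good results on the experimental datasets for aspect-level sentiment analysis.

2. In the current models, each aspect must be fed in and processed separately; how to use attention to handle multiple aspects in a single pass is a direction worth exploring.

Note: some earlier experiments define aspect/target word sets on top of existing datasets. For a restaurant dataset, for example, one can define aspect words such as food, service, and cleanliness and then detect the sentiment toward each.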