Using Keras to Define a Simple Neural Network for Recognizing MNIST Handwritten Digits

Keras Deep Learning in Practice (updated occasionally)

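Keras provides the utilities needed to load the dataset and split it into a training set, X_train, used to tune the network, and a test set, X_test, used to evaluate its performance. The data is converted to float32, the type suited to GPU computation, and normalized to [0, 1]. In addition, we load the true labels into y_train and y_test and one-hot encode them into Y_train and Y_test.

Keras configuration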

https://keras-cn.readthedocs.io/en/latest/preprocessing/image/

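data_format: a string, either "channels_first" or "channels_last", specifying the position of the channel dimension of an image.

This parameter replaces image_dim_ordering from Keras 1.x: "channels_last" corresponds to the old "tf" and "channels_first" to the old "th".

For a 128x128 RGB image, "channels_first" organizes the data as (3, 128, 128), whereas "channels_last" organizes it as (128, 128, 3).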
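To make the two layouts concrete, here is a minimal NumPy sketch (independent of Keras) that builds a dummy batch of 128x128 RGB images in "channels_last" order and transposes it to "channels_first" and back. The batch size of 4 is an arbitrary illustrative choice; Keras adds this batch axis in front of the per-image shapes described above.

```python
import numpy as np

# Dummy batch of 4 RGB images, 128x128, "channels_last": (batch, height, width, channels)
batch_last = np.zeros((4, 128, 128, 3), dtype=np.float32)

# Move the channel axis to position 1 to get "channels_first": (batch, channels, height, width)
batch_first = np.transpose(batch_last, (0, 3, 1, 2))

# Transposing the other way restores the original layout
batch_back = np.transpose(batch_first, (0, 2, 3, 1))

print(batch_last.shape)   # (4, 128, 128, 3)
print(batch_first.shape)  # (4, 3, 128, 128)
```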

Its default value is the one set in ~/.keras/keras.json; if that has never been set, it is "channels_last".

```json
{
    "floatx": "float32",
    "epsilon": 1e-07,
    "backend": "tensorflow",
    "image_data_format": "channels_last"
}
```
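The file is plain JSON, so it can be inspected with Python's standard json module. The sketch below uses two hypothetical helpers (image_data_format_from_config and read_keras_config are illustrative names, not Keras APIs): one reads the image_data_format setting and falls back to "channels_last" when the key is missing, mirroring the default described above, and the other loads ~/.keras/keras.json if it exists. Inside a running program, Keras 2.x also exposes the active value through keras.backend.image_data_format().

```python
import json
import os


def image_data_format_from_config(text):
    """Hypothetical helper: return image_data_format from keras.json contents."""
    config = json.loads(text)
    # Fall back to the default when the key is absent
    return config.get("image_data_format", "channels_last")


def read_keras_config(path="~/.keras/keras.json"):
    """Hypothetical helper: return the parsed config, or None if the file does not exist."""
    full_path = os.path.expanduser(path)
    if not os.path.exists(full_path):
        return None
    with open(full_path) as f:
        return json.load(f)


example = '''{
    "floatx": "float32",
    "epsilon": 1e-07,
    "backend": "tensorflow",
    "image_data_format": "channels_last"
}'''

print(image_data_format_from_config(example))                     # channels_last
print(image_data_format_from_config('{"backend": "tensorflow"}'))  # channels_last
print(read_keras_config())  # the local config dict, or None if Keras never created it
```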

Finding where the mnist file lives

In your IDE, click on mnist in the line from keras.datasets import mnist to jump to mnist.py.
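Besides reading mnist.py, it helps to know where the downloaded data ends up. To my understanding, mnist.load_data() downloads mnist.npz once and caches it under ~/.keras/datasets/ (the default Keras cache directory), and the archive stores the arrays under the keys x_train, y_train, x_test and y_test. The sketch below assumes that location and layout and loads the cached file with NumPy alone; load_cached_mnist is a hypothetical helper name.

```python
import os

import numpy as np


def load_cached_mnist(path="~/.keras/datasets/mnist.npz"):
    """Hypothetical helper: load MNIST arrays from the Keras cache file.

    Assumes the archive contains x_train, y_train, x_test and y_test,
    the keys that keras.datasets.mnist reads.
    """
    with np.load(os.path.expanduser(path)) as f:
        return (f["x_train"], f["y_train"]), (f["x_test"], f["y_test"])


if __name__ == "__main__":
    cache = os.path.expanduser("~/.keras/datasets/mnist.npz")
    if os.path.exists(cache):
        (x_train, y_train), (x_test, y_test) = load_cached_mnist(cache)
        print(x_train.shape, x_test.shape)  # (60000, 28, 28) (10000, 28, 28)
    else:
        print("mnist.npz is not cached yet; call mnist.load_data() once first")
```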

keras_MINST_V1.py

from __future__ import print_function

import numpy as np

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers.core import Dense, Activation

from keras.optimizers import SGD

from keras.utils import np_utils

import matplotlib.pyplot as plt

np.random.seed(1671) # for reproducibility

# network and training

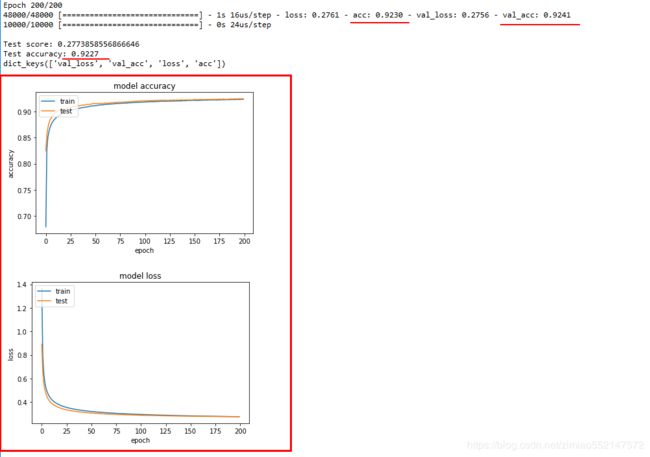

NB_EPOCH = 200

BATCH_SIZE = 128

VERBOSE = 1

NB_CLASSES = 10 # number of outputs = number of digits

OPTIMIZER = SGD() # SGD optimizer, explained later in this chapter

N_HIDDEN = 128

VALIDATION_SPLIT=0.2 # how much TRAIN is reserved for VALIDATION

# data: shuffled and split between train and test sets

#

(X_train, y_train), (X_test, y_test) = mnist.load_data()

#X_train is 60000 rows of 28x28 values --> reshaped in 60000 x 784

RESHAPED = 784

#

X_train = X_train.reshape(60000, RESHAPED)

X_test = X_test.reshape(10000, RESHAPED)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

# normalize

#

X_train /= 255

X_test /= 255

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

# convert class vectors to binary class matrices

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)

# 10 outputs

# final stage is softmax

model = Sequential()

model.add(Dense(NB_CLASSES, input_shape=(RESHAPED,)))

model.add(Activation('softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',

optimizer=OPTIMIZER,

metrics=['accuracy'])

history = model.fit(X_train, Y_train,

batch_size=BATCH_SIZE, epochs=NB_EPOCH,

verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

# list all data in history

print(history.history.keys())

# summarize history for accuracy

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()执行情况
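The preprocessing in the script above (flatten each 28x28 image to 784 values, cast to float32, scale to [0, 1], one-hot encode the labels) can be reproduced with NumPy alone, which is handy for checking shapes without downloading MNIST. This is a sketch on small random stand-in data; np.eye(NB_CLASSES)[labels] is used here as a NumPy equivalent of to_categorical for integer labels, not as Keras' own implementation.

```python
import numpy as np

NB_CLASSES = 10
RESHAPED = 28 * 28  # 784

# Stand-in for mnist.load_data(): 5 fake uint8 images and their integer labels
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28)).astype(np.uint8)
labels = np.array([3, 0, 9, 3, 7])

# Flatten each image to a 784-long vector and scale pixels to [0, 1]
x = images.reshape(images.shape[0], RESHAPED).astype("float32") / 255

# One-hot encode: row i of the identity matrix encodes class i
y = np.eye(NB_CLASSES, dtype="float32")[labels]

print(x.shape, x.dtype)  # (5, 784) float32
print(y[0])              # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
```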

keras_MINST_V2.py

from __future__ import print_function

import numpy as np

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers.core import Dense, Activation

from keras.optimizers import SGD

from keras.utils import np_utils

import matplotlib.pyplot as plt

np.random.seed(1671) # for reproducibility

# network and training

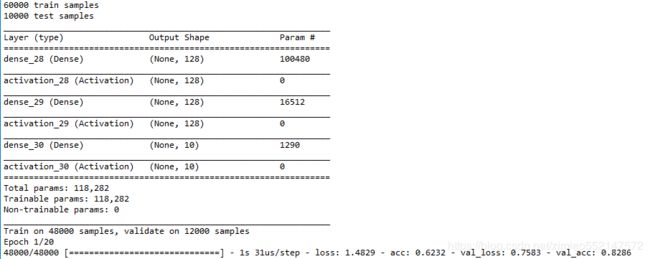

NB_EPOCH = 20

BATCH_SIZE = 128

VERBOSE = 1

NB_CLASSES = 10 # number of outputs = number of digits

OPTIMIZER = SGD() # optimizer, explained later in this chapter

N_HIDDEN = 128

VALIDATION_SPLIT=0.2 # how much TRAIN is reserved for VALIDATION

# data: shuffled and split between train and test sets

(X_train, y_train), (X_test, y_test) = mnist.load_data()

#X_train is 60000 rows of 28x28 values --> reshaped in 60000 x 784

RESHAPED = 784

#

X_train = X_train.reshape(60000, RESHAPED)

X_test = X_test.reshape(10000, RESHAPED)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

# normalize

X_train /= 255

X_test /= 255

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

# convert class vectors to binary class matrices

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)

# M_HIDDEN hidden layers

# 10 outputs

# final stage is softmax

model = Sequential()

model.add(Dense(N_HIDDEN, input_shape=(RESHAPED,)))

model.add(Activation('relu'))

model.add(Dense(N_HIDDEN))

model.add(Activation('relu'))

model.add(Dense(NB_CLASSES))

model.add(Activation('softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',

optimizer=OPTIMIZER,

metrics=['accuracy'])

history = model.fit(X_train, Y_train,

batch_size=BATCH_SIZE, epochs=NB_EPOCH,

verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

# list all data in history

print(history.history.keys())

# summarize history for accuracy

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()执行情况
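model.summary() reports the number of trainable parameters. For a Dense layer that is inputs × units weights plus one bias per unit, so the totals can be checked by hand. The dense_params helper below is a hypothetical illustration, not a Keras function; Activation layers (and Dropout layers) contribute no parameters.

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out) of a fully connected layer."""
    return n_in * n_out + n_out


RESHAPED, N_HIDDEN, NB_CLASSES = 784, 128, 10

# V1: a single Dense layer from 784 inputs straight to 10 outputs
v1_total = dense_params(RESHAPED, NB_CLASSES)

# V2: 784 -> 128 -> 128 -> 10
v2_layers = [
    dense_params(RESHAPED, N_HIDDEN),    # 100480
    dense_params(N_HIDDEN, N_HIDDEN),    # 16512
    dense_params(N_HIDDEN, NB_CLASSES),  # 1290
]
v2_total = sum(v2_layers)

print(v1_total)  # 7850
print(v2_total)  # 118282
```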

keras_MINST_V3.py

from __future__ import print_function

import numpy as np

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation

from keras.optimizers import SGD

from keras.utils import np_utils

import matplotlib.pyplot as plt

np.random.seed(1671) # for reproducibility

# network and training

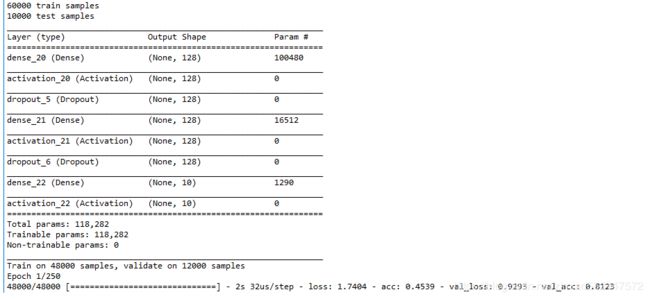

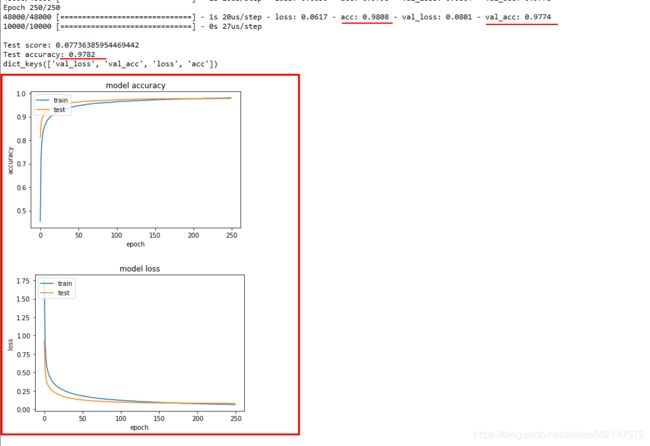

NB_EPOCH = 250

BATCH_SIZE = 128

VERBOSE = 1

NB_CLASSES = 10 # number of outputs = number of digits

OPTIMIZER = SGD() # optimizer, explained later in this chapter

N_HIDDEN = 128

VALIDATION_SPLIT=0.2 # how much TRAIN is reserved for VALIDATION

DROPOUT = 0.3

# data: shuffled and split between train and test sets

(X_train, y_train), (X_test, y_test) = mnist.load_data()

#X_train is 60000 rows of 28x28 values --> reshaped in 60000 x 784

RESHAPED = 784

#

X_train = X_train.reshape(60000, RESHAPED)

X_test = X_test.reshape(10000, RESHAPED)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

# normalize

X_train /= 255

X_test /= 255

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

# convert class vectors to binary class matrices

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)

# M_HIDDEN hidden layers

# 10 outputs

# final stage is softmax

model = Sequential()

model.add(Dense(N_HIDDEN, input_shape=(RESHAPED,)))

model.add(Activation('relu'))

model.add(Dropout(DROPOUT))

model.add(Dense(N_HIDDEN))

model.add(Activation('relu'))

model.add(Dropout(DROPOUT))

model.add(Dense(NB_CLASSES))

model.add(Activation('softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',

optimizer=OPTIMIZER,

metrics=['accuracy'])

history = model.fit(X_train, Y_train,

batch_size=BATCH_SIZE, epochs=NB_EPOCH,

verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

# list all data in history

print(history.history.keys())

# summarize history for accuracy

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()执行情况
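Dropout(DROPOUT) randomly zeroes a fraction DROPOUT of its inputs, and only during training. Keras uses so-called inverted dropout: the surviving activations are scaled by 1 / (1 - rate) at training time, so the layer is simply the identity at inference. The NumPy sketch below illustrates that idea; it is not Keras' own code, and the all-ones input is an arbitrary choice that makes the effect easy to see.

```python
import numpy as np


def inverted_dropout(x, rate, rng, training=True):
    """Zero each element with probability `rate`; scale survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return x  # at inference the layer passes inputs through unchanged
    keep_mask = rng.random(x.shape) >= rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(1671)
activations = np.ones((1000, 128), dtype=np.float32)
dropped = inverted_dropout(activations, rate=0.3, rng=rng)

print((dropped == 0).mean())  # close to 0.3
print(dropped.mean())         # close to 1.0: the expected activation is preserved
print(np.array_equal(inverted_dropout(activations, 0.3, rng, training=False), activations))  # True
```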

keras_MINST_V4.py

```python
from __future__ import print_function

import numpy as np
from keras.datasets import mnist
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation
from keras.optimizers import RMSprop
from keras.utils import to_categorical
import matplotlib.pyplot as plt

np.random.seed(1671)  # for reproducibility (seeds NumPy's RNG only)

# network and training
NB_EPOCH = 20
BATCH_SIZE = 128
VERBOSE = 1
NB_CLASSES = 10  # number of outputs = number of digits
OPTIMIZER = RMSprop()  # RMSprop optimizer, explained in this chapter
N_HIDDEN = 128
VALIDATION_SPLIT = 0.2  # how much TRAIN is reserved for VALIDATION
DROPOUT = 0.3

# data: shuffled and split between train and test sets
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# X_train is 60000 rows of 28x28 values --> reshaped in 60000 x 784
RESHAPED = 784
X_train = X_train.reshape(60000, RESHAPED)
X_test = X_test.reshape(10000, RESHAPED)
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')

# normalize pixel values from [0, 255] to [0, 1]
X_train /= 255
X_test /= 255
print(X_train.shape[0], 'train samples')
print(X_test.shape[0], 'test samples')

# convert class vectors to binary class matrices (one-hot encoding)
Y_train = to_categorical(y_train, NB_CLASSES)
Y_test = to_categorical(y_test, NB_CLASSES)

# 2 hidden layers of N_HIDDEN units each, each followed by dropout
# 10 outputs
# final stage is softmax
model = Sequential()
model.add(Dense(N_HIDDEN, input_shape=(RESHAPED,)))
model.add(Activation('relu'))
model.add(Dropout(DROPOUT))
model.add(Dense(N_HIDDEN))
model.add(Activation('relu'))
model.add(Dropout(DROPOUT))
model.add(Dense(NB_CLASSES))
model.add(Activation('softmax'))
model.summary()

model.compile(loss='categorical_crossentropy',
              optimizer=OPTIMIZER,
              metrics=['accuracy'])

history = model.fit(X_train, Y_train,
                    batch_size=BATCH_SIZE, epochs=NB_EPOCH,
                    verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)
print("\nTest score:", score[0])  # score[0] is the test loss
print('Test accuracy:', score[1])

# list all data in history
print(history.history.keys())
# Keras >= 2.3 logs 'accuracy'/'val_accuracy'; older versions log 'acc'/'val_acc'
acc_key = 'accuracy' if 'accuracy' in history.history else 'acc'

# summarize history for accuracy
plt.plot(history.history[acc_key])
plt.plot(history.history['val_' + acc_key])
plt.title('model accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.show()

# summarize history for loss
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.show()
```

Execution results:
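V4 replaces SGD with RMSprop, which divides each gradient step by a running root-mean-square of recent gradients, so every weight effectively gets its own step size. The sketch below applies the textbook RMSprop update to a one-dimensional quadratic. rho = 0.9 and epsilon = 1e-7 match what I understand to be Keras' defaults; the learning rate of 0.01 is deliberately larger than Keras' default of 0.001 so the toy problem converges in a couple of thousand steps. This is an illustration of the update rule, not Keras' implementation.

```python
import numpy as np


def rmsprop_minimize(grad_fn, w0, learning_rate=0.01, rho=0.9, epsilon=1e-7, steps=2000):
    """Minimize a scalar function with the RMSprop update rule.

    v <- rho * v + (1 - rho) * g**2
    w <- w - learning_rate * g / (sqrt(v) + epsilon)
    """
    w = float(w0)
    v = 0.0  # running average of squared gradients
    for _ in range(steps):
        g = grad_fn(w)
        v = rho * v + (1.0 - rho) * g * g
        w -= learning_rate * g / (np.sqrt(v) + epsilon)
    return w


# f(w) = (w - 3)^2 has its minimum at w = 3; its gradient is 2 * (w - 3)
w_final = rmsprop_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(round(float(w_final), 2))  # close to 3.0
```

Because the step is normalized by the gradient's recent magnitude, each update here moves w by roughly learning_rate regardless of how steep the function is, which is why RMSprop often makes faster early progress than plain SGD with the same learning rate.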