Time Series Forecasting in Python: A Simple Neural Network

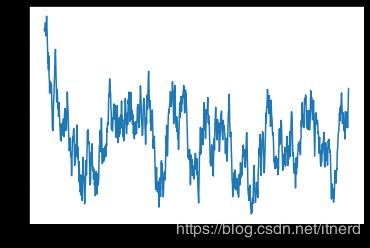

Generating a time series

$$y_t = \alpha + \beta y_{t-1} + \epsilon_t$$
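As a quick sanity check on what this process should look like: if |β| < 1 and the noise has mean zero and variance σ², the series settles around a long-run mean of α / (1 − β) with variance σ² / (1 − β²). The sketch below plugs in the parameter values used in this post and assumes Uniform(-1, 1) noise, as in the simulation code; it is only an illustration of the formulas, not part of the original workflow.

```python
# Long-run mean and variance of y_t = alpha + beta * y_{t-1} + eps_t,
# assuming |beta| < 1 and eps_t ~ Uniform(-1, 1) (mean 0, variance 1/3).
alpha = -0.25
beta = 0.95

noise_var = (1 - (-1)) ** 2 / 12              # variance of Uniform(a, b) is (b - a)^2 / 12
stationary_mean = alpha / (1 - beta)          # mu = alpha / (1 - beta)
stationary_var = noise_var / (1 - beta ** 2)  # sigma^2 / (1 - beta^2)

print(round(stationary_mean, 4))  # -5.0
print(round(stationary_var, 4))   # 3.4188
```

So, starting from y_0 = 1, the simulated series should drift down towards roughly -5 and then fluctuate around that level with a standard deviation of about 1.85.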

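import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

seed = 2019
np.random.seed(seed)

y_0 = 1
alpha = -0.25
beta = 0.95
num = 1000

# Simulate y_t = alpha + beta * y_{t-1} + eps_t with eps_t ~ Uniform(-1, 1)
values = []
for i in range(num):
    y_t = alpha + (beta * y_0) + np.random.uniform(-1, 1)
    values.append(y_t)
    y_0 = y_t
y = pd.Series(values)

plt.plot(y)
plt.show()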

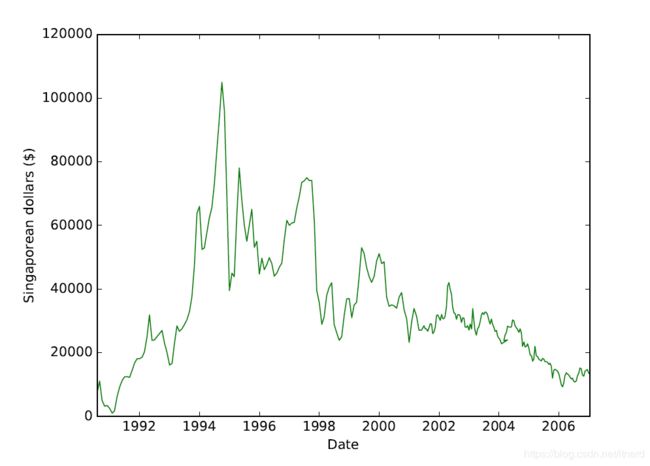

Downloading the dataset

Certificate of Entitlement price:

If you have lived in Singapore, you will know that anyone who wants to register a new vehicle must first obtain a Certificate of Entitlement (COE). It gives the holder the right to vehicle ownership and access to the very limited road space in the tropical island city-state. The number of COEs issued is limited and they can only be obtained through an open bidding system.

from urllib import request

url = "http://ww2.amstat.org/publications/jse/datasets/COE.xls"
loc = "./COE.xls"
request.urlretrieve(url, loc)

# Reading .xls files with pandas requires the xlrd package
Excel_file = pd.ExcelFile(loc)
print(Excel_file.sheet_names)
'''
['COE data']
'''

spreadsheet = Excel_file.parse('COE data')
print(spreadsheet.info())
'''
RangeIndex: 265 entries, 0 to 264
Data columns (total 6 columns):
DATE      265 non-null datetime64[ns]
COE$      265 non-null float64
COE$_1    265 non-null float64
#Bids     265 non-null int64
Quota     265 non-null int64
Open?     265 non-null int64
dtypes: datetime64[ns](1), float64(2), int64(3)
memory usage: 12.5 KB
None
'''

data = spreadsheet['COE$']
print(data.head())
'''
0     7400.0
1    11100.0
2     5002.0
3     3170.0
4     3410.0
Name: COE$, dtype: float64
'''

# Rows 194, 198 and 202 are dated 2002 although their neighbours are from 2004
print(spreadsheet['DATE'][193:204])
'''
193   2004-02-01
194   2002-02-15
195   2004-03-01
196   2004-03-15
197   2004-04-01
198   2002-04-15
199   2004-05-01
200   2004-05-15
201   2004-06-01
202   2002-06-15
203   2004-07-01
Name: DATE, dtype: datetime64[ns]
'''

Fixing the data errors and saving

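# Correct the three mis-dated rows (2002 should be 2004)
spreadsheet.at[194, 'DATE'] = pd.Timestamp('2004-02-15')
spreadsheet.at[198, 'DATE'] = pd.Timestamp('2004-04-15')
spreadsheet.at[202, 'DATE'] = pd.Timestamp('2004-06-15')

# The row index is written as the first CSV column; it is dropped again when the file is read back
loc = "COE.csv"
spreadsheet.to_csv(loc)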

1. Forecasting from the series' own history only

Normalizing the data

from sklearn import preprocessing

x = data
scaler = preprocessing.MinMaxScaler(feature_range=(0, 1))
print(scaler)
'''
MinMaxScaler(copy=True, feature_range=(0, 1))
'''
x = np.array(x).reshape((len(x),))  # shape (265,)
x = np.log(x)                       # log transform to stabilise the variance
x = x.reshape(-1, 1)                # shape (265, 1); MinMaxScaler expects a 2-D array
x = scaler.fit_transform(x)
x = x.reshape(-1)                   # shape (265,)
print(round(x.min(), 2), round(x.max(), 2))
'''
0.0 1.0
'''

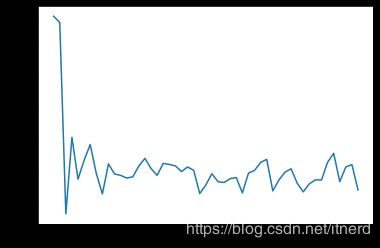
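Because the forecasts later have to be mapped back to prices, it helps to see that the log plus min-max transformation is fully reversible. The following is a minimal hand-rolled sketch using only NumPy; for a single column and feature_range=(0, 1) the formula (v − min) / (max − min) is what MinMaxScaler computes. The five prices are the ones printed by data.head() earlier in this post.

```python
import numpy as np

# First five COE prices shown earlier in this post
prices = np.array([7400.0, 11100.0, 5002.0, 3170.0, 3410.0])

log_prices = np.log(prices)
lo, hi = log_prices.min(), log_prices.max()

# Forward: log, then min-max scale to [0, 1]
scaled = (log_prices - lo) / (hi - lo)

# Backward: undo the scaling first, then undo the log
recovered = np.exp(scaled * (hi - lo) + lo)

print(scaled.min(), scaled.max())
print(np.allclose(recovered, prices))
```

The order matters on the way back: the scaling is inverted before the exponential is applied, which is the same order the post uses when it calls scaler.inverse_transform followed by np.exp.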

Partial AutoCorrelation Function

from statsmodels.tsa.stattools import pacf

x_pacf = pacf(x, nlags=50, method='ols')
plt.plot(x_pacf)
plt.show()

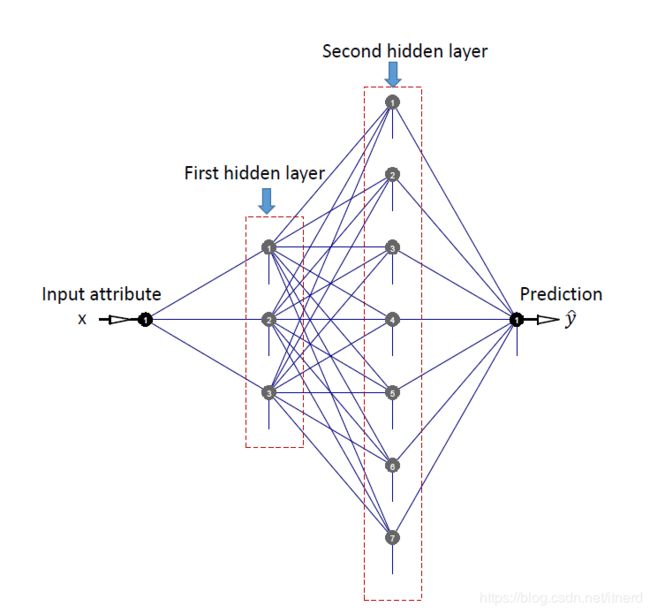
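To make the plot easier to read, here is a rough NumPy-only sketch of what a regression-based partial autocorrelation measures: the lag-k value is the coefficient on y_{t-k} when y_t is regressed on an intercept and its first k lags. The helper name pacf_ols and the simulated AR(1) series (coefficient 0.7) are assumptions made for this illustration; the sketch follows the general idea of a regression-based estimate and is not claimed to reproduce the statsmodels output exactly.

```python
import numpy as np

def pacf_ols(series, nlags):
    """Regression-based PACF sketch (hypothetical helper).

    The lag-k value is the coefficient on y_{t-k} when y_t is regressed
    on an intercept and y_{t-1}, ..., y_{t-k}.
    """
    series = np.asarray(series, dtype=float)
    n = len(series)
    out = [1.0]  # lag 0 is 1 by convention
    for k in range(1, nlags + 1):
        # Column j holds the series lagged by j + 1 steps
        lags = np.column_stack([series[k - j - 1 : n - j - 1] for j in range(k)])
        design = np.column_stack([np.ones(n - k), lags])
        coef, *_ = np.linalg.lstsq(design, series[k:], rcond=None)
        out.append(coef[-1])  # coefficient on the longest lag
    return np.array(out)

# Simulated AR(1) series with coefficient 0.7 (illustrative data only)
rng = np.random.default_rng(0)
y_sim = np.zeros(1000)
for t in range(1, len(y_sim)):
    y_sim[t] = 0.7 * y_sim[t - 1] + rng.uniform(-1, 1)

values = pacf_ols(y_sim, nlags=5)
print(np.round(values, 2))
```

For an AR(1) process the value at lag 1 should sit near the autoregressive coefficient and the later lags should be close to zero. A similar pattern in the COE plot would be consistent with using a short lag, such as the lag = 1 chosen in the training code of this post.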

The TimeSeriesNnet class

import logging

import numpy as np
from keras.layers import Activation, Dense
from keras.models import Sequential
from keras.optimizers import SGD
from sklearn.preprocessing import StandardScaler

logging.basicConfig(format='%(levelname)s:%(message)s', level=logging.INFO)


class TimeSeriesNnet(object):
    def __init__(self, hidden_layers=[20, 15, 5], activation_functions=['relu', 'relu', 'relu'],
                 optimizer=None, loss='mean_absolute_error'):
        self.hidden_layers = hidden_layers
        self.activation_functions = activation_functions
        # Create the optimizer per instance instead of sharing one default object across models
        self.optimizer = optimizer if optimizer is not None else SGD()
        self.loss = loss
        if len(self.hidden_layers) != len(self.activation_functions):
            raise Exception("hidden_layers size must match activation_functions size")

    def fit(self, timeseries, lag=7, epochs=10000, verbose=0):
        self.timeseries = np.array(timeseries, dtype="float64")
        self.lag = lag
        self.n = len(timeseries)
        if self.lag >= self.n:
            raise ValueError("Lag is higher than length of the timeseries")
        self.X = np.zeros((self.n - self.lag, self.lag), dtype="float64")
        # Apply log transformation for variance stationarity.
        # Note: this needs strictly positive values; a value of 0 maps to -inf.
        self.y = np.log(self.timeseries[self.lag:])
        self.epochs = epochs
        self.scaler = StandardScaler()
        self.verbose = verbose

        logging.info("Building regressor matrix")
        # Building X matrix: row i holds the `lag` values that precede target i
        for i in range(0, self.n - lag):
            self.X[i, :] = self.timeseries[i:i + lag]

        logging.info("Scaling data")
        self.scaler.fit(self.X)
        self.X = self.scaler.transform(self.X)

        logging.info("Checking network consistency")
        # Neural net architecture
        self.nn = Sequential()
        self.nn.add(Dense(self.hidden_layers[0], input_shape=(self.X.shape[1],)))
        self.nn.add(Activation(self.activation_functions[0]))

        for layer_size, activation_function in zip(self.hidden_layers[1:], self.activation_functions[1:]):
            self.nn.add(Dense(layer_size))
            self.nn.add(Activation(activation_function))

        # Add final node
        self.nn.add(Dense(1))
        self.nn.add(Activation('linear'))
        self.nn.compile(loss=self.loss, optimizer=self.optimizer)

        logging.info("Training neural net")
        # Train neural net
        self.nn.fit(self.X, self.y, epochs=self.epochs, verbose=self.verbose)

    def predict_ahead(self, n_ahead=1):
        # Store predictions and predict iteratively:
        # each forecast is appended to the series and feeds the next step
        self.predictions = np.zeros(n_ahead)

        for i in range(n_ahead):
            self.current_x = self.timeseries[-self.lag:]
            self.current_x = self.current_x.reshape((1, self.lag))
            self.current_x = self.scaler.transform(self.current_x)
            self.next_pred = self.nn.predict(self.current_x)
            # The network was trained on log targets, so exponentiate its output
            self.predictions[i] = np.exp(self.next_pred[0, 0])
            self.timeseries = np.concatenate((self.timeseries, np.exp(self.next_pred[0, :])), axis=0)

        return self.predictions
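The core data preparation inside fit is the lagged regressor matrix: row i of X holds the lag values immediately preceding target i. A small stand-alone sketch of just that step follows; make_lag_matrix is a hypothetical helper written for this illustration, and it deliberately leaves out the log transform of the targets and the standardisation of X that the class also applies.

```python
import numpy as np

def make_lag_matrix(series, lag):
    """Build (X, y) so that X[i] = series[i : i + lag] and y[i] = series[i + lag].

    Hypothetical helper; mirrors only the windowing step of TimeSeriesNnet.fit.
    """
    series = np.asarray(series, dtype=float)
    n = len(series)
    if lag >= n:
        raise ValueError("Lag is higher than length of the timeseries")
    X = np.array([series[i:i + lag] for i in range(n - lag)])
    y = series[lag:]
    return X, y

X_demo, y_demo = make_lag_matrix([10, 20, 30, 40, 50], lag=2)
print(X_demo)  # [[10. 20.] [20. 30.] [30. 40.]]
print(y_demo)  # [30. 40. 50.]
```

With lag = 1, as used in the training loop of this post, X reduces to a single column containing the previous observation.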

Training

count = 0
ahead = 12
pred = []

# Walk forward over the last 12 observations: refit on everything before x[end], then forecast x[end]
while count < ahead:
    end = len(x) - ahead + count
    np.random.seed(2016)
    model = TimeSeriesNnet(hidden_layers=[7, 3], activation_functions=["tanh", "tanh"])
    model.fit(x[0:end], lag=1, epochs=100)
    out = model.predict_ahead(n_ahead=1)
    # x[end] is the actual value that this forecast is compared against
    print("Obs: ", count + 1, "x =", round(x[end], 4), "prediction = ", round(float(out[0]), 4))
    pred.append(out)
    count = count + 1

# Undo the min-max scaling, then the log transform
pred = np.exp(scaler.inverse_transform(pred))
target = np.exp(scaler.inverse_transform(x[-ahead:].reshape(-1, 1)))

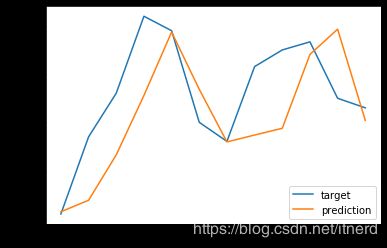

plt.plot(target)
plt.plot(pred)
plt.legend(['target', 'prediction'])
plt.show()
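The loop above is a walk-forward (rolling-origin) evaluation: for each of the last 12 points, the model sees only the data before that point and forecasts one step ahead. To show the index bookkeeping without the cost of training a network, the sketch below swaps in a naive stand-in model that simply repeats the last training value. The function name and the toy series are assumptions for illustration; this is not the post's neural network.

```python
import numpy as np

def walk_forward_naive(series, ahead):
    """One-step-ahead walk-forward forecasts over the last `ahead` points.

    The 'model' is a stand-in: it repeats the last value of the training window.
    """
    series = np.asarray(series, dtype=float)
    preds = []
    for count in range(ahead):
        end = len(series) - ahead + count  # series[end] is the value to forecast
        train = series[:end]               # everything strictly before it
        preds.append(train[-1])            # naive persistence forecast
    return np.array(preds), series[-ahead:]

preds_demo, target_demo = walk_forward_naive([1, 2, 4, 7, 11, 16], ahead=3)
mae_demo = np.mean(np.abs(preds_demo - target_demo))

print(preds_demo)   # [ 4.  7. 11.]
print(target_demo)  # [ 7. 11. 16.]
print(mae_demo)     # 4.0
```

A persistence forecast like this also makes a useful baseline: a neural network with lag = 1 is only worth its training time if it beats the error of simply repeating the previous value.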

2. Forecasting from history plus external inputs

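The input is x and the output is y.

Here the input contains not only the value of y at the previous time step (the `COE$_1` column) but also the values of three other series (`#Bids`, `Quota` and `Open?`).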

loc = "COE.csv"
temp = pd.read_csv(loc)

# Drop the saved index column and DATE
data = temp.drop(temp.columns[[0, 1]], axis=1)
y = data['COE$']

# Drop COE$ (the target) and Open? (a 0/1 flag, so it is not log-transformed)
x = data.drop(data.columns[[0, 4]], axis=1)
x = x.apply(np.log)
x = pd.concat([x, data['Open?']], axis=1)
print(x.head())
'''
     COE$_1     #Bids     Quota  Open?
0  8.955448  6.486161  6.156979      0
1  8.909235  7.287561  6.148468      0
2  9.314700  6.450470  6.156979      0
3  8.517593  6.858565  6.236370      0
4  8.061487  6.823286  6.154858      0
'''

from sklearn import preprocessing

# Scale inputs and target separately so the target can be inverted on its own
scaler_x = preprocessing.MinMaxScaler(feature_range=(0, 1))
x = np.array(x).reshape((len(x), 4))
x = scaler_x.fit_transform(x)

scaler_y = preprocessing.MinMaxScaler(feature_range=(0, 1))
y = np.array(y).reshape((len(y), 1))
y = np.log(y)
y = scaler_y.fit_transform(y)

# pyneurgen expects plain Python lists
y = y.tolist()
x = x.tolist()

Using the pyneurgen library

import random

from pyneurgen.neuralnet import NeuralNet

random.seed(2019)

nnet = NeuralNet()
nnet.init_layers(4, [7, 3], 1)  # 4 inputs, hidden layers of 7 and 3 nodes, 1 output
nnet.randomize_network()
nnet.set_learnrate(.05)

nnet.set_all_inputs(x)
nnet.set_all_targets(y)

# Train on the first 95% of the observations and test on the rest
length = len(x)
learn_end_point = int(length * 0.95)
nnet.set_learn_range(0, learn_end_point)
nnet.set_test_range(learn_end_point + 1, length - 1)

nnet.layers[1].set_activation_type('tanh')
nnet.layers[2].set_activation_type('tanh')

nnet.learn(epochs=100, show_epoch_results=True, random_testing=False)

mse = nnet.test()
print("test MSE =", np.round(mse, 6))

# Each entry pairs a target with the network's output for that test observation
result = np.round(nnet.test_targets_activations, 4)

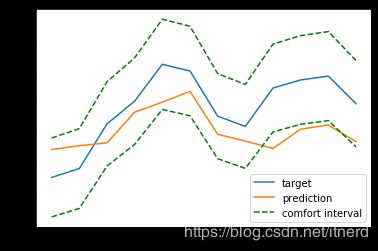

plt.plot(result[:, 0])  # target
plt.plot(result[:, 1])  # prediction
# Band of +/-5% around the target
plt.plot(result[:, 0] * 1.05, '--g', result[:, 0] * 0.95, '--g')
plt.legend(['target', 'prediction', 'comfort interval'])
plt.show()
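The dashed band in the plot is drawn at 95% and 105% of the target. One way to turn that picture into a number is to count how many predictions fall inside the band. The sketch below does this with NumPy; the helper name within_band and the three target/prediction pairs are made up for illustration and are not results from the model above.

```python
import numpy as np

def within_band(target, prediction, tol=0.05):
    """Return a boolean array: is each prediction within +/- tol of its target?"""
    target = np.asarray(target, dtype=float)
    prediction = np.asarray(prediction, dtype=float)
    edge_a = target * (1 - tol)
    edge_b = target * (1 + tol)
    # min/max keeps the band ordered even if a target were negative
    lower = np.minimum(edge_a, edge_b)
    upper = np.maximum(edge_a, edge_b)
    return (prediction >= lower) & (prediction <= upper)

# Made-up values on the scaled [0, 1] axis, for illustration only
target_demo = [0.50, 0.60, 0.80]
prediction_demo = [0.51, 0.66, 0.79]

inside = within_band(target_demo, prediction_demo)
print(inside)         # [ True False  True]
print(inside.mean())  # share of predictions inside the band
```

Note that the band here lives on the scaled log axis used for training, so ±5% of the scaled value does not correspond to ±5% of the COE price itself.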