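Several Ways to Implement a Two-Layer Neural Network (PyTorch Basics 1)

Table of Contents

- NumPy implementation

- PyTorch implementation

- 1. Same overall structure, only switched to torch syntax

- 2. Using PyTorch's autograd

- 3. Building the model with torch.nn

- 4. Updating parameters automatically with torch.optim to minimize the loss

- 5. Defining a custom model with nn.Module

- 6. Summary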

NumPy implementation

Written entirely in NumPy: a linear layer, then a ReLU activation, then a second linear layer.
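For reference, the forward pass and the gradients that the backward pass in the code below computes by hand can be written out as follows. The symbols $W_1$, $W_2$, $h$, $\hat{y}$ and $L$ are notation introduced here for w1, w2, h, y_pred and loss; there are no bias terms, matching the code.

```latex
\begin{aligned}
h &= x W_1, \qquad h_{\mathrm{relu}} = \max(h, 0), \qquad \hat{y} = h_{\mathrm{relu}} W_2, \qquad L = \sum_{n,k} (\hat{y}_{nk} - y_{nk})^2 \\
\frac{\partial L}{\partial \hat{y}} &= 2(\hat{y} - y), \qquad
\frac{\partial L}{\partial W_2} = h_{\mathrm{relu}}^{\top} \frac{\partial L}{\partial \hat{y}}, \qquad
\frac{\partial L}{\partial h_{\mathrm{relu}}} = \frac{\partial L}{\partial \hat{y}} W_2^{\top} \\
\frac{\partial L}{\partial h} &= \frac{\partial L}{\partial h_{\mathrm{relu}}} \odot \mathbf{1}[h \ge 0], \qquad
\frac{\partial L}{\partial W_1} = x^{\top} \frac{\partial L}{\partial h}
\end{aligned}
```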

# Two-layer neural network in NumPy
import numpy as np

# N: batch size, D_in: input dim, H: hidden dim, D_out: output dim
N, D_in, H, D_out = 64, 1000, 100, 10

# random inputs and targets
x = np.random.randn(N, D_in)
y = np.random.randn(N, D_out)

# w1 = np.random.rand(D_in, H)
# w2 = np.random.rand(H, D_out)
# initializing with rand (uniform on [0, 1)) works very poorly here
w1 = np.random.randn(D_in, H)
w2 = np.random.randn(H, D_out)

learning_rate = 1e-6
for i in range(500):
    # forward pass
    h = x.dot(w1)
    h_relu = np.maximum(h, 0)
    y_pred = h_relu.dot(w2)

    # compute loss (sum of squared errors)
    loss = np.square(y_pred - y).sum()
    print(i, loss)

    # backward pass
    y_pred_grad = 2.0 * (y_pred - y)
    w2_grad = h_relu.T.dot(y_pred_grad)
    h_relu_grad = y_pred_grad.dot(w2.T)
    h_grad = h_relu_grad.copy()
    h_grad[h < 0] = 0  # ReLU passes no gradient where h < 0
    w1_grad = x.T.dot(h_grad)

    # update weights
    w1 -= learning_rate * w1_grad
    w2 -= learning_rate * w2_grad

PyTorch implementation

1. Same overall structure, only switched to torch syntax
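The substitutions used in this section (.dot to .mm, np.maximum to torch.clamp, .T to .t(), .copy() to .clone()) can be checked side by side. This is a small sketch with arbitrary shapes picked only for illustration; it is not part of the original course code.

```python
import numpy as np
import torch

# Small, arbitrary shapes chosen only for this illustration
a_np = np.random.randn(4, 3)
b_np = np.random.randn(3, 2)
a_t = torch.from_numpy(a_np)  # shares memory with a_np
b_t = torch.from_numpy(b_np)

# matrix product: ndarray.dot  <->  Tensor.mm
prod_np = a_np.dot(b_np)
prod_t = a_t.mm(b_t)

# ReLU: np.maximum(h, 0)  <->  torch.clamp(h, min=0)
relu_np = np.maximum(a_np, 0)
relu_t = torch.clamp(a_t, min=0)

# transpose: ndarray.T  <->  Tensor.t()
trans_np = a_np.T
trans_t = a_t.t()

# independent copy: ndarray.copy()  <->  Tensor.clone()
copy_np = a_np.copy()
copy_t = a_t.clone()

print(np.allclose(prod_np, prod_t.numpy()))
print(np.allclose(relu_np, relu_t.numpy()))
print(np.allclose(trans_np, trans_t.numpy()))
print(np.allclose(copy_np, copy_t.numpy()))
```

Each pair should agree, so the torch version of the network is a line-by-line port of the NumPy one.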

# Two-layer neural network in torch
import torch

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

# w1 = np.random.rand(D_in, H)
# w2 = np.random.rand(H, D_out)
w1 = torch.randn(D_in, H)
w2 = torch.randn(H, D_out)

learning_rate = 1e-6
for i in range(500):
    # forward pass
    h = x.mm(w1)  # .mm replaces .dot
    h_relu = torch.clamp(h, min=0)  # clamp(min=0) replaces np.maximum(h, 0)
    y_pred = h_relu.mm(w2)

    # compute loss
    loss = (y_pred - y).pow(2).sum()
    # loss is a single-element tensor; item() extracts the Python number
    print(i, loss.item())

    # backward pass
    y_pred_grad = 2.0 * (y_pred - y)
    w2_grad = h_relu.t().mm(y_pred_grad)  # torch transposes with .t()
    h_relu_grad = y_pred_grad.mm(w2.t())
    h_grad = h_relu_grad.clone()  # torch copies with .clone()
    h_grad[h < 0] = 0
    w1_grad = x.t().mm(h_grad)

    # update weights
    w1 -= learning_rate * w1_grad
    w2 -= learning_rate * w2_grad

2. Using PyTorch's autograd

Two things change:

- set requires_grad=True on the weights

- call backward() on the loss
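The two changes above can be tried on a small problem before running the full example. The sketch below uses arbitrary small sizes and a fixed seed (both assumptions made for illustration). It compares the gradients autograd stores in .grad with the hand-derived formulas from the manual version, then shows that a second backward() adds to .grad unless it is zeroed.

```python
import torch

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Small, arbitrary sizes for illustration
N, D_in, H, D_out = 4, 6, 5, 3
x = torch.randn(N, D_in)
y = torch.randn(N, D_out)
w1 = torch.randn(D_in, H, requires_grad=True)   # change 1: track gradients
w2 = torch.randn(H, D_out, requires_grad=True)

def compute_loss():
    h = x.mm(w1)
    return h, (torch.clamp(h, min=0).mm(w2) - y).pow(2).sum()

h, loss = compute_loss()
loss.backward()                                  # change 2: autograd fills .grad

# Hand-derived gradients, same formulas as the manual version
with torch.no_grad():
    h_relu = torch.clamp(h, min=0)
    y_pred_grad = 2.0 * (h_relu.mm(w2) - y)
    w2_grad = h_relu.t().mm(y_pred_grad)
    h_grad = y_pred_grad.mm(w2.t())
    h_grad[h < 0] = 0
    w1_grad = x.t().mm(h_grad)

matches_w1 = torch.allclose(w1.grad, w1_grad, rtol=1e-4, atol=1e-5)
matches_w2 = torch.allclose(w2.grad, w2_grad, rtol=1e-4, atol=1e-5)
print(matches_w1, matches_w2)

# A second backward() without zeroing adds to the stored gradients
first_grad = w1.grad.clone()
_, loss = compute_loss()
loss.backward()
accumulated = torch.allclose(w1.grad, 2 * first_grad, rtol=1e-4, atol=1e-5)
print(accumulated)

# Reset in place so the next iteration starts from zero
w1.grad.zero_()
w2.grad.zero_()
```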

About the clamp function used below: torch.clamp(h, min=0) replaces every value below 0 with 0, which is exactly ReLU.

# Using PyTorch's autograd
import torch

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

w1 = torch.randn(D_in, H, requires_grad=True)
w2 = torch.randn(H, D_out, requires_grad=True)

learning_rate = 1e-6
for i in range(500):
    # forward pass
    h = x.mm(w1)  # .mm replaces .dot
    h_relu = torch.clamp(h, min=0)  # clamp(min=0) replaces np.maximum(h, 0)
    y_pred = h_relu.mm(w2)

    # compute loss
    loss = (y_pred - y).pow(2).sum()
    # loss is a single-element tensor; item() extracts the Python number
    print(i, loss.item())

    # backward pass
    # no manual derivation needed, just call backward()
    loss.backward()

    # update weights
    # no_grad is required here: the in-place update of a tensor that
    # requires grad must not be tracked by autograd (it also saves memory)
    with torch.no_grad():
        w1 -= learning_rate * w1.grad
        w2 -= learning_rate * w2.grad
        # zero the gradients after use, otherwise they accumulate across iterations
        # zero_() is an in-place op that resets the gradients of w1 and w2
        w1.grad.zero_()
        w2.grad.zero_()

3. Building the model with torch.nn

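The model defines each layer. In the example below, results are better after re-initializing the weights explicitly (a bit of black magic).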
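One way to see what the re-initialization changes is to compare the weights nn.Linear creates by default with the weights after nn.init.normal_. The sketch below only inspects one layer; the observation that the default weights stay within 1/sqrt(in_features) reflects current PyTorch defaults and is not something the course states.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)
D_in, H = 1000, 100
layer = nn.Linear(D_in, H)

# Default nn.Linear weights are drawn uniformly from a small range around 0
default_max = layer.weight.abs().max().item()
default_std = layer.weight.std().item()

# Re-initialize from a normal distribution with mean 0 and std 1
nn.init.normal_(layer.weight, mean=0.0, std=1.0)
normal_std = layer.weight.std().item()

print(default_max, 1 / math.sqrt(D_in))
print(default_std, normal_std)
```

The re-initialized weights are far larger than the defaults, so the two setups start from very different loss and gradient scales under the same learning rate.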

# model
import torch
import torch.nn as nn

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

model = nn.Sequential(
    nn.Linear(D_in, H),
    nn.ReLU(),
    nn.Linear(H, D_out)
)

# initialize the model weights w1 and w2; mean and std set the distribution and can be tuned
nn.init.normal_(model[0].weight, mean=0.0, std=1.0)
nn.init.normal_(model[2].weight, mean=0.0, std=1.0)

learning_rate = 1e-6
for i in range(500):
    # forward pass
    y_pred = model(x)

    # compute loss
    loss = (y_pred - y).pow(2).sum()
    # loss is a single-element tensor; item() extracts the Python number
    print(i, loss.item())

    # backward pass
    # no manual derivation needed, just call backward()
    loss.backward()

    # update weights
    # no_grad is required: the in-place update must not be tracked by autograd
    with torch.no_grad():
        # update every parameter
        for para in model.parameters():
            para -= learning_rate * para.grad

    # zero the gradients after use, otherwise they accumulate across iterations
    # zero_grad() clears the gradients of all model parameters
    model.zero_grad()
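To see what the loop over model.parameters() actually updates, the parameters can be listed by name. A minimal sketch, using the same layer sizes as above:

```python
import torch.nn as nn

D_in, H, D_out = 1000, 100, 10
model = nn.Sequential(nn.Linear(D_in, H), nn.ReLU(), nn.Linear(H, D_out))

# Each nn.Linear contributes a weight and a bias; nn.ReLU has no parameters
shapes = {name: tuple(p.shape) for name, p in model.named_parameters()}
for name, shape in shapes.items():
    print(name, shape)
```

Note that nn.Linear stores its weight as (out_features, in_features) and adds a bias by default, so this model has two more parameter tensors than the hand-written w1 and w2 versions.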

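4. Updating parameters automatically with torch.optim to minimize the loss

Building on the model above, pass all of the model's parameters to an optimizer from torch.optim and set the learning rate.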

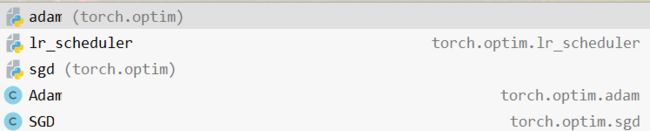

Adam, SGD, and other optimizers are available.
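For plain SGD (no momentum or weight decay), optimizer.step() should do the same thing as the manual update loop from section 3. The sketch below checks this on a tiny model; the sizes and learning rate are arbitrary values chosen for illustration.

```python
import copy
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(8, 5)
y = torch.randn(8, 2)
lr = 1e-3  # arbitrary value for this illustration

model_a = nn.Sequential(nn.Linear(5, 4), nn.ReLU(), nn.Linear(4, 2))
model_b = copy.deepcopy(model_a)  # identical starting weights
loss_fn = nn.MSELoss(reduction='sum')

# model_a: manual update, as in section 3
loss_fn(model_a(x), y).backward()
with torch.no_grad():
    for p in model_a.parameters():
        p -= lr * p.grad
model_a.zero_grad()

# model_b: the same step through torch.optim.SGD
optimizer = torch.optim.SGD(model_b.parameters(), lr=lr)
loss_fn(model_b(x), y).backward()
optimizer.step()
optimizer.zero_grad()

same = all(
    torch.allclose(pa, pb)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
print(same)
```

Other optimizers such as Adam keep extra per-parameter state, so they would not match the manual update this way.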

SGD is usually run with a learning rate larger than 1e-6. The code below still ends with a decent result at 1e-6, and I do not know why.
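One plausible reason (an assumption, not something verified in the course) is that the gradients here are very large: the loss is summed rather than averaged, and the weights are re-initialized with std 1.0 over 1000 inputs. The sketch below compares the gradient norm at initialization with and without the re-initialization; grad_norm is a helper name introduced only for this example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, D_in, H, D_out = 64, 1000, 100, 10
x = torch.randn(N, D_in)
y = torch.randn(N, D_out)
loss_fn = nn.MSELoss(reduction='sum')

def grad_norm(reinit):
    """Norm of the loss gradient over all parameters at initialization."""
    model = nn.Sequential(nn.Linear(D_in, H), nn.ReLU(), nn.Linear(H, D_out))
    if reinit:
        nn.init.normal_(model[0].weight, mean=0.0, std=1.0)
        nn.init.normal_(model[2].weight, mean=0.0, std=1.0)
    loss_fn(model(x), y).backward()
    squared = sum(p.grad.pow(2).sum() for p in model.parameters())
    return squared.sqrt().item()

norm_default = grad_norm(reinit=False)
norm_reinit = grad_norm(reinit=True)
print(norm_default, norm_reinit)
```

If the re-initialized model's gradient norm is much larger, a tiny learning rate still produces noticeable parameter updates, which would be consistent with the behaviour described above.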

# model
import torch
import torch.nn as nn

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

model = nn.Sequential(
    nn.Linear(D_in, H),
    nn.ReLU(),
    nn.Linear(H, D_out)
)

# initialize the model weights w1 and w2; mean and std set the distribution and can be tuned
nn.init.normal_(model[0].weight, mean=0.0, std=1.0)
nn.init.normal_(model[2].weight, mean=0.0, std=1.0)

optimizer = torch.optim.SGD(
    params=model.parameters(),
    lr=1e-6
)

loss_fn = nn.MSELoss(reduction='sum')

for i in range(500):
    # forward pass
    y_pred = model(x)

    # compute loss
    loss = loss_fn(y_pred, y)
    # loss is a single-element tensor; item() extracts the Python number
    print(i, loss.item())

    # backward pass
    # no manual derivation needed, just call backward()
    loss.backward()

    # update weights
    optimizer.step()  # step() updates the parameters
    optimizer.zero_grad()  # zero_grad() clears the gradients

5. Defining a custom model with nn.Module

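nn.Sequential in section 3 makes it easy to define a two-layer network, but it is not very flexible, so here we subclass nn.Module instead.

Two methods are needed:

- __init__: defines the layers of the network

- forward: defines the forward pass

The loss computation and the backward pass stay the same.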
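The two methods can be seen in isolation on a deliberately tiny module. TinyNet and its sizes are hypothetical, chosen only to show what nn.Module does with __init__ and forward.

```python
import torch
import torch.nn as nn

class TinyNet(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, d_in, d_out):
        super().__init__()
        # Layers assigned as attributes are registered as parameters automatically
        self.linear = nn.Linear(d_in, d_out)

    def forward(self, x):
        # Arbitrary Python code is allowed here, which is where the flexibility comes from
        out = self.linear(x)
        return torch.clamp(out, min=0)

model = TinyNet(3, 2)
x = torch.randn(5, 3)
out = model(x)  # calling the model runs forward()
param_names = [name for name, _ in model.named_parameters()]
print(out.shape, param_names)
```

Because the layer is registered automatically, model.parameters() can be handed to an optimizer without listing the weights by hand.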

# module
import torch
import torch.nn as nn

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

class TwoLayerNet(nn.Module):
    # __init__ initializes the base class and defines the two linear layers
    def __init__(self, D_in, H, D_out):
        super(TwoLayerNet, self).__init__()  # initialize the nn.Module base class
        self.linear1 = nn.Linear(D_in, H)
        self.linear2 = nn.Linear(H, D_out)

    # forward defines the forward pass
    def forward(self, x):
        return self.linear2(torch.clamp(self.linear1(x), min=0))

model = TwoLayerNet(D_in, H, D_out)

# optimizer = torch.optim.Adam(
#     params=model.parameters(),
#     lr=1e-3
# )
optimizer = torch.optim.SGD(
    params=model.parameters(),
    lr=1e-3
)

loss_fn = nn.MSELoss(reduction='sum')

for i in range(500):
    # forward pass
    y_pred = model(x)

    # compute loss
    loss = loss_fn(y_pred, y)
    # loss is a single-element tensor; item() extracts the Python number
    print(i, loss.item())

    # backward pass
    # no manual derivation needed, just call backward()
    loss.backward()

    # update weights
    optimizer.step()  # step() updates the parameters
    optimizer.zero_grad()  # zero_grad() clears the gradients

6. Summary

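These are my takeaways from the first lesson of a PyTorch beginner course on Bilibili. I found the course really well taught, so if you are interested, go check it out.

The structure to settle on is the one in section *5.*, namely:

- define the model with a custom nn.Module

- pass the model's parameters to an optimizer and let step() update them automatically

- this of course assumes backward() has already been called on the loss to compute the gradients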
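The three points above can be condensed into one reusable loop. This is a sketch under stated assumptions: train is a hypothetical helper name, the data is random, and a small nn.Sequential stands in for a custom nn.Module for brevity (any nn.Module can be passed in).

```python
import torch
import torch.nn as nn

def train(model, x, y, optimizer, loss_fn, steps):
    """Run `steps` full-batch updates and return the loss at each step."""
    losses = []
    for _ in range(steps):
        y_pred = model(x)            # forward pass
        loss = loss_fn(y_pred, y)    # compute loss
        optimizer.zero_grad()        # clear old gradients
        loss.backward()              # backward pass
        optimizer.step()             # update parameters
        losses.append(loss.item())
    return losses

torch.manual_seed(0)
x = torch.randn(16, 8)
y = torch.randn(16, 2)
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 2))
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)
losses = train(model, x, y, optimizer, nn.MSELoss(reduction='sum'), steps=50)
print(losses[0], losses[-1])
```

Here zero_grad() is called before backward() rather than after step() as in the sections above; both orders clear the gradients once per iteration, so the updates are the same.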

end