Computing precision, recall, and F1 score with sklearn

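Precision: the fraction of samples predicted positive that are actually positive, TP / (TP + FP)

Recall: the fraction of actually positive samples that are correctly predicted positive, TP / (TP + FN)

F1-score: the harmonic mean of precision and recall, 2 * precision * recall / (precision + recall)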
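As a quick check of the three formulas, here is a minimal sketch in plain Python. The helper name `prf_from_counts` and the sample counts are hypothetical, chosen only for illustration. Returning 0.0 when a denominator is zero is one common convention, not the only possible one.

```python
def prf_from_counts(tp, fp, fn):
    """Return (precision, recall, f1) from raw counts.

    Hypothetical helper for illustration; a zero denominator yields 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Made-up counts: 8 true positives, 2 false positives, 4 false negatives
p, r, f1 = prf_from_counts(tp=8, fp=2, fn=4)
print(round(p, 4), round(r, 4), round(f1, 4))
```

Because F1 is a harmonic mean, it sits closer to the smaller of the two values than the arithmetic mean would.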

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

from sklearn.metrics import precision_recall_fscore_support

from sklearn.metrics import accuracy_score

actual = [0,0,0,0,1,1,0,3,3]

predicted = [0,0,0,0,1,1,2,3,3]

# Compute the overall accuracy

acc = accuracy_score(actual, predicted)

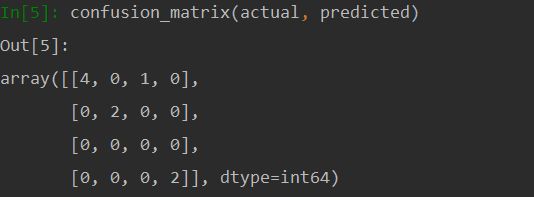
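Accuracy is simply the share of positions where the predicted label equals the actual label. The sketch below recomputes it by hand, without sklearn, as a sanity check on `accuracy_score`. The variable names `correct` and `manual_acc` are introduced here for illustration only.

```python
actual = [0, 0, 0, 0, 1, 1, 0, 3, 3]
predicted = [0, 0, 0, 0, 1, 1, 2, 3, 3]

# Count positions where the predicted label matches the actual label
correct = sum(a == p for a, p in zip(actual, predicted))
manual_acc = correct / len(actual)
print(correct, len(actual), round(manual_acc, 4))
```

Only the seventh sample (actual 0, predicted 2) is wrong, so eight of the nine labels match.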

# Compute the confusion matrix

confusion_matrix(actual, predicted)

Result:

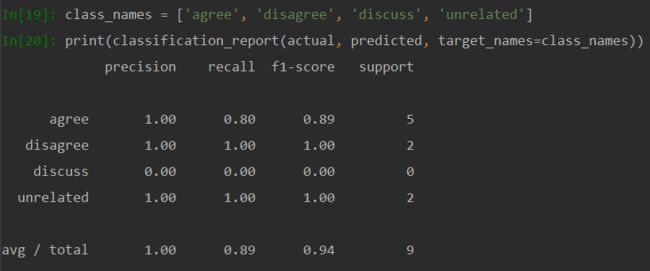

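Per-class precision, recall, and F1-score can be computed from the confusion matrix (rows are actual classes, columns are predicted classes):

Example, class 0: precision = 4 / (4+0+0+0) = 1; recall = 4 / (4+0+1+0) = 0.8; F1-score = 2 * 1 * 0.8 / (1 + 0.8) ≈ 0.89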
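The same calculation can be repeated for every class. The sketch below rebuilds the confusion matrix in plain Python (rows are actual classes, columns are predicted classes) and derives the per-class values from its row and column sums. The names `cm`, `index`, and `per_class` are illustrative. Note that label 2 never occurs in `actual`, so its recall is 0/0; this sketch assumes 0.0 in that case.

```python
actual = [0, 0, 0, 0, 1, 1, 0, 3, 3]
predicted = [0, 0, 0, 0, 1, 1, 2, 3, 3]

# Every label that appears in either list, in sorted order
labels = sorted(set(actual) | set(predicted))
index = {label: i for i, label in enumerate(labels)}

# Build the confusion matrix by hand: rows = actual, columns = predicted
n = len(labels)
cm = [[0] * n for _ in range(n)]
for a, p in zip(actual, predicted):
    cm[index[a]][index[p]] += 1

per_class = {}
for label in labels:
    k = index[label]
    tp = cm[k][k]
    predicted_as_k = sum(cm[i][k] for i in range(n))  # column sum = TP + FP
    actually_k = sum(cm[k])                           # row sum = TP + FN (support)
    precision = tp / predicted_as_k if predicted_as_k else 0.0
    recall = tp / actually_k if actually_k else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    per_class[label] = (precision, recall, f1, actually_k)
    print(label, round(precision, 2), round(recall, 2), round(f1, 2), actually_k)
```

Each tuple in `per_class` holds precision, recall, F1-score, and support for one label.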

# Compute precision, recall, F1-score, and support

class_names = ['agree', 'disagree', 'discuss', 'unrelated']

print(classification_report(actual, predicted, target_names=class_names))

# Another way to compute precision, recall, F1-score, and support

pre, rec, f1, sup = precision_recall_fscore_support(actual, predicted)

print("precision:", pre, "\nrecall:", rec, "\nf1-score:", f1, "\nsupport:", sup)
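The arrays returned by `precision_recall_fscore_support` hold one value per class. The `macro avg` and `weighted avg` rows printed by `classification_report` summarize such per-class values, and the sketch below shows how those two averages are formed. The per-class numbers are worked out by hand for the example data rather than taken from sklearn output, and the helper names `macro_avg` and `weighted_avg` are hypothetical. Class 2 has support 0, so its recall is undefined and assumed to be 0 here.

```python
# Per-class values for the example data, worked out by hand
# (assumption: the undefined recall of class 2 is taken as 0)
pre = [1.0, 1.0, 0.0, 1.0]
rec = [0.8, 1.0, 0.0, 1.0]
sup = [5, 2, 0, 2]


def macro_avg(values):
    """Unweighted mean over classes: every class counts equally."""
    return sum(values) / len(values)


def weighted_avg(values, support):
    """Mean over classes, weighted by the number of true samples per class."""
    return sum(v * s for v, s in zip(values, support)) / sum(support)


print("macro precision:", round(macro_avg(pre), 4))
print("macro recall:", round(macro_avg(rec), 4))
print("weighted precision:", round(weighted_avg(pre, sup), 4))
print("weighted recall:", round(weighted_avg(rec, sup), 4))
```

The macro average is pulled down by class 2, which has no true samples, while the weighted average ignores it because its weight is zero. In sklearn itself, passing `average='macro'` or `average='weighted'` to `precision_recall_fscore_support` returns averaged values instead of per-class arrays.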