A Small-Scale ELK Search Engine, Explained in Detail

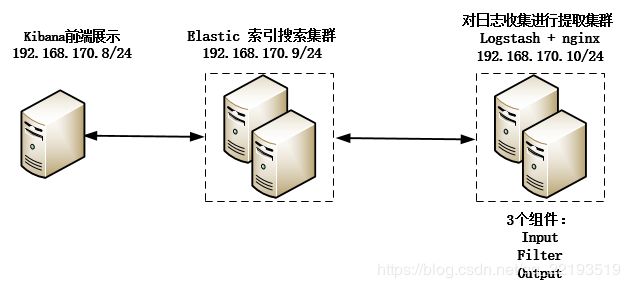

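Lab environment

192.168.170.8 node1 Kibana and HAProxy

192.168.170.9 node2 Elasticsearch

192.168.170.10 node3 Logstash and nginx

Topology diagram

Preparation:

Configure host names on every node so that the three hosts can reach each other by name: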

[root@node1 ~]# vi /etc/hosts

192.168.170.8 node1

192.168.170.9 node2

192.168.170.10 node3
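Adding these lines by hand on three machines is easy to get wrong, and repeating the edit leaves duplicate entries. Below is a minimal sketch of an idempotent alternative, assuming bash and awk. `add_host_entry` is a hypothetical helper, not part of any package.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to FILE unless NAME is already
# listed as a host name on a non-comment line, so re-running it is safe.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Fields 2..NF of a hosts line are host names; comment lines are skipped.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0  # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each node (as root):
# add_host_entry /etc/hosts 192.168.170.8  node1
# add_host_entry /etc/hosts 192.168.170.9  node2
# add_host_entry /etc/hosts 192.168.170.10 node3
```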

Make sure NTP time synchronization is working so that the clocks on all three nodes agree. Also disable SELinux and the firewall.
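The commands for this step are not listed. Here is a sketch for CentOS 7, which is an assumption; it is consistent with the el7 kernel in the Elasticsearch log and the chronyd process in the ss output later on. `disable_selinux_config` is a hypothetical helper that assumes GNU sed. The commands that change the running system are left as comments.

```shell
#!/usr/bin/env bash
# disable_selinux_config FILE
# Hypothetical helper: set SELINUX=disabled in an SELinux config file
# (normally /etc/selinux/config). The change takes effect after a reboot.
# Assumes GNU sed (-i). SELINUXTYPE= lines are not touched.
disable_selinux_config() {
  local file=$1
  if grep -q '^SELINUX=' "$file"; then
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
  else
    echo 'SELINUX=disabled' >> "$file"
  fi
}

# On each node, as root (assumes CentOS 7 with firewalld and chronyd):
# setenforce 0                                   # permissive mode right now
# disable_selinux_config /etc/selinux/config     # persistent after reboot
# systemctl stop firewalld && systemctl disable firewalld
# systemctl start chronyd && systemctl enable chronyd
# chronyc tracking                               # check that the clock is synced
```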

I. Download the latest stable release, which has the most complete feature set. Official download page: https://www.elastic.co/downloads

II. Installing Elasticsearch

1 ELK depends on a JDK, so install the JDK first. Version 1.8 or later is required:

[root@node2 ~]# yum -y install java-1.8.0-openjdk-devel

2 Download and install Elasticsearch:

[root@node2 ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.4.3.rpm

[root@node2 ~]# rpm -ivh elasticsearch-5.4.3.rpm

3 Edit the configuration file. These are the main settings to change:

[root@node2 ~]# vim /etc/elasticsearch/elasticsearch.yml

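cluster.name: myels #cluster name; must be identical on every node of the cluster

path.data: /data/elasticsearch #data directory where the indexed log data is stored

path.logs: /data/elasticsearch/log #directory for Elasticsearch's own logs (startup log, etc.)

network.host: 192.168.170.9 #this host's IP address

node.name: "node2" #node name; must be different on every node

http.port: 9200 #HTTP (REST API) port

node.master: true #eligible to be elected master

node.data: true #whether this node stores data

bootstrap.memory_lock: true #lock the JVM heap in memory so it is not swapped out

#unicast discovery: list the hosts to contact; here two nodes join the ELK cluster

discovery.zen.ping.unicast.hosts: ["192.168.170.8", "192.168.170.9"]

#(number of master-eligible nodes / 2) + 1, rounded down

discovery.zen.minimum_master_nodes: 2

4 Create the data and log directories before starting the service: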

[root@node2 ~]# mkdir -pv /data/elasticsearch/log

[root@node2 ~]# chown -R elasticsearch:elasticsearch /data/

5 Start Elasticsearch and check its status and listening ports:

[root@node2 ~]# systemctl start elasticsearch

[root@node2 ~]# ss -tunlp

[root@node2 ~]# ss -tunlp |grep 9200

tcp LISTEN 0 128 ::ffff:192.168.170.9:9200 :::* users:(("java",pid=9655,fd=175))

tcp LISTEN 0 128 ::ffff:192.168.170.9:9300 :::* users:(("java",pid=9655,fd=129))
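Step 3 sets `discovery.zen.minimum_master_nodes` to 2. That value comes from the rule "total nodes / 2 + 1", where the total counts master-eligible nodes and the division rounds down. Here is a minimal bash sketch of that arithmetic; `min_master_nodes` is a hypothetical name.

```shell
#!/usr/bin/env bash
# min_master_nodes N
# Quorum for N master-eligible nodes: floor(N / 2) + 1.
# Bash arithmetic uses integer division, so the floor is implicit.
min_master_nodes() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

for n in 1 2 3 5; do
  echo "$n master-eligible node(s) -> minimum_master_nodes: $(min_master_nodes "$n")"
done
```

This setup has two master-eligible nodes, so the quorum is 2. If either node goes down, the remaining one cannot elect a master. With three master-eligible nodes the quorum is still 2, and the cluster survives the loss of one node.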

6 After startup, use the following command to check the Elasticsearch log for errors. A final "started" line means it started normally.

[root@node2 ~]# tail -f /data/elasticsearch/log/myels.log

[2019-04-15T08:50:19,204][INFO ][o.e.n.Node ] [node2] stopping ...

[2019-04-15T08:50:19,409][INFO ][o.e.n.Node ] [node2] stopped

[2019-04-15T08:50:19,410][INFO ][o.e.n.Node ] [node2] closing ...

[2019-04-15T08:50:19,425][INFO ][o.e.n.Node ] [node2] closed

[2019-04-15T08:50:24,440][INFO ][o.e.n.Node ] [node2] initializing ...

[2019-04-15T08:50:24,612][INFO ][o.e.e.NodeEnvironment ] [node2] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [25.8gb], net total_space [28.9gb], spins? [unknown], types [rootfs]

[2019-04-15T08:50:24,613][INFO ][o.e.e.NodeEnvironment ] [node2] heap size [1.9gb], compressed ordinary object pointers [true]

[2019-04-15T08:50:24,694][INFO ][o.e.n.Node ] [node2] node name [node2], node ID [xG5TiVBuQSiFPqHlxoCNAQ]

[2019-04-15T08:50:24,695][INFO ][o.e.n.Node ] [node2] version[5.4.3], pid[16728], build[eed30a8/2017-06-22T00:34:03.743Z], OS[Linux/3.10.0-862.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_201/25.201-b09]

[2019-04-15T08:50:24,695][INFO ][o.e.n.Node ] [node2] JVM arguments [-Xms2g, -Xmx2g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+DisableExplicitGC, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -Djdk.io.permissionsUseCanonicalPath=true, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Dlog4j.skipJansi=true, -XX:+HeapDumpOnOutOfMemoryError, -Des.path.home=/usr/share/elasticsearch]

[2019-04-15T08:50:26,571][INFO ][o.e.p.PluginsService ] [node2] loaded module [aggs-matrix-stats]

[2019-04-15T08:50:26,571][INFO ][o.e.p.PluginsService ] [node2] loaded module [ingest-common]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [lang-expression]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [lang-groovy]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [lang-mustache]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [lang-painless]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [percolator]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [reindex]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [transport-netty3]

[2019-04-15T08:50:26,572][INFO ][o.e.p.PluginsService ] [node2] loaded module [transport-netty4]

[2019-04-15T08:50:26,574][INFO ][o.e.p.PluginsService ] [node2] no plugins loaded

[2019-04-15T08:50:28,486][INFO ][o.e.d.DiscoveryModule ] [node2] using discovery type [zen]

[2019-04-15T08:50:29,258][INFO ][o.e.n.Node ] [node2] initialized

[2019-04-15T08:50:29,258][INFO ][o.e.n.Node ] [node2] starting ...

[2019-04-15T08:50:29,494][INFO ][o.e.t.TransportService ] [node2] publish_address {192.168.170.9:9300}, bound_addresses {192.168.170.9:9300}

[2019-04-15T08:50:29,502][INFO ][o.e.b.BootstrapChecks ] [node2] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks

[2019-04-15T08:50:32,938][INFO ][o.e.c.s.ClusterService ] [node2] detected_master {node1}{U9lP7v3aSuSzL_AWgZMc9g}{kuvKCTVnT0uB_pe5-7BR5w}{192.168.170.8}{192.168.170.8:9300}, added {{node1}{U9lP7v3aSuSzL_AWgZMc9g}{kuvKCTVnT0uB_pe5-7BR5w}{192.168.170.8}{192.168.170.8:9300},}, reason: zen-disco-receive(from master [master {node1}{U9lP7v3aSuSzL_AWgZMc9g}{kuvKCTVnT0uB_pe5-7BR5w}{192.168.170.8}{192.168.170.8:9300} committed version [174]])

[2019-04-15T08:50:33,249][INFO ][o.e.h.n.Netty4HttpServerTransport] [node2] publish_address {192.168.170.9:9200}, bound_addresses {192.168.170.9:9200}

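[2019-04-15T08:50:33,255][INFO ][o.e.n.Node ] [node2] started

7 Adjust the JVM heap size to fit the machine, otherwise Elasticsearch may fail to start. The package default is 2 GB (see -Xms2g and -Xmx2g in the JVM arguments logged above). Set -Xms and -Xmx to the same value, and use no more than half of the physical RAM: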

[root@node2 ~]# vi /etc/elasticsearch/jvm.options

-Xms4g

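-Xmx4g

8 Edit the systemd service file so that Elasticsearch is allowed to lock memory. After a restart, it starts normally. This is needed because bootstrap.memory_lock is true and the node binds to a non-loopback address, which means the bootstrap checks are enforced (see the BootstrapChecks line in the log above):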

[root@node2 ~]# vi /usr/lib/systemd/system/elasticsearch.service

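LimitMEMLOCK=infinity #do not limit the amount of locked memory; this setting is off (commented out) by default

Run systemctl daemon-reload so that systemd picks up the change, then restart Elasticsearch. Files under /usr/lib/systemd/system are overwritten by package upgrades, but a drop-in created with systemctl edit elasticsearch survives them.

Installing nginx 1.10.3 (on node3):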

[root@node3 ~]# cd /usr/local/src

[root@node3 src]# yum install gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel

[root@node3 src]# wget http://nginx.org/download/nginx-1.10.3.tar.gz

[root@node3 src]# tar xf nginx-1.10.3.tar.gz

[root@node3 src]# cd nginx-1.10.3

[root@node3 nginx-1.10.3]# ./configure --prefix=/usr/local/nginx

[root@node3 nginx-1.10.3]# make && make install

Configure an nginx test page and test it:

[root@node3 conf.d]# vi vhost.conf

server {

listen 80;

server_name www.node3.com;

root /data/nginx/html;

}

[root@node3 conf.d]# mkdir -pv /data/nginx/html/

[root@node3 conf.d]# cd /data/nginx/html/

[root@node3 html]# vi index.html

Test Page

[root@node3 html]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@node3 html]# systemctl restart nginx


[root@node1 html]# curl http://192.168.170.10

Test Page

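Note: when testing, comment out every directive in the server block of nginx.conf, otherwise the test may not work. A request to the bare IP address does not match server_name www.node3.com, so nginx serves it from the default server block in nginx.conf instead of from this vhost. This trap cost me a long time to figure out.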

Installing Logstash

Logstash depends on a JDK, so download and install both the JDK and Logstash. Then test Logstash with standard input and output:

[root@node3 src]# yum -y install jdk-8u25-linux-x64.rpm logstash-6.5.4.rpm

[root@node3 src]# cd /etc/logstash/conf.d/

[root@node3 conf.d]# ls

[root@node3 conf.d]# vi stdout.conf

input {

stdin {}

}

output {

stdout {

codec => "rubydebug"

}

}

Check the syntax of this stdin/stdout configuration. "Configuration OK" in the output means the syntax is valid:

[root@node3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/stdout.conf -t

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2019-04-13 14:54:26.391 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

[INFO ] 2019-04-13 14:54:26.403 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

[WARN ] 2019-04-13 14:54:27.113 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[INFO ] 2019-04-13 14:54:30.409 [LogStash::Runner] runner - Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

Collect the nginx access log and write it to /tmp/nginx.conf:

[root@node3 conf]# cd /etc/logstash/conf.d/

[root@node3 conf.d]# vi stdout.conf

input {

file {

path => "/usr/local/nginx/logs/access.log"

start_position => "beginning"

}

}

output {

file {

path => "/tmp/nginx.conf"

}

}

Restart Logstash, refresh the nginx home page, and watch /tmp/nginx.conf:

[root@node3 conf.d]# systemctl restart logstash

[root@node3 conf.d]# tail -f /tmp/nginx.conf

{"host":"node3","@timestamp":"2019-04-13T07:37:20.848Z","path":"/usr/local/nginx/logs/access.log","@version":"1","message":"172.17.1.94 - - [13/Apr/2019:15:37:20 +0800] \"GET / HTTP/1.1\" 304 0 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3724.8 Safari/537.36\""}

{"host":"node3","@timestamp":"2019-04-13T07:37:23.861Z","path":"/usr/local/nginx/logs/access.log","@version":"1","message":"172.17.1.94 - - [13/Apr/2019:15:37:23 +0800] \"GET / HTTP/1.1\" 304 0 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3724.8 Safari/537.36\""}

Collect the nginx access log and send it to Elasticsearch:

[root@node3 conf.d]# vi stdout.conf

#collect the nginx access log

input {

file {

path => "/usr/local/nginx/logs/access.log"

start_position => "beginning"

}

}

#send events to Elasticsearch and also save them to /tmp/nginx.conf

output {

elasticsearch {

hosts => ["192.168.170.9:9200"]

index => "nginx-accesslog-00010-%{+YYYY.MM.dd}"

}

file {

path => "/tmp/nginx.conf"

}

}

Test the Logstash configuration syntax:

[root@node3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/stdout.conf -t
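The index name in this config ends with the Logstash sprintf date pattern %{+YYYY.MM.dd}, which is filled in from each event's @timestamp in UTC. The nginx log timestamps are +0800, so requests logged between 00:00 and 08:00 local time land in the previous day's index. Here is a sketch that reproduces the resulting name for a given Unix time. It assumes GNU date, and `daily_index` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# daily_index PREFIX EPOCH_SECONDS
# Mimic the "PREFIX-%{+YYYY.MM.dd}" index name: the date comes from the
# event time in UTC, not from the server's local timezone.
# Assumes GNU date (supports -d @EPOCH).
daily_index() {
  local prefix=$1 epoch=$2
  echo "${prefix}-$(date -u -d "@${epoch}" +%Y.%m.%d)"
}

# 2019-04-14 00:30 at +0800 is still 2019-04-13 16:30 UTC:
daily_index nginx-accesslog-00010 1555173000
# prints nginx-accesslog-00010-2019.04.13
```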

[root@node3 conf.d]# systemctl restart logstash

Check the Logstash log to confirm that events are being sent to Elasticsearch:

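[root@node3 conf.d]# tail -f /var/log/logstash/logstash-plain.log

On the Elasticsearch node, index data is stored under /data/elasticsearch/nodes/0/indices/. The directory names look random because each directory is named after the index's UUID, not its name: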

[root@node1 ~]# ll /data/elasticsearch/nodes/0/indices/

total 0

drwxr-xr-x. 8 elasticsearch elasticsearch 65 Apr 13 13:55 MvEuf88tSk2E69QYjbUAPw

drwxr-xr-x. 8 elasticsearch elasticsearch 65 Apr 13 15:51 ZmxdAucdQnmY9jpzwAbGYw

Installing Kibana

Kibana and Elasticsearch must be the same version, otherwise Kibana reports an error.
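One way to catch a version mismatch before installing is to compare the versions embedded in the two package file names. The sketch below assumes the naming used in this article (elasticsearch-X.Y.Z.rpm, kibana-X.Y.Z-x86_64.rpm). `pkg_version` and `versions_match` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# pkg_version FILE
# Hypothetical helper: extract X.Y.Z from an Elastic package file name,
# e.g. kibana-5.4.3-x86_64.rpm -> 5.4.3, elasticsearch-5.4.3.rpm -> 5.4.3
pkg_version() {
  basename "$1" | sed -E 's/^[a-z]+-([0-9]+\.[0-9]+\.[0-9]+).*/\1/'
}

# versions_match FILE1 FILE2: succeed only if both packages have the same version
versions_match() {
  [ "$(pkg_version "$1")" = "$(pkg_version "$2")" ]
}

if versions_match kibana-5.4.3-x86_64.rpm elasticsearch-5.4.3.rpm; then
  echo "versions match"
else
  echo "version mismatch: do not install this Kibana package" >&2
fi
```

Once the packages are installed, `rpm -q --queryformat '%{VERSION}\n' kibana` on node1 and the same query for elasticsearch on node2 report the installed versions.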

Download and install the Kibana package:

[root@node1 ~]# cd /usr/local/src

[root@node1 src]# wget https://artifacts.elastic.co/downloads/kibana/kibana-5.4.3-x86_64.rpm

[root@node1 src]# yum install kibana-5.4.3-x86_64.rpm -y
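The stock kibana.yml ships with these settings commented out (for example #server.port: 5601), so editing it means uncommenting or adding lines. Below is a sketch of a helper for flat top-level keys, assuming bash and awk. `set_yml_key` is hypothetical and does not understand nested YAML. awk -v interprets backslash escapes, so values should not contain backslashes.

```shell
#!/usr/bin/env bash
# set_yml_key FILE KEY VALUE
# Hypothetical helper: replace an existing "KEY:" line (commented out or
# not) with "KEY: VALUE", or append it if the key is absent.
# Only flat, top-level keys such as server.port are handled.
set_yml_key() {
  local file=$1 key=$2 value=$3
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^#?[ \t]*/, "", line)   # ignore a leading "#" and whitespace
      if (index(line, k ":") == 1) { print k ": " v; done = 1; next }
      print
    }
    END { if (!done) print k ": " v }' "$file" > "${file}.tmp" &&
    mv "${file}.tmp" "$file"
}

# Usage (as root on node1):
# set_yml_key /etc/kibana/kibana.yml server.port 5601
# set_yml_key /etc/kibana/kibana.yml server.host '"0.0.0.0"'
# set_yml_key /etc/kibana/kibana.yml elasticsearch.url '"http://192.168.170.9:9200"'
```

If a file contains both a commented and an active line for the same key, both are rewritten. Check the result with grep "^[a-z]" /etc/kibana/kibana.yml.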

Edit the configuration file:

[root@node1 src]# vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: http://192.168.170.9:9200 #the Elasticsearch API that Kibana queries

Start Kibana and enable it at boot:

[root@node1 src]# systemctl start kibana

[root@node1 src]# systemctl enable kibana

Verify that Kibana started:

[root@node1 src]# ps -ef | grep kibana

[root@node1 src]# ss -tnl | grep 5601

[root@node1 src]# lsof -i:5601

Collect the nginx access log and the system log, and send both to Elasticsearch:

[root@node3 conf.d]# vi stdout.conf

#collect the nginx access log and the system log

input {

file {

type => "nginxlog-0010"

path => "/usr/local/nginx/logs/access.log"

start_position => "beginning"

}

file {

type => "messagelog-0010"

path => "/var/log/messages"

start_position => "beginning"

}

}

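#send the collected events to Elasticsearch: nginx logs go to the nginx-accesslog-0010-* indices and system logs to messagelog-0010-*; the file output is unconditional, so every event is also written to /tmp/nginx.conf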

output {

if [type] == "nginxlog-0010" {

elasticsearch {

hosts => ["192.168.170.9:9200"]

index => "nginx-accesslog-0010-%{+YYYY.MM.dd}"

}

}

file {

path => "/tmp/nginx.conf"

}

if [type] == "messagelog-0010" {

elasticsearch {

hosts => ["192.168.170.9:9200"]

index => "messagelog-0010-%{+YYYY.MM.dd}"

}

}

}

Restart the service and watch its startup log:

[root@node3 ~]# systemctl restart logstash

[root@node3 conf.d]# tail -f /var/log/logstash/logstash-plain.log

[2019-04-14T21:11:57,871][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_d2343edad78a7252d2ea9cba15bbff6d", :path=>["/usr/local/nginx/logs/access.log"]}

[2019-04-14T21:11:57,876][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_452905a167cf4509fd08acb964fdb20c", :path=>["/var/log/messages"]}

[2019-04-14T21:11:58,040][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2019-04-14T21:11:58,177][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2019-04-14T21:11:58,214][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2019-04-14T21:11:58,256][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2019-04-14T21:11:58,446][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-04-14T21:12:00,144][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2019-04-14T21:12:01,535][INFO ][logstash.outputs.file ] Opening file {:path=>"/tmp/nginx.conf"}

[2019-04-14T21:12:01,609][INFO ][logstash.outputs.file ] Opening file {:path=>"/tmp/nginx.conf"}

Installing and configuring nginx as a proxy for Kibana, with login authentication

Configuring Kibana

[root@node1 ~]# grep "^[a-z]" /etc/kibana/kibana.yml

server.port: 5601

server.host: "127.0.0.1" #listen address; set to localhost for this test, so Kibana is reachable only through the proxy

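elasticsearch.url: "http://192.168.170.8:9200" #URL of the Elasticsearch instance that handles all queries; use this host's Elasticsearch IP. Another Elasticsearch node also works, but then Kibana could not be reached through the reverse proxy.

Restart Kibana and check the local listening ports: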

[root@node1 ~]# systemctl restart kibana

[root@node1 ~]# ss -tunlp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

udp UNCONN 0 0 127.0.0.1:323 *:* users:(("chronyd",pid=530,fd=1))

udp UNCONN 0 0 ::1:323 :::* users:(("chronyd",pid=530,fd=2))

tcp LISTEN 0 128 127.0.0.1:5601 *:* users:(("node",pid=27837,fd=10))
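In this output Kibana listens only on 127.0.0.1:5601, as set by server.host above. Other hosts therefore cannot reach it directly, and a local reverse proxy is needed. Here is a sketch that checks this from ss output. `loopback_only` is a hypothetical helper, and it assumes the ss column layout shown above, where the first ADDR:PORT field on a LISTEN line is the local address.

```shell
#!/usr/bin/env bash
# loopback_only PORT
# Hypothetical helper: read `ss -tnl` (or `ss -tunlp`) output on stdin and
# succeed only if PORT is listening and every listening address for it is
# a loopback address (127.x.x.x or ::1).
loopback_only() {
  awk -v port="$1" '
    $1 == "LISTEN" || $2 == "LISTEN" {
      for (i = 1; i <= NF; i++) {
        if ($i ~ (":" port "$")) {
          seen = 1
          # Strip ":PORT" to get the address part.
          addr = substr($i, 1, length($i) - length(port) - 1)
          if (addr !~ /^(127\.|\[?::1]?$)/) public = 1
          break  # the first ADDR:PORT field is the local address
        }
      }
    }
    END { exit !(seen && !public) }'
}

# Usage on node1:
# ss -tnl | loopback_only 5601 && echo "Kibana is reachable only locally"
```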

Install nginx with yum and configure it as a reverse proxy:

[root@node1 ~]# yum install nginx -y

[root@node1 ~]# cd /etc/nginx/conf.d

[root@node1 conf.d]# vi proxy-kibana.conf

upstream kibana_server {

server 127.0.0.1:5601 weight=1 max_fails=3 fail_timeout=60;

}

server {

listen 80;

server_name kibana1013.test.com; #domain name to serve

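location / {

auth_basic "Restricted Access"; #ask for a username and password

auth_basic_user_file /etc/nginx/htpasswd.users; #password file created with htpasswd below

proxy_pass http://127.0.0.1:5601; #Kibana port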

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

Start nginx:

[root@node1 ~]# nginx -t

[root@node1 ~]# systemctl start nginx

[root@node1 ~]# netstat -lntp | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 6085/nginx: master

Configuring authentication

Create the password file for authentication. This requires the httpd-tools package, which provides htpasswd:

[root@node1 conf.d]# yum install -y httpd-tools

[root@node1 conf.d]# htpasswd -bc /etc/nginx/htpasswd.users kibanauser 123456

[root@node1 conf.d]# cat /etc/nginx/htpasswd.users

kibanauser:$apr1$FKK.o3xu$hAg7Si5cJtSKnYGXgfxNJ0

To add more users, run htpasswd without -c. The -c option recreates the file and discards the existing users:

[root@node1 ~]# htpasswd -b /etc/nginx/htpasswd.users jerry redhat

Adding password for user jerry

[root@node1 ~]# cat /etc/nginx/htpasswd.users

admin:$apr1$9AMiN0Ud$Q95cyrPix89nw3h3d4cwo0

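jerry:$apr1$s5QCG32f$9KQFhsiw.PYmmmst.5r/q1

Make sure the client's hosts file resolves the domain name. If the entry is missing, add it, otherwise the site cannot be reached: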

[root@localhost ~]# vi /etc/hosts

192.168.170.8 kibana1013.test.com

Now, when you log in again at http://kibana1013.test.com, you are prompted for a username and password.

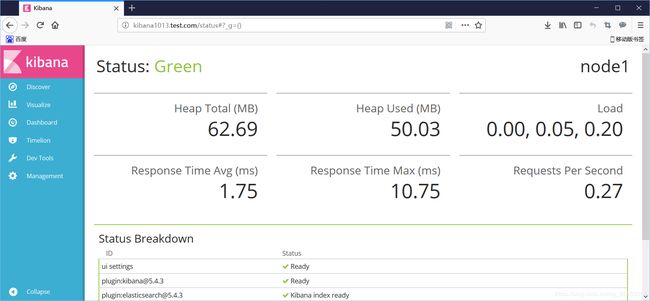

The status page shows Kibana's current status: http://kibana1013.test.com/status
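If httpd-tools is not available, you can generate an entry in the same $apr1$ (Apache MD5) format with openssl passwd -apr1. The sketch below assumes OpenSSL is installed, and `add_htpasswd_user` is a hypothetical helper. Like htpasswd -b, it takes the password as a command-line argument, so the password is visible in the process list while the command runs.

```shell
#!/usr/bin/env bash
# add_htpasswd_user FILE USER PASSWORD
# Hypothetical helper: write USER's entry to an nginx auth_basic password
# file, hashing PASSWORD with the Apache MD5 ($apr1$) scheme via OpenSSL.
# An existing entry for USER is replaced instead of duplicated.
add_htpasswd_user() {
  local file=$1 user=$2 pass=$3 hash
  hash=$(openssl passwd -apr1 "$pass") || return 1
  touch "$file"
  # Keep every line except USER's old entry (grep exits 1 if nothing is kept).
  grep -v "^${user}:" "$file" > "${file}.tmp" || true
  printf '%s:%s\n' "$user" "$hash" >> "${file}.tmp"
  mv "${file}.tmp" "$file"
}

# Usage (as root on node1):
# add_htpasswd_user /etc/nginx/htpasswd.users jerry redhat
```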