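Android Camera2 HAL3 (Part 2): the startPreview preview flow

Introduction

The previous part analyzed how Camera2 HAL3 is initialized. This part walks through the preview flow, using Camera2Client::startPreview as the entry point.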

Sequence diagram

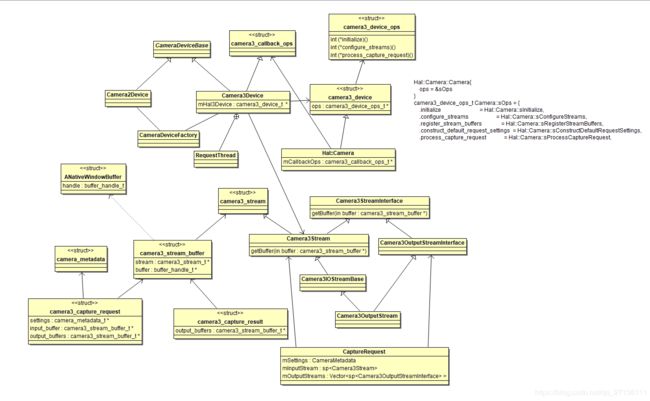

Class diagram

Creating and starting the stream

startPreview first calls mStreamingProcessor->updatePreviewStream to create the Camera3OutputStream:

status_t Camera2Client::startPreview() {

...

SharedParameters::Lock l(mParameters);

//From the previous part we know that l.mParameters.initialize simply calls Parameters::initialize with a Mutex held

//so l.mParameters here likewise returns the Parameters object while holding that Mutex

return startPreviewL(l.mParameters, false);

}

status_t Camera2Client::startPreviewL(Parameters &params, bool restart) {

...

res = mStreamingProcessor->updatePreviewStream(params);

....

}

status_t StreamingProcessor::updatePreviewStream(const Parameters &params) {

sp<CameraDeviceBase> device = mDevice.promote();//from the previous part we know that mDevice points to the Camera3Device

if (mPreviewStreamId == NO_STREAM) {

res = device->createStream(mPreviewWindow,

params.previewWidth, params.previewHeight,

CAMERA2_HAL_PIXEL_FORMAT_OPAQUE, &mPreviewStreamId);

}

}

status_t Camera3Device::createStream(sp<ANativeWindow> consumer,

uint32_t width, uint32_t height, int format, int *id) {

newStream = new Camera3OutputStream(mNextStreamId, consumer,width, height, format);

mOutputStreams.add(mNextStreamId, newStream);

}

Camera3OutputStream::Camera3OutputStream(int id, sp<ANativeWindow> consumer,uint32_t width, uint32_t height, int format) :

Camera3IOStreamBase(id, CAMERA3_STREAM_OUTPUT, width, height, /*maxSize*/0, format),

mConsumer(consumer),

mTransform(0),

mTraceFirstBuffer(true) {

......

}

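One variable deserves attention while the Camera3OutputStream is being created: mPreviewWindow. Let's see what it is.

When the camera is initialized, Camera2Client::setPreviewTarget is called to pass in the drawing interface IGraphicBufferProducer, from which a Surface is created. A Surface is essentially a canvas: whatever is drawn on the various canvases is ultimately composited by SurfaceFlinger and output to the screen.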

status_t Camera2Client::setPreviewTarget( const sp<IGraphicBufferProducer>& bufferProducer) {

sp<IBinder> binder;

sp<ANativeWindow> window;

if (bufferProducer != 0) {

binder = bufferProducer->asBinder();

// Using controlledByApp flag to ensure that the buffer queue remains in

// async mode for the old camera API, where many applications depend

// on that behavior.

window = new Surface(bufferProducer, /*controlledByApp*/ true);

}

return setPreviewWindowL(binder, window);

}

status_t Camera2Client::setPreviewWindowL(const sp<IBinder>& binder,sp<ANativeWindow> window) {

...

res = mStreamingProcessor->setPreviewWindow(window);

}

status_t StreamingProcessor::setPreviewWindow(sp<ANativeWindow> window) {

...

mPreviewWindow = window;

}

So mPreviewWindow is simply the Surface created above.

Back to startPreviewL:

status_t Camera2Client::startPreviewL(Parameters &params, bool restart) {

...

res = mStreamingProcessor->updatePreviewStream(params);

...

outputStreams.push(getPreviewStreamId());//each Camera3OutputStream corresponds to one id

res = mStreamingProcessor->updatePreviewRequest(params);

...

res = mStreamingProcessor->startStream(StreamingProcessor::PREVIEW,

outputStreams);

}

status_t StreamingProcessor::updatePreviewRequest(const Parameters &params) {

sp<CameraDeviceBase> device = mDevice.promote();

res = device->createDefaultRequest(CAMERA3_TEMPLATE_PREVIEW,&mPreviewRequest);

res = mPreviewRequest.update(ANDROID_REQUEST_ID,&mPreviewRequestId, 1);//mPreviewRequest is used again further down

}

status_t Camera3Device::createDefaultRequest(int templateId,CameraMetadata *request) {

const camera_metadata_t *rawRequest;

rawRequest = mHal3Device->ops->construct_default_request_settings(mHal3Device, templateId);//the HAL fills in the default settings

*request = rawRequest;//from the previous part we already know that CameraMetadata wraps a camera_metadata_t

}

Next, the mStreamingProcessor->startStream call made by startPreviewL:

status_t StreamingProcessor::startStream(StreamType type,

const Vector<int32_t> &outputStreams) {

CameraMetadata &request = (type == PREVIEW) ?//type is PREVIEW here, so the mPreviewRequest assigned above is used

mPreviewRequest : mRecordingRequest;

res = request.update(

ANDROID_REQUEST_OUTPUT_STREAMS,

outputStreams);//store the id of the Camera3OutputStream created above in this request

...

res = device->setStreamingRequest(request);

}

status_t Camera3Device::setStreamingRequest(const CameraMetadata &request,int64_t* /*lastFrameNumber*/) {

List<const CameraMetadata> requests;

requests.push_back(request);

return setStreamingRequestList(requests, /*lastFrameNumber*/NULL);

}

status_t Camera3Device::setStreamingRequestList(const List<const CameraMetadata> &requests,int64_t *lastFrameNumber) {

return submitRequestsHelper(requests, /*repeating*/true, lastFrameNumber);

}

status_t Camera3Device::submitRequestsHelper(

const List<const CameraMetadata> &requests, bool repeating,

/*out*/

int64_t *lastFrameNumber) {

RequestList requestList;

res = convertMetadataListToRequestListLocked(requests, /*out*/&requestList);//build CaptureRequest objects from the CameraMetadata

if (repeating) {//the second argument is true

res = mRequestThread->setRepeatingRequests(requestList, lastFrameNumber);

}

}

status_t Camera3Device::convertMetadataListToRequestListLocked(...){

for (List<const CameraMetadata>::const_iterator it = metadataList.begin();it != metadataList.end(); ++it){

sp<CaptureRequest> newRequest = setUpRequestLocked(*it);

}

}

sp<Camera3Device::CaptureRequest> Camera3Device::setUpRequestLocked(...){

sp<CaptureRequest> newRequest = createCaptureRequest(request);

}

sp<Camera3Device::CaptureRequest> Camera3Device::createCaptureRequest(...){

camera_metadata_entry_t streams = //fetch the ids stored earlier

newRequest->mSettings.find(ANDROID_REQUEST_OUTPUT_STREAMS);

for (size_t i = 0; i < streams.count; i++) {

int idx = mOutputStreams.indexOfKey(streams.data.i32[i]);

sp<Camera3OutputStreamInterface> stream =mOutputStreams.editValueAt(idx);

newRequest->mOutputStreams.push(stream);//look up the output stream object and store it in the CaptureRequest

}

}
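The ID bookkeeping shared by createStream and createCaptureRequest can be pictured with a small stand-in. This is only a sketch under stated assumptions: ToyStream, ToyDevice and resolve are hypothetical names, std::map and std::shared_ptr replace the Android KeyedVector and sp types, and returning an empty vector for an unknown ID is an illustrative choice, not the framework's error handling.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for Camera3OutputStream; only the ID matters here.
struct ToyStream {
    int id;
};

// Hypothetical stand-in for the stream bookkeeping inside Camera3Device.
class ToyDevice {
public:
    // Like createStream: allocate an ID, register the stream, return the ID.
    int createStream() {
        int id = mNextStreamId++;
        assert(mOutputStreams.count(id) == 0);  // IDs are never reused here
        mOutputStreams[id] = std::make_shared<ToyStream>(ToyStream{id});
        return id;
    }

    // Like createCaptureRequest: turn the IDs stored in the request settings
    // back into stream objects. An unknown ID yields an empty result.
    std::vector<std::shared_ptr<ToyStream>> resolve(
            const std::vector<int32_t>& ids) const {
        std::vector<std::shared_ptr<ToyStream>> out;
        for (int32_t id : ids) {
            auto it = mOutputStreams.find(id);
            if (it == mOutputStreams.end()) return {};
            out.push_back(it->second);
        }
        return out;
    }

private:
    int mNextStreamId = 0;
    std::map<int, std::shared_ptr<ToyStream>> mOutputStreams;
};
```

The request itself only carries integers; the device is the single place that knows which object each integer refers to.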

status_t Camera3Device::RequestThread::setRepeatingRequests(const RequestList &requests,/*out*/ int64_t *lastFrameNumber) {

mRepeatingRequests.clear();

mRepeatingRequests.insert(mRepeatingRequests.begin(),requests.begin(), requests.end());

....

}

At this point a RequestList has been inserted into mRepeatingRequests. As long as mRepeatingRequests is not empty, RequestThread keeps issuing preview requests.
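The effect of a non-empty repeating list can be modelled in a few lines. The sketch below is a simplification, not the framework code: ToyRequestQueue, setRepeating, clearRepeating and next are hypothetical names, requests are plain ints, and where the real thread would block on a condition variable this version just returns false.

```cpp
#include <cassert>
#include <deque>
#include <vector>

// Hypothetical single-threaded model of the two containers in RequestThread.
class ToyRequestQueue {
public:
    // Like setRepeatingRequests: replace the repeating list wholesale.
    void setRepeating(const std::vector<int>& requests) { mRepeating = requests; }

    // Hypothetical helper that empties the repeating list (stops the preview).
    void clearRepeating() { mRepeating.clear(); }

    // Non-blocking model of fetching the next request.
    // Returns false when there is nothing to do.
    bool next(int* out) {
        assert(out != nullptr);
        if (mQueue.empty()) {
            if (mRepeating.empty()) return false;  // the real thread waits here
            // Refill: hand out the first repeating request, queue the rest.
            *out = mRepeating.front();
            mQueue.insert(mQueue.end(), mRepeating.begin() + 1, mRepeating.end());
            return true;
        }
        *out = mQueue.front();
        mQueue.pop_front();
        return true;
    }

private:
    std::vector<int> mRepeating;  // never consumed, only copied from
    std::deque<int> mQueue;       // consumed one request at a time
};
```

Because the repeating list is copied from rather than consumed, the queue refills itself every time it drains, which is why a single startStream call is enough to keep frames coming.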

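threadLoop and the frame data callback

Camera3Device::RequestThread::threadLoop is the thread body that processes requests. Once it has a request, it calls into the HAL to obtain image data; the HAL then calls back into Camera3Device::processCaptureResult, and through several layers of calls the image data is handed to the display system to be shown.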

sp<Camera3Device::CaptureRequest> Camera3Device::RequestThread::waitForNextRequest() {

while (mRequestQueue.empty()) {

if (!mRepeatingRequests.empty()) {//as shown above this list is not empty and is never erased, so this branch is taken every time

const RequestList &requests = mRepeatingRequests;

RequestList::const_iterator firstRequest =requests.begin();

nextRequest = *firstRequest;

mRequestQueue.insert(mRequestQueue.end(), ++firstRequest, requests.end());

break; //leave the loop right away, no need to wait

}

res = mRequestSignal.waitRelative(mRequestLock, kRequestTimeout);

......

}

......

return nextRequest;

}

bool Camera3Device::RequestThread::threadLoop() {

sp<CaptureRequest> nextRequest = waitForNextRequest();

camera3_capture_request_t request = camera3_capture_request_t();//build a camera3_capture_request_t

....

outputBuffers.insertAt(camera3_stream_buffer_t(), 0,

nextRequest->mOutputStreams.size());//first insert empty camera3_stream_buffer_t entries

request.output_buffers = outputBuffers.array();//store them in the request

for (size_t i = 0; i < nextRequest->mOutputStreams.size(); i++) {

res = nextRequest->mOutputStreams.editItemAt(i)->//as established above, the item obtained here is the Camera3OutputStream

getBuffer(&outputBuffers.editItemAt(i));//calls Camera3Stream::getBuffer to fill in the camera3_stream_buffer

}

....

res = mHal3Device->ops->process_capture_request(mHal3Device, &request);//call into the HAL to fetch one frame

}

status_t Camera3Stream::getBuffer(camera3_stream_buffer *buffer) {

res = getBufferLocked(buffer);

}

status_t Camera3OutputStream::getBufferLocked(camera3_stream_buffer *buffer) {

ANativeWindowBuffer* anb;

sp<ANativeWindow> currentConsumer = mConsumer;//the Surface created earlier

res = currentConsumer->dequeueBuffer(currentConsumer.get(), &anb, &fenceFd);//dequeue a buffer

handoutBufferLocked(*buffer, &(anb->handle), /*acquireFence*/fenceFd, //fill in the camera3_stream_buffer

/*releaseFence*/-1, CAMERA3_BUFFER_STATUS_OK, /*output*/true);

}

void Camera3IOStreamBase::handoutBufferLocked(camera3_stream_buffer &buffer,buffer_handle_t *handle,int acquireFence,

int releaseFence, camera3_buffer_status_t status,bool output) {

buffer.stream = this; //point back at this stream; the result callback relies on it

buffer.buffer = handle;

buffer.acquire_fence = acquireFence;

buffer.release_fence = releaseFence;

buffer.status = status;

...

}

(See the class diagram.) The stream member of camera3_stream_buffer has type camera3_stream_t *, so buffer.stream = this makes it point at camera3_stream, a base class of Camera3OutputStream. The class diagram shows that camera3_stream_buffer is a member of camera3_capture_request, so camera3_capture_request can reach the camera3_stream through that stream member. Because camera3_stream is a base class of Camera3OutputStream, the request can therefore reach the Camera3OutputStream created earlier. This point is very important: the frame data callback below is built on it.
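The pointer round trip described above can be reproduced in miniature. This is a sketch, not the framework code: toy_stream, toy_stream_buffer, ToyOutputStream and toyHalFill are hypothetical stand-ins for camera3_stream, camera3_stream_buffer, the Camera3Stream class family and the HAL, and the class hierarchy is collapsed to a single level.

```cpp
#include <cassert>

// Hypothetical miniature of the plain C structs the HAL sees.
struct toy_stream {           // plays the role of camera3_stream
    int width;
    int height;
};
struct toy_stream_buffer {    // plays the role of camera3_stream_buffer
    toy_stream* stream;
    int status;
};

// A C++ class that derives from the C struct, so a pointer to the object
// can cross the C interface and be recovered afterwards.
class ToyOutputStream : public toy_stream {
public:
    ToyOutputStream(int w, int h) : toy_stream{w, h} {}

    // Like handoutBufferLocked: record which stream owns the buffer.
    void handoutBuffer(toy_stream_buffer& buffer) {
        buffer.stream = this;
        buffer.status = 0;
    }

    // Like Camera3Stream::cast: downcast from the C struct to the class.
    static ToyOutputStream* cast(toy_stream* s) {
        return static_cast<ToyOutputStream*>(s);
    }

    void returnBuffer(const toy_stream_buffer& buffer) {
        assert(buffer.stream == this);  // the buffer must belong to this stream
        ++mReturned;
    }
    int returned() const { return mReturned; }

private:
    int mReturned = 0;
};

// Plays the role of the HAL: it only knows about the C structs.
inline void toyHalFill(toy_stream_buffer& buffer) {
    buffer.status = buffer.stream->width * buffer.stream->height;
}
```

The static_cast is only safe because every toy_stream pointer handed to the HAL was produced by handoutBuffer, which is the same assumption the real cast depends on.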

Once a camera3_capture_request has been filled in, threadLoop calls the HAL interface mHal3Device->ops->process_capture_request(mHal3Device, &request) to fetch an image from the HAL. In my case it fetches one frame.

//HAL layer

camera3_device_ops_t Camera::sOps = {

...

.configure_streams = Camera::configureStreams,

.process_capture_request = Camera::processCaptureRequest,

...

}

int Camera::processCaptureRequest(camera3_capture_request_t *request) {

const V4l2Device::VBuffer *frame = NULL;

Vector<camera3_stream_buffer> buffers;

if(request->input_buffer) {//I don't care about the input buffer here, so I just set release_fence to -1

request->input_buffer->release_fence = -1;

}

BENCHMARK_SECTION("Lock/Read") {

frame = mDev->readLock();//this really just calls ioctl(mFd, VIDIOC_DQBUF, &bufInfo) to take out one buffer

}

for(size_t i = 0; i < request->num_output_buffers; ++i) {//there is only one buffer in my case

const camera3_stream_buffer &srcBuf = request->output_buffers[i];//take out the camera3_stream_buffer discussed above

sp<Fence> acquireFence = new Fence(srcBuf.acquire_fence);//create a Fence; Fence is a synchronization mechanism of the display system

e = acquireFence->wait(1000); /* FIXME: magic number */ //wait; once this returns, the buffer dequeued by dequeueBuffer is really available

if(e == NO_ERROR) {

const Rect rect((int)srcBuf.stream->width, (int)srcBuf.stream->height);

e = GraphicBufferMapper::get().lock(*srcBuf.buffer, GRALLOC_USAGE_SW_WRITE_OFTEN, rect, (void **)&buf);//lock the buffer

switch(srcBuf.stream->format) {//this was specified during configure_streams

case HAL_PIXEL_FORMAT_RGB_565:{//I specified RGB565 here

//copy one frame of image data into buf

}

}

}

for(size_t i = 0; i < request->num_output_buffers; ++i) {//there is only one output buffer

const camera3_stream_buffer &srcBuf = request->output_buffers[i];

GraphicBufferMapper::get().unlock(*srcBuf.buffer); //unlock the buffer

buffers.push_back(srcBuf); //save it in the Vector

}

...

}

The process_capture_result invoked here was set up in the Camera3Device constructor (camera3_callback_ops::process_capture_result = &sProcessCaptureResult), so the HAL ends up calling Camera3Device's sProcessCaptureResult function, which in turn calls Camera3Device::processCaptureResult.
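The callback wiring mentioned above follows a common pattern for C interfaces: a struct of function pointers, plus a static trampoline that recovers the C++ object. A minimal sketch, assuming hypothetical names throughout (toy_callback_ops, ToyCameraDevice, toyHalDeliver) and a result reduced to a single frame number:

```cpp
#include <cassert>

// Hypothetical miniature of a C callback table such as camera3_callback_ops.
struct toy_callback_ops {
    void (*process_capture_result)(const toy_callback_ops* cb, int frameNumber);
};

// Plays the role of Camera3Device: it derives from the ops struct and
// fills in the function pointer in its constructor.
class ToyCameraDevice : public toy_callback_ops {
public:
    ToyCameraDevice() {
        toy_callback_ops::process_capture_result = &sProcessCaptureResult;
    }
    int lastFrame() const { return mLastFrame; }

private:
    // Static trampoline: a plain function pointer cannot carry "this",
    // so the object is recovered from the ops pointer itself.
    static void sProcessCaptureResult(const toy_callback_ops* cb, int frameNumber) {
        assert(cb != nullptr);
        ToyCameraDevice* d =
            const_cast<ToyCameraDevice*>(static_cast<const ToyCameraDevice*>(cb));
        d->processCaptureResult(frameNumber);
    }

    void processCaptureResult(int frameNumber) { mLastFrame = frameNumber; }

    int mLastFrame = -1;
};

// Plays the role of the HAL, which only holds the C ops pointer.
inline void toyHalDeliver(const toy_callback_ops* ops, int frameNumber) {
    ops->process_capture_result(ops, frameNumber);
}
```

Passing the ops pointer back as the first argument is what lets the static function find the right object without any global state.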

void Camera3Device::processCaptureResult(const camera3_capture_result *result) {

for (size_t i = 0; i < result->num_output_buffers; i++){

//result->output_buffers[i].stream is the pointer that was set to the Camera3IOStreamBase object earlier (buffer.stream = this)

//so here it is cast back to a pointer to its parent class, Camera3Stream

Camera3Stream *stream =Camera3Stream::cast(result->output_buffers[i].stream);

res = stream->returnBuffer(result->output_buffers[i], timestamp);

....

}

}

status_t Camera3Stream::returnBuffer(const camera3_stream_buffer &buffer,nsecs_t timestamp) {

status_t res = returnBufferLocked(buffer, timestamp);

}

status_t Camera3OutputStream::returnBufferLocked( const camera3_stream_buffer &buffer,nsecs_t timestamp) {

status_t res = returnAnyBufferLocked(buffer, timestamp, /*output*/true);

}

status_t Camera3IOStreamBase::returnAnyBufferLocked(const camera3_stream_buffer &buffer,nsecs_t timestamp,bool output) {

sp<Fence> releaseFence;

res = returnBufferCheckedLocked(buffer, timestamp, output,

&releaseFence);

}

status_t Camera3OutputStream::returnBufferCheckedLocked(

const camera3_stream_buffer &buffer,

nsecs_t timestamp,

bool output,

/*out*/

sp<Fence> *releaseFenceOut) {

.....

res = currentConsumer->queueBuffer(currentConsumer.get(),//hand the frame to the display system, which then shows it on screen

container_of(buffer.buffer, ANativeWindowBuffer, handle),

anwReleaseFence);

}
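The container_of call in queueBuffer recovers the enclosing ANativeWindowBuffer from a pointer to its handle member. The idiom can be sketched with offsetof; ToyNativeBuffer and bufferFromHandle are hypothetical names, and an int stands in for the real handle type.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical miniature of a buffer struct: the handle is just one
// member of a larger standard-layout struct.
struct ToyNativeBuffer {
    int width;
    int height;
    int handle;   // stands in for the real buffer handle
};

// Recover the enclosing struct from a pointer to its "handle" member by
// subtracting the member's offset, which is the container_of idiom.
inline ToyNativeBuffer* bufferFromHandle(int* handlePtr) {
    assert(handlePtr != nullptr);
    char* base = reinterpret_cast<char*>(handlePtr) -
                 offsetof(ToyNativeBuffer, handle);
    return reinterpret_cast<ToyNativeBuffer*>(base);
}
```

This only works when the pointer really does point at the handle member of such a struct, which holds in the preview path because the stream handed out &(anb->handle) when it dequeued the buffer.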