Mask-rcnn训练自己的数据实践

Mask-rcnn训练自己的数据实践,这里只做了一类。

主要步骤:

1. 数据准备

(1)做标签:这里用的labelme,一张图片对应一个.json文件。数据大小1024×1024。

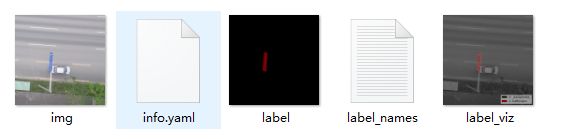

(2)转数据格式:在./labelme/cli/路径下找到 json_to_dataset.py,这里需要小改动一下,实现批量转格式。将步骤(1)中的.json转成训练需要的数据,一张图片对应5个文件,具体如下图。

2. mask-rcnn下载地址:https://github.com/matterport/Mask_RCNN

pre-trained model: https://github.com/matterport/Mask_RCNN/releases

3. 写train.py文件:

import os

import sys

import random

import math

import re

import time

import numpy as np

import cv2

import matplotlib

import matplotlib.pyplot as plt

from PIL import Image

import yaml

# Root directory of the project

ROOT_DIR = os.path.abspath("./")

# Import Mask RCNN

sys.path.append(ROOT_DIR) # To find local version of the library

from mrcnn.config import Config

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

from mrcnn.model import log

# Directory to save logs and trained model

MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# iter_num=0

# Local path to trained weights file

COCO_MODEL_PATH = os.path.join(ROOT_DIR, 'pretrain_model' , "mask_rcnn_coco.h5").replace('//', '/')

# Download COCO trained weights from Releases if needed

if not os.path.exists(COCO_MODEL_PATH):

utils.download_trained_weights(COCO_MODEL_PATH)

##Confiurations

class ShapesConfig(Config):

"""Configuration for training on the toy shapes dataset.

Derives from the base Config class and overrides values specific

to the toy shapes dataset.

"""

# Give the configuration a recognizable name

NAME = "shapes"

# Train on 1 GPU and 8 images per GPU. We can put multiple images on each

# GPU because the images are small. Batch size is 8 (GPUs * images/GPU).

GPU_COUNT = 1

IMAGES_PER_GPU = 1

# Number of classes (including background)

NUM_CLASSES = 1 + 1 # background + 1 shapes

# Use small images for faster training. Set the limits of the small side

# the large side, and that determines the image shape.

IMAGE_MIN_DIM = 1024

IMAGE_MAX_DIM = 1024

# Use smaller anchors because our image and objects are small

RPN_ANCHOR_SCALES = (8, 16, 32, 64, 128) # anchor side in pixels

# Reduce training ROIs per image because the images are small and have

# few objects. Aim to allow ROI sampling to pick 33% positive ROIs.

TRAIN_ROIS_PER_IMAGE = 32

# Use a small epoch since the data is simple

STEPS_PER_EPOCH = 238

# use small validation steps since the epoch is small

VALIDATION_STEPS = 100

config = ShapesConfig()

config.display()

#dataset

class ShapesDataset(utils.Dataset):

#得到该图中有多少个实例(物体)

def get_obj_index(self, image):

n = np.max(image)

return n

#解析labelme中得到的yaml文件,从而得到mask每一层对应的实例标签

def from_yaml_get_class(self,image_id):

info = self.image_info[image_id]

with open(info['yaml_path']) as f:

temp=yaml.load(f.read())

labels=temp['label_names']

del labels[0]

return labels

#重新写draw_mask

def draw_mask(self, num_obj, mask, image, image_id):

info = self.image_info[image_id]

for index in range(num_obj):

for i in range(info['width']):

for j in range(info['height']):

at_pixel = image.getpixel((i, j))

if at_pixel == index + 1:

mask[j, i, index] =1

return mask

def load_shapes(self, count, height, width, imgfolder, folders_list):

# Add classes

self.add_class("shapes", 1, "trafficsign")

# Add images

for i in range(count):

foldername = folders_list[i]

img_path = imgfolder + foldername + '/' + 'img.png'

mask_path = imgfolder + foldername + '/' + 'label.png'

yaml_path = imgfolder + foldername + '/' + 'info.yaml'

self.add_image("shapes", image_id=i, path=img_path, width=width, height=height, mask_path=mask_path,yaml_path=yaml_path)

#重写load_mask

def load_mask(self, image_id):

"""Generate instance masks for shapes of the given image ID.

"""

# global iter_num

info = self.image_info[image_id]

count = 1 # number of object

img = Image.open(info['mask_path'])

num_obj = self.get_obj_index(img)

mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)

mask = self.draw_mask(num_obj, mask, img, image_id)

occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)

for i in range(count - 2, -1, -1):

mask[:, :, i] = mask[:, :, i] * occlusion

occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))

labels=[]

labels=self.from_yaml_get_class(image_id)

labels_form=[]

for i in range(len(labels)):

if labels[i].find("trafficsign")!=-1:

#print "box"

labels_form.append("trafficsign")

class_ids = np.array([self.class_names.index(s) for s in labels_form])

return mask, class_ids.astype(np.int32)

# Training dataset

imgfolder_train = '/home/liesmars/maoz/Data/TStraning/train/'

folder_train_list = os.listdir(imgfolder_train)

count_train = len(os.listdir(imgfolder_train))

dataset_train = ShapesDataset()

dataset_train.load_shapes(count_train, config.IMAGE_SHAPE[0], config.IMAGE_SHAPE[1], imgfolder_train, folder_train_list)

dataset_train.prepare()

# Validation dataset

imgfolder_val = '/home/liesmars/maoz/Data/TStraning/val/'

folder_val_list = os.listdir(imgfolder_val)

count_val = len(os.listdir(imgfolder_val))

dataset_val = ShapesDataset()

dataset_val.load_shapes(count_val, config.IMAGE_SHAPE[0], config.IMAGE_SHAPE[1], imgfolder_val, folder_val_list)

dataset_val.prepare()

# Create model in training mode

model = modellib.MaskRCNN(mode="training", config=config,

model_dir=MODEL_DIR)

# Which weights to start with?

init_with = "coco" # imagenet, coco, or last

if init_with == "imagenet":

model.load_weights(model.get_imagenet_weights(), by_name=True)

elif init_with == "coco":

# Load weights trained on MS COCO, but skip layers that

# are different due to the different number of classes

# See README for instructions to download the COCO weights

model.load_weights(COCO_MODEL_PATH, by_name=True,

exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",

"mrcnn_bbox", "mrcnn_mask"])

elif init_with == "last":

# Load the last model you trained and continue training

model.load_weights(model.find_last(), by_name=True)

# Train

model.train(dataset_train, dataset_val,

learning_rate=config.LEARNING_RATE,

epochs=100,

layers="all")

可以用tensorboard看loss曲线,模型和event文件在logs文件夹里面。

4. 准备test.py

import os

import sys

import random

import math

import numpy as np

import skimage.io

import matplotlib

import matplotlib.pyplot as plt

from PIL import Image

# Root directory of the project

ROOT_DIR = os.path.abspath("./")

# Import Mask RCNN

sys.path.append(ROOT_DIR) # To find local version of the library

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

from mrcnn.config import Config

# Import COCO config

# sys.path.append(os.path.join(ROOT_DIR, "samples/coco/")) # To find local version

# import coco

import mrcnn

import pickle

# Directory to save logs and trained model

MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Local path to trained weights file

MODEL_PATH = os.path.join(MODEL_DIR, "shapes20191012T1103/mask_rcnn_shapes_0080.h5")

# Download COCO trained weights from Releases if needed

# Directory of images to run detection on

IMAGE_DIR = os.path.join(ROOT_DIR, "img")

# Directory to run save the detection results

IMAGE_SAVE = os.path.join(ROOT_DIR, "imgresult")

if not os.path.exists(IMAGE_SAVE):

os.makedirs(IMAGE_SAVE)

##Confiurations

class ShapesConfig(Config):

"""Configuration for training on the toy shapes dataset.

Derives from the base Config class and overrides values specific

to the toy shapes dataset.

"""

# Give the configuration a recognizable name

NAME = "shapes"

# Train on 1 GPU and 8 images per GPU. We can put multiple images on each

# GPU because the images are small. Batch size is 8 (GPUs * images/GPU).

GPU_COUNT = 1

IMAGES_PER_GPU = 1

# Number of classes (including background)

NUM_CLASSES = 1 + 1 # background + 1 shapes

# Use small images for faster training. Set the limits of the small side

# the large side, and that determines the image shape.

IMAGE_MIN_DIM = 1024

IMAGE_MAX_DIM = 1024

# Use smaller anchors because our image and objects are small

RPN_ANCHOR_SCALES = (8, 16, 32, 64, 128) # anchor side in pixels

# Reduce training ROIs per image because the images are small and have

# few objects. Aim to allow ROI sampling to pick 33% positive ROIs.

TRAIN_ROIS_PER_IMAGE = 32

# Use a small epoch since the data is simple

STEPS_PER_EPOCH = 238

# use small validation steps since the epoch is small

VALIDATION_STEPS = 100

class InferenceConfig(ShapesConfig):

# Set batch size to 1 since we'll be running inference on

# one image at a time. Batch size = GPU_COUNT * IMAGES_PER_GPU

GPU_COUNT = 1

IMAGES_PER_GPU = 1

config = InferenceConfig()

config.display()

# Create model object in inference mode.

model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR, config=config)

# Load weights trained on MS-COCO

model.load_weights(MODEL_PATH, by_name=True)

# COCO Class names

# Index of the class in the list is its ID. For example, to get ID of

# the teddy bear class, use: class_names.index('teddy bear')

class_names = ['BG', 'trafficsign']

# Load a random image from the images folder

# file_names = next(os.walk(IMAGE_DIR))[2]

file_names = os.listdir(IMAGE_DIR)

# APs = []

for file in file_names:

image = skimage.io.imread(os.path.join(IMAGE_DIR, file))

# Run detection

results = model.detect([image], verbose=1)

# Visualize results

r = results[0]

savepath = IMAGE_SAVE + '/' + file # the path to save the image result of testing

name, ext = os.path.splitext(file)

arr_mask = r['masks']

## save segmentaion result of object

np.save(IMAGE_SAVE+'/'+ name +'.npy', arr_mask)

with open(IMAGE_SAVE + '/' + name + '.pkl', 'wb') as f:

pickle.dump( {'rois':r['rois'], 'class_ids':r['class_ids'], 'class_names':class_names,'scores': r['scores']} , f)

## save detection result with plt

visualize.display_instances(savepath, image, r['rois'], r['masks'], r['class_ids'], class_names, r['scores'])

5. evaluation:

(1) 将输出预测的结果转为label的图片,用作后面计算IoU。这里的npy文件是预测结果的array,在test阶段生成的。

# To convert the detection results(instance segmantation result) to image for evaluation

from numpy import *

import numpy as np

import cv2

import os

npyDir = './npy/'

outputDir = './pre-label/'

if not os.path.exists(outputDir):

os.makedirs(outputDir)

for f in os.listdir(npyDir):

path = os.path.join(npyDir, f)

savepath = os.path

mask= np.load(os.path.join(npyDir, f))

fname, ext = os.path.splitext(f)

output = outputDir + fname +'.png'

objnum = mask.shape[2] #object number equels the channel of mask.

b_0 = np.zeros((1024,1024), dtype=float)

for i in range (objnum):

b_0 += 1*np.float32(mask[:,:,i]) #Matrix slice

b_0 = np.reshape(b_0, (1024,1024,1))

if not os.path.exists(output):

cv2.imwrite(output, 1*np.float32(b_0))(2) 计算IoU,评价训练结果。

# -*- coding: utf-8 -*-

import os

import cv2

import numpy as np

class IOUMetric:

"""

Class to calculate mean-iou using fast_hist method

"""

def __init__(self, num_classes):

self.num_classes = num_classes

self.hist = np.zeros((num_classes, num_classes))

def _fast_hist(self, label_pred, label_true):

# 找出标签中需要计算的类别,去掉了背景

mask = (label_true >= 0) & (label_true < self.num_classes)

hist = np.bincount(

self.num_classes * label_true[mask].astype(int) +

label_pred[mask], minlength=self.num_classes ** 2).reshape(self.num_classes, self.num_classes)

return hist

# input:prediction and true label.

# 语义分割的任务是为每个像素点分配一个label

def evaluate(self, predictions, gts):

for lp, lt in zip(predictions, gts):

assert len(lp.flatten()) == len(lt.flatten())

self.hist += self._fast_hist(lp.flatten(), lt.flatten())

# miou

iou = np.diag(self.hist) / (self.hist.sum(axis=1) + self.hist.sum(axis=0) - np.diag(self.hist))

miou = np.nanmean(iou)

# -----------------其他指标------------------------------

# mean acc

acc = np.diag(self.hist).sum() / self.hist.sum()

acc_cls = np.nanmean(np.diag(self.hist) / self.hist.sum(axis=1))

freq = self.hist.sum(axis=1) / self.hist.sum()

fwavacc = (freq[freq > 0] * iou[freq > 0]).sum()

return acc, acc_cls, iou, miou, fwavacc

if __name__ == '__main__':

label_path = './Labelme2json'

predict_path = './pre-label'

pres = os.listdir(predict_path)

labels = []

predicts = []

for im in pres:

name, ext = os.path.splitext(im)

if ext == '.png':

lab_path = os.path.join(label_path, name, 'label.png').replace('\\','/')

pre_path = os.path.join(predict_path, im)

label = cv2.imread(lab_path,0) #1024,1024

test = label.flatten() #(1048576,)

pre = cv2.imread(pre_path,0)

labels.append(label)

predicts.append(pre)

el = IOUMetric(2) #backgroud + class

acc, acc_cls, iou, miou, fwavacc = el.evaluate(predicts, labels)

print('acc: ',acc)

print('acc_cls: ',acc_cls)

print('iou: ',iou)

# print('miou: ',miou) #No mean IoU as there is only one class.

print('fwavacc: ',fwavacc)