面试编程

面试知识点总结

https://blog.csdn.net/oBrightLamp/article/details/85067981

ps:部分图截自其他博客,忘记是哪几篇了,如果有问题,请联系我哈

1. 实现NMS

目标: 加快检测速度,使用NMS去除冗余框,找到具有代表性的bbox,进行后续操作

算法步骤:

根据候选框的类别分类概率做排序:A

step2:从最大概率矩形框F开始,分别判断A~E与F的重叠度IOU(两框的交并比)是否大于某个设定的阈值,假设B、D与F的重叠度超过阈值,那么就扔掉B、D;

step3:从剩下的矩形框A、C、E中,选择概率最大的E,标记为要保留下来的,然后判读E与A、C的重叠度,扔掉重叠度超过设定阈值的矩形框

算法实现:

import numpy as np

def nms_op(dets, thresh):

#获取x1/y1/x2/y2/score

x1 = dets[:,0] #框的个数,P

y1 = dets[:,1]

x2 = dets[:, 2]

y2 = dets[:, 3]

scores = dets[:, 4]

#标记置信度最高的框进行保留

order = scores.argsort()[::-1] #从大到小 返回下标

#计算所有框的面积

areas = (x2-x1+1)*(y2-y1+1)

#最终保留的框

keep = []

#执行step2,计算交并比

while order.size > 0:

i = order[0]

keep.append(i)

#计算当前概率最大矩形框与其他矩形框的相交框的坐标,会用到numpy的broadcast机制,得到的是向量

xx1 = np.maximum(x1[i], x1[order[1:]])

yy1 = np.maximum(y1[i], y1[order[1:]])

xx2 = np.minimum(x2[i], x2[order[1:]])

yy2 = np.minimum(y2[i], y2[order[1:]])

#计算相交框的面积,注意矩形框不相交时w或h算出来会是负数,用0代替

w = np.maximum(0.0, xx2 - xx1 + 1)

h = np.maximum(0.0, yy2 - yy1 + 1)

inter = w * h

#计算重叠度IOU:重叠面积/(面积1+面积2-重叠面积)

ovr = inter / (areas[i] + areas[order[1:]] - inter)

#找到重叠度不高于阈值的矩形框索引

inds = np.where(ovr <= thresh)[0] #返回满足阈值要求的索引,[0]相当于step3中的E

#将order序列更新,由于前面得到的矩形框索引要比矩形框在原order序列中的索引小1,所以要把这个1加回来

order = order[inds + 1]

return keep

# test

if __name__ == "__main__":

dets = np.array([[30, 20, 230, 200, 1],

[50, 50, 260, 220, 0.9],

[210, 30, 420, 5, 0.8],

[430, 280, 460, 360, 0.7]])

thresh = 0.35

keep_dets = py_nms(dets, thresh)

print(keep_dets)

print(dets[keep_dets])

2.实现Resize函数

3.均值滤波 | 中值滤波

请对图像的中值滤波进行实现(输入为HW, kernalsize=mm)默认为:灰度图像

ps:不进行padding

均值滤波(blue)

#==========================中值滤波===================================================

from PIL import Image

import numpy as np

def MedianFilter(src, dst, k = 3, padding = None):

imarray = np.array(Image.open(src))

height, width = imarray.shape

if not padding:

edge = int((k-1)/2)

#若图像大小<卷积核大小,直接返回None

if height - 1 - edge <= edge or width - 1 - edge <= edge:

print("The parameter k is to large.")

return None

#图像大小>卷积核大小,滤波

new_arr = np.zeros((height, width), dtype = "uint8")

for i in range(height):

for j in range(width):

#四周的边框点保持不动,如上图无颜色的点

if i <= edge - 1 or i >= height - 1 - edge or j <= edge - 1 or j >= height - edge - 1:

new_arr[i, j] = imarray[i, j]

#其他进行中值操作,如上图有颜色的点

else:

new_arr[i, j] = np.median(imarray[i - edge:i + edge + 1, j - edge:j + edge + 1])

#将array转换为Image格式,保存为图片

new_im = Image.fromarray(new_arr)

new_im.save(dst)

if __name__ == '__main__':

src = 'input.jpg'

dst = 'out.jpg'

MedianFilter(src, dst)

#==========================均值滤波===================================================

ps: np.median ---> np.mean

4.实现maxpool的前向和反向

- 请写出MaxPooling层的前向计算和反向计算过程

参考答案:https://blog.csdn.net/oBrightLamp/article/details/84635308

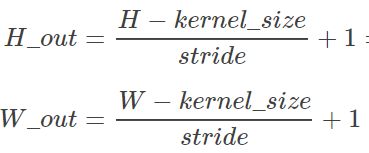

输出feature大小计算:

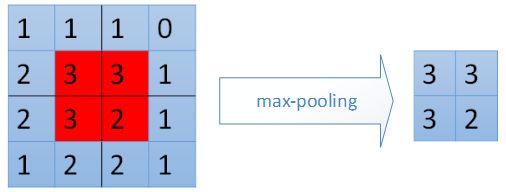

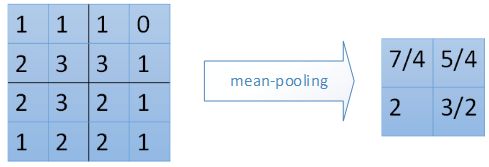

maxpool和mean pool的不同:

对于max-pooling,在前向计算时,是选取的每个22区域中的最大值,这里需要记录下最大值在每个小区域中的位置。在反向传播时,只有那个最大值对下一层有贡献,所以将残差传递到该最大值的位置,区域内其他22-1=3个位置置零。

对于mean-pooling,我们需要把残差平均分成2*2=4份,传递到前边小区域的4个单元即可。具体过程如图:

算法实现:

#============================= 前向 =======================================================

import numpy as np

import torch

class MaxPool2D():

def __init__(self,x, kernel_size=(2, 2), stride=2):

self.stride = stride

self.kernel_size = kernel_size

self.w_height = kernel_size[0]

self.w_width = kernel_size[1]

self.x = x

self.in_height = None

self.in_width = None

self.out_height = None

self.out_width = None

self.arg_max = None

def forward(self, x):

self.x = x

self.out_height = int((self.in_height - self.w_height) / self.stride) + 1

self.out_width = int((self.in_width - self.w_width) / self.stride) + 1

N, C, H, W = self.x.shape #pytorch

out = np.zeros((N, C, self.out_height, self.out_width))

for i in range(self.out_height):

for j in range(self.out_width):

x_masked = in_data[:, :, i * self.stride: i * self.stride + self.kernel_size, j * self.stride: j * self.stride + self.kernel_size]

out[:, :, i, j] = np.max(x_masked, axis=(2, 3))

return out

def backward(self, d_loss):

dx = np.zeros_like(self.x)

for i in range(self.out_height):

for j in range(self.out_width):

start_i = i * self.stride

start_j = j * self.stride

end_i = start_i + self.w_height

end_j = start_j + self.w_width

#找到最大元素在原数组中的索引

index = np.unravel_index(out[i, j], self.kernel_size)

#将该loss传递到该位置

dx[start_i: end_i, start_j: end_j][index] = d_loss[i, j]

return dx

if __name__ == '__main__':

np.set_printoptions(precision=8, suppress=True, linewidth=120)

np.random.seed(123)

x_numpy = np.random.random((1, 1, 6, 8))

x_tensor = torch.tensor(x_numpy, requires_grad=True)

max_pool_tensor = torch.nn.MaxPool2d((2, 2), 2)

max_pool_numpy = MaxPool2D((2, 2), stride=2)

out_numpy = max_pool_numpy(x_numpy[0, 0])

out_tensor = max_pool_tensor(x_tensor)

d_loss_numpy = np.random.random(out_tensor.shape)

d_loss_tensor = torch.tensor(d_loss_numpy, requires_grad=True)

out_tensor.backward(d_loss_tensor)

dx_numpy = max_pool_numpy.backward(d_loss_numpy[0, 0])

dx_tensor = x_tensor.grad

print("out_numpy \n", out_numpy)

print("out_tensor \n", out_tensor.data.numpy())

print("dx_numpy \n", dx_numpy)

print("dx_tensor \n", dx_tensor.data.numpy())

#================================= 反向 ==================================================

5.实现Kmeans

算法步骤:

step1:创建k个点作为初始的质心点(随机选择)

step2:将所有点分配到k个簇中

step3:重新计算质心(簇的均值)

step4:再将所有点分配到新的质心

step5:重复上述步骤

算法实现:

from numpy import *

import time

import matplotlib.pyplot as plt

# calculate Euclidean distance

def euclDistance(vector1, vector2):

return sqrt(sum(power(vector2 - vector1, 2)))

# init centroids with random samples

def initCentroids(dataSet, k):

numSamples, dim = dataSet.shape

centroids = zeros((k, dim))

for i in range(k):

index = int(random.uniform(0, numSamples))

centroids[i, :] = dataSet[index, :]

return centroids

# k-means cluster

def kmeans(dataSet, k):

numSamples = dataSet.shape[0]

# first column stores which cluster this sample belongs to,

# second column stores the error between this sample and its centroid

clusterAssment = mat(zeros((numSamples, 2)))

clusterChanged = True

## step 1: init centroids

centroids = initCentroids(dataSet, k)

while clusterChanged:

clusterChanged = False

## for each sample

for i in xrange(numSamples):

minDist = 100000.0

minIndex = 0

## for each centroid

## step 2: find the centroid who is closest

for j in range(k):

distance = euclDistance(centroids[j, :], dataSet[i, :])

if distance < minDist:

minDist = distance

minIndex = j

## step 3: update its cluster

if clusterAssment[i, 0] != minIndex:

clusterChanged = True

clusterAssment[i, :] = minIndex, minDist**2

## step 4: update centroids

for j in range(k):

pointsInCluster = dataSet[nonzero(clusterAssment[:, 0].A == j)[0]]

centroids[j, :] = mean(pointsInCluster, axis = 0)

print 'Congratulations, cluster complete!'

return centroids, clusterAssment