Advanced Algorithms Day 3: Model Fusion with Stacking

- Task

- Stacking

- Code implementation

- Results

Task

Take your best-scoring model so far as the baseline, fuse it with other models via stacking, and report the final model and its scores.

Stacking

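I am new to Stacking myself. After reading through a lot of material, I found the following article to be fairly detailed, so I am linking it here: (https://blog.csdn.net/u011630575/article/details/81302994)

Stacking is one approach to model fusion. The parameters of mlxtend's StackingCVClassifier are described below: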

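classifiers : the base classifiers, passed as an array-like such as [cl1, cl2, cl3]. The fitted base classifiers are stored in the attribute self.clfs_.

meta_classifier : the meta-classifier, i.e. the second-level model that combines the outputs of the base classifiers.

use_probas : bool (default: False). If True, the meta-classifier is trained on the class probabilities predicted by the base classifiers instead of their predicted class labels.

average_probas : bool (default: False). Only relevant when use_probas=True. If True, the probabilities output by the base classifiers are averaged; otherwise they are concatenated. Note that this parameter is documented for mlxtend's StackingClassifier; StackingCVClassifier does not appear to accept it, so check the signature of your installed version before passing it.

verbose : int, optional (default=0). Controls how much is logged during fitting. verbose = 0 prints nothing; verbose = 1 prints the index and name of the classifier being fitted; verbose = 2 additionally prints its detailed parameters; for verbose > 2, the verbose parameter of the underlying base estimators is set to verbose - 2.

use_features_in_secondary : bool (default: False). If True, the meta-classifier is trained on both the predictions of the base classifiers and the original features. If False, it is trained only on the predictions of the base classifiers.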
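To make use_probas and use_features_in_secondary more concrete, here is a minimal sketch that builds the meta-classifier's training matrix by hand from out-of-fold predictions. It is not mlxtend's implementation: the synthetic dataset, the two base models, and the helper name build_meta_features are all assumptions chosen for illustration, and only scikit-learn is used.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary data standing in for the real dataset (an assumption).
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

base_models = [
    DecisionTreeClassifier(random_state=0),
    RandomForestClassifier(n_estimators=20, random_state=0),
]


def build_meta_features(models, X, y, use_probas, use_features_in_secondary, cv=3):
    """Hypothetical helper: stack out-of-fold base-model outputs column-wise."""
    columns = []
    for model in models:
        if use_probas:
            # One column per class: out-of-fold class probabilities.
            out = cross_val_predict(model, X, y, cv=cv, method="predict_proba")
        else:
            # One column per model: out-of-fold predicted labels.
            out = cross_val_predict(model, X, y, cv=cv).reshape(-1, 1)
        columns.append(out)
    meta = np.hstack(columns)
    if use_features_in_secondary:
        # Keep the original features next to the base-model outputs.
        meta = np.hstack([X, meta])
    return meta


meta_labels = build_meta_features(base_models, X, y, False, False)
meta_probas = build_meta_features(base_models, X, y, True, False)
meta_full = build_meta_features(base_models, X, y, True, True)

print(meta_labels.shape)  # (300, 2): one label column per base model
print(meta_probas.shape)  # (300, 4): two probability columns per base model
print(meta_full.shape)    # (300, 12): 8 original features + 4 probabilities

# The meta-classifier is then trained on the stacked matrix.
meta_clf = LogisticRegression(max_iter=1000).fit(meta_probas, y)
```

Because every row's prediction comes from a model that never saw that row during training, the meta-classifier is less likely to simply learn to trust an overfitted base model.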

代码实现

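from mlxtend.classifier import StackingCVClassifier

from sklearn import metrics, tree

from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

from sklearn.linear_model import LogisticRegression

# X_train, X_test, y_train, y_test and get_scores are not defined in this post; they are assumed to come from earlier code

# Three base classifiers: DecisionTreeClassifier, GradientBoostingClassifier, RandomForestClassifier

# Meta-classifier: LogisticRegression

# Use 3-fold cross-validation

basemodel1 = tree.DecisionTreeClassifier()

basemodel2 = GradientBoostingClassifier()

basemodel3 = RandomForestClassifier()

lr = LogisticRegression()

sclf = StackingCVClassifier(classifiers=[basemodel1, basemodel2, basemodel3], meta_classifier=lr, use_probas=True, verbose=2, cv=3)

sclf.fit(X_train, y_train)

# Predicted class labels on the test set

sclf_predict = sclf.predict(X_test)

# Predicted probability of the positive class (second column)

sclf_predict_proba = sclf.predict_proba(X_test)[:, 1]

get_scores(y_test, sclf_predict, sclf_predict_proba)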
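The helper get_scores is called above but not defined in this post. Judging from the metrics printed in the results, it probably reports accuracy, precision, recall, F1 and AUC. The version below is a hypothetical reconstruction under that assumption, using standard sklearn.metrics functions; the original may differ, and the plot that appears in the results is omitted here.

```python
from sklearn import metrics


def get_scores(y_true, y_pred, y_proba):
    """Hypothetical helper: print and return common binary-classification metrics."""
    scores = {
        "accuracy": metrics.accuracy_score(y_true, y_pred),
        "precision": metrics.precision_score(y_true, y_pred),
        "recall": metrics.recall_score(y_true, y_pred),
        "f1": metrics.f1_score(y_true, y_pred),
        # AUC needs scores or probabilities, not hard labels.
        "auc": metrics.roc_auc_score(y_true, y_proba),
    }
    for name, value in scores.items():
        print(f"{name}: {value}")
    return scores


# Tiny hand-made example (not the data used in this post).
y_true = [0, 0, 1, 1]
y_pred = [0, 1, 1, 1]
y_proba = [0.1, 0.6, 0.7, 0.9]
scores = get_scores(y_true, y_pred, y_proba)
```

Passing the positive-class probability separately, as the call above does, matters because AUC is computed from a ranking of scores, while the other four metrics are computed from hard labels.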

Results

['trans_amount_increase_rate_lately', 'trans_activity_day', 'repayment_capability', 'first_transaction_time', 'historical_trans_day', 'rank_trad_1_month', 'trans_amount_3_month', 'abs', 'avg_price_last_12_month', 'trans_fail_top_count_enum_last_1_month', 'trans_fail_top_count_enum_last_6_month', 'trans_fail_top_count_enum_last_12_month', 'max_cumulative_consume_later_1_month', 'pawns_auctions_trusts_consume_last_1_month', 'pawns_auctions_trusts_consume_last_6_month', 'first_transaction_day', 'trans_day_last_12_month', 'apply_score', 'loans_score', 'loans_count', 'loans_overdue_count', 'history_suc_fee', 'history_fail_fee', 'latest_one_month_suc', 'latest_one_month_fail', 'loans_long_time', 'loans_avg_limit', 'consfin_credit_limit', 'consfin_max_limit', 'consfin_avg_limit', 'latest_query_day', 'loans_latest_day']

['trans_fail_top_count_enum_last_1_month', 'history_fail_fee', 'apply_score', 'loans_score', 'latest_one_month_fail', 'max_cumulative_consume_later_1_month', 'trans_fail_top_count_enum_last_12_month', 'trans_amount_3_month', 'Unnamed: 0', 'trans_day_last_12_month', 'abs', 'pawns_auctions_trusts_consume_last_6_month', 'repayment_capability', 'first_transaction_time', 'avg_price_last_12_month', 'first_transaction_day', 'loans_overdue_count', 'latest_query_day', 'loans_latest_day', 'apply_credibility']

(4754, 34)

Fitting 3 classifiers...

Fitting classifier1: decisiontreeclassifier (1/3)

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False, random_state=None,

splitter='best')

Training and fitting fold 1 of 3...

Training and fitting fold 2 of 3...

Training and fitting fold 3 of 3...

Fitting classifier2: gradientboostingclassifier (2/3)

GradientBoostingClassifier(criterion='friedman_mse', init=None,

learning_rate=0.1, loss='deviance', max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100,

presort='auto', random_state=None, subsample=1.0, verbose=0,

warm_start=False)

Training and fitting fold 1 of 3...

Training and fitting fold 2 of 3...

Training and fitting fold 3 of 3...

Fitting classifier3: randomforestclassifier (3/3)

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=10, n_jobs=1,

oob_score=False, random_state=None, verbose=0,

warm_start=False)

Training and fitting fold 1 of 3...

Training and fitting fold 2 of 3...

Training and fitting fold 3 of 3...

Accuracy: 0.7904695164681149

Precision: 0.647887323943662

Recall: 0.3812154696132597

F1-score: 0.48

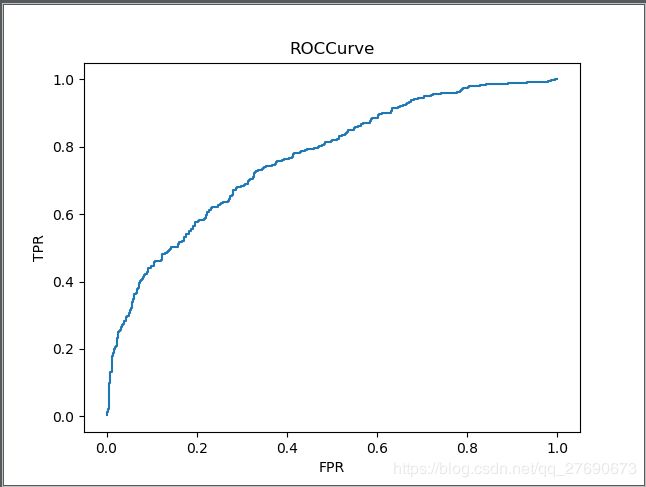

AUC: 0.7662983425414365
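As a quick sanity check, the reported F1-score can be recomputed from the reported precision and recall, since F1 is their harmonic mean. The snippet uses only the two numbers printed above.

```python
# Precision and recall exactly as reported in the results above.
precision = 0.647887323943662
recall = 0.3812154696132597

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)
print(round(f1, 2))  # 0.48
```

The low recall is what pulls F1 well below the accuracy: the stacked model misses most positive samples even though the majority of its positive predictions are correct.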