The Camera System in Android


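I. Introduction to the camera in Android

1. Camera

1.1. Getting to know the camera

- The camera module, in full Compact Camera Module (CCM from here on), is the electronic component at the heart of image capture.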

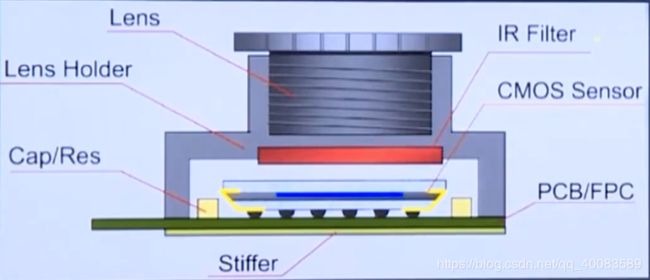

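1.2. Camera hardware components

- Components of the CCM

1.3. How the camera works

- Working principle: light from the scene is gathered by the lens and falls on a CMOS or CCD integrated circuit, which converts the optical signal into an electrical signal. The internal image signal processor (ISP) turns this into a digital image signal and outputs it to a digital signal processor (DSP), which processes it into image signals in standard formats such as RGB and YUV.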

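1.4. Camera image formats

- RGB format:

- With this encoding, every color is expressed by three variables giving the intensities of red, green, and blue. Each pixel is made up of the three primaries R (red), G (green), and B (blue).

- YUV format:

- "Y" is the luminance (luma), that is, the grayscale value. "U" and "V" are the chrominance (chroma); they describe the hue and saturation of the image and specify the color of a pixel.

- RAW DATA format:

- The raw record of the signal levels produced when the CCD or CMOS sensor converts light into an electrical signal: the data obtained by simply digitizing the electrical signal that comes straight from the sensing element, with no image processing applied.

- YCbCr format:

- In YCbCr, Y is the luma component, Cb is the blue-difference chroma component, and Cr is the red-difference chroma component. The human eye is more sensitive to the Y component of video, so when the chroma components are reduced by subsampling, the eye barely notices the change in image quality. The main subsampling formats are YCbCr 4:2:0, YCbCr 4:2:2, and YCbCr 4:4:4.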
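To make the effect of chroma subsampling concrete, the following minimal sketch computes the size of one uncompressed frame under each of the three schemes. It assumes 8-bit samples and that chroma planes are rounded up for odd dimensions; the function name `ycbcr_frame_bytes` and the 640x480 frame size are illustrative choices, not something taken from a particular driver.

```python
import math

# (horizontal divisor, vertical divisor) applied to each chroma plane.
CHROMA_DIVISORS = {
    "4:4:4": (1, 1),  # chroma kept at full resolution
    "4:2:2": (2, 1),  # chroma halved horizontally
    "4:2:0": (2, 2),  # chroma halved horizontally and vertically
}


def ycbcr_frame_bytes(width, height, scheme, bytes_per_sample=1):
    """Return the size in bytes of one uncompressed YCbCr frame.

    Assumption: one full-resolution Y plane plus two chroma planes
    (Cb and Cr) whose dimensions are rounded up after subsampling.
    """
    h_div, v_div = CHROMA_DIVISORS[scheme]
    luma_samples = width * height
    chroma_w = math.ceil(width / h_div)
    chroma_h = math.ceil(height / v_div)
    chroma_samples = 2 * chroma_w * chroma_h  # Cb plane + Cr plane
    return (luma_samples + chroma_samples) * bytes_per_sample


if __name__ == "__main__":
    for scheme in CHROMA_DIVISORS:
        print(scheme, ycbcr_frame_bytes(640, 480, scheme))
```

Under these assumptions a 4:2:0 frame needs half the bytes of a 4:4:4 frame, while the luma plane, the part the eye is most sensitive to, keeps its full resolution.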

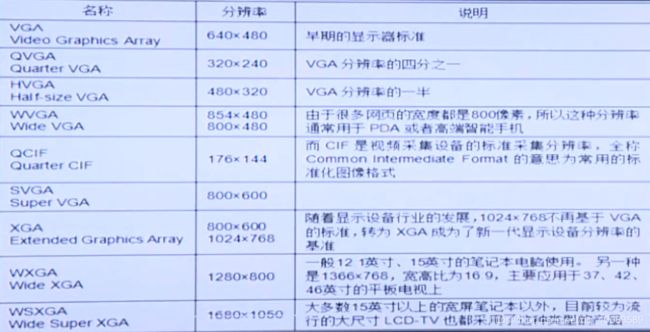
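The relationship between an RGB pixel and its Y, Cb, and Cr components can be illustrated with a short conversion routine. This is only a sketch: it assumes 8-bit samples and the full-range BT.601 coefficients used by JFIF, which is one common convention; the text above does not say which matrix a given sensor or ISP applies, and the function name `rgb_to_ycbcr` is hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to 8-bit Y, Cb, Cr.

    Assumption: full-range BT.601 (JFIF) coefficients. Real camera
    pipelines may use a different matrix or a limited value range.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    # Round to the nearest integer and clamp into the 8-bit range.
    return tuple(max(0, min(255, round(v))) for v in (y, cb, cr))


if __name__ == "__main__":
    for name, pixel in [("white", (255, 255, 255)),
                        ("black", (0, 0, 0)),
                        ("red", (255, 0, 0))]:
        print(name, rgb_to_ycbcr(*pixel))
```

Gray pixels such as white and black map to the neutral chroma value 128 for both Cb and Cr, which shows that the Y component alone carries the grayscale image.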

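1.5. Camera resolution

- Resolution is the number of pixels displayed. Common resolutions are as follows:

1.6. Camera transfer rate

- Data captured by the sensor is generally processed by a dedicated chip, and the processed data is a video stream, for which many formats exist: MPEG (Moving Picture Experts Group), AVI (Audio Video Interleave), MOV (the QuickTime movie format), ASF (Advanced Streaming Format), WMV (Windows Media Video), 3GP (a video coding format for 3G streaming media), FLV (Flash Video), RM and RMVB, and so on.

- The speed at which the video stream is delivered is the transfer rate. This parameter mainly affects burst shooting and video recording. In general, the higher the transfer rate, the smoother the video. Common rates are 15 fps, 30 fps, 60 fps, and 120 fps.

- The transfer rate depends on the image resolution: the lower the resolution, the higher the achievable rate. For example, a camera that reaches 30 fps at CIF (352x288) manages only about 10 fps at VGA (640x480). The transfer rate is therefore chosen with the corresponding resolution in mind. For typical phone use, 30 fps is smooth enough.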
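The CIF versus VGA figures above are what one gets if the camera is assumed to deliver a fixed number of pixels per second. The sketch below works through that arithmetic; the constant-throughput model and the function name `max_fps` are simplifying assumptions for illustration, since real devices are also limited by the bus, the ISP, and the encoder.

```python
def max_fps(reference_resolution, reference_fps, target_resolution):
    """Estimate the frame rate at another resolution.

    Assumption: the pipeline moves a constant number of pixels per
    second, so the frame rate scales inversely with the pixel count.
    """
    ref_w, ref_h = reference_resolution
    tgt_w, tgt_h = target_resolution
    pixels_per_second = ref_w * ref_h * reference_fps
    return pixels_per_second / (tgt_w * tgt_h)


CIF = (352, 288)
VGA = (640, 480)

if __name__ == "__main__":
    print(f"VGA estimate: {max_fps(CIF, 30, VGA):.1f} fps")
```

With 30 fps at CIF as the reference, the estimate for VGA comes out at 9.9 fps, in line with the "about 10 fps" quoted above.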

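2. V4L2

2.1. The V4L2 framework

- V4L2 stands for Video for Linux Two. It is the Linux kernel's API for video devices; it covers opening and closing video devices as well as capturing and processing the audio and video data these devices provide.

- Several V4L2 documents are required reading: v4l2-framework.txt and videobuf under the Documentation/video4linux directory, the official V4L2 API Specification, and vivi.c under the drivers/media/video directory (a virtual video driver; this code emulates a real video device with the V4L2 API).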

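2.2. V4L2 interfaces

V4L2 supports many kinds of devices and can expose the following interfaces:

- Video capture interface: devices used this way can be tuners or cameras. V4L2 was originally designed for this function.

- Video output interface: drives a computer's peripheral video devices, such as devices that can output a television signal.

- Video overlay interface: its main job is to send the signal captured by a video capture device directly to an output device, without passing through the system's CPU.

- VBI (vertical blanking interval) interface: lets applications access the data transmitted during the blanking interval of the video signal.

- Radio interface: handles the audio stream received from AM or FM tuner devices.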
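A driver reports which of these interfaces it supports as capability bits returned by the VIDIOC_QUERYCAP ioctl. The following minimal sketch decodes those bits from user space. The flag values and the 104-byte layout of struct v4l2_capability come from linux/videodev2.h. The ioctl number is computed with the encoding used on x86 and ARM; some other architectures encode the direction bits differently, so treat that part as an assumption. The helper names `decode_capabilities` and `query_device` and the default path /dev/video0 are illustrative.

```python
import fcntl
import os
import struct

# struct v4l2_capability: driver[16], card[32], bus_info[32],
# version, capabilities, device_caps, reserved[3] (all __u32).
CAP_FORMAT = "=16s32s32sIII3I"

# _IOR('V', 0, struct v4l2_capability) with the x86/ARM ioctl encoding.
VIDIOC_QUERYCAP = (2 << 30) | (struct.calcsize(CAP_FORMAT) << 16) | (ord("V") << 8) | 0

# Capability bits matching the interface types listed above.
INTERFACE_FLAGS = {
    "video capture": 0x00000001,  # V4L2_CAP_VIDEO_CAPTURE
    "video output": 0x00000002,   # V4L2_CAP_VIDEO_OUTPUT
    "video overlay": 0x00000004,  # V4L2_CAP_VIDEO_OVERLAY
    "VBI capture": 0x00000010,    # V4L2_CAP_VBI_CAPTURE
    "radio": 0x00040000,          # V4L2_CAP_RADIO
}


def decode_capabilities(caps):
    """Return the names of the interfaces whose bit is set in caps."""
    return [name for name, bit in INTERFACE_FLAGS.items() if caps & bit]


def query_device(path="/dev/video0"):
    """Ask a V4L2 device for its driver name, card name and interfaces."""
    fd = os.open(path, os.O_RDWR)
    try:
        buf = bytearray(struct.calcsize(CAP_FORMAT))
        fcntl.ioctl(fd, VIDIOC_QUERYCAP, buf)  # kernel fills buf in place
    finally:
        os.close(fd)
    driver, card, _bus, _version, caps, *_rest = struct.unpack(CAP_FORMAT, buf)
    return (driver.rstrip(b"\0").decode(),
            card.rstrip(b"\0").decode(),
            decode_capabilities(caps))
```

On a machine with a V4L2 device node, calling `query_device()` returns the driver name, the card name, and the list of supported interfaces; `decode_capabilities` can also be used on its own with a capabilities value obtained elsewhere.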

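II. Analysis of the virtual camera driver

1. The virtual camera driver vivi.c

- Compile /drivers/media/video/vivi.c to produce vivi.ko.

- Load the driver with modprobe vivi. (modprobe also loads the modules that vivi.ko depends on.)

- Test the virtual video0 device with the xawtv tool.

2. vivi source code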

    /*
     * Virtual Video driver - This code emulates a real video device with v4l2 api
     *
     * Copyright (c) 2006 by:
     *      Mauro Carvalho Chehab
     *      Ted Walther
     *      John Sokol
     *      http://v4l.videotechnology.com/
     *
     * Conversion to videobuf2 by Pawel Osciak & Marek Szyprowski
     * Copyright (c) 2010 Samsung Electronics
     *
     * This program is free software; you can redistribute it and/or modify
     * it under the terms of the BSD Licence, GNU General Public License
     * as published by the Free Software Foundation; either version 2 of the
     * License, or (at your option) any later version
     */

    #include

III. Writing a camera application

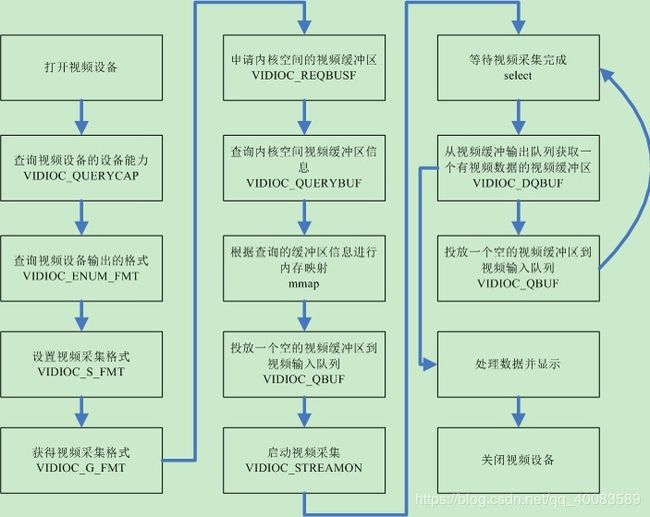

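1. Running the program and the development workflow

2. Application source code

    #include

IV. Camera service registration, service acquisition, and the HAL-layer implementation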

1./frameworks/av/camera/cameraserver/main_cameraserver.cpp

    /*
     * Copyright (C) 2015 The Android Open Source Project
     *
     * Licensed under the Apache License, Version 2.0 (the "License");
     * you may not use this file except in compliance with the License.
     * You may obtain a copy of the License at
     *
     *      http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    #define LOG_TAG "cameraserver"
    //#define LOG_NDEBUG 0

    #include "CameraService.h"
    #include

2./frameworks/base/core/java/android/hardware/Camera.java

    public static Camera open() {
        int numberOfCameras = getNumberOfCameras();
        CameraInfo cameraInfo = new CameraInfo();
        // Return the first back-facing camera found.
        for (int i = 0; i < numberOfCameras; i++) {
            getCameraInfo(i, cameraInfo);
            if (cameraInfo.facing == CameraInfo.CAMERA_FACING_BACK) {
                return new Camera(i);
            }
        }
        // The device has no back-facing camera.
        return null;
    }

Camera.java source code

3./frameworks/base/core/jni/android_hardware_Camera.cpp

    /*
    **
    ** Copyright 2008, The Android Open Source Project
    **
    ** Licensed under the Apache License, Version 2.0 (the "License");
    ** you may not use this file except in compliance with the License.
    ** You may obtain a copy of the License at
    **
    **     http://www.apache.org/licenses/LICENSE-2.0
    **
    ** Unless required by applicable law or agreed to in writing, software
    ** distributed under the License is distributed on an "AS IS" BASIS,
    ** WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    ** See the License for the specific language governing permissions and
    ** limitations under the License.
    */

    //#define LOG_NDEBUG 0
    #define LOG_TAG "Camera-JNI"

    #include

                "<init>", "()V");

        clazz = FindClassOrDie(env, "android/hardware/Camera$Face");
        fields.face_constructor = GetMethodIDOrDie(env, clazz, "<init>", "()V");

        // Point is looked up with the raw JNI calls, so the result is checked by hand.
        clazz = env->FindClass("android/graphics/Point");
        fields.point_constructor = env->GetMethodID(clazz, "<init>", "()V");
        if (fields.point_constructor == NULL) {
            ALOGE("Can't find android/graphics/Point()");
            return -1;
        }

        // Register native functions
        return RegisterMethodsOrDie(env, "android/hardware/Camera", camMethods, NELEM(camMethods));
    }

4./hardware/libhardware/modules/camera/CameraHAL.cpp

    /*
     * Copyright (C) 2012 The Android Open Source Project
     *
     * Licensed under the Apache License, Version 2.0 (the "License");
     * you may not use this file except in compliance with the License.
     * You may obtain a copy of the License at
     *
     *      http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    #include