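1. Modify the configuration in hive-site.xml and in Hadoop's core-site.xml to relax the access restrictions (see the note "Hive Notes: Deploying the Hive Environment").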

2. Start Hadoop

```shell
sh /usr/app/hadoop/sbin/start-all.sh
```

4. Start the hiveserver2 service

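```shell
# Runs in the foreground: keep this terminal open and use another one for the next command
hive --service hiveserver2

# Check that HiveServer2 is listening on port 10000
netstat -ant | grep 10000
```

5. Connect with beeline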
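Before connecting with beeline (or from Java in step 6), it can help to confirm that port 10000 is reachable from the client machine: netstat on the server only shows that HiveServer2 is listening, not whether a firewall blocks remote access. A minimal sketch using plain `java.net.Socket`; the class and method names are hypothetical, and the host and port are the ones used in this note.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    // Hypothetical helper: returns true if a TCP connection to host:port
    // can be opened within timeoutMillis, false otherwise.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable
            return false;
        }
    }

    public static void main(String[] args) {
        // Address and default HiveServer2 port from this note; adjust as needed
        boolean ok = isReachable("192.168.66.66", 10000, 3000);
        System.out.println(ok ? "port 10000 reachable" : "port 10000 not reachable");
    }
}
```

If this reports "not reachable" while netstat on the server shows the port listening, check the server firewall before looking at Hive itself.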

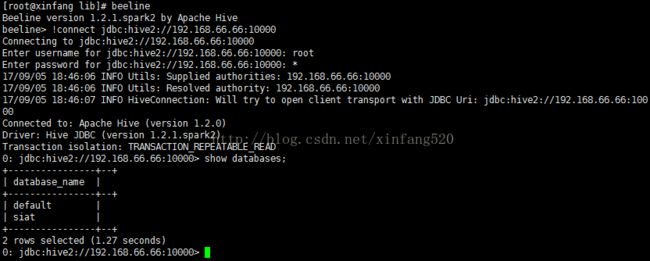

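```shell
beeline
beeline> !connect jdbc:hive2://192.168.66.66:10000
```

Enter a username and password when prompted. The Linux login user (root also works) is fine, as long as it has permission to operate on the Hadoop and Hive directories.

Once the connection succeeds, you can work with Hive data.

6. Java remote connection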
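Both beeline and the Java client use a HiveServer2 JDBC URL of the form `jdbc:hive2://<host>:<port>/<database>`; the beeline `!connect` above omits the database, so Hive uses `default`. A small hypothetical helper that assembles the URL, so the host, port and database are not hard-coded in several places:

```java
public class HiveUrl {

    // Hypothetical helper: builds jdbc:hive2://<host>:<port>/<database>.
    // A null or empty database yields jdbc:hive2://<host>:<port>,
    // the same form as the beeline example.
    static String hiveUrl(String host, int port, String database) {
        String base = "jdbc:hive2://" + host + ":" + port;
        if (database == null || database.isEmpty()) {
            return base;
        }
        return base + "/" + database;
    }

    public static void main(String[] args) {
        // Values used in this note; prints jdbc:hive2://192.168.66.66:10000/siat
        System.out.println(hiveUrl("192.168.66.66", 10000, "siat"));
    }
}
```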

6.1 Required jars (the hive-xxx.jar files must match the Hive version on the server)
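Because the client jars must match the server's Hive version, a quick check can show whether the Hive JDBC driver class is on the classpath and which version its jar declares. A minimal sketch, assuming the version can be read from the jar manifest's `Implementation-Version` entry, which may be missing (then `null` is printed); the class name `DriverCheck` is hypothetical, while `org.apache.hive.jdbc.HiveDriver` is the driver class used in the client code of this note.

```java
public class DriverCheck {

    // Returns the loaded class, or null if it is not on the classpath
    static Class<?> findClass(String className) {
        try {
            return Class.forName(className);
        } catch (ClassNotFoundException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        String driver = "org.apache.hive.jdbc.HiveDriver";
        Class<?> cls = findClass(driver);
        if (cls == null) {
            System.out.println(driver + " not found: add the Hive JDBC jars to the classpath");
            return;
        }
        Package pkg = cls.getPackage();
        // Implementation-Version from the jar manifest; may be null if the manifest lacks it
        String version = (pkg == null) ? null : pkg.getImplementationVersion();
        System.out.println(driver + " found, jar version: " + version);
    }
}
```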

6.2 Create log4j.properties under src
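The properties file in this step relies on `${...}` placeholders. In log4j 1.x, a placeholder such as `${inceptor.log.dir}` is looked up first among JVM system properties and only then among keys defined in the same file, so on a client machine where `/usr/app/hive/logs` does not exist the directory can be overridden with `-Dinceptor.log.dir=...`. A simplified sketch of that lookup order (a hypothetical helper, not log4j's own code; it does not expand placeholders recursively):

```java
import java.util.Properties;

public class PlaceholderDemo {

    // Simplified ${key} expansion: system properties win over keys in props;
    // unknown keys become an empty string here.
    static String expand(String value, Properties props) {
        StringBuilder out = new StringBuilder();
        int i = 0;
        while (true) {
            int start = value.indexOf("${", i);
            if (start < 0) {
                out.append(value.substring(i));
                return out.toString();
            }
            int end = value.indexOf('}', start);
            if (end < 0) {
                // No closing brace: keep the rest unchanged (simplification)
                out.append(value.substring(i));
                return out.toString();
            }
            out.append(value, i, start);
            String key = value.substring(start + 2, end);
            String replacement = System.getProperty(key);
            if (replacement == null) {
                replacement = props.getProperty(key, "");
            }
            out.append(replacement);
            i = end + 1;
        }
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("inceptor.log.dir", "/usr/app/hive/logs");
        props.setProperty("inceptor.log.file", "spark.log");
        // Prints /usr/app/hive/logs/spark.log unless -Dinceptor.log.dir or
        // -Dinceptor.log.file is set on the command line
        System.out.println(expand("${inceptor.log.dir}/${inceptor.log.file}", props));
    }
}
```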

-------------------------------------------------------

inceptor.root.logger=INFO,RFA

inceptor.log.dir=/usr/app/hive/logs

inceptor.log.file=spark.log

# Define the root logger to the system property "hadoop.root.logger".

log4j.rootLogger=${inceptor.root.logger}

# Set everything to be logged to the console

log4j.appender.console=org.apache.log4j.ConsoleAppender

log4j.appender.console.target=System.err

log4j.appender.console.layout=org.apache.log4j.PatternLayout

log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c: %m%n

# output to file

log4j.appender.RFA=org.apache.log4j.RollingFileAppender

log4j.appender.RFA.File=${inceptor.log.dir}/${inceptor.log.file}

# The MaxFileSize can be 512KB

log4j.appender.RFA.MaxFileSize=10MB

# Keep three backup files.

log4j.appender.RFA.MaxBackupIndex=1024

# Pattern to output: date priority [category] - message

log4j.appender.RFA.layout=org.apache.log4j.PatternLayout

log4j.appender.RFA.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c: %m%n

# Ignore messages below warning level from Jetty, because it's a bit verbose

log4j.logger.org.eclipse.jetty=WARNpackage hive;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class ToHive {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public boolean run() {

try {

Class.forName(driverName);

Connection con = null;

//端口号默认为10000,根据实际情况修改;

//用户名:root,密码:1(登录linux系统)

con = DriverManager.getConnection(

"jdbc:hive2://192.168.66.66:10000/siat", "root", "1");

Statement stmt = con.createStatement();

ResultSet res = null;

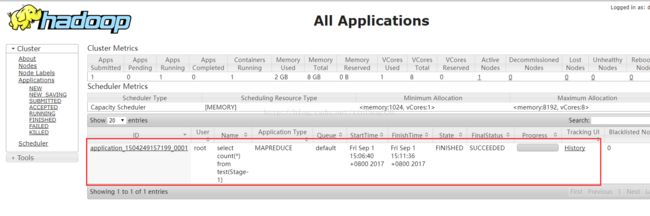

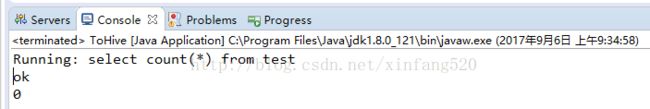

String sql = "select count(*) from test";

System.out.println("Running: " + sql);

res = stmt.executeQuery(sql);

System.out.println("ok");

while (res.next()) {

System.out.println(res.getString(1));

}

return true;

} catch (Exception e) {

e.printStackTrace();

System.out.println("error");

return false;

}

}

public static void main(String[] args) throws SQLException {

ToHive hiveJdbcClient = new ToHive();

hiveJdbcClient.run();

}

}