- Python爬虫解析工具之xpath使用详解

eqa11

python爬虫开发语言

文章目录Python爬虫解析工具之xpath使用详解一、引言二、环境准备1、插件安装2、依赖库安装三、xpath语法详解1、路径表达式2、通配符3、谓语4、常用函数四、xpath在Python代码中的使用1、文档树的创建2、使用xpath表达式3、获取元素内容和属性五、总结Python爬虫解析工具之xpath使用详解一、引言在Python爬虫开发中,数据提取是一个至关重要的环节。xpath作为一门

- python爬取微信小程序数据,python爬取小程序数据

2301_81900439

前端

大家好,小编来为大家解答以下问题,python爬取微信小程序数据,python爬取小程序数据,现在让我们一起来看看吧!Python爬虫系列之微信小程序实战基于Scrapy爬虫框架实现对微信小程序数据的爬取首先,你得需要安装抓包工具,这里推荐使用Charles,至于怎么使用后期有时间我会出一个事例最重要的步骤之一就是分析接口,理清楚每一个接口功能,然后连接起来形成接口串思路,再通过Spider的回调

- 【Python爬虫】百度百科词条内容

PokiFighting

数据处理python爬虫开发语言

词条内容我这里随便选取了一个链接,用的是FBI的词条importurllib.requestimporturllib.parsefromlxmlimportetreedefquery(url):headers={'user-agent':'Mozilla/5.0(WindowsNT6.1;Win64;x64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/80.

- Python爬虫代理池

极客李华

python授课python爬虫开发语言

Python爬虫代理池网络爬虫在数据采集和信息抓取方面起到了关键作用。然而,为了应对网站的反爬虫机制和保护爬虫的真实身份,使用代理池变得至关重要。1.代理池的基本概念:代理池是一组包含多个代理IP地址的集合。通过在爬虫中使用代理池,我们能够隐藏爬虫的真实IP地址,实现一定程度的匿名性。这有助于防止被目标网站封锁或限制访问频率。2.为何使用代理池:匿名性:代理池允许爬虫在请求目标网站时使用不同的IP

- 10个高效的Python爬虫框架,你用过几个?

进击的C语言

python

小型爬虫需求,requests库+bs4库就能解决;大型爬虫数据,尤其涉及异步抓取、内容管理及后续扩展等功能时,就需要用到爬虫框架了。下面介绍了10个爬虫框架,大家可以学习使用!1.Scrapyscrapy官网:https://scrapy.org/scrapy中文文档:https://www.osgeo.cn/scrapy/intro/oScrapy是一个为了爬取网站数据,提取结构性数据而编写的

- python爬虫(5)之CSDN

It is a deal️

小项目pythonjson爬虫

CSDN的爬虫相对于doubatop250更加简单,一般只需要title和url即可下面是相关的代码:#爬虫之csdn#分析urlhttps://www.csdn.net/api/articles?type=more&category=python&shown_offset=0(firstpage)#https://www.csdn.net/api/articles?type=more&categ

- Python——爬虫

星和月

python

当编写一个Python爬虫时,你可以使用BeautifulSoup库来解析网页内容,使用requests库来获取网页的HTML代码。下面是一个简单的示例,演示了如何获取并解析网页内容:importrequestsfrombs4importBeautifulSoup#发送HTTP请求获取网页内容url='https://www.example.com'#要爬取的网页的URLresponse=requ

- 基于Python爬虫四川成都二手房数据可视化系统设计与实现(Django框架) 研究背景与意义、国内外研究现状_django商品房数据分析论文(1)

莫莫Android开发

信息可视化python爬虫

3.国外研究现状在国外,二手房数据可视化也是一个热门的研究领域。以美国为例,有很多公司和网站提供了专门的二手房数据可视化工具,如Zillow、Redfin等。这些工具通常提供房价趋势图、房价分布图、房源信息等功能,帮助用户更好地了解房市动态。综上所述,虽然国内外在二手房数据可视化方面已经有了一些研究成果,但对于四川成都地区的二手房市场还没有相关的研究和可视化系统。因此,本研究旨在设计并实现一个基于

- python requests下载网页_python爬虫 requests-html的使用

weixin_39600319

pythonrequests下载网页

一介绍Python上有一个非常著名的HTTP库——requests,相信大家都听说过,用过的人都说非常爽!现在requests库的作者又发布了一个新库,叫做requests-html,看名字也能猜出来,这是一个解析HTML的库,具备requests的功能以外,还新增了一些更加强大的功能,用起来比requests更爽!接下来我们来介绍一下它吧。#官网解释'''Thislibraryintendsto

- 解决“Python中 pip不是内部或外部命令,也不是可运行的程序或批处理文件”的方法。

གཡུ །

Python常规问题pythonpip机器学习自然语言处理

解决‘Python中pip不是内部或外部命令,也不是可运行的程序或批处理文件。’的方法1、pip是什么?pip是一个以Python计算机程序语言写成的软件包管理系统,他可以安装和管理软件包,另外不少的软件包也可以在“Python软件包索引”中找到。它可以通过cmd(命令提示符)非常方便地下载和管理Python第三方库,比如,Python爬虫中常见的requests库等。但是我们在使用cmd运行pi

- python爬虫的urlib知识梳理

卑微小鹿

爬虫

1:urlib.request.urlopen发送请求getpost网络超时timeout=0.1网络请求模拟一个浏览器所发送的网络请求创建requestrequest头信息➕host/IP➕验证➕请求方式cookice客户返回响应数据所留下来的标记代理ipUrlib.request.proxyhander字典类型异常处理codereasonhearders拆分URLurlpaseurlsplit

- Python爬虫入门实战:抓取CSDN博客文章

A Bug's Code Journey

爬虫python

一、前言在大数据时代,网络上充斥着海量的信息,而爬虫技术就是解锁这些信息宝库的钥匙。Python,以其简洁易读的语法和强大的库支持,成为编写爬虫的首选语言。本篇博客将从零开始,带你一步步构建一个简单的Python爬虫,抓取CSDN博客的文章标题和链接。二、环境准备在开始之前,确保你的环境中安装了Python和以下必要的库:1.requests:用于发送HTTP请求2.BeautifulSoup:用

- Python爬虫——Selenium方法爬取LOL页面

张小生180

python爬虫selenium

文章目录Selenium介绍用Selenium方法爬取LOL每个英雄的图片及名字Selenium介绍Selenium是一个用于自动化Web应用程序测试的工具,但它同样可以被用来进行网页数据的抓取(爬虫)。Selenium通过模拟用户在浏览器中的操作(如点击、输入、滚动等)来与网页交互,并可以捕获网页的渲染结果,这对于需要JavaScript渲染的网页特别有用。安装Selenium首先,你需要安装S

- Python爬虫如何搞定动态Cookie?小白也能学会!

图灵学者

python精华python爬虫github

目录1、动态Cookie基础1.1Cookie与Session的区别1.2动态Cookie生成原理2、requests.Session方法2.1Session对象保持2.2处理登录与Cookie刷新2.3长连接与状态保持策略3、Selenium结合ChromeDriver实战3.1安装配置Selenium3.2动态抓取&处理Cookie4、requests-Session结合Selenium技巧4

- Python爬虫基础知识

板栗妖怪

python爬虫开发语言

(未完成)爬虫概念爬虫用于爬取数据,又称之为数据采集程序爬取数据来源于网络,网络中数据可以是有web服务器、数据库服务器、索引库、大数据等等提供爬取数据是公开的、非盈利。python爬虫使用python编写的爬虫脚本可以完成定时、定量、指定目标的数据爬取。主要使用多(单)线程/进程、网络请求库、数据解析、数据储存、任务调度等相关技术。爬虫和web后端服务关系爬虫使用网络请求库,相当于客户端请求,w

- python爬虫处理滑块验证_python selenium爬虫滑块验证

用户6731453637

python爬虫处理滑块验证

importrandomimporttimefromPILimportImagefromioimportBytesIOimportrequestsasrqfrombs4importBeautifulSoupasbsfromseleniumimportwebdriverfromselenium.webdriverimportActionChainsfromselenium.webdriverimpo

- 如何用python爬取股票数据选股_用python爬取股票数据

weixin_39752087

获取数据是数据分析中必不可少的一部分,而网络爬虫是是获取数据的一个重要渠道之一。鉴于此,我拾起了Python这把利器,开启了网络爬虫之路。本篇使用的版本为python3.5,意在抓取证券之星上当天所有A股数据。程序主要分为三个部分:网页源码的获取、所需内容的提取、所得结果的整理。一、网页源码的获取很多人喜欢用python爬虫的原因之一就是它容易上手。只需以下几行代码既可抓取大部分网页的源码。imp

- Python爬虫基础总结

醉蕤

Pythonpython爬虫

活动地址:CSDN21天学习挑战赛学习的最大理由是想摆脱平庸,早一天就多一份人生的精彩;迟一天就多一天平庸的困扰。学习日记目录学习日记一、关于爬虫1、爬虫的概念2、爬虫的优点3、爬虫的分类4、重要提醒5、反爬和反反爬机制6、协议7、常用请求头和常用的请求方法8、常见的响应状态码9、url的详解二、爬虫基本流程三、可能需要的库四、小例1、requests请求网页2、python解析网页源码(使用Be

- 2024年最新初面蚂蚁金服,Python爬虫实战:爬取股票信息(1),面试题解析已整理成文档怎么办

imtokenmax合约众筹

2024年程序员学习python爬虫开发语言

收集整理了一份《2024年最新Python全套学习资料》免费送给大家,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Python知识点,真正体系化!由于文件比较多,这里只是将部分目录截图出来如果你需要这些资料,可以添加V无偿获取:hxbc188(备注666)正文首先要爬取股票数据

- Python怎么去抓取公众号的文章?Python爬虫爬取微信公众号方法

快乐星球没有乐

python爬虫微信

很多小伙伴在学习了爬虫之后都能够使用它去抓取一些网页上的数据了,但是最近有小伙伴问我微信公众号上的文章要怎么去抓取出来。那这一篇文章将会以实际的代码示例来介绍如何去使用python爬虫抓取微信公众号的文章。1.下载wkhtmltopdf1这个应用程序,它可以将HTML格式的数据转换成PDF格式的。2.打开python编辑器,新建一个python项目命名为wxgzhPDF并在里面创建一个空白的pyt

- Python爬虫——使用JSON库解析JSON数据_爬虫json解析

Java老杨

程序员python爬虫json

文章目录1如何在网页中获取JSON数据?2Python内置的JSON库这几天在琢磨爬取动态网页,发现需要爬取js内容,虽然说最后还是没有用上JSON库进行解析,不过笔记写的都写了,就发出来记录一下吧。1如何在网页中获取JSON数据?打开一个具有动态渲染的网页,按F12打开浏览器开发工具,点击“网络”,再刷新一下网页,观察是否有新的数据包。发现有js后缀的文件,这就是我们想要的json数据了。2Py

- Python100个库分享第16个—sqlparse(SQL解析器)

一晌小贪欢

Python100个库分享sqlpython爬虫开发语言python学习python爬虫

目录专栏导读库的介绍库的安装1、解析SQL语句2、格式化SQL语句3、提取表名4、分割多条SQL语句实际应用代码参考:总结专栏导读欢迎来到Python办公自动化专栏—Python处理办公问题,解放您的双手️博客主页:请点击——>一晌小贪欢的博客主页求关注该系列文章专栏:请点击——>Python办公自动化专栏求订阅此外还有爬虫专栏:请点击——>Python爬虫基础专栏求订阅此外还有python基础

- python web自动化

gaoguide2015

自动化脚本webhtml

1.python爬虫之模拟登陆csdn(登录、cookie)http://blog.csdn.net/yanggd1987/article/details/52127436?locationNum=32、xml解析:Python网页解析:BeautifulSoup与lxml.html方式对比(xpath)lxml库速度快,功能强大,推荐。http://blog.sina.com.cn/s/blog

- Python爬虫-小某书达人榜单

写python的鑫哥

爬虫实战进阶python爬虫开发语言cookierequests

前言本文是该专栏的第35篇,后面会持续分享python爬虫干货知识,记得关注。本文案例来介绍某平台达人榜单,值得注意的是,在开始之前,需要提前登录,否则榜单无法拿到。废话不多说,下面跟着笔者直接往下看正文。正文目标:aHR0cHM6Ly9keS5odWl0dW4uY29tL2FwcC8jL2FwcC9kYXNoYm9hcmQ=(注:使用base64自行解码)需求:红薯版-达人榜单打开页面之后,先点

- 【Python爬虫实战】:二手房数据爬取

3344什么都不是

pythonpandas数据分析

文章目录系列文章目录前言一、pandas是什么?二、使用步骤1.引入库2.读入数据总结前言万维网上有着无数的网页,包含着海量的信息,无孔不入、森罗万象。但很多时候,无论出于数据分析或产品需求,我们需要从某些网站,提取出我们感兴趣、有价值的内容,但是纵然是进化到21世纪的人类,依然只有两只手,一双眼,不可能去每一个网页去点去看,然后再复制粘贴。所以我们需要一种能自动获取网页内容并可以按照指定规则提取

- Python爬虫实战

weixin_34007879

爬虫jsonjava

引言网络爬虫是抓取互联网信息的利器,成熟的开源爬虫框架主要集中于两种语言Java和Python。主流的开源爬虫框架包括:1.分布式爬虫框架:Nutch2.Java单机爬虫框架:Crawler4j,WebMagic,WebCollector、Heritrix3.python单机爬虫框架:scrapy、pyspiderNutch是专为搜索引擎设计的的分布式开源框架,上手难度高,开发复杂,基本无法满足快

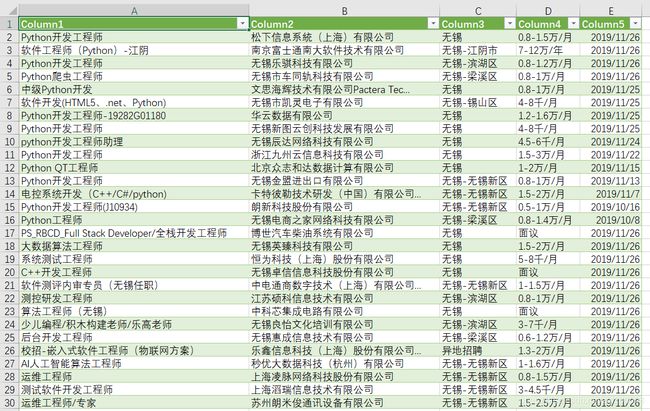

- 2024年Python爬虫:爬取招聘网站系列 - 前程无忧

2401_84562659

程序员python爬虫开发语言

importpprint#格式化输出模块importcsv#保存csv数据算了,我直接贴代码吧,流程都写清楚了,我把注释也标上了。兄弟们在学习的时候没有人解答和好的学习资料教程就很痛苦,解答或者其它教程都在这了电子书、视频都有!对应视频教程:【Python爬虫】招聘网站实战合集第一弹:爬取前程无忧,零基础也能学会!f=open(‘python招聘数据1.csv’,mode=‘a’,encoding

- 2024年Python最新Python爬虫入门教程30:爬取拉勾网招聘数据信息(1)

2401_84584609

程序员python爬虫信息可视化

Python爬虫入门教程23:A站视频的爬取,解密m3u8视频格式Python爬虫入门教程24:下载某网站付费文档保存PDFPython爬虫入门教程25:绕过JS加密参数,实现批量下载抖某音无水印视频内容Python爬虫入门教程26:快手视频网站数据内容下载Python爬虫入门教程27:爬取某电商平台数据内容并做数据可视化Python爬虫入门教程28:爬取微博热搜榜并做动态数据展示Python爬虫

- python爬虫面试真题及答案_Python面试题爬虫篇(附答案)

朴少

python爬虫面试真题及答案

0|1第一部分必答题注意:第31题1分,其他题均每题3分。1,了解哪些基于爬虫相关的模块?-网络请求:urllib,requests,aiohttp-数据解析:re,xpath,bs4,pyquery-selenium-js逆向:pyexcJs2,常见的数据解析方式?-re、lxml、bs43,列举在爬虫过程中遇到的哪些比较难的反爬机制?-动态加载的数据-动态变化的请求参数-js加密-代理-coo

- 2024年Python最全Python爬虫实战:爬取股票信息_python 获取a股所有代码(1)

2401_84585339

程序员python爬虫windows

doc=PyQuery(r.text)list=[]#获取所有section中a节点,并进行迭代foriindoc('.stockTablea').items():try:href=i.attr.hreflist.append(re.findall(r"\d{6}",href)[0])except:continuelist=[item.lower()foriteminlist]#将爬取信息转换小写

- java工厂模式

3213213333332132

java抽象工厂

工厂模式有

1、工厂方法

2、抽象工厂方法。

下面我的实现是抽象工厂方法,

给所有具体的产品类定一个通用的接口。

package 工厂模式;

/**

* 航天飞行接口

*

* @Description

* @author FuJianyong

* 2015-7-14下午02:42:05

*/

public interface SpaceF

- nginx频率限制+python测试

ronin47

nginx 频率 python

部分内容参考:http://www.abc3210.com/2013/web_04/82.shtml

首先说一下遇到这个问题是因为网站被攻击,阿里云报警,想到要限制一下访问频率,而不是限制ip(限制ip的方案稍后给出)。nginx连接资源被吃空返回状态码是502,添加本方案限制后返回599,与正常状态码区别开。步骤如下:

- java线程和线程池的使用

dyy_gusi

ThreadPoolthreadRunnabletimer

java线程和线程池

一、创建多线程的方式

java多线程很常见,如何使用多线程,如何创建线程,java中有两种方式,第一种是让自己的类实现Runnable接口,第二种是让自己的类继承Thread类。其实Thread类自己也是实现了Runnable接口。具体使用实例如下:

1、通过实现Runnable接口方式 1 2

- Linux

171815164

linux

ubuntu kernel

http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.1.2-unstable/

安卓sdk代理

mirrors.neusoft.edu.cn 80

输入法和jdk

sudo apt-get install fcitx

su

- Tomcat JDBC Connection Pool

g21121

Connection

Tomcat7 抛弃了以往的DBCP 采用了新的Tomcat Jdbc Pool 作为数据库连接组件,事实上DBCP已经被Hibernate 所抛弃,因为他存在很多问题,诸如:更新缓慢,bug较多,编译问题,代码复杂等等。

Tomcat Jdbc P

- 敲代码的一点想法

永夜-极光

java随笔感想

入门学习java编程已经半年了,一路敲代码下来,现在也才1w+行代码量,也就菜鸟水准吧,但是在整个学习过程中,我一直在想,为什么很多培训老师,网上的文章都是要我们背一些代码?比如学习Arraylist的时候,教师就让我们先参考源代码写一遍,然

- jvm指令集

程序员是怎么炼成的

jvm 指令集

转自:http://blog.csdn.net/hudashi/article/details/7062675#comments

将值推送至栈顶时 const ldc push load指令

const系列

该系列命令主要负责把简单的数值类型送到栈顶。(从常量池或者局部变量push到栈顶时均使用)

0x02 &nbs

- Oracle字符集的查看查询和Oracle字符集的设置修改

aijuans

oracle

本文主要讨论以下几个部分:如何查看查询oracle字符集、 修改设置字符集以及常见的oracle utf8字符集和oracle exp 字符集问题。

一、什么是Oracle字符集

Oracle字符集是一个字节数据的解释的符号集合,有大小之分,有相互的包容关系。ORACLE 支持国家语言的体系结构允许你使用本地化语言来存储,处理,检索数据。它使数据库工具,错误消息,排序次序,日期,时间,货

- png在Ie6下透明度处理方法

antonyup_2006

css浏览器FirebugIE

由于之前到深圳现场支撑上线,当时为了解决个控件下载,我机器上的IE8老报个错,不得以把ie8卸载掉,换个Ie6,问题解决了,今天出差回来,用ie6登入另一个正在开发的系统,遇到了Png图片的问题,当然升级到ie8(ie8自带的开发人员工具调试前端页面JS之类的还是比较方便的,和FireBug一样,呵呵),这个问题就解决了,但稍微做了下这个问题的处理。

我们知道PNG是图像文件存储格式,查询资

- 表查询常用命令高级查询方法(二)

百合不是茶

oracle分页查询分组查询联合查询

----------------------------------------------------分组查询 group by having --平均工资和最高工资 select avg(sal)平均工资,max(sal) from emp ; --每个部门的平均工资和最高工资

- uploadify3.1版本参数使用详解

bijian1013

JavaScriptuploadify3.1

使用:

绑定的界面元素<input id='gallery'type='file'/>$("#gallery").uploadify({设置参数,参数如下});

设置的属性:

id: jQuery(this).attr('id'),//绑定的input的ID

langFile: 'http://ww

- 精通Oracle10编程SQL(17)使用ORACLE系统包

bijian1013

oracle数据库plsql

/*

*使用ORACLE系统包

*/

--1.DBMS_OUTPUT

--ENABLE:用于激活过程PUT,PUT_LINE,NEW_LINE,GET_LINE和GET_LINES的调用

--语法:DBMS_OUTPUT.enable(buffer_size in integer default 20000);

--DISABLE:用于禁止对过程PUT,PUT_LINE,NEW

- 【JVM一】JVM垃圾回收日志

bit1129

垃圾回收

将JVM垃圾回收的日志记录下来,对于分析垃圾回收的运行状态,进而调整内存分配(年轻代,老年代,永久代的内存分配)等是很有意义的。JVM与垃圾回收日志相关的参数包括:

-XX:+PrintGC

-XX:+PrintGCDetails

-XX:+PrintGCTimeStamps

-XX:+PrintGCDateStamps

-Xloggc

-XX:+PrintGC

通

- Toast使用

白糖_

toast

Android中的Toast是一种简易的消息提示框,toast提示框不能被用户点击,toast会根据用户设置的显示时间后自动消失。

创建Toast

两个方法创建Toast

makeText(Context context, int resId, int duration)

参数:context是toast显示在

- angular.identity

boyitech

AngularJSAngularJS API

angular.identiy 描述: 返回它第一参数的函数. 此函数多用于函数是编程. 使用方法: angular.identity(value); 参数详解: Param Type Details value

*

to be returned. 返回值: 传入的value 实例代码:

<!DOCTYPE HTML>

- java-两整数相除,求循环节

bylijinnan

java

import java.util.ArrayList;

import java.util.List;

public class CircleDigitsInDivision {

/**

* 题目:求循环节,若整除则返回NULL,否则返回char*指向循环节。先写思路。函数原型:char*get_circle_digits(unsigned k,unsigned j)

- Java 日期 周 年

Chen.H

javaC++cC#

/**

* java日期操作(月末、周末等的日期操作)

*

* @author

*

*/

public class DateUtil {

/** */

/**

* 取得某天相加(减)後的那一天

*

* @param date

* @param num

*

- [高考与专业]欢迎广大高中毕业生加入自动控制与计算机应用专业

comsci

计算机

不知道现在的高校还设置这个宽口径专业没有,自动控制与计算机应用专业,我就是这个专业毕业的,这个专业的课程非常多,既要学习自动控制方面的课程,也要学习计算机专业的课程,对数学也要求比较高.....如果有这个专业,欢迎大家报考...毕业出来之后,就业的途径非常广.....

以后

- 分层查询(Hierarchical Queries)

daizj

oracle递归查询层次查询

Hierarchical Queries

If a table contains hierarchical data, then you can select rows in a hierarchical order using the hierarchical query clause:

hierarchical_query_clause::=

start with condi

- 数据迁移

daysinsun

数据迁移

最近公司在重构一个医疗系统,原来的系统是两个.Net系统,现需要重构到java中。数据库分别为SQL Server和Mysql,现需要将数据库统一为Hana数据库,发现了几个问题,但最后通过努力都解决了。

1、原本通过Hana的数据迁移工具把数据是可以迁移过去的,在MySQl里面的字段为TEXT类型的到Hana里面就存储不了了,最后不得不更改为clob。

2、在数据插入的时候有些字段特别长

- C语言学习二进制的表示示例

dcj3sjt126com

cbasic

进制的表示示例

# include <stdio.h>

int main(void)

{

int i = 0x32C;

printf("i = %d\n", i);

/*

printf的用法

%d表示以十进制输出

%x或%X表示以十六进制的输出

%o表示以八进制输出

*/

return 0;

}

- NsTimer 和 UITableViewCell 之间的控制

dcj3sjt126com

ios

情况是这样的:

一个UITableView, 每个Cell的内容是我自定义的 viewA viewA上面有很多的动画, 我需要添加NSTimer来做动画, 由于TableView的复用机制, 我添加的动画会不断开启, 没有停止, 动画会执行越来越多.

解决办法:

在配置cell的时候开始动画, 然后在cell结束显示的时候停止动画

查找cell结束显示的代理

- MySql中case when then 的使用

fanxiaolong

casewhenthenend

select "主键", "项目编号", "项目名称","项目创建时间", "项目状态","部门名称","创建人"

union

(select

pp.id as "主键",

pp.project_number as &

- Ehcache(01)——简介、基本操作

234390216

cacheehcache简介CacheManagercrud

Ehcache简介

目录

1 CacheManager

1.1 构造方法构建

1.2 静态方法构建

2 Cache

2.1&

- 最容易懂的javascript闭包学习入门

jackyrong

JavaScript

http://www.ruanyifeng.com/blog/2009/08/learning_javascript_closures.html

闭包(closure)是Javascript语言的一个难点,也是它的特色,很多高级应用都要依靠闭包实现。

下面就是我的学习笔记,对于Javascript初学者应该是很有用的。

一、变量的作用域

要理解闭包,首先必须理解Javascript特殊

- 提升网站转化率的四步优化方案

php教程分享

数据结构PHP数据挖掘Google活动

网站开发完成后,我们在进行网站优化最关键的问题就是如何提高整体的转化率,这也是营销策略里最最重要的方面之一,并且也是网站综合运营实例的结果。文中分享了四大优化策略:调查、研究、优化、评估,这四大策略可以很好地帮助用户设计出高效的优化方案。

PHP开发的网站优化一个网站最关键和棘手的是,如何提高整体的转化率,这是任何营销策略里最重要的方面之一,而提升网站转化率是网站综合运营实力的结果。今天,我就分

- web开发里什么是HTML5的WebSocket?

naruto1990

Webhtml5浏览器socket

当前火起来的HTML5语言里面,很多学者们都还没有完全了解这语言的效果情况,我最喜欢的Web开发技术就是正迅速变得流行的 WebSocket API。WebSocket 提供了一个受欢迎的技术,以替代我们过去几年一直在用的Ajax技术。这个新的API提供了一个方法,从客户端使用简单的语法有效地推动消息到服务器。让我们看一看6个HTML5教程介绍里 的 WebSocket API:它可用于客户端、服

- Socket初步编程——简单实现群聊

Everyday都不同

socket网络编程初步认识

初次接触到socket网络编程,也参考了网络上众前辈的文章。尝试自己也写了一下,记录下过程吧:

服务端:(接收客户端消息并把它们打印出来)

public class SocketServer {

private List<Socket> socketList = new ArrayList<Socket>();

public s

- 面试:Hashtable与HashMap的区别(结合线程)

toknowme

昨天去了某钱公司面试,面试过程中被问道

Hashtable与HashMap的区别?当时就是回答了一点,Hashtable是线程安全的,HashMap是线程不安全的,说白了,就是Hashtable是的同步的,HashMap不是同步的,需要额外的处理一下。

今天就动手写了一个例子,直接看代码吧

package com.learn.lesson001;

import java

- MVC设计模式的总结

xp9802

设计模式mvc框架IOC

随着Web应用的商业逻辑包含逐渐复杂的公式分析计算、决策支持等,使客户机越

来越不堪重负,因此将系统的商业分离出来。单独形成一部分,这样三层结构产生了。

其中‘层’是逻辑上的划分。

三层体系结构是将整个系统划分为如图2.1所示的结构[3]

(1)表现层(Presentation layer):包含表示代码、用户交互GUI、数据验证。

该层用于向客户端用户提供GUI交互,它允许用户