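Python 3 Crawlers: Detailed Notes on Sending POST Requests with the Scrapy Framework (with Code)

A simple POST request in Scrapy (the one I actually use, shown first out of respect)

What will you learn from this article? It is for study purposes only; if you have questions, please leave a comment.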

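import scrapy

# Sending a POST request. This example has no practical use; for a one-off POST it is simpler to use requests directly.
# Goal: query the Baidu Translate suggestion endpoint, which expects a POST. The search term here is "dog".

class PostSpider(scrapy.Spider):
    name = 'post'
    # allowed_domains = ['www.xxx.com']
    start_urls = ['https://fanyi.baidu.com/sug']

    # start_requests is inherited from scrapy.Spider; it is the method that turns start_urls into requests.
    def start_requests(self):
        # The original (parent) version looks like this and sends GET requests:
        # for url in self.start_urls:
        #     yield scrapy.Request(url=url, callback=self.parse)
        data = {
            'kw': 'dog'
        }
        # Override the parent's start_requests so that every start URL is sent as a POST.
        for url in self.start_urls:
            # FormRequest with formdata sends a POST request
            yield scrapy.FormRequest(url=url, formdata=data, callback=self.parse)

    def parse(self, response):
        # The response here is JSON, not page source, so response.xpath is not needed.
        print(response.text)

The Request object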

The source code is as follows:

"""
This module implements the Request class which is used to represent HTTP
requests in Scrapy.

See documentation in docs/topics/request-response.rst
"""
import six
from w3lib.url import safe_url_string

from scrapy.http.headers import Headers
from scrapy.utils.python import to_bytes
from scrapy.utils.trackref import object_ref
from scrapy.utils.url import escape_ajax
from scrapy.http.common import obsolete_setter


class Request(object_ref):

    # Initialization
    def __init__(self, url, callback=None, method='GET', headers=None, body=None,
                 cookies=None, meta=None, encoding='utf-8', priority=0,
                 dont_filter=False, errback=None, flags=None):

        self._encoding = encoding  # this one has to be set first
        self.method = str(method).upper()
        self._set_url(url)
        self._set_body(body)
        assert isinstance(priority, int), "Request priority not an integer: %r" % priority
        self.priority = priority

        if callback is not None and not callable(callback):
            # raise throws the exception explicitly
            raise TypeError('callback must be a callable, got %s' % type(callback).__name__)
        if errback is not None and not callable(errback):
            raise TypeError('errback must be a callable, got %s' % type(errback).__name__)
        assert callback or not errback, "Cannot use errback without a callback"
        self.callback = callback
        self.errback = errback

        self.cookies = cookies or {}
        self.headers = Headers(headers or {}, encoding=encoding)
        self.dont_filter = dont_filter

        self._meta = dict(meta) if meta else None
        self.flags = [] if flags is None else list(flags)

    @property
    def meta(self):
        if self._meta is None:
            self._meta = {}
        return self._meta

    def _get_url(self):
        return self._url

    def _set_url(self, url):
        if not isinstance(url, six.string_types):
            raise TypeError('Request url must be str or unicode, got %s:' % type(url).__name__)

        s = safe_url_string(url, self.encoding)
        self._url = escape_ajax(s)

        if ':' not in self._url:
            raise ValueError('Missing scheme in request url: %s' % self._url)

    url = property(_get_url, obsolete_setter(_set_url, 'url'))

    def _get_body(self):
        return self._body

    def _set_body(self, body):
        if body is None:
            self._body = b''
        else:
            self._body = to_bytes(body, self.encoding)

    body = property(_get_body, obsolete_setter(_set_body, 'body'))

    @property
    def encoding(self):
        return self._encoding

    def __str__(self):
        return "<%s %s>" % (self.method, self.url)

    __repr__ = __str__

    def copy(self):
        """Return a copy of this Request"""
        return self.replace()

    def replace(self, *args, **kwargs):
        """Create a new Request with the same attributes except for those
        given new values.
        """
        for x in ['url', 'method', 'headers', 'body', 'cookies', 'meta', 'flags',
                  'encoding', 'priority', 'dont_filter', 'callback', 'errback']:
            kwargs.setdefault(x, getattr(self, x))
        cls = kwargs.pop('cls', self.__class__)
        return cls(*args, **kwargs)

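The parameters I use most often:

url: the URL to request and hand on for further processing.

callback: the function that handles the Response returned for this request.

method: usually does not need to be set; the default is GET. It can be "GET", "POST", "PUT" and so on. The string ends up in upper case, because the constructor calls str(method).upper().

headers: the headers sent with the request. Usually not needed. They typically look like this:

# Anyone who has written a crawler will recognize these
Host: media.readthedocs.org
User-Agent: Mozilla/5.0 (Windows NT 6.2; WOW64; rv:33.0) Gecko/20100101 Firefox/33.0
Accept: text/css,*/*;q=0.1
Accept-Language: zh-cn,zh;q=0.8,en-us;q=0.5,en;q=0.3
Accept-Encoding: gzip, deflate
Referer: http://scrapy-chs.readthedocs.org/zh_CN/0.24/
Cookie: _ga=GA1.2.1612165614.1415584110;
Connection: keep-alive
If-Modified-Since: Mon, 25 Aug 2014 21:59:35 GMT
Cache-Control: max-age=0

meta: used quite often, to pass data between different requests. It is a dict.

request_with_cookies = Request(
    url="http://www.example.com",
    cookies={'currency': 'USD', 'country': 'UY'},
    meta={'dont_merge_cookies': True}
)

encoding: the default 'utf-8' is fine.

dont_filter: indicates that this request should not be filtered by the scheduler. Use it when you want to perform the same request several times and bypass the duplicates filter. The default is False. (In practice, this is what decides whether a request's callback runs or not. The Scrapy documentation mentions the issue:

If the spider doesn’t define an allowed_domains attribute, or the attribute is empty, the offsite middleware will allow all requests.

If the request has the dont_filter attribute set, the offsite middleware will allow the request even if its domain is not listed in allowed domains

For details, see my other post on the Scrapy "Filtered offsite request" error.)

errback: the function that handles errors raised while processing the request.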
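The header lines listed for `headers` are in the raw "Name: value" form that browser developer tools show, while the `headers` argument of a Request is normally given as a dict. Below is a minimal sketch of that conversion using only the standard library. `headers_from_text` is a hypothetical helper name chosen for this illustration, not part of Scrapy.

```python
def headers_from_text(raw):
    """Turn 'Name: value' lines copied from browser dev tools into a dict."""
    headers = {}
    for line in raw.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue  # skip blank or malformed lines
        # Split on the first colon only, because values (URLs) contain colons too.
        name, value = line.split(":", 1)
        headers[name.strip()] = value.strip()
    return headers


raw = """
Host: media.readthedocs.org
Accept: text/css,*/*;q=0.1
Referer: http://scrapy-chs.readthedocs.org/zh_CN/0.24/
Connection: keep-alive
"""

headers = headers_from_text(raw)
print(headers["Referer"])
```

The resulting dict could then be passed as `headers=headers` when building a request.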

The Response object

Part of the source code:

# Partial code
class Response(object_ref):

    def __init__(self, url, status=200, headers=None, body='', flags=None, request=None):
        self.headers = Headers(headers or {})
        self.status = int(status)
        self._set_body(body)
        self._set_url(url)
        self.request = request
        self.flags = [] if flags is None else list(flags)

    @property
    def meta(self):
        try:
            return self.request.meta
        except AttributeError:
            raise AttributeError("Response.meta not available, this response " \
                "is not tied to any request")

Most of it is similar to the Request class above.

status: the response status code

_set_body(body): the response body

_set_url(url): the response URL

self.request = request: the Request object that produced this response

Simulating a GitHub login with POST

Method 1: parse the login request parameters and send the login request

You can send the POST request with yield scrapy.FormRequest(url, formdata, callback).

# -*- coding: utf-8 -*-
import scrapy
import re


class GithubSpider(scrapy.Spider):
    name = 'github'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/login']

    def parse(self, response):
        # Build the request parameters from the fields of the login form
        authenticity_token = response.xpath("//input[@name='authenticity_token']/@value").extract_first()
        utf8 = response.xpath("//input[@name='utf8']/@value").extract_first()
        commit = response.xpath("//input[@name='commit']/@value").extract_first()
        post_data = dict(
            login="[email protected]",
            password="****8",
            authenticity_token=authenticity_token,
            utf8=utf8,
            commit=commit
        )
        yield scrapy.FormRequest(
            "https://github.com/session",
            formdata=post_data,
            callback=self.login_parse
        )

    def login_parse(self, response):
        # If the login succeeded, the user name appears in the returned page
        print(re.findall("yangge", response.body.decode()))

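Method 2: log in using the pre-filled form data

Use the FormRequest.from_response() method to simulate a user login.

Websites usually pre-fill certain form fields (such as session data, or the authentication token on a login page) through <input type="hidden"> elements.

When scraping with Scrapy, if you want to keep those pre-filled values and fill in or override form fields such as the user name and password, you can use the FormRequest.from_response() method.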

import scrapy
import re


class Github2Spider(scrapy.Spider):
    name = 'github2'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/login']

    def parse(self, response):
        yield scrapy.FormRequest.from_response(
            response,  # the form is located in the response automatically
            formdata={"login": "[email protected]", "password": "*****8"},
            callback=self.after_login
        )

    def after_login(self, response):
        print(re.findall("yangge", response.body.decode()))
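To make the idea of "pre-filled fields plus your own overrides" concrete, here is a small standard-library sketch. It is a conceptual simplification and not Scrapy's actual implementation: it collects the `name`/`value` pairs of every `<input>` tag and then lets caller-supplied values win. The sample HTML, the token value and the names `FormFieldCollector` and `build_formdata` are all made up for this illustration.

```python
from html.parser import HTMLParser


class FormFieldCollector(HTMLParser):
    """Collect name/value pairs from <input> tags (a deliberate simplification)."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)
        name = attr.get("name")
        if name:
            # Inputs without a value attribute are treated as empty strings.
            self.fields[name] = attr.get("value") or ""


def build_formdata(html, overrides):
    """Start from the fields found in the page, then apply the overrides."""
    collector = FormFieldCollector()
    collector.feed(html)
    formdata = dict(collector.fields)
    formdata.update(overrides)
    return formdata


# Hypothetical login page fragment
SAMPLE_HTML = """
<form action="/session" method="post">
  <input type="hidden" name="authenticity_token" value="TOKEN-FROM-PAGE">
  <input type="text" name="login">
  <input type="password" name="password">
</form>
"""

formdata = build_formdata(SAMPLE_HTML, {"login": "user", "password": "secret"})
print(formdata)
```

The hidden token survives untouched while `login` and `password` are replaced, which is the behavior the `formdata` argument of `from_response()` is used for in the spider.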

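Method 3: override the start_requests method and attach cookies to request a page that requires login

If you want the spider to send its own request (for example a POST) as soon as it starts, override the Spider class's start_requests(self) method. The URLs in start_urls are then no longer requested automatically.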

import scrapy
import re


class mySpider(scrapy.Spider):
    name = 'github2'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/login']

    def start_requests(self):
        # FormRequest is what Scrapy uses to send POST requests;
        # here a plain Request that carries cookies is enough.
        # Cookies are passed as a dict; fill in the values from a logged-in session.
        cookies = {}
        yield scrapy.Request(
            url=self.start_urls[0],
            cookies=cookies,
            callback=self.parse_login
        )

    def parse_login(self, response):
        print(re.findall("yangge", response.body.decode()))
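The `cookies` argument expects a dict, but browsers show cookies as one `Cookie:` header string. A minimal sketch of the conversion with the standard library's `http.cookies` module follows. `cookies_from_header` is a hypothetical helper name, and the header string reuses the `_ga`, `currency` and `country` values that appear earlier in this post.

```python
from http.cookies import SimpleCookie


def cookies_from_header(cookie_header):
    """Convert a raw Cookie header value into a plain name -> value dict."""
    jar = SimpleCookie()
    jar.load(cookie_header)
    return {name: morsel.value for name, morsel in jar.items()}


cookies = cookies_from_header(
    "_ga=GA1.2.1612165614.1415584110; currency=USD; country=UY"
)
print(cookies)
```

The resulting dict is in the shape that `scrapy.Request(..., cookies=cookies)` accepts. Cookie values with unusual characters may not be parsed by `SimpleCookie`, so treat this as a starting point.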

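If you think that having read this far you can handle every POST request, you are badly mistaken.

To really master POST in Scrapy, keep reading:

Target site: http://222.76.243.118:8090/page/double_publicity/allow.html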

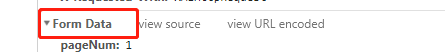

First, you need to understand the difference between Form Data and Request Payload.

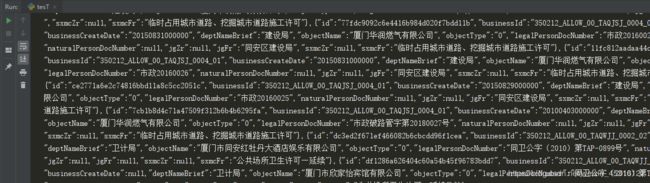

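A Request Payload is sent with the Content-Type header application/json, and the data arrives in JSON format, as the screenshot shows.

Scrapy's POST request (FormRequest), on the other hand, uses application/x-www-form-urlencoded by default.

If you write the request the usual Scrapy POST way, no data comes back no matter what you try, and no error is raised either.

When you run into a Request Payload, use Request together with body (remember to wrap the data in json.dumps()).

The modified code is as follows: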

import json

import scrapy


class XkSdl10822Spider(scrapy.Spider):
    name = 'XK-FJM-0102'
    url = 'http://222.76.243.118:8090/publicity/get_double_publicity_record_list'
    headers = {
        'Origin': 'http://222.76.243.118:8090',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36',
        'Content-Type': 'application/json; charset=UTF-8',
        'Accept': 'application/json, text/javascript, */*; q=0.01',
        'Referer': 'http://222.76.243.118:8090/page/double_publicity/allow.html',
        'X-Requested-With': 'XMLHttpRequest',
        'Connection': 'keep-alive',
    }

    def start_requests(self):
        data = {
            'listSql': '',
            'linesPerPage': "6547",
            'currentPage': "1",
            'deptId': '',
            'searchKeyword': '',
            'tag': 'ALLOW'
        }
        # Send the dict as a JSON string in the request body, with method set to POST
        yield scrapy.Request(url=self.url, body=json.dumps(data), method='POST',
                             headers=self.headers, callback=self.parse_list)

    def parse_list(self, response):
        print(response.text)

This time the printed output contains the data. Problem solved.
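Since the response text is JSON, the next step in a callback is usually `json.loads`. The sketch below shows that step in isolation. The sample payload and its `total`/`rows` keys are invented for illustration, and the real endpoint's field names may differ. `extract_rows` is a hypothetical helper name.

```python
import json

# Hypothetical response text; the real field names are not known here.
sample_text = '{"total": 2, "rows": [{"name": "A"}, {"name": "B"}]}'


def extract_rows(text, key="rows"):
    """Parse a JSON string and return the list stored under `key`."""
    payload = json.loads(text)
    if not isinstance(payload, dict):
        return []
    rows = payload.get(key, [])
    return rows if isinstance(rows, list) else []


rows = extract_rows(sample_text)
for row in rows:
    print(row["name"])
```

Inside a spider, the same call would take `response.text` as its argument.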

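Summary

Form Data is sent with the Content-Type header application/x-www-form-urlencoded, so the data arrives in the form key1=value1&key2=value2.

Request Payload is sent with the Content-Type header application/json, and the data arrives as JSON. The fix is to set the Content-Type header to application/json and pass the JSON through body (JSON data travels in the request body, so body is the parameter to use).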
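The difference between the two body formats can be seen with the standard library alone. This sketch encodes the same dict both ways. It uses `urllib.parse.urlencode` as a stand-in for the form encoding and is not Scrapy's own code path. The two keys are taken from the `data` dict used earlier.

```python
import json
from urllib.parse import urlencode

data = {"currentPage": "1", "tag": "ALLOW"}

# Form Data: what goes with application/x-www-form-urlencoded
form_body = urlencode(data)

# Request Payload: what goes with application/json
json_body = json.dumps(data)

print(form_body)   # currentPage=1&tag=ALLOW
print(json_body)   # {"currentPage": "1", "tag": "ALLOW"}
```

A server that expects the second form will generally not find its parameters in the first, which matches the "no data, no error" symptom described above.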

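Also note: Scrapy's POST request (FormRequest) uses application/x-www-form-urlencoded by default, so check carefully whether the browser shows the request data as Form Data or as Request Payload.

When using FormRequest, note that the values passed in formdata must be strings. With a plain Request whose body is built by json.dumps, non-string values are fine.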
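One way to respect the string requirement is to convert values before handing the dict to FormRequest. A minimal sketch follows. `stringify_formdata` is a hypothetical helper name, and the numeric values are the page settings from the earlier example written as integers.

```python
def stringify_formdata(data):
    """Return a copy of `data` in which every value is a str."""
    return {key: str(value) for key, value in data.items()}


raw = {"linesPerPage": 6547, "currentPage": 1, "tag": "ALLOW"}
formdata = stringify_formdata(raw)
print(formdata)
```

The converted dict would then be passed as `formdata=formdata`, while the original dict with integers could still go through `json.dumps` for a JSON body.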

That is all for POST requests in Scrapy.

Something to think about:

How would you handle a request sent with Accept: text/javascript, */*; q=0.01?