Visualizing Intermediate-Layer Feature Maps in PyTorch, with MNIST as the Example

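Continuing from the previous post: once a trained model is available, this post loads it, extracts the features of its intermediate layers, and prints the prediction accuracy.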
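Loading weights with `load_state_dict` only works when the model is rebuilt with the same architecture that was trained. The sketch below illustrates that round trip under stated assumptions: `build_model` is a hypothetical stand-in network and `demo.pth` is a temporary file, neither taken from this post.

```python
import os
import tempfile

import torch
import torch.nn as nn


def build_model():
    # Hypothetical stand-in architecture; the real one must match the trained network.
    return nn.Sequential(
        nn.Conv2d(1, 4, 3, padding=1),
        nn.ReLU(),
        nn.Flatten(),
        nn.Linear(4 * 28 * 28, 10),
    )


trained = build_model()
path = os.path.join(tempfile.mkdtemp(), "demo.pth")  # temporary file for the demo
torch.save(trained.state_dict(), path)  # save only the parameters

restored = build_model()  # rebuild the same architecture first
restored.load_state_dict(torch.load(path))  # then copy the saved parameters in
restored.eval()

x = torch.rand(1, 1, 28, 28)  # random MNIST-shaped input
with torch.no_grad():
    same = torch.allclose(trained(x), restored(x))
print(same)
```

If the rebuilt architecture differs from the saved one, `load_state_dict` raises an error about missing or unexpected keys, which is why the full model definition has to be repeated before loading.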

Method

This follows the article 《pytorch 提取卷积神经网络的特征图可视化》 (Extracting and Visualizing CNN Feature Maps in PyTorch).

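    import torch.nn as nn

    class FeatureExtractor(nn.Module):
        """Run the wrapped model layer by layer and collect the outputs of selected layers."""

        def __init__(self, submodule, extracted_layer):
            super().__init__()
            self.submodule = submodule
            self.extracted_layer = extracted_layer  # names of the layers to capture

        def forward(self, x):
            outputs = []
            for name, module in self.submodule.named_children():
                x = module(x)
                if name in self.extracted_layer:
                    outputs.append(x)
            return outputs

    # Layers inside nn.Sequential are named by their string index: '0', '1', ...
    extracted_layer = ['0', '2', '3', '5']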
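The names in `extracted_layer` are the keys that `nn.Sequential` assigns to its children: string indices in definition order. One way to check which index belongs to which layer is to list the children. The sketch below uses a stand-in network `net` (an assumption that mirrors only the convolutional part of the model in this post).

```python
import torch.nn as nn

# Stand-in network with the same layer order as the convolutional part of the post's model.
net = nn.Sequential(
    nn.Conv2d(1, 16, 5, 1, 2),
    nn.ReLU(),
    nn.MaxPool2d(2),
    nn.Conv2d(16, 32, 3, 1, 1),
    nn.ReLU(),
    nn.MaxPool2d(2),
)

# named_children() yields (name, module) pairs; the names are strings, not integers.
names = [name for name, _ in net.named_children()]
for name, module in net.named_children():
    print(name, module.__class__.__name__)
```

Because the names are strings, a list such as `[0, 2, 3, 5]` with integers would never match in the `name in self.extracted_layer` test.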

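    import numpy as np
    from matplotlib import pyplot as plt

    def Feature_visual(outputs):
        for output in outputs:
            out = output.detach().squeeze(0).numpy()  # (C, H, W) for a batch of one
            feature_img = out[0]  # select the first feature map
            # Min-max scale to [0, 255]: raw activations can be negative or exceed 1.
            feature_img = (feature_img - feature_img.min()) / (feature_img.max() - feature_img.min() + 1e-8)
            feature_img = np.asarray(feature_img * 255, dtype=np.uint8)
            plt.imshow(feature_img, cmap='gray')
            plt.show()

Full code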

    import torch
    import torch.nn as nn
    import torch.utils.data as Data
    import torchvision  # datasets
    from matplotlib import pyplot as plt
    import numpy as np

    # torch.manual_seed(1)
    epoches = 1  # number of passes over the whole training set
    batch_size = 500  # number of samples per training batch
    lr = 0.001

    # 1. Load the training set
    train_data = torchvision.datasets.MNIST(
        root='./mnist',  # dataset directory
        train=True,  # use the training split
        transform=torchvision.transforms.ToTensor(),  # convert images to tensors
        download=False  # whether to download MNIST; set to True if ./mnist is empty
    )

    # Load the test set
    test_data = torchvision.datasets.MNIST(
        root='./mnist',
        train=False
    )

    # Wrap the dataset in a DataLoader; batches have shape (batch, channel, 28, 28)
    loader = Data.DataLoader(
        dataset=train_data,
        batch_size=batch_size,  # batch size
        shuffle=True  # shuffle the data
    )

    # Use the first test sample and its label, with pixel values scaled to [0, 1]
    test_x = torch.unsqueeze(test_data.data, dim=1).type(torch.FloatTensor)[:1] / 255
    test_y = test_data.targets[:1]

    class Lambda(nn.Module):
        """Wrap a plain function so it can be used as a layer in nn.Sequential."""

        def __init__(self, func):
            super().__init__()
            self.func = func

        def forward(self, x):
            return self.func(x)

    model = nn.Sequential(
        nn.Conv2d(
            in_channels=1,  # input image depth
            out_channels=16,  # number of filters
            kernel_size=5,  # filter size
            stride=1,  # stride
            padding=2),  # keeps the output size unchanged: padding=(kernel_size-1)/2 at stride 1; name='0'
        nn.ReLU(),  # name='1'
        nn.MaxPool2d(kernel_size=2),  # name='2'
        nn.Conv2d(16, 32, 3, 1, 1),  # name='3'
        nn.ReLU(),  # name='4'
        nn.MaxPool2d(2),  # name='5'
        Lambda(lambda x: x.view(x.size(0), -1)),  # name='6', flattens to (batch, 32*7*7)
        nn.Linear(32 * 7 * 7, 10)  # name='7'
    )

    model.load_state_dict(torch.load('cnn.pth'))

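    class FeatureExtractor(nn.Module):
        """Run the wrapped model layer by layer and collect the outputs of selected layers."""

        def __init__(self, submodule, extracted_layer):
            super().__init__()
            self.submodule = submodule
            self.extracted_layer = extracted_layer  # names of the layers to capture

        def forward(self, x):
            outputs = []
            for name, module in self.submodule.named_children():
                x = module(x)
                if name in self.extracted_layer:
                    outputs.append(x)
            return outputs

    # Layers inside nn.Sequential are named by their string index: '0', '1', ...
    extracted_layer = ['0', '2', '3', '5']

    def Feature_visual(outputs):
        for output in outputs:
            out = output.detach().squeeze(0).numpy()  # (C, H, W) for a batch of one
            feature_img = out[0]  # select the first feature map
            # Min-max scale to [0, 255]: raw activations can be negative or exceed 1.
            feature_img = (feature_img - feature_img.min()) / (feature_img.max() - feature_img.min() + 1e-8)
            feature_img = np.asarray(feature_img * 255, dtype=np.uint8)
            plt.imshow(feature_img, cmap='gray')
            plt.show()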

    def evaluate():
        model.eval()  # no effect on this network (no dropout or batch norm), but good practice
        with torch.no_grad():
            extract_feature = FeatureExtractor(model, extracted_layer)(test_x)
            Feature_visual(extract_feature)
            test_out = model(test_x)  # forward pass on the test sample
            # Index of the largest output score, i.e. the predicted class
            pred_y = torch.max(test_out, 1)[1]
            # Fraction of predictions that match the labels
            accuracy = (pred_y == test_y).float().mean().item()
            print("Accuracy: %.2f" % accuracy)

    if __name__ == '__main__':
        evaluate()

Visualization results

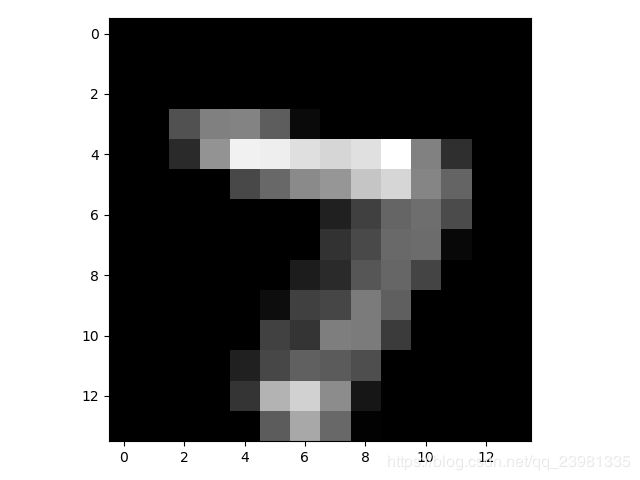

Visualization of the first feature map of layer name='0':

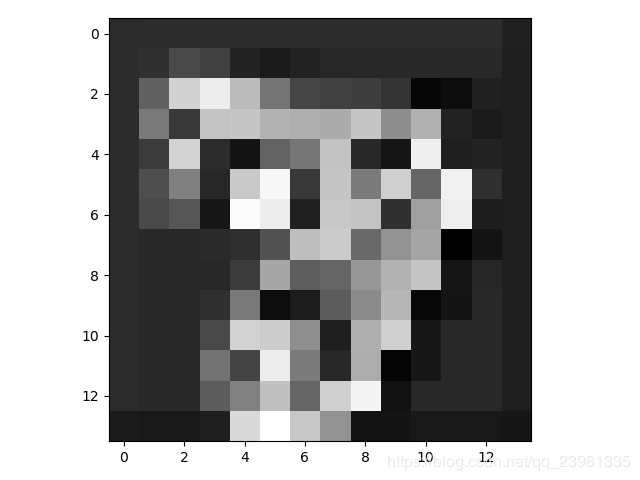

Visualization of the first feature map of layer name='2':

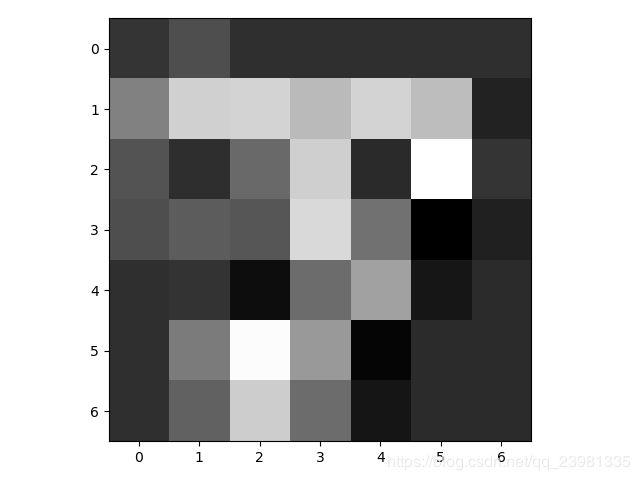

Visualization of the first feature map of layer name='3':

Visualization of the first feature map of layer name='5':
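The feature maps get smaller from layer to layer, and their sizes can be checked by hand with the standard output-size formula for convolution and pooling layers. The sketch below applies it to the layer settings in the listing; the helper `out_size` is a hypothetical name introduced for illustration, and it assumes dilation 1 and a pooling stride equal to the kernel size (the `nn.MaxPool2d` default).

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or max-pool layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


sizes = []
size = 28  # MNIST images are 28 x 28
size = out_size(size, kernel=5, stride=1, padding=2)  # layer '0': Conv2d(1, 16, 5, 1, 2)
sizes.append(size)
size = out_size(size, kernel=2, stride=2)  # layer '2': MaxPool2d(2)
sizes.append(size)
size = out_size(size, kernel=3, stride=1, padding=1)  # layer '3': Conv2d(16, 32, 3, 1, 1)
sizes.append(size)
size = out_size(size, kernel=2, stride=2)  # layer '5': MaxPool2d(2)
sizes.append(size)

flat_features = 32 * size * size  # input size of the final nn.Linear layer
print(sizes, flat_features)  # [28, 14, 14, 7] 1568
```

This is where the `32 * 7 * 7` in the final linear layer comes from: 32 channels, each reduced to a 7 x 7 map after the second pooling layer.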