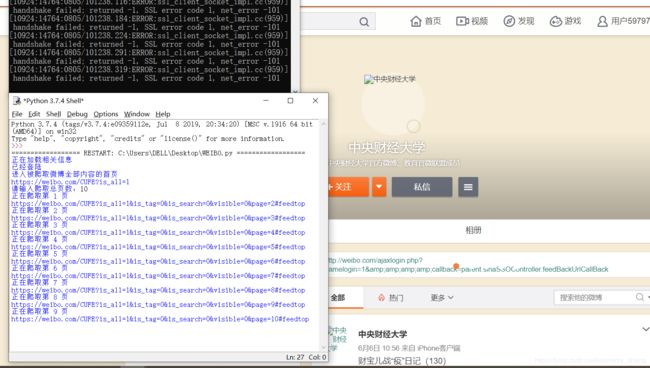

微博文本爬虫

微博文本爬虫使用须知

1.已安装selenium,time,bs4,json库

2.已配置谷歌浏览器webdriver路径

3.谷歌浏览器Default文件位于C:\Users\DELL\AppData\Local\Google\Chrome\User Data

4.使用爬虫前确保谷歌浏览器已关闭

5.推荐网页端微博设置为自动登录

全代码

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

import time

from bs4 import BeautifulSoup

import bs4

import json

def get_user_info():

#获取用户账号、密码及被爬取微博主页url

try:

f=open('user_info.txt','r')

except FileNotFoundError:

print('首次使用,请输入相关信息')

f=open('user_info.txt','w')

user_id=input('请输入微博账户:')

password=input('请输入微博密码:')

url=input('请输入被爬取微博主页的url:')

f.write(json.dumps({'user_id':user_id,'password':password,'url':url}))

f.close()

else:

print('正在加载相关信息')

user_info=json.loads(f.read())

user_id=user_info['user_id']

password=user_info['password']

url=user_info['url']

return user_id,password,url

def sign_in(user_id,password,url,browser):

#模拟登陆,进入被爬取微博全部内容的首页

try:

user_id_input=browser.find_element_by_id('loginname')

password_input=browser.find_element_by_name('password')

time.sleep(3)

except:

print('已经登陆')

else:

print('正在登陆')

user_id_input.clear()

user_id_input.send_keys(user_id)

time.sleep(3)

password_input.clear()

password_input.send_keys(password)

time.sleep(3)

password_input.send_keys(Keys.ENTER)

time.sleep(15)

print('进入被爬取微博全部内容的首页')

browser.get(url)

print(url)

time.sleep(5)

def get_one_page(browser):

#获得当前页面所有微博的文本内容

global texts

for i in range(4):

browser.execute_script('window.scrollTo(0,document.body.scrollHeight)')

time.sleep(2)

soup=BeautifulSoup(browser.page_source,'html.parser')

weibo_tags=soup.find_all(name='div',attrs={'class':'WB_text W_f14'})

for weibo_tag in weibo_tags:

text=[]

for content in weibo_tag.contents:

if content.name=='a':

text.append(content.string)

if isinstance(content,bs4.element.NavigableString):

text.append(content)

if content.name=='img':

text.append(content.get('title'))

texts.append(text)

def get_pages(n,url,browser):

#获得指定页数所有微博的文本内容

print('正在爬取第 1 页')

get_one_page(browser)

for i in range(2,n+1):

print(url+'&is_tag=0&is_search=0&visible=0&page={}#feedtop'.format(i))

try:

browser.get(url+'&is_tag=0&is_search=0&visible=0&page={}#feedtop'.format(i))

time.sleep(5)

except:

print('出现异常')

break

else:

print('正在爬取第 {} 页'.format(i))

get_one_page(browser)

time.sleep(2)

time.sleep(3)

print('爬取完毕')

browser.close()

def main(browser):

#主程序

global texts

user_id,password,url=get_user_info()

if '?' not in url:

url+='?'

else:

url=url.replace('is_hot','is_all')

sign_in(user_id,password,url,browser)

number=eval(input('请输入爬取总页数:'))

get_pages(number,url,browser)

filename='WEIBO.txt'

with open(filename,'w',encoding='utf-8') as f:

for text in texts:

for sentence in text:

f.write(str(sentence).strip())

f.write('\n\n')

f.close()

if __name__=='__main__':

texts=[]

chrome_options = webdriver.ChromeOptions()

chrome_options.add_argument(r'--user-data-dir=C:\Users\DELL\AppData\Local\Google\Chrome\User Data')

chrome_options.add_experimental_option('prefs',{'profile.managed_default_content_settings.images': 2})

browser=webdriver.Chrome(options=chrome_options)

browser.get('https://weibo.com/')

time.sleep(10)

main(browser)