美国人口普查数据预测收入sklearn算法汇总3之ROC: KNN,LogisticRegression,RandomForest,NaiveBayes,StochasticGradientDece

接<美国人口普查数据预测收入sklearn算法汇总1: 了解数据以及数据预处理>

<美国人口普查数据预测收入sklearn算法汇总2: 特征编码, 特征选择, 降维, 递归特征消除>

九. 机器学习算法

- KNN

Logistic Regression

Random Forest

Naive Bayes

Stochastic Gradient Decent

Linear SVC

Decision Tree

Gradient Boosted Trees

import random

random.seed(42)

from sklearn.neighbors import KNeighborsRegressor #K近邻

knn = KNeighborsRegressor(n_neighbors = 3)

knn.fit(X_train, y_train)

print('KNN score: ',knn.score(X_test, y_test))

from sklearn.linear_model import LogisticRegression #逻辑回归

lr = LogisticRegression(C = 10, solver='liblinear', penalty='l1')

lr.fit(X_train, y_train)

print('Logistic Regression score: ',lr.score(X_test, y_test))

from sklearn.tree import DecisionTreeRegressor #决策树

dtr = DecisionTreeRegressor(max_depth = 10)

dtr.fit(X_train, y_train)

print('Decision Tree score: ',dtr.score(X_test, y_test))

from sklearn.ensemble import RandomForestRegressor #随机森林

rfr = RandomForestRegressor(n_estimators=300, max_features=3, max_depth=10)

rfr.fit(X_train, y_train)

print('Random Forest score: ',rfr.score(X_test, y_test))

from sklearn.naive_bayes import MultinomialNB #多项式朴素贝叶斯

nb = MultinomialNB()

nb.fit(X_train, y_train)

print('Naive Bayes score: ',nb.score(X_test, y_test))

from sklearn.svm import LinearSVC #支持向量机

svc = LinearSVC()

svc.fit(X_train, y_train)

print('Linear SVC score: ',svc.score(X_test, y_test))

from sklearn.ensemble import GradientBoostingClassifier #梯度上升

gbc = GradientBoostingClassifier()

gbc.fit(X_train, y_train)

print('Gradient Boosting score: ',gbc.score(X_test, y_test))

from sklearn.linear_model import SGDClassifier #梯度下降

sgd = SGDClassifier()

sgd.fit(X_train, y_train)

print('Stochastic Gradient Descent score: ',sgd.score(X_test, y_test))

KNN score: 0.2411992336105936

Logistic Regression score: 0.8379853954821538

Decision Tree score: 0.44242767068578853

Random Forest score: 0.46396449365628084

Naive Bayes score: 0.785982392684092

Linear SVC score: 0.5869105302668396

Gradient Boosting score: 0.8618712891558042

Stochastic Gradient Descent score: 0.7659182419982257

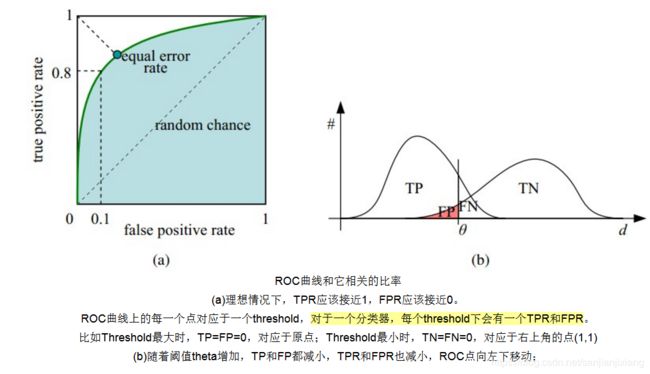

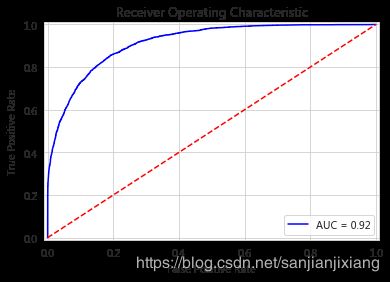

十. ROC 与 AUC

接下来我们考虑ROC曲线图中的四个点和一条线。第一个点,(0,1),即FPR=0, TPR=1,这意味着FN(false negative)=0,并且FP(false positive)=0。Wow,这是一个完美的分类器,它将所有的样本都正确分类。第二个点,(1,0),即FPR=1,TPR=0,类似地分析可以发现这是一个最糟糕的分类器,因为它成功避开了所有的正确答案。第三个点,(0,0),即FPR=TPR=0,即FP(false positive)=TP(true positive)=0,可以发现该分类器预测所有的样本都为负样本(negative)。类似的,第四个点(1,1),分类器实际上预测所有的样本都为正样本。经过以上的分析,我们可以断言,ROC曲线越接近左上角,该分类器的性能越好。

下面考虑ROC曲线图中的虚线y=x上的点。这条对角线上的点其实表示的是一个采用随机猜测策略的分类器的结果,例如(0.5,0.5),表示该分类器随机对于一半的样本猜测其为正样本,另外一半的样本为负样本。

AUC(Area Under Curve)被定义为ROC曲线下的面积,显然这个面积的数值不会大于1。又由于ROC曲线一般都处于y=x这条直线的上方,所以AUC的取值范围在0.5和1之间。使用AUC值作为评价标准是因为很多时候ROC曲线并不能清晰的说明哪个分类器的效果更好,而作为一个数值,对应AUC更大的分类器效果更好。

from sklearn import model_selection, metrics

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB #高斯分布朴素贝叶斯

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier

from sklearn.metrics import roc_curve, auc

from sklearn.svm import LinearSVC

# 在不同阈值上计算fpr

def plot_roc_curve(y_test, preds):

fpr,tpr,threshold = metrics.roc_curve(y_test, preds)

roc_auc = metrics.auc(fpr, tpr)

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.plot([0,1],[0,1], 'r--')

plt.xlim([-0.01, 1.01])

plt.ylim([-0.01, 1.01])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver Operating Characteristic')

plt.legend(loc = 'best')

plt.show()

# 返回结果

def fit_ml_algo(algo, X_train, y_train, X_test, cv):

model = algo.fit(X_train,y_train)

test_pred = model.predict(X_test)

if isinstance(algo, (LogisticRegression, KNeighborsClassifier, GaussianNB,

DecisionTreeClassifier,RandomForestClassifier,GradientBoostingClassifier)):

# isinstance() 函数来判断一个对象是否是一个已知的类型,类似 type()

probs = model.predict_proba(X_test)[:,1]

# predict_proba返回的是一个n行k列的数组,第i行第j列上的数值是模型预测第i个预测样本为某个标签的概率,并且每一行的概率和为1。

else:

probs = 'Not Available'

acc = round(model.score(X_test, y_test) * 100, 2)

train_pred = model_selection.cross_val_predict(algo,X_train,y_train,cv=cv,n_jobs=-1)

acc_cv = round(metrics.accuracy_score(y_train,train_pred) * 100, 2)

return train_pred, test_pred, acc, acc_cv, probs

- isinstance() 函数来判断一个对象是否是一个已知的类型,类似 type()

- predict_proba返回的是一个n行k列的数组,第i行第j列上的数值是模型预测第i个预测样本为某个标签的概率,并且每一行的概率和为1。

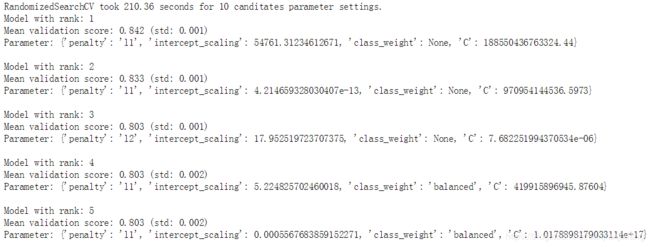

import time

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import RandomizedSearchCV

def report(results, n_top=5):

for i in range(1, n_top + 1):

candidates = np.flatnonzero(results['rank_test_score'] == i)

#np.flatnonzero()函数输入一个矩阵,返回扁平化后矩阵中非零元素的位置(index)

for candidate in candidates:

print('Model with rank: {}'.format(i))

print('Mean validation score: {0:.3f} (std: {1:.3f})'.format(results['mean_test_score'][candidate],

results['std_test_score'][candidate]))

print('Parameter: {}'.format(results['params'][candidate]))

print('')

param_dist = {'penalty':['l2','l1'],'class_weight':[None,'balanced'],'C':np.logspace(-20,20,10000),

'intercept_scaling':np.logspace(-20,20,10000)}

n_iter_search = 10

lr = LogisticRegression()

random_search = RandomizedSearchCV(lr, n_jobs=-1, param_distributions=param_dist,n_iter=n_iter_search)

start = time.time()

random_search.fit(X_train, y_train)

print('RandomizedSearchCV took %.2f seconds for %d canditates parameter settings.' %

((time.time() - start), n_iter_search))

report(random_search.cv_results_)

-

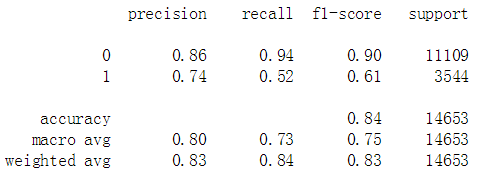

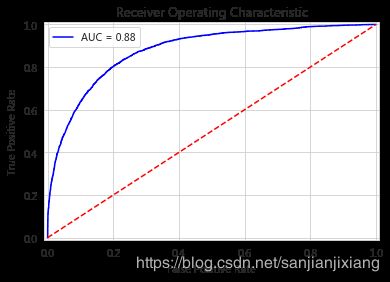

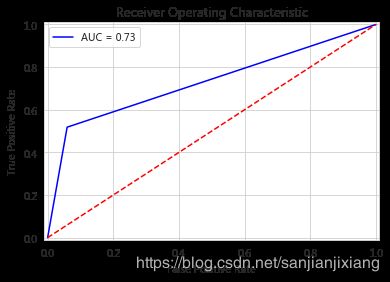

10.1 LogisticRegression

import datetime

start_time = time.time()

train_pred_log,test_pred_log,acc_log,acc_cv_log,probs_log=fit_ml_algo(LogisticRegression(n_jobs=-1),

X_train,y_train,X_test,10)

log_time = (time.time() - start_time)

print('Accuracy: %s' % acc_log)

print('Accuracy CV 10-Fold: %s' % acc_cv_log)

print('Running Time: %s' % datetime.timedelta(seconds=log_time))

print(metrics.classification_report(y_train, train_pred_log))

print(metrics.classification_report(y_test, test_pred_log))

plot_roc_curve(y_test, probs_log)

plot_roc_curve(y_test, test_pred_log)

Accuracy: 83.84

Accuracy CV 10-Fold: 84.2

Running Time: 0:00:03.617207

-

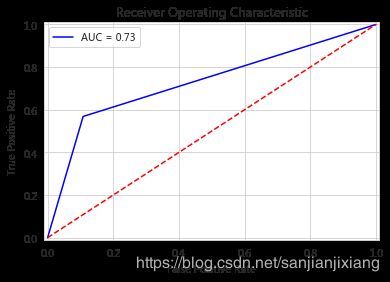

10.2 K-Nearest Neighbors

start_time = time.time()

train_pred_knn,test_pred_knn,acc_knn,acc_cv_knn,probs_knn=fit_ml_algo(KNeighborsClassifier(n_neighbors=3,n_jobs=-1),

X_train,y_train,X_test,10)

knn_time = time.time() - start_time

print('Accuracy: %s' % acc_cv_knn)

print('Accuracy CV 10-Fold: %s' % acc_cv_knn)

print('Running Time: %s' % datetime.timedelta(seconds = knn_time))

print(metrics.classification_report(y_train, train_pred_knn))

print(metrics.classification_report(y_test, test_pred_knn))

plot_roc_curve(y_test, probs_knn)

plot_roc_curve(y_test, test_pred_knn)

Accuracy: 81.32

Accuracy CV 10-Fold: 81.32

Running Time: 0:00:02.768158

-

10.3 Gaussian Naive Bayes

start_time = time.time()

train_pred_gau,test_pred_gau,acc_gau,acc_cv_gau,probs_gau=fit_ml_algo(GaussianNB(),

X_train,y_train,X_test,10)

gaussian_time = time.time() - start_time

print('Accuracy: %s' % acc_gau)

print('Accuracy CV 10-Fold: %s' % acc_cv_gau)

print('Running Time: %s' % datetime.timedelta(seconds = gaussian_time))

print(metrics.classification_report(y_train, train_pred_gau))

print(metrics.classification_report(y_test, test_pred_gau))

plot_roc_curve(y_test, probs_gau)

plot_roc_curve(y_test, test_pred_gau)

Accuracy: 82.34

Accuracy CV 10-Fold: 82.13

Running Time: 0:00:00.355020

-

10.4 Linear SVC

start_time = time.time()

train_pred_svc,test_pred_svc,acc_svc,acc_cv_svc,_=fit_ml_algo(LinearSVC(),X_train,

y_train,X_test,10)

svc_time = time.time() - start_time

print('Accuracy: %s' % acc_svc)

print('Accuracy CV 10-FoldL %s' % acc_cv_svc)

print('Running Time: %s' % datetime.timedelta(seconds = svc_time))

print(metrics.classification_report(y_train, train_pred_svc))

print(metrics.classification_report(y_test, test_pred_svc))

Accuracy: 59.58

Accuracy CV 10-FoldL 69.53

Running Time: 0:00:23.749358

-

10.5 Stochastic Gradient Descent

from sklearn.linear_model import SGDClassifier

start_time = time.time()

train_pred_sgd,test_pred_sgd,acc_sgd,acc_cv_sgd,_=fit_ml_algo(SGDClassifier(n_jobs=-1),

X_train,y_train,X_test,10)

sgd_time = time.time() - start_time

print('Accuracy: %s' % acc_sgd)

print('Accuracy CV 10-Fold: %s' % acc_cv_sgd)

print('Running Time %s' % datetime.timedelta(seconds = sgd_time))

print(metrics.classification_report(y_train, train_pred_sgd))

print(metrics.classification_report(y_test, test_pred_sgd))

Accuracy: 80.9

Accuracy CV 10-Fold: 77.66

Running Time 0:00:04.138237

-

10.6 Decision Tree Classifier

start_time = time.time()

train_pred_dtc,test_pred_dtc,acc_dtc,acc_cv_dtc,probs_dtc=fit_ml_algo(DecisionTreeClassifier(),

X_train,y_train,X_test,10)

dtc_time = time.time() - start_time

print('Accuracy: %s' % acc_dtc)

print('Accuracy CV 10-Fold: %s' % acc_cv_dtc)

print('Running Time: %s' % datetime.timedelta(seconds = dtc_time))

print(metrics.classification_report(y_train, train_pred_dtc))

print(metrics.classification_report(y_test, test_pred_dtc))

plot_roc_curve(y_test, probs_dtc)

plot_roc_curve(y_test, test_pred_dtc)

Accuracy: 81.71

Accuracy CV 10-Fold: 82.28

Running Time: 0:00:00.694040

-

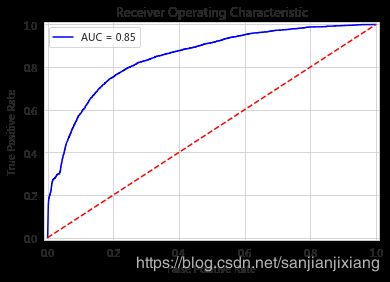

10.7 Random Forest Classifier

def report(results, n_top=5):

for i in range(1, n_top + 1):

candidates = np.flatnonzero(results['rank_test_score'] == i)

# np.flatnonzero()函数输入一个矩阵,返回扁平化后矩阵中非零元素的位置(index)

for candidate in candidates:

print('Model with rank: {}'.format(i))

print('Mean validation score: {0:.3f} (std: {1:.3f})'.format(results['mean_test_score'][candidate],

results['std_test_score'][candidate]))

print('Parameters: {}'.format(results['params'][candidate]))

from scipy.stats import randint as sp_randint # 产生均匀分布的随机整数矩阵

from sklearn.model_selection import RandomizedSearchCV

param_dist={'max_depth':[10, None],'max_features':sp_randint(1,11),'bootstrap':[True,False],

'min_samples_split':sp_randint(2,20),'min_samples_leaf':sp_randint(1,11),'criterion':['gini','entropy']}

n_iter_search = 10

rfc = RandomForestClassifier(n_estimators = 10)

random_search=RandomizedSearchCV(rfc,n_jobs=-1,param_distributions=param_dist,n_iter=n_iter_search)

start_time = time.time()

random_search.fit(X_train, y_train)

print('RandomizedSearchCV took %.2f seconds for %d candidates parameter settings.' % (time.time()-start_time,n_iter_search))

report(random_search.cv_results_)

- 10.7.1 RandomizedSearchCV

from sklearn.model_selection import RandomizedSearchCV

random_grid = {'n_estimators':[10,20,30,40,50,100], 'max_features':[3,5,8],

'max_depth':[10,20,30], 'min_samples_split':[2,5,10],

'min_samples_leaf':[2,5,10], 'bootstrap':[True,False],

'criterion':['gini','entropy']}

rfc = RandomForestClassifier()

rfc_search = RandomizedSearchCV(rfc, param_distributions = random_grid,

n_iter=10, n_jobs=-1 ,cv=10, verbose=2)

start_time = time.time()

rfc_search.fit(X_train,y_train)

print('RandomizedSearchCV took %.2f seconds for RandomForestClassifier.' % (time.time()-start_time))

rfc_search.best_params_

RandomizedSearchCV took 28.47 seconds for RandomForestClassifier.

{‘n_estimators’: 50, ‘min_samples_split’: 2, ‘min_samples_leaf’: 5, ‘max_features’: 8, ‘max_depth’: 10, ‘criterion’: ‘gini’, ‘bootstrap’: True}

start_time = time.time()

rfc = rfc_search.best_estimator_

train_pred_rfc,test_pred_rfc,acc_rfc,acc_cv_rfc,probs_rfc=fit_ml_algo(rfc,X_train,y_train,X_test,10)

rfc_time = time.time() - start_time

print('Accuracy: %s' % acc_rfc)

print('Accuracy CV 10-Fold: %s' % acc_cv_rfc)

print('Running Time: %s' % datetime.timedelta(seconds = rfc_time))

Accuracy: 85.98

Accuracy CV 10-Fold: 86.28

Running Time: 0:00:06.261358

- 10.7.2 GridSearchCV

from sklearn.model_selection import GridSearchCV

param_grid = {'n_estimators':[50,200], 'min_samples_split':[2,3,4],

'min_samples_leaf':[4,5,8], 'max_features':[6,8,10], 'max_depth':[5,10,50],

'criterion':['gini','entropy'], 'bootstrap':[True,False]}

rf = RandomForestClassifier()

grid_search = GridSearchCV(estimator=rf, param_grid=param_grid, cv=3, n_jobs=-1)

start_time = time.time()

grid_search.fit(X_train, y_train)

print('GridSearchCV took %.2f seconds for RandomForestClassifier.' % (time.time()-start_time))

grid_search.best_params_

GridSearchCV took 1745.90 seconds for RandomForestClassifier.

{‘bootstrap’: True, ‘criterion’: ‘gini’, ‘max_depth’: 10, ‘max_features’: 10, ‘min_samples_leaf’: 5, ‘min_samples_split’: 3, ‘n_estimators’: 200}

start_time = time.time()

rf = grid_search.best_estimator_

train_pred_rf,test_pred_rf,acc_rf,acc_cv_rf,probs_rf=fit_ml_algo(rf,X_train,y_train,X_test,10)

rf_time = time.time() - start_time

print('Accuracy: %s' % acc_rf)

print('Accuracy CV 10-Fold: %s' % acc_cv_rf)

print('Running Time: %s' % datetime.timedelta(seconds = rf_time))

print(metrics.classification_report(y_train, train_pred_rf))

print(metrics.classification_report(y_test, test_pred_rf))

plot_roc_curve(y_test, probs_rf)

plot_roc_curve(y_test, test_pred_rf)

Accuracy: 86.13

Accuracy CV 10-Fold: 86.38

Running Time: 0:00:30.193727

-

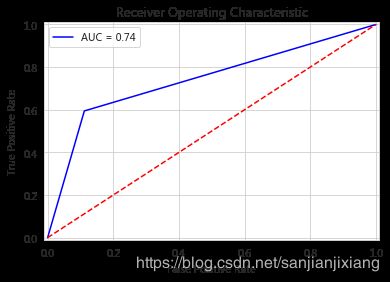

10.8 Gradient Boosting Trees

start_time = time.time()

train_pred_gbt,test_pred_gbt,acc_gbt,acc_cv_gbt,probs_gbt=fit_ml_algo(GradientBoostingClassifier(),

X_train,y_train,X_test,10)

gbt_time = time.time()

print('Accuracy: %s' % acc_gbt)

print('Accuracy CV 10-Fold: %s' % acc_cv_gbt)

print('Running Time: %s' % datetime.timedelta(seconds=gbt_time))

print(metrics.classification_report(y_train, train_pred_gbt))

print(metrics.classification_report(y_test, test_pred_gbt))

plot_roc_curve(y_test, probs_gbt)

plot_roc_curve(y_test, test_pred_gbt)

Accuracy: 86.19

Accuracy CV 10-Fold: 86.58

Running Time: 18239 days, 12:44:33.938080

终: Ranking Results

Let’s rank the results for all the algorithms we have used

models = pd.DataFrame({'Model':['KNN', 'Logistic Regression', 'Random Forest',

'Naive Bayes', 'Linear SVC', 'Decision Tree',

'Stochastic Gradient Descent', 'Gradient Boosting Trees'],

'Score':[acc_knn,acc_log,acc_rf,acc_gau,acc_svc,acc_dtc,acc_sgd,acc_gbt]})

models.sort_values(by='Score', ascending=False)

model_cv=pd.DataFrame({'Model':['KNN', 'Logistic Regression', 'Random Forest',

'Naive Bayes', 'Linear SVC', 'Decision Tree',

'Stochastic Gradient Descent', 'Gradient Boosting Trees'],

'Score':[acc_cv_knn, acc_cv_log, acc_cv_rf, acc_cv_gau,

acc_cv_svc, acc_cv_dtc, acc_cv_sgd, acc_cv_gbt]})

model_cv.sort_values(by = 'Score', ascending = False)

plt.figure(figsize = (10,10))

models = ['KNN','Logistic Regression', 'Random Forest', 'Naive Bayes', 'Decision Tree', 'Gradient Boosting Trees']

probs = [probs_knn, probs_log, probs_rf, probs_gau, probs_dtc, probs_gbt]

colors = ['blue', 'green', 'red', 'yellow', 'purple', 'gray'] #violet

plt.plot([0,1],[0,1], 'r--')

plt.title('Receiver Operating Characteristic')

plt.xlim([-0.01,1.01])

plt.ylim([-0.01,1.01])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

def plot_roc_curves(y_test, prob, model):

fpr, tpr, threshold = metrics.roc_curve(y_test, prob)

roc_auc = metrics.auc(fpr, tpr)

plt.plot(fpr, tpr, 'b', label = model + ' AUC = %.2f' % roc_auc, color=colors[i])

plt.legend(loc = 'lower right')

for i,model in list(enumerate(models)):

plot_roc_curves(y_test, probs[i], models[i])

plt.show()