Understanding How HBase Stores Data

This post analyzes a single put operation from two angles, macro and micro.

Macro view

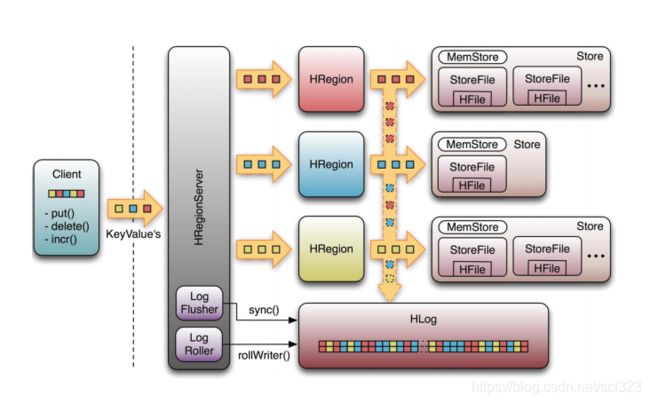

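After a put is submitted, the data is recorded in the WAL (Write Ahead Log) and placed in the MemStore. The source code in the micro view shows the exact order: WAL append, MemStore write, then WAL sync. HBase does not write each put straight to a data file; it buffers writes in the MemStore and flushes the MemStore to a StoreFile once the buffer reaches a certain size. When the number of StoreFiles grows past a threshold, a Compact operation is triggered, which merges multiple StoreFiles into one StoreFile.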
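
The buffer, flush, and compact cycle described above can be sketched with a toy model. This is not HBase code: `ToyStore`, its two thresholds (counted in entries and in files rather than in bytes), and all method names are assumptions made for illustration, and the WAL is left out entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Toy model of one store: a MemStore plus sorted StoreFiles. Not HBase code. */
class ToyStore {
    private final int flushThreshold;    // hypothetical: entries in the MemStore that trigger a flush
    private final int compactThreshold;  // hypothetical: StoreFile count that triggers a compaction
    private TreeMap<String, String> memStore = new TreeMap<>();
    private final List<TreeMap<String, String>> storeFiles = new ArrayList<>(); // oldest first

    ToyStore(int flushThreshold, int compactThreshold) {
        this.flushThreshold = flushThreshold;
        this.compactThreshold = compactThreshold;
    }

    void put(String key, String value) {
        memStore.put(key, value);                  // writes are buffered in memory first
        if (memStore.size() >= flushThreshold) {
            flush();
        }
    }

    private void flush() {
        storeFiles.add(memStore);                  // the full MemStore becomes a new StoreFile
        memStore = new TreeMap<>();
        if (storeFiles.size() >= compactThreshold) {
            compact();
        }
    }

    private void compact() {
        TreeMap<String, String> merged = new TreeMap<>();
        for (TreeMap<String, String> file : storeFiles) {
            merged.putAll(file);                   // newer files overwrite older values
        }
        storeFiles.clear();
        storeFiles.add(merged);
    }

    String get(String key) {
        if (memStore.containsKey(key)) {
            return memStore.get(key);
        }
        for (int i = storeFiles.size() - 1; i >= 0; i--) {   // newest StoreFile first
            String value = storeFiles.get(i).get(key);
            if (value != null) {
                return value;
            }
        }
        return null;
    }

    int storeFileCount() { return storeFiles.size(); }

    int memStoreSize() { return memStore.size(); }
}
```

Reads check the MemStore first and then the StoreFiles from newest to oldest, which is why fewer StoreFiles after a compaction means fewer places to look.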

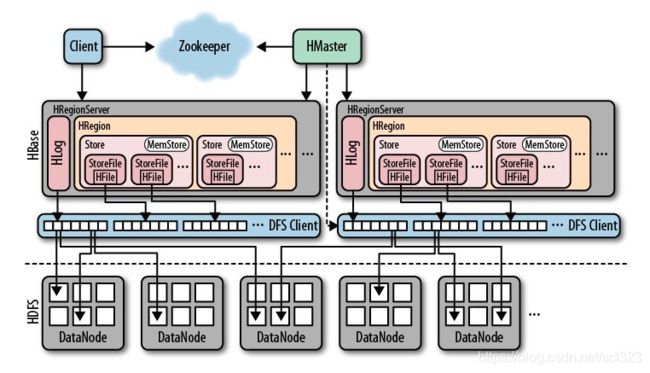

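- Each RegionServer node runs one HRegionServer.

- An HRegionServer contains one HLog and multiple HRegions (each HRegion corresponds to one Region of a Table).

- An HRegion contains multiple HStores.

- An HStore contains one MemStore and multiple StoreFiles (each HStore corresponds to one column family, cf, of the Table).

- When the number of StoreFiles in an HStore grows past a threshold, a Compact operation is triggered, which merges multiple StoreFiles into one StoreFile.

- When a StoreFile grows past a size threshold, a Split operation is triggered and the current Region is split into 2 Regions. The parent Region goes offline, and HMaster assigns the 2 daughter Regions to HRegionServers, so the load of the original Region is spread across 2 Regions.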
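
The Split step in the last bullet can be illustrated with a small sketch. It assumes a Region is just a sorted map over the key range [startKey, endKey) and that the split point is the middle row key. `ToyRegion` and its methods are hypothetical names; how real HBase picks the split point is more involved and is not shown here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Toy model of a Region as a sorted key range [startKey, endKey). Not HBase code. */
class ToyRegion {
    final String startKey;   // inclusive; "" stands for "from the first key"
    final String endKey;     // exclusive; null stands for "up to the last key"
    final TreeMap<String, String> rows = new TreeMap<>();

    ToyRegion(String startKey, String endKey) {
        this.startKey = startKey;
        this.endKey = endKey;
    }

    boolean contains(String rowKey) {
        return rowKey.compareTo(startKey) >= 0
            && (endKey == null || rowKey.compareTo(endKey) < 0);
    }

    /** Splits at the middle row key (an assumption) and returns the two daughter regions. */
    List<ToyRegion> split() {
        if (rows.size() < 2) {
            throw new IllegalStateException("need at least 2 rows to split");
        }
        List<String> keys = new ArrayList<>(rows.keySet());
        String splitKey = keys.get(keys.size() / 2);
        ToyRegion left = new ToyRegion(startKey, splitKey);
        ToyRegion right = new ToyRegion(splitKey, endKey);
        left.rows.putAll(rows.headMap(splitKey, false));   // keys strictly below the split key
        right.rows.putAll(rows.tailMap(splitKey, true));   // the split key and everything above
        List<ToyRegion> daughters = new ArrayList<>();
        daughters.add(left);
        daughters.add(right);
        return daughters;
    }
}
```

The two daughters cover the parent's key range without overlap, so every row key still maps to exactly one Region after the split.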

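Micro view

The micro view is about how an HRegion actually stores the data, and for that we have to read the source code.

First, locate the method in the source: org.apache.hadoop.hbase.regionserver.HRegion#doMiniBatchMutation

As you can see, the developers documented every step with a comment, and I have added my own analysis under some of the steps.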

```java
private long doMiniBatchMutation(BatchOperationInProgress<?> batchOp) throws IOException {
  boolean isInReplay = batchOp.isInReplay();
  // variable to note if all Put items are for the same CF -- metrics related
  boolean putsCfSetConsistent = true;
  //The set of columnFamilies first seen for Put.
  Set<byte[]> putsCfSet = null;
  // variable to note if all Delete items are for the same CF -- metrics related
  boolean deletesCfSetConsistent = true;
  //The set of columnFamilies first seen for Delete.
  Set<byte[]> deletesCfSet = null;

  long currentNonceGroup = HConstants.NO_NONCE, currentNonce = HConstants.NO_NONCE;
  WALEdit walEdit = new WALEdit(isInReplay);
  MultiVersionConcurrencyControl.WriteEntry writeEntry = null;
  long txid = 0;
  boolean doRollBackMemstore = false;
  boolean locked = false;

  /** Keep track of the locks we hold so we can release them in finally clause */
  List<RowLock> acquiredRowLocks = Lists.newArrayListWithCapacity(batchOp.operations.length);
  // reference family maps directly so coprocessors can mutate them if desired
  Map<byte[], List<Cell>>[] familyMaps = new Map[batchOp.operations.length];
  // We try to set up a batch in the range [firstIndex,lastIndexExclusive)
  int firstIndex = batchOp.nextIndexToProcess;
  int lastIndexExclusive = firstIndex;
  boolean success = false;
  int noOfPuts = 0, noOfDeletes = 0;
  WALKey walKey = null;
  long mvccNum = 0;
  try {
    // ------------------------------------
    // STEP 1. Try to acquire as many locks as we can, and ensure
    // we acquire at least one.
    // Analysis: the locks involved are the row locks and the Region's shared update lock
    // (updatesLock.readLock(), taken just before STEP 3).
    // HBase uses row locks to make updates to the same row mutually exclusive, which
    // guarantees that an update is atomic: it either succeeds or fails as a whole.
    // ----------------------------------
    int numReadyToWrite = 0;
    long now = EnvironmentEdgeManager.currentTime();
    while (lastIndexExclusive < batchOp.operations.length) {
      Mutation mutation = batchOp.getMutation(lastIndexExclusive);
      boolean isPutMutation = mutation instanceof Put;

      Map<byte[], List<Cell>> familyMap = mutation.getFamilyCellMap();
      // store the family map reference to allow for mutations
      familyMaps[lastIndexExclusive] = familyMap;

      // skip anything that "ran" already
      if (batchOp.retCodeDetails[lastIndexExclusive].getOperationStatusCode()
          != OperationStatusCode.NOT_RUN) {
        lastIndexExclusive++;
        continue;
      }

      try {
        if (isPutMutation) {
          // Check the families in the put. If bad, skip this one.
          if (isInReplay) {
            removeNonExistentColumnFamilyForReplay(familyMap);
          } else {
            checkFamilies(familyMap.keySet());
          }
          checkTimestamps(mutation.getFamilyCellMap(), now);
        } else {
          prepareDelete((Delete) mutation);
        }
        checkRow(mutation.getRow(), "doMiniBatchMutation");
      } catch (NoSuchColumnFamilyException nscf) {
        LOG.warn("No such column family in batch mutation", nscf);
        batchOp.retCodeDetails[lastIndexExclusive] = new OperationStatus(
            OperationStatusCode.BAD_FAMILY, nscf.getMessage());
        lastIndexExclusive++;
        continue;
      } catch (FailedSanityCheckException fsce) {
        LOG.warn("Batch Mutation did not pass sanity check", fsce);
        batchOp.retCodeDetails[lastIndexExclusive] = new OperationStatus(
            OperationStatusCode.SANITY_CHECK_FAILURE, fsce.getMessage());
        lastIndexExclusive++;
        continue;
      } catch (WrongRegionException we) {
        LOG.warn("Batch mutation had a row that does not belong to this region", we);
        batchOp.retCodeDetails[lastIndexExclusive] = new OperationStatus(
            OperationStatusCode.SANITY_CHECK_FAILURE, we.getMessage());
        lastIndexExclusive++;
        continue;
      }

      // If we haven't got any rows in our batch, we should block to
      // get the next one.
      RowLock rowLock = null;
      try {
        rowLock = getRowLock(mutation.getRow(), true);
      } catch (IOException ioe) {
        LOG.warn("Failed getting lock in batch put, row="
            + Bytes.toStringBinary(mutation.getRow()), ioe);
      }
      if (rowLock == null) {
        // We failed to grab another lock
        break; // stop acquiring more rows for this batch
      } else {
        acquiredRowLocks.add(rowLock);
      }

      lastIndexExclusive++;
      numReadyToWrite++;

      if (isPutMutation) {
        // If Column Families stay consistent through out all of the
        // individual puts then metrics can be reported as a mutliput across
        // column families in the first put.
        if (putsCfSet == null) {
          putsCfSet = mutation.getFamilyCellMap().keySet();
        } else {
          putsCfSetConsistent = putsCfSetConsistent
              && mutation.getFamilyCellMap().keySet().equals(putsCfSet);
        }
      } else {
        if (deletesCfSet == null) {
          deletesCfSet = mutation.getFamilyCellMap().keySet();
        } else {
          deletesCfSetConsistent = deletesCfSetConsistent
              && mutation.getFamilyCellMap().keySet().equals(deletesCfSet);
        }
      }
    }

    // we should record the timestamp only after we have acquired the rowLock,
    // otherwise, newer puts/deletes are not guaranteed to have a newer timestamp
    now = EnvironmentEdgeManager.currentTime();
    byte[] byteNow = Bytes.toBytes(now);

    // Nothing to put/delete -- an exception in the above such as NoSuchColumnFamily?
    if (numReadyToWrite <= 0) return 0L;

    // We've now grabbed as many mutations off the list as we can

    // ------------------------------------
    // STEP 2. Update any LATEST_TIMESTAMP timestamps
    // Analysis: the current time was re-read right after the row locks were acquired
    // (see `now` above); it is used here to stamp the new puts/deletes.
    // ----------------------------------
    for (int i = firstIndex; !isInReplay && i < lastIndexExclusive; i++) {
      // skip invalid
      if (batchOp.retCodeDetails[i].getOperationStatusCode()
          != OperationStatusCode.NOT_RUN) continue;

      Mutation mutation = batchOp.getMutation(i);
      if (mutation instanceof Put) {
        updateCellTimestamps(familyMaps[i].values(), byteNow);
        noOfPuts++;
      } else {
        prepareDeleteTimestamps(mutation, familyMaps[i], byteNow);
        noOfDeletes++;
      }
      rewriteCellTags(familyMaps[i], mutation);
    }

    lock(this.updatesLock.readLock(), numReadyToWrite);
    locked = true;

    // calling the pre CP hook for batch mutation
    if (!isInReplay && coprocessorHost != null) {
      MiniBatchOperationInProgress<Mutation> miniBatchOp =
          new MiniBatchOperationInProgress<Mutation>(batchOp.getMutationsForCoprocs(),
          batchOp.retCodeDetails, batchOp.walEditsFromCoprocessors, firstIndex, lastIndexExclusive);
      if (coprocessorHost.preBatchMutate(miniBatchOp)) return 0L;
    }

    // ------------------------------------
    // STEP 3. Build WAL edit
    // Analysis: in the normal (non-replay) path nothing is appended to the WAL yet;
    // this step only assembles the WALEdit.
    // ----------------------------------
    Durability durability = Durability.USE_DEFAULT;
    for (int i = firstIndex; i < lastIndexExclusive; i++) {
      // Skip puts that were determined to be invalid during preprocessing
      if (batchOp.retCodeDetails[i].getOperationStatusCode() != OperationStatusCode.NOT_RUN) {
        continue;
      }

      Mutation m = batchOp.getMutation(i);
      Durability tmpDur = getEffectiveDurability(m.getDurability());
      if (tmpDur.ordinal() > durability.ordinal()) {
        durability = tmpDur;
      }
      if (tmpDur == Durability.SKIP_WAL) {
        recordMutationWithoutWal(m.getFamilyCellMap());
        continue;
      }

      long nonceGroup = batchOp.getNonceGroup(i), nonce = batchOp.getNonce(i);
      // In replay, the batch may contain multiple nonces. If so, write WALEdit for each.
      // Given how nonces are originally written, these should be contiguous.
      // They don't have to be, it will still work, just write more WALEdits than needed.
      if (nonceGroup != currentNonceGroup || nonce != currentNonce) {
        if (walEdit.size() > 0) {
          assert isInReplay;
          if (!isInReplay) {
            throw new IOException("Multiple nonces per batch and not in replay");
          }
          // txid should always increase, so having the one from the last call is ok.
          // we use HLogKey here instead of WALKey directly to support legacy coprocessors.
          walKey = new ReplayHLogKey(this.getRegionInfo().getEncodedNameAsBytes(),
              this.htableDescriptor.getTableName(), now, m.getClusterIds(),
              currentNonceGroup, currentNonce, mvcc);
          txid = this.wal.append(this.htableDescriptor, this.getRegionInfo(), walKey,
              walEdit, true);
          walEdit = new WALEdit(isInReplay);
          walKey = null;
        }
        currentNonceGroup = nonceGroup;
        currentNonce = nonce;
      }

      // Add WAL edits by CP
      WALEdit fromCP = batchOp.walEditsFromCoprocessors[i];
      if (fromCP != null) {
        for (Cell cell : fromCP.getCells()) {
          walEdit.add(cell);
        }
      }
      addFamilyMapToWALEdit(familyMaps[i], walEdit);
    }

    // -------------------------
    // STEP 4. Append the final edit to WAL. Do not sync wal.
    // -------------------------
    Mutation mutation = batchOp.getMutation(firstIndex);
    if (isInReplay) {
      // use wal key from the original
      walKey = new ReplayHLogKey(this.getRegionInfo().getEncodedNameAsBytes(),
          this.htableDescriptor.getTableName(), WALKey.NO_SEQUENCE_ID, now,
          mutation.getClusterIds(), currentNonceGroup, currentNonce, mvcc);
      long replaySeqId = batchOp.getReplaySequenceId();
      walKey.setOrigLogSeqNum(replaySeqId);
    }
    if (walEdit.size() > 0) {
      if (!isInReplay) {
        // we use HLogKey here instead of WALKey directly to support legacy coprocessors.
        walKey = new HLogKey(this.getRegionInfo().getEncodedNameAsBytes(),
            this.htableDescriptor.getTableName(), WALKey.NO_SEQUENCE_ID, now,
            mutation.getClusterIds(), currentNonceGroup, currentNonce, mvcc);
      }
      txid = this.wal.append(this.htableDescriptor, this.getRegionInfo(), walKey, walEdit, true);
    }

    // ------------------------------------
    // Acquire the latest mvcc number
    // ----------------------------------
    if (walKey == null) {
      // If this is a skip wal operation just get the read point from mvcc
      walKey = this.appendEmptyEdit(this.wal);
    }
    if (!isInReplay) {
      writeEntry = walKey.getWriteEntry();
      mvccNum = writeEntry.getWriteNumber();
    } else {
      mvccNum = batchOp.getReplaySequenceId();
    }

    // ------------------------------------
    // STEP 5. Write back to memstore
    // Write to memstore. It is ok to write to memstore
    // first without syncing the WAL because we do not roll
    // forward the memstore MVCC. The MVCC will be moved up when
    // the complete operation is done. These changes are not yet
    // visible to scanners till we update the MVCC. The MVCC is
    // moved only when the sync is complete.
    // Analysis: normally the WAL has to be durable before the MemStore is written,
    // to keep the write consistent. Here the edit has only been appended, not synced,
    // and MVCC covers the gap: the cells stay invisible until the sync completes.
    // ----------------------------------
    long addedSize = 0;
    for (int i = firstIndex; i < lastIndexExclusive; i++) {
      if (batchOp.retCodeDetails[i].getOperationStatusCode()
          != OperationStatusCode.NOT_RUN) {
        continue;
      }
      doRollBackMemstore = true; // If we have a failure, we need to clean what we wrote
      addedSize += applyFamilyMapToMemstore(familyMaps[i], mvccNum, isInReplay);
    }

    // -------------------------------
    // STEP 6. Release row locks, etc.
    // -------------------------------
    if (locked) {
      this.updatesLock.readLock().unlock();
      locked = false;
    }
    releaseRowLocks(acquiredRowLocks);

    // -------------------------
    // STEP 7. Sync wal.
    // -------------------------
    if (txid != 0) {
      syncOrDefer(txid, durability);
    }

    doRollBackMemstore = false;
    // calling the post CP hook for batch mutation
    if (!isInReplay && coprocessorHost != null) {
      MiniBatchOperationInProgress<Mutation> miniBatchOp =
          new MiniBatchOperationInProgress<Mutation>(batchOp.getMutationsForCoprocs(),
          batchOp.retCodeDetails, batchOp.walEditsFromCoprocessors, firstIndex, lastIndexExclusive);
      coprocessorHost.postBatchMutate(miniBatchOp);
    }

    // ------------------------------------------------------------------
    // STEP 8. Advance mvcc. This will make this put visible to scanners and getters.
    // ------------------------------------------------------------------
    if (writeEntry != null) {
      mvcc.completeAndWait(writeEntry);
      writeEntry = null;
    } else if (isInReplay) {
      // ensure that the sequence id of the region is at least as big as orig log seq id
      mvcc.advanceTo(mvccNum);
    }

    for (int i = firstIndex; i < lastIndexExclusive; i ++) {
      if (batchOp.retCodeDetails[i] == OperationStatus.NOT_RUN) {
        batchOp.retCodeDetails[i] = OperationStatus.SUCCESS;
      }
    }

    // ------------------------------------
    // STEP 9. Run coprocessor post hooks. This should be done after the wal is
    // synced so that the coprocessor contract is adhered to.
    // ------------------------------------
    if (!isInReplay && coprocessorHost != null) {
      for (int i = firstIndex; i < lastIndexExclusive; i++) {
        // only for successful puts
        if (batchOp.retCodeDetails[i].getOperationStatusCode()
            != OperationStatusCode.SUCCESS) {
          continue;
        }
        Mutation m = batchOp.getMutation(i);
        if (m instanceof Put) {
          coprocessorHost.postPut((Put) m, walEdit, m.getDurability());
        } else {
          coprocessorHost.postDelete((Delete) m, walEdit, m.getDurability());
        }
      }
    }

    success = true;
    return addedSize;
  } finally {
    // if the wal sync was unsuccessful, remove keys from memstore
    if (doRollBackMemstore) {
      for (int j = 0; j < familyMaps.length; j++) {
        for(List<Cell> cells:familyMaps[j].values()) {
          rollbackMemstore(cells);
        }
      }
      if (writeEntry != null) mvcc.complete(writeEntry);
    } else if (writeEntry != null) {
      mvcc.completeAndWait(writeEntry);
    }

    if (locked) {
      this.updatesLock.readLock().unlock();
    }
    releaseRowLocks(acquiredRowLocks);

    // See if the column families were consistent through the whole thing.
    // if they were then keep them. If they were not then pass a null.
    // null will be treated as unknown.
    // Total time taken might be involving Puts and Deletes.
    // Split the time for puts and deletes based on the total number of Puts and Deletes.

    if (noOfPuts > 0) {
      // There were some Puts in the batch.
      if (this.metricsRegion != null) {
        this.metricsRegion.updatePut();
      }
    }
    if (noOfDeletes > 0) {
      // There were some Deletes in the batch.
      if (this.metricsRegion != null) {
        this.metricsRegion.updateDelete();
      }
    }
    if (!success) {
      for (int i = firstIndex; i < lastIndexExclusive; i++) {
        if (batchOp.retCodeDetails[i].getOperationStatusCode() == OperationStatusCode.NOT_RUN) {
          batchOp.retCodeDetails[i] = OperationStatus.FAILURE;
        }
      }
    }
    if (coprocessorHost != null && !batchOp.isInReplay()) {
      // call the coprocessor hook to do any finalization steps
      // after the put is done
      MiniBatchOperationInProgress<Mutation> miniBatchOp =
          new MiniBatchOperationInProgress<Mutation>(batchOp.getMutationsForCoprocs(),
          batchOp.retCodeDetails, batchOp.walEditsFromCoprocessors, firstIndex,
          lastIndexExclusive);
      coprocessorHost.postBatchMutateIndispensably(miniBatchOp, success);
    }

    batchOp.nextIndexToProcess = lastIndexExclusive;
  }
}
```
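
STEP 5 and STEP 8 in the listing rely on MVCC: a write gets a write number, and readers only see cells whose number is at or below the read point. The sketch below shows that idea in isolation. `ToyMvcc` is a hypothetical class written for this post; it is not the real MultiVersionConcurrencyControl and only mirrors the begin / complete pattern visible in the listing.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy multi-version concurrency control: writes become visible in write-number order. */
class ToyMvcc {
    static final class WriteEntry {
        final long writeNumber;
        boolean completed;

        WriteEntry(long writeNumber) {
            this.writeNumber = writeNumber;
        }
    }

    private long writePoint = 0;  // highest write number handed out so far
    private long readPoint = 0;   // readers see cells with writeNumber <= readPoint
    private final Deque<WriteEntry> pending = new ArrayDeque<>();

    /** Starts a write transaction and hands out the next write number. */
    synchronized WriteEntry begin() {
        WriteEntry entry = new WriteEntry(++writePoint);
        pending.addLast(entry);
        return entry;
    }

    /** Marks a write as finished and advances the read point as far as possible. */
    synchronized void complete(WriteEntry entry) {
        entry.completed = true;
        // Only the leading run of completed writes may be published, so a finished
        // write never becomes visible before an earlier, still running one.
        while (!pending.isEmpty() && pending.peekFirst().completed) {
            readPoint = pending.removeFirst().writeNumber;
        }
    }

    synchronized long readPoint() {
        return readPoint;
    }

    synchronized boolean isVisible(long cellWriteNumber) {
        return cellWriteNumber <= readPoint;
    }
}
```

With this model, a cell written to the MemStore under write number n stays hidden from readers until `complete` has moved the read point to at least n, which is why the listing can write to the MemStore before the WAL sync finishes.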

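- (1) Acquire the row locks and the Region's shared update lock: HBase uses row locks to make updates to the same row mutually exclusive, which guarantees that an update is atomic: it either succeeds or fails as a whole.

- (2) Begin the write transaction: obtain a write number, which is used to implement MVCC. MVCC allows reads without locking, improving read performance while keeping reads and writes consistent.

- (3) Write to the MemStore cache: in HBase each column family corresponds to one store, which holds the data of that column family. Each store has a write cache, the MemStore, that buffers incoming writes. HBase does not write data straight to disk; it writes to the cache first and flushes everything together once the cache reaches a certain size.

- (4) Append to the HLog: HBase relies on the WAL mechanism for data reliability, that is, the log is written before the cache, so that after a crash the original data can be recovered by replaying the HLog. In this step the data is wrapped in a WALEdit object and appended sequentially to the HLog; no sync is performed at this point. In the doMiniBatchMutation listing above, this append (STEP 4) comes before the MemStore write (STEP 5). Version 0.98 introduced a new writer-thread model for HLog writes that greatly improves overall update performance; the details are covered in the next chapter.

- (5) Release the row locks and the shared lock.

- (6) Sync the HLog: the HLog is actually synced to HDFS. The sync runs after the row locks are released in order to keep lock hold time as short as possible and improve write performance. If the sync fails, a rollback removes the data already written to the MemStore.

- (7) End the write transaction: only now does this thread's update become visible to other read requests and actually take effect.

- (8) Flush the MemStore: when the write cache reaches the flush threshold (64 MB in this description; the value is set by hbase.hregion.memstore.flush.size, and later versions default to 128 MB), a flush thread writes the data to disk. The flush involves the HFile structures, which will be covered in detail later.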
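
The ordering of the steps above, and the rollback when the sync fails, can be condensed into a small single-threaded sketch. It follows the order of the doMiniBatchMutation listing (append, MemStore write, unlock, sync, publish). Everything here is an assumption made for illustration: `ToyWritePath`, `ToyLog`, and `InMemoryLog` are hypothetical names, one lock stands in for all row locks, and a second map stands in for MVCC visibility.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

/** Toy write path: append, write cache, unlock, sync, then publish or roll back. */
class ToyWritePath {
    /** Hypothetical stand-in for the WAL. */
    interface ToyLog {
        long append(String edit);               // returns a transaction id, does not sync
        void sync(long txid) throws Exception;  // makes edits up to txid durable, may fail
    }

    /** In-memory log whose sync can be made to fail, to exercise the rollback. */
    static final class InMemoryLog implements ToyLog {
        final List<String> edits = new ArrayList<>();
        boolean failSync = false;

        public long append(String edit) {
            edits.add(edit);
            return edits.size();
        }

        public void sync(long txid) throws Exception {
            if (failSync) {
                throw new Exception("sync failed for txid " + txid);
            }
        }
    }

    private final ToyLog log;
    private final ReentrantLock rowLock = new ReentrantLock();     // one lock for all rows
    private final Map<String, String> memStore = new HashMap<>();  // written, maybe unpublished
    private final Map<String, String> visible = new HashMap<>();   // what readers can see

    ToyWritePath(ToyLog log) {
        this.log = log;
    }

    boolean put(String row, String value) {
        boolean hadPrevious;
        String previous;
        long txid;
        rowLock.lock();                              // (1) take the lock
        try {
            hadPrevious = memStore.containsKey(row);
            previous = memStore.get(row);
            txid = log.append(row + "=" + value);    // append to the log, no sync yet
            memStore.put(row, value);                // write the cache
        } finally {
            rowLock.unlock();                        // release before the slow sync
        }
        try {
            log.sync(txid);                          // sync outside the lock
        } catch (Exception e) {
            // Sync failed: undo the cache write so the unsynced value is never served.
            if (hadPrevious) {
                memStore.put(row, previous);
            } else {
                memStore.remove(row);
            }
            return false;
        }
        visible.put(row, value);                     // end of the write: publish to readers
        return true;
    }

    String get(String row) {
        return visible.get(row);
    }

    String memStoreValue(String row) {
        return memStore.get(row);
    }
}
```

Keeping the sync outside the locked section is the point made in step (6): the slow part of the write does not block other writers of the same row.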