Notes on the Simple Baselines human pose (keypoint) detection pipeline

0. Background

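Runner-up in the 2018 COCO challenge. The pipeline is very simple: a backbone network (ResNet in the official repository) followed by a few extra deconvolution layers that output one heatmap per joint. The goal here is mainly to understand how the pipeline is built and to learn how the code is written. https://github.com/microsoft/human-pose-estimation.pytorch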

1. Related knowledge

1.1 Number of keypoints

There are 17 keypoints, listed below. The network's final output therefore has shape B × 17 × Heatmap_H × Heatmap_W.

```
"keypoints": {
    0: "nose",
    1: "left_eye",
    2: "right_eye",
    3: "left_ear",
    4: "right_ear",
    5: "left_shoulder",
    6: "right_shoulder",
    7: "left_elbow",
    8: "right_elbow",
    9: "left_wrist",
    10: "right_wrist",
    11: "left_hip",
    12: "right_hip",
    13: "left_knee",
    14: "right_knee",
    15: "left_ankle",
    16: "right_ankle"
},
"skeleton": [
    [16,14],[14,12],[17,15],[15,13],[12,13],[6,12],[7,13],[6,7],[6,8],
    [7,9],[8,10],[9,11],[2,3],[1,2],[1,3],[2,4],[3,5],[4,6],[5,7]
]
```

The keypoint ids above start at 0. The skeleton pairs start at 1, as they are stored in the COCO annotation file. For example, [16,14] connects left_ankle (15) and left_knee (13).

1.2 Network structure
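The deconvolution head below turns the 1/32 backbone feature map into a 1/4 heatmap. You can check the spatial sizes with PyTorch's `ConvTranspose2d` output-size formula. Below is a minimal sketch. It assumes the default head as I understand it: 3 deconv layers with 256 filters, kernel 4, stride 2, padding 1 and output_padding 0, a stride-32 backbone, and a 256×192 input. The helper names are hypothetical.

```python
def deconv_out(size, kernel=4, stride=2, padding=1, output_padding=0, dilation=1):
    """Output length of nn.ConvTranspose2d along one spatial axis (PyTorch's formula)."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def head_shapes(input_hw=(256, 192), backbone_stride=32, num_deconv=3, num_joints=17):
    """Trace (name, channels, H, W) through backbone -> deconv head -> final conv.

    Assumption: 256 filters per deconv layer, kernel 4 / padding 1 (the default config).
    """
    h, w = input_hw[0] // backbone_stride, input_hw[1] // backbone_stride
    # backbone channels depend on the network, e.g. 1280 for MobileNetV2
    shapes = [("backbone", None, h, w)]
    for i in range(num_deconv):
        h, w = deconv_out(h), deconv_out(w)  # each layer doubles H and W
        shapes.append((f"deconv{i + 1}", 256, h, w))
    shapes.append(("final_layer", num_joints, h, w))  # 1x1 (or padded 3x3) conv keeps H, W
    return shapes


for name, channels, h, w in head_shapes():
    print(name, channels, h, w)
```

Each deconv layer doubles the resolution, so the sizes go 8×6 → 16×12 → 32×24 → 64×48. Three layers take the map from 1/32 to 1/4 of the input.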

```python
self.features = nn.Sequential(*features)
# Originally VGG16 (224 -> 7, i.e. 1/32 of the input); replaced with MobileNetV2, output 7*7*1280

self.deconv_layers = self._make_deconv_layer(
    extra.NUM_DECONV_LAYERS,
    extra.NUM_DECONV_FILTERS,
    extra.NUM_DECONV_KERNELS,
)  # 1/32 --> 1/4, channels = 256

self.final_layer = nn.Conv2d(
    in_channels=extra.NUM_DECONV_FILTERS[-1],
    out_channels=cfg.MODEL.NUM_JOINTS,
    kernel_size=extra.FINAL_CONV_KERNEL,
    stride=1,
    padding=1 if extra.FINAL_CONV_KERNEL == 3 else 0
)  # stays at 1/4, channels 256 --> 17. Default input 3*256*192, output 17*64*48
```

Partial weight initialization

```python
def init_weights(self, pretrained=''):
    if os.path.isfile(pretrained):
        logger.info('=> init deconv weights from normal distribution')
        for name, m in self.deconv_layers.named_modules():
            if isinstance(m, nn.ConvTranspose2d):
                logger.info('=> init {}.weight as normal(0, 0.001)'.format(name))
                logger.info('=> init {}.bias as 0'.format(name))
                nn.init.normal_(m.weight, std=0.001)
                if self.deconv_with_bias:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                logger.info('=> init {}.weight as 1'.format(name))
                logger.info('=> init {}.bias as 0'.format(name))
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        logger.info('=> init final conv weights from normal distribution')
        # named_modules() so that `name` refers to this layer, not the last deconv module
        for name, m in self.final_layer.named_modules():
            if isinstance(m, nn.Conv2d):
                # nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                logger.info('=> init {}.weight as normal(0, 0.001)'.format(name))
                logger.info('=> init {}.bias as 0'.format(name))
                nn.init.normal_(m.weight, std=0.001)
                nn.init.constant_(m.bias, 0)

        # pretrained_state_dict = torch.load(pretrained)
        logger.info('=> loading pretrained model {}'.format(pretrained))
        # self.load_state_dict(pretrained_state_dict, strict=False)
        checkpoint = torch.load(pretrained)
        if isinstance(checkpoint, OrderedDict):
            state_dict = checkpoint
        elif isinstance(checkpoint, dict) and 'state_dict' in checkpoint:
            state_dict_old = checkpoint['state_dict']
            state_dict = OrderedDict()
            # delete 'module.' because it is saved from DataParallel module
            for key in state_dict_old.keys():
                if key.startswith('module.'):
                    # Weights saved from a multi-GPU (DataParallel) model carry an extra
                    # 'module.' prefix. Two other ways to handle it:
                    #   1. save with model.module.state_dict() instead of model.state_dict();
                    #   2. wrap first, then load:
                    #      model = torch.nn.DataParallel(model, device_ids=gpus).cuda()
                    #      model.load_state_dict(state_dict)
                    state_dict[key[7:]] = state_dict_old[key]
                else:
                    state_dict[key] = state_dict_old[key]
        else:
            raise RuntimeError(
                'No state_dict found in checkpoint file {}'.format(pretrained))
        # built-in nn.Module method; strict=False skips keys found on only one side
        # (shape mismatches still raise an error)
        self.load_state_dict(state_dict, strict=False)
    else:
        logger.error('=> imagenet pretrained model does not exist')
        logger.error('=> please download it first')
        raise ValueError('imagenet pretrained model does not exist')
```
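The `module.` handling above can be factored into a small helper. Below is a minimal sketch that uses only `collections.OrderedDict`. The function name is hypothetical, and plain integers stand in for tensors so the example runs without PyTorch.

```python
from collections import OrderedDict


def strip_module_prefix(state_dict, prefix="module."):
    """Return a copy of state_dict with a leading DataParallel prefix removed from each key.

    Keys without the prefix are copied unchanged, like the loop in init_weights.
    """
    cleaned = OrderedDict()
    for key, value in state_dict.items():
        new_key = key[len(prefix):] if key.startswith(prefix) else key
        cleaned[new_key] = value
    return cleaned


# Hypothetical keys; real values would be tensors
saved = OrderedDict([("module.features.0.weight", 1),
                     ("module.final_layer.bias", 2),
                     ("epoch_note", 3)])
print(list(strip_module_prefix(saved)))
# ['features.0.weight', 'final_layer.bias', 'epoch_note']
```

With PyTorch, pass the cleaned dict to `model.load_state_dict(...)` on the unwrapped model.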

1.3 Folder layout

The important parts:

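```
--core
    --__init__.py
    --config.py         # stores the configuration in an EasyDict
    --evaluate.py       # computes PCK@0.5, i.e. each joint's accuracy over one batch
    --function.py       # defines train() and validate()
    --inference.py      # get_max_preds(): input b*17*H*W heatmaps (e.g. b*17*64*48), output b*17*2 peak coordinates (some are 0) and b*17*1 scores
    --loss.py           # loss class; forward() computes the L2 (MSE) loss and returns a scalar
--dataset
    --__init__.py
    --coco.py           # custom COCO dataset class, subclass of JointsDataset; keeps the GT inside itself and also has the evaluation function that computes AP. The most important file
    --JointsDataset.py  # defines __getitem__
--model
    --pose.py           # defines the network
--utils
    --transforms.py     # data augmentation: flip, affine transform, etc.
    --vis.py            # visualization
    --utils.py          # logger, optimizer and other helpers
```

2. Data loading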

2.1 The COCO annotation file

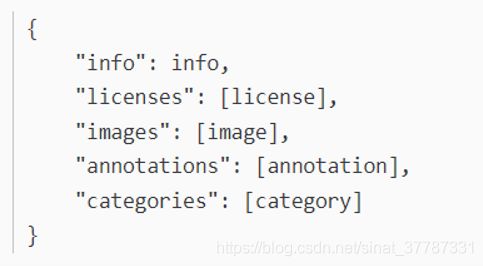

All COCO annotation types share the info, images and licenses sections. The annotations section is the one with the most fields.

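The images array and the annotations array have different lengths: there are more annotations than images. Each object in each image has its own annotation with its own id, plus an image_id that points to the image it belongs to. categories contains only one class: person.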

annotation:
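For the keypoint task, each annotation stores its 17 keypoints as one flat list [x1, y1, v1, ..., x17, y17, v17]. Here v = 0 means not labeled, 1 means labeled but not visible, and 2 means labeled and visible. The annotation also has a bbox [x, y, w, h].

Below is a minimal sketch of turning one entry into the `joints_3d` / `joints_3d_vis` arrays used later. It assumes the convention suggested by the comments in `__getitem__`: z is 0, visibility is clipped to 0/1, and the third visibility column is 0. The annotation values are made up and the function name is hypothetical.

```python
import numpy as np


def annotation_to_joints(ann, num_joints=17):
    """Convert one COCO keypoint annotation into (joints_3d, joints_3d_vis), both num_joints x 3."""
    kpts = np.asarray(ann["keypoints"], dtype=np.float32).reshape(num_joints, 3)
    joints_3d = np.zeros((num_joints, 3), dtype=np.float32)
    joints_3d_vis = np.zeros((num_joints, 3), dtype=np.float32)
    joints_3d[:, :2] = kpts[:, :2]     # x, y; z stays 0
    vis = np.minimum(kpts[:, 2], 1)    # v=1 and v=2 both become 1
    joints_3d_vis[:, 0] = vis
    joints_3d_vis[:, 1] = vis          # third column stays 0
    return joints_3d, joints_3d_vis


# Made-up annotation: only nose (v=2) and left_eye (v=1) are labeled
ann = {
    "id": 1, "image_id": 42, "category_id": 1,
    "bbox": [10.0, 20.0, 100.0, 200.0],
    "num_keypoints": 2,
    "keypoints": [60, 40, 2, 65, 35, 1] + [0, 0, 0] * 15,
}
joints, joints_vis = annotation_to_joints(ann)
print(joints[:2], joints_vis[:3])
```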

2.2 Custom COCO dataset class
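`__getitem__` below ends by calling `self.generate_target(joints, joints_vis)`. That call turns each joint into a Gaussian heatmap plus a 0/1 weight.

Below is a simplified NumPy sketch of the idea, not the repository code. It assumes a 192×256 (w×h) input, a 48×64 heatmap and sigma = 2. It evaluates the Gaussian over the whole heatmap instead of a 3σ window, and it drops any joint whose centre falls outside the heatmap. The function name is hypothetical.

```python
import numpy as np


def generate_gaussian_target(joints, joints_vis, image_size=(192, 256),
                             heatmap_size=(48, 64), sigma=2.0):
    """Build (target, target_weight) for one person.

    joints: num_joints x 3 array of (x, y, 0) in input pixels; sizes are (width, height).
    Simplification: the Gaussian covers the whole heatmap, not a 3*sigma window.
    """
    num_joints = joints.shape[0]
    w, h = heatmap_size
    stride_x = image_size[0] / heatmap_size[0]
    stride_y = image_size[1] / heatmap_size[1]
    target = np.zeros((num_joints, h, w), dtype=np.float32)
    target_weight = joints_vis[:, :1].astype(np.float32)  # num_joints x 1
    xs = np.arange(w, dtype=np.float32)[None, :]
    ys = np.arange(h, dtype=np.float32)[:, None]
    for j in range(num_joints):
        mu_x = int(joints[j, 0] / stride_x + 0.5)  # nearest heatmap cell
        mu_y = int(joints[j, 1] / stride_y + 0.5)
        if target_weight[j, 0] == 0 or not (0 <= mu_x < w and 0 <= mu_y < h):
            target_weight[j, 0] = 0  # invisible or outside the heatmap: no supervision
            continue
        # unnormalized Gaussian, so the peak value is exactly 1
        target[j] = np.exp(-((xs - mu_x) ** 2 + (ys - mu_y) ** 2) / (2 * sigma ** 2))
    return target, target_weight


joints = np.zeros((17, 3), dtype=np.float32)
joints_vis = np.zeros((17, 3), dtype=np.float32)
joints[0, :2] = [96, 128]   # nose at the centre of the 192 x 256 crop
joints_vis[0, :2] = 1
joints[1, :2] = [500, 10]   # labeled, but outside the crop
joints_vis[1, :2] = 1
target, target_weight = generate_gaussian_target(joints, joints_vis)
print(target.shape, target_weight[:3, 0])  # (17, 64, 48) [1. 0. 0.]
```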

```python
self.image_set_index = self._load_image_set_index()  # e.g. [1122, 1212, 12121, ...]: int ids of all images in the train or val set
self.db = self._get_db()  # [{}, {}, ...]: one fairly complete dict per person, with keypoints (x, y, visibility), center, etc.
```
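The `center` and `scale` in each db record come from the person's bounding box. Below is a minimal sketch of that conversion, modeled on how I understand the repository's box-to-center/scale helper. First the box is stretched to the input aspect ratio (width / height). Then it is expressed in units of pixel_std = 200 and enlarged by 1.25.

Treat these constants and the function name as assumptions. In this sketch, `scale` is (w, h) / 200.

```python
import numpy as np


def box_to_center_scale(box, image_size=(192, 256), pixel_std=200.0, padding=1.25):
    """Convert an [x, y, w, h] box into (center, scale).

    The box is stretched to the input aspect ratio, divided by pixel_std, then padded.
    """
    x, y, w, h = box
    aspect_ratio = image_size[0] / image_size[1]  # 192 / 256 = 0.75
    center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)
    if w > aspect_ratio * h:
        h = w / aspect_ratio  # too wide: grow the height
    elif w < aspect_ratio * h:
        w = h * aspect_ratio  # too tall: grow the width
    scale = np.array([w / pixel_std, h / pixel_std], dtype=np.float32) * padding
    return center, scale


center, scale = box_to_center_scale([0, 0, 100, 100])
print(center, scale)  # a square box is stretched to 100 x 133.3 before scaling
```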

```python
def __getitem__(self, idx):
    db_rec = copy.deepcopy(self.db[idx])  # {}

    image_file = db_rec['image']  # 'xx/.jpg'
    filename = db_rec['filename'] if 'filename' in db_rec else ''
    imgnum = db_rec['imgnum'] if 'imgnum' in db_rec else ''  # 0

    if self.data_format == 'zip':
        from utils import zipreader
        data_numpy = zipreader.imread(
            image_file, cv2.IMREAD_COLOR | cv2.IMREAD_IGNORE_ORIENTATION)
    else:
        data_numpy = cv2.imread(
            image_file, cv2.IMREAD_COLOR | cv2.IMREAD_IGNORE_ORIENTATION)

    if data_numpy is None:
        logger.error('=> fail to read {}'.format(image_file))
        raise ValueError('Fail to read {}'.format(image_file))

    joints = db_rec['joints_3d']  # 17*3, each row [x, y, 0]
    joints_vis = db_rec['joints_3d_vis']  # 17*3, each row [0, 0, 0] or [1, 1, 0]

    c = db_rec['center']  # e.g. [111, 222]
    s = db_rec['scale']  # e.g. [1.1, 2.3] = person's (w, h) / 200
    score = db_rec['score'] if 'score' in db_rec else 1  # 1
    r = 0

    if self.is_train:
        sf = self.scale_factor  # 0.3
        rf = self.rotation_factor  # 40
        s = s * np.clip(np.random.randn()*sf + 1, 1 - sf, 1 + sf)
        r = np.clip(np.random.randn()*rf, -rf*2, rf*2) \
            if random.random() <= 0.6 else 0

        if self.flip and random.random() <= 0.5:
            data_numpy = data_numpy[:, ::-1, :]  # HWC: flip along W
            joints, joints_vis = fliplr_joints(
                joints, joints_vis, data_numpy.shape[1], self.flip_pairs)  # 17*3, 17*3
            c[0] = data_numpy.shape[1] - c[0] - 1

    trans = get_affine_transform(c, s, r, self.image_size)  # 2*3 affine matrix
    input = cv2.warpAffine(
        data_numpy,
        trans,
        (int(self.image_size[0]), int(self.image_size[1])),
        flags=cv2.INTER_LINEAR)  # crop, scale and rotate the person to image_size

    if self.transform:
        input = self.transform(input)  # ToTensor + normalize

    for i in range(self.num_joints):
        if joints_vis[i, 0] > 0.0:
            joints[i, 0:2] = affine_transform(joints[i, 0:2], trans)

    target, target_weight = self.generate_target(joints, joints_vis)
    # target: 17 heatmaps; target_weight: 17*1, 0 for joints that are missing or at the border

    target = torch.from_numpy(target)
    target_weight = torch.from_numpy(target_weight)

    meta = {
        'image': image_file,
        'filename': filename,
        'imgnum': imgnum,
        'joints': joints,  # (x, y, 0) or (0, 0, 0)
        'joints_vis': joints_vis,  # (1, 1, 0) or (0, 0, 0)
        'center': c,
        'scale': s,
        'rotation': r,
        'score': score
    }

    return input, target, target_weight, meta
```

3. Training pipeline
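The script below builds `CosineAnnealingLR(optimizer, 15, 0.000001, -1)`. That means T_max = 15 epochs and eta_min = 1e-6, starting from LR = 0.001. Below is a small sketch of the closed-form cosine schedule, useful for seeing what LR each scheduler step gives. The function name is hypothetical. PyTorch's scheduler computes the same curve recursively.

```python
import math


def cosine_annealing_lr(step, base_lr=0.001, t_max=15, eta_min=1e-6):
    """Closed-form cosine annealing: eta_min + (base_lr - eta_min) * (1 + cos(pi * step / t_max)) / 2."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2


for step in (0, 5, 15, 30):
    print(step, f"{cosine_annealing_lr(step):.6f}")
```

The cosine keeps going past T_max, so the curve has a period of 2 × T_max. In a 70-epoch loop with T_max = 15, the LR falls and rises again several times rather than staying at eta_min.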

```python
model = eval('models.' + 'pose_mobilenetv2' + '.get_pose_net2')(
    config, is_train=False
)  # calls get_pose_net2(config, is_train=False) and returns the model; output B*NUM_JOINTS*H*W

checkpoint = torch.load('0.633-model_best.pth.tar')
# model.load_state_dict(checkpoint['state_dict'])  # built-in nn.Module method; strict=False optional
# writer = SummaryWriter(log_dir='tensorboard_logs')

gpus = [int(i) for i in config.GPUS.split(',')]  # e.g. [0]
model = torch.nn.DataParallel(model, device_ids=gpus).cuda()
# the checkpoint was saved from a DataParallel model (keys start with 'module.'),
# so it is loaded after wrapping
model.load_state_dict(checkpoint)

# define loss function (criterion) and optimizer
criterion = JointsMSELoss(
    use_target_weight=config.LOSS.USE_TARGET_WEIGHT
).cuda()  # called as criterion(output, target, target_weight)
optimizer = get_optimizer(config, model)  # LR = 0.001
# T_max = 15 epochs, eta_min = 1e-6, last_epoch = -1
lr_scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, 15, 0.000001, -1)

# Data loading code
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
train_dataset = eval('dataset.' + config.DATASET.DATASET)(
    config,
    config.DATASET.ROOT,
    config.DATASET.TRAIN_SET,
    True,
    transforms.Compose([
        transforms.ToTensor(),
        normalize,
    ])
)  # COCO dataset object, holds the image ids [] and the GT records []
train_loader = torch.utils.data.DataLoader(
    train_dataset,
    batch_size=config.TRAIN.BATCH_SIZE * len(gpus),
    shuffle=config.TRAIN.SHUFFLE,
    num_workers=config.WORKERS,
    pin_memory=True
)
# valid_dataset / valid_loader are built the same way (not shown here)

best_perf = 0.0
best_model = False
for epoch in range(150, 220):  # resumed run; normally config.TRAIN.BEGIN_EPOCH, config.TRAIN.END_EPOCH
    # train for one epoch
    train(config, train_loader, model, criterion, optimizer, epoch,
          final_output_dir, tb_log_dir)
    # since PyTorch 1.1, step the scheduler after the epoch's optimizer.step() calls
    lr_scheduler.step()

    # evaluate on validation set, returns AP
    perf_indicator = validate(config, valid_loader, valid_dataset, model,
                              criterion, final_output_dir, tb_log_dir)

    if perf_indicator > best_perf:
        best_perf = perf_indicator
        best_model = True
    else:
        best_model = False

    logger.info('=> saving checkpoint to {}'.format(final_output_dir))
    save_checkpoint({
        'epoch': epoch + 1,
        'model': get_model_name(config),
        'state_dict': model.state_dict(),
        'perf': perf_indicator,
        'optimizer': optimizer.state_dict(),
    }, best_model, final_output_dir)

final_model_state_file = os.path.join(final_output_dir,
                                      'final_state.pkl')
logger.info('saving final model state to {}'.format(
    final_model_state_file))
torch.save(model.module.state_dict(), final_model_state_file)
```

3.1 The train function
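The `criterion` called inside `train()` below is `JointsMSELoss`. Below is a NumPy sketch of what its forward pass computes, based on my reading of `loss.py`. For each joint it takes the MSE between predicted and GT heatmaps, scales it by 0.5, optionally multiplies it by `target_weight`, and averages over joints. The function name is hypothetical and this is not the repository class.

```python
import numpy as np


def joints_mse_loss(output, target, target_weight, use_target_weight=True):
    """0.5 * mean squared error between heatmaps, optionally masked per joint.

    output, target: B x J x H x W; target_weight: B x J x 1 (0 or 1).
    Every joint map has H*W cells, so the mean of per-joint means equals the global mean.
    """
    diff = output - target
    if use_target_weight:
        # w * pred - w * gt == w * (pred - gt); B x J x 1 x 1 broadcasts over H, W
        diff = diff * target_weight[:, :, :, None]
    return 0.5 * float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
out = rng.random((2, 17, 64, 48)).astype(np.float32)
gt = out.copy()
gt[:, 3] += 1.0                      # joint 3 is wrong by 1 everywhere
w = np.ones((2, 17, 1), dtype=np.float32)
print(joints_mse_loss(out, gt, w))   # about 0.0294, i.e. 0.5 / 17
w[:, 3] = 0                          # masking joint 3 removes its error
print(joints_mse_loss(out, gt, w))   # 0.0
```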

```python
def train(config, train_loader, model, criterion, optimizer, epoch,
          output_dir, tb_log_dir, writer_dict=None):
    batch_time = AverageMeter()
    data_time = AverageMeter()
    losses = AverageMeter()
    acc = AverageMeter()

    # switch to train mode
    model.train()

    end = time.time()
    for i, (input, target, target_weight, meta) in enumerate(train_loader):
        # measure data loading time
        data_time.update(time.time() - end)

        # compute output (DataParallel moves `input` to the GPUs itself)
        output = model(input)  # B*17*H*W
        target = target.cuda(non_blocking=True)  # B*17*H*W heatmaps, values in [0, 1]
        target_weight = target_weight.cuda(non_blocking=True)  # B*17*1, 0 or 1

        loss = criterion(output, target, target_weight)  # scalar

        # compute gradient and do update step
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # measure accuracy and record loss
        losses.update(loss.item(), input.size(0))
        # acc: shape [18,] (acc[0] = mean, acc[1:] = per joint); avg_acc: PCK@0.5 against the GT;
        # cnt: number of joints with a valid accuracy (e.g. 16);
        # pred: B*17*2 peak coordinates, 0 where the predicted peak is <= 0
        _, avg_acc, cnt, pred = accuracy(output.detach().cpu().numpy(),
                                         target.detach().cpu().numpy())
        acc.update(avg_acc, cnt)

        # measure elapsed time
        batch_time.update(time.time() - end)
        end = time.time()

        if i % config.PRINT_FREQ == 0:
            msg = 'Epoch: [{0}][{1}/{2}]\t' \
                  'Time {batch_time.val:.3f}s ({batch_time.avg:.3f}s)\t' \
                  'Speed {speed:.1f} samples/s\t' \
                  'Data {data_time.val:.3f}s ({data_time.avg:.3f}s)\t' \
                  'Loss {loss.val:.5f} ({loss.avg:.5f})\t' \
                  'Accuracy {acc.val:.3f} ({acc.avg:.3f})'.format(
                      epoch, i, len(train_loader), batch_time=batch_time,
                      speed=input.size(0)/batch_time.val,
                      data_time=data_time, loss=losses, acc=acc)
            logger.info(msg)

            # TensorBoard logging; skipped when no writer is passed (the default)
            if writer_dict is not None:
                writer = writer_dict['writer']
                global_steps = writer_dict['train_global_steps']
                writer.add_scalar('train_loss', losses.val, global_steps)
                writer.add_scalar('train_acc', acc.val, global_steps)
                writer_dict['train_global_steps'] = global_steps + 1

            prefix = '{}_{}'.format(os.path.join(output_dir, 'train'), i)
            # pred is in heatmap cells; * 4 maps it back to input pixels (64*48 -> 256*192)
            save_debug_images(config, input, meta, target, pred*4, output,
                              prefix)
```

3.2 Computing PCK@0.5 (per joint type)
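`accuracy()` below first decodes both the predicted and the GT heatmaps with `get_max_preds`, then measures distances. Below is a NumPy sketch of that argmax decoding. It follows the behaviour described in this note: coordinates are (x, y) in heatmap cells, the score is the peak value, and coordinates are zeroed where the peak is <= 0. The name `max_preds` is hypothetical.

```python
import numpy as np


def max_preds(batch_heatmaps):
    """Argmax decoding of B x J x H x W heatmaps.

    Returns coords (B x J x 2, (x, y) in heatmap cells) and maxvals (B x J x 1).
    """
    b, j, h, w = batch_heatmaps.shape
    flat = batch_heatmaps.reshape(b, j, -1)
    idx = np.argmax(flat, axis=2)                # B x J flat index of the peak
    maxvals = np.max(flat, axis=2)[:, :, None]   # B x J x 1
    # x = column = idx % W, y = row = idx // W
    coords = np.stack([idx % w, idx // w], axis=2).astype(np.float32)
    coords *= (maxvals > 0).astype(np.float32)   # peak <= 0 (e.g. missing joint) -> (0, 0)
    return coords, maxvals


hm = np.zeros((1, 2, 64, 48), dtype=np.float32)
hm[0, 0, 10, 20] = 0.9            # joint 0 peaks at row 10, column 20
coords, maxvals = max_preds(hm)   # joint 1 is an all-zero map
print(coords[0])                  # joint 0 -> (20, 10); joint 1 -> (0, 0)
```

A missing joint's GT map is all zeros, so it decodes to (0, 0). That is why `calc_dists` only keeps GT coordinates greater than 1.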

```python
def calc_dists(preds, target, normalize):  # preds, target: b*17*2
    preds = preds.astype(np.float32)
    target = target.astype(np.float32)
    dists = np.zeros((preds.shape[1], preds.shape[0]))
    for n in range(preds.shape[0]):
        for c in range(preds.shape[1]):
            # skip missing joints (their GT peak decodes to (0, 0)) and joints on the border
            if target[n, c, 0] > 1 and target[n, c, 1] > 1:
                normed_preds = preds[n, c, :] / normalize[n]  # divided by (6.4, 4.8)
                normed_targets = target[n, c, :] / normalize[n]
                # normalized L2 distance; 0 is best
                dists[c, n] = np.linalg.norm(normed_preds - normed_targets)
            else:
                dists[c, n] = -1
    return dists  # 17*b


def dist_acc(dists, thr=0.5):  # dists: one joint's row, length b
    ''' Return percentage below threshold while ignoring values with a -1 '''
    dist_cal = np.not_equal(dists, -1)
    num_dist_cal = dist_cal.sum()
    if num_dist_cal > 0:
        # fraction of normalized distances below 0.5, i.e. PCK@0.5
        return np.less(dists[dist_cal], thr).sum() * 1.0 / num_dist_cal
    else:
        return -1


def accuracy(output, target, hm_type='gaussian', thr=0.5):
    '''
    Calculate accuracy according to PCK,
    but uses ground truth heatmap rather than x,y locations
    First value to be returned is average accuracy across 'idxs',
    followed by individual accuracies
    '''
    idx = list(range(output.shape[1]))  # 17 joints
    norm = 1.0
    if hm_type == 'gaussian':
        pred, _ = get_max_preds(output)  # b*17*2 argmax coordinates (x, y); 0 where the peak is <= 0
        target, _ = get_max_preds(target)
        h = output.shape[2]
        w = output.shape[3]
        # (64, 48) / 10 = (6.4, 4.8); pred is (x, y) but norm is (h, w), as in the upstream code
        norm = np.ones((pred.shape[0], 2)) * np.array([h, w]) / 10
    dists = calc_dists(pred, target, norm)  # 17*b

    acc = np.zeros((len(idx) + 1))
    avg_acc = 0
    cnt = 0

    for i in range(len(idx)):
        acc[i + 1] = dist_acc(dists[idx[i]])
        if acc[i + 1] >= 0:
            avg_acc = avg_acc + acc[i + 1]
            cnt += 1

    avg_acc = avg_acc / cnt if cnt != 0 else 0
    if cnt != 0:
        acc[0] = avg_acc
    return acc, avg_acc, cnt, pred
```
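You can also write the double loop in `calc_dists` plus `dist_acc` with array operations. Below is a sketch under the same conventions:

- B × J × 2 coordinates;
- a GT joint counts only if both coordinates are > 1;
- threshold 0.5 on the normalized distance.

The function name is hypothetical. It returns only per-joint accuracies, without the leading average that `accuracy()` stores in `acc[0]`.

```python
import numpy as np


def pck_per_joint(pred, target, norm, thr=0.5):
    """Vectorized calc_dists + dist_acc.

    pred, target: B x J x 2; norm: B x 2.
    Returns J accuracies, with -1 for joints that have no valid GT in the batch.
    """
    valid = (target[:, :, 0] > 1) & (target[:, :, 1] > 1)              # B x J, same rule as calc_dists
    dists = np.linalg.norm((pred - target) / norm[:, None, :], axis=2)  # B x J normalized distances
    hits = (dists < thr) & valid
    n_valid = valid.sum(axis=0)                                         # valid samples per joint
    acc = np.full(pred.shape[1], -1.0)
    has = n_valid > 0
    acc[has] = hits.sum(axis=0)[has] / n_valid[has]
    return acc


pred = np.array([[[10.0, 10.0], [20.0, 20.0], [0.0, 0.0]]])    # B=1, J=3
target = np.array([[[11.0, 10.0], [30.0, 20.0], [0.0, 0.0]]])  # joint 2 missing in the GT
norm = np.array([[6.4, 4.8]])
acc = pck_per_joint(pred, target, norm)
print(acc)  # joint 0 hit (1.0), joint 1 miss (0.0), joint 2 ignored (-1.0)
```

The mean over joints with `acc >= 0` then gives the batch average, as the loop in `accuracy()` does.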

4 Summary

Visualization code:

```python
def save_batch_image_with_joints(batch_image, batch_joints, batch_joints_vis,
                                 file_name, nrow=8, padding=2):
    '''
    batch_image: [batch_size, channel, height, width]
    batch_joints: [batch_size, num_joints, 3],
    batch_joints_vis: [batch_size, num_joints, 1],
    The images are tiled 8 per row.
    '''
    # The 4th positional argument of make_grid is normalize=True: the grid is min-max
    # rescaled to [0, 1], so the mean/std normalization need not be undone before * 255
    # (colors are only approximate, since each channel used a different mean/std)
    grid = torchvision.utils.make_grid(batch_image, nrow, padding, True)
    ndarr = grid.mul(255).clamp(0, 255).byte().permute(1, 2, 0).cpu().numpy()
    ndarr = ndarr.copy()

    nmaps = batch_image.size(0)
    xmaps = min(nrow, nmaps)
    ymaps = int(math.ceil(float(nmaps) / xmaps))
    height = int(batch_image.size(2) + padding)
    width = int(batch_image.size(3) + padding)
    k = 0
    for y in range(ymaps):
        for x in range(xmaps):
            if k >= nmaps:
                break
            joints = batch_joints[k]
            joints_vis = batch_joints_vis[k]

            for joint, joint_vis in zip(joints, joints_vis):
                # shift each joint into its tile of the grid
                joint[0] = x * width + padding + joint[0]
                joint[1] = y * height + padding + joint[1]
                if joint_vis[0]:
                    cv2.circle(ndarr, (int(joint[0]), int(joint[1])), 2, [255, 0, 0], 2)
            k = k + 1
    cv2.imwrite(file_name, ndarr)
```

Takeaways

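1. Using logger to print information

2. Partially loading model weights

3. Writing a complex custom dataset class

4. Using tensorboardX to monitor training

5. Once the model produces its output, the loss is computed on the GPU; for accuracy, the tensors are moved to the CPU with .cpu().numpy() and processed there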
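Takeaway 2 (partial loading) often needs one step beyond `strict=False`. In PyTorch, `strict=False` skips missing or unexpected keys, but a key that exists on both sides with a different shape still raises an error.

Below is a minimal sketch of filtering a checkpoint by key and shape first. NumPy arrays stand in for tensors so it runs without PyTorch. The function name, keys and shapes are hypothetical.

```python
from collections import OrderedDict

import numpy as np


def filter_matching(pretrained, model_state):
    """Keep pretrained entries whose key exists in the model and whose shape matches.

    Returns (kept, skipped); kept can be merged into the model's state dict.
    """
    kept, skipped = OrderedDict(), []
    for key, value in pretrained.items():
        if key in model_state and model_state[key].shape == value.shape:
            kept[key] = value
        else:
            skipped.append(key)
    return kept, skipped


# Hypothetical: an ImageNet checkpoint with a 1000-class classifier vs. a pose model
pretrained = OrderedDict([
    ("features.0.weight", np.zeros((32, 3, 3, 3))),
    ("classifier.weight", np.zeros((1000, 1280))),
])
model_state = OrderedDict([
    ("features.0.weight", np.ones((32, 3, 3, 3))),
    ("final_layer.weight", np.ones((17, 256, 1, 1))),
])
kept, skipped = filter_matching(pretrained, model_state)
model_state.update(kept)    # with PyTorch: model.load_state_dict(model_state)
print(list(kept), skipped)  # ['features.0.weight'] ['classifier.weight']
```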