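Scrapy example: scraping Meituan hotel user reviews and storing them in MySQL

A Scrapy crawler example

Installing Scrapy is not covered here. This example uses Python 3.6.

1. Requirement: scrape all user reviews for a number of hotels on Meituan and store them in MySQL.

2. Analysis: Meituan paginates hotel reviews through AJAX. The data endpoint can be found with the browser's built-in Network panel or any packet capture tool. It is not hard, so try capturing it yourself as practice; if you cannot find it, the endpoint is given later in this post, so I will not go into detail here. This post focuses on how to set up the framework correctly and get it running.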
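To make the pagination concrete: the comment endpoint given later in this post takes a hotel id, a `limit`, and an `offset`, so each page is the same URL with a larger offset. Below is a minimal sketch of generating those page URLs. The helper name `comment_page_urls`, the sample id, and the sample total of 250 are made up for illustration; the spider later in this post does the same thing inline.

```python
import math

# Comment endpoint as given later in this post: the first %s is the hotel id,
# the second %s is the offset of the first comment on the page.
BASE_URL = ('http://ihotel.meituan.com/group/v1/poi/comment/%s'
            '?sortType=default&noempty=1&withpic=0&filter=all&limit=100&offset=%s')

PAGE_SIZE = 100  # matches limit=100 in the URL


def comment_page_urls(hotel_id, total_comments):
    """Return one URL per page of comments (hypothetical helper)."""
    pages = math.ceil(total_comments / PAGE_SIZE)
    return [BASE_URL % (hotel_id, page * PAGE_SIZE) for page in range(pages)]


# Sample values only: 250 comments need three pages (offsets 0, 100, 200).
urls = comment_page_urls('117467078', 250)
print(len(urls))
```

The sketch uses ceiling division. The `// 100 + 1` form used in the spider also works, but it sends one extra request that returns nothing when the total is an exact multiple of 100.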

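3. Create the project: open cmd or the PyCharm terminal, cd to the directory where the project should live and run the create-project command, then cd into the spiders directory and run the create-spider command. The commands are: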

scrapy startproject meituan # 创建项目命令

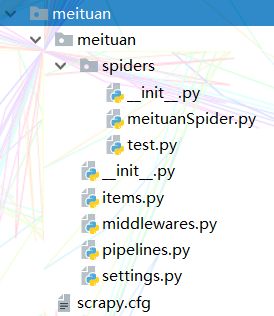

scrapy genspider meituanSpider "ihotel.meituan.com" # 创建spider命令scrapy crawl meituanSpider # 最终运行该爬虫命令得到如图目录结构。

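4. Write the spider:

(1) Get the Meituan hotel links to crawl, for example https://hotel.meituan.com/117467078. All of my target hotels are stored in a local database, so here I read the list of Meituan hotel URLs with pymysql. (Possible extension: crawl in depth, traversing the whole Meituan site to collect every hotel.)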
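Reading the URL list is an ordinary DB-API query. The sketch below uses the standard-library `sqlite3` module as a stand-in for pymysql so that it runs without a MySQL server. The `id` and `url` column names and the sample rows are assumptions, since this post does not show the schema of `meituanUrl`.

```python
import sqlite3

# In-memory SQLite stands in for the MySQL database here.
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE meituanUrl (id INTEGER PRIMARY KEY, url TEXT)')
conn.executemany(
    'INSERT INTO meituanUrl (url) VALUES (?)',
    [('https://hotel.meituan.com/117467078',),
     ('https://hotel.meituan.com/000000000',)],  # made-up placeholder row
)


def get_hotel_urls(connection):
    """Return the hotel URLs as a flat list of strings."""
    cursor = connection.cursor()
    # Select only the URL column so that each row is a 1-tuple.
    cursor.execute('SELECT url FROM meituanUrl ORDER BY id')
    return [row[0] for row in cursor.fetchall()]


hotel_urls = get_hotel_urls(conn)
print(hotel_urls)
```

`fetchall()` returns one tuple per row, so the URL has to be unpacked from the row before it is handed to `scrapy.Request`.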

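(2) Write start_requests to send the initial request to each hotel URL:

    import json
    import re

    import pymysql
    import scrapy


    class MeituanspiderSpider(scrapy.Spider):
        conn = pymysql.connect(
            host='host',
            port=3306,
            user='root',
            password='password',
            db='database',
            charset="utf8"
        )
        cursor = conn.cursor()
        name = 'meituanSpider'
        # Hotel pages are on hotel.meituan.com and the comment API is on
        # ihotel.meituan.com, so the parent domain is allowed here.
        allowed_domains = ['meituan.com']
        # The hotel comment API mentioned above (hotel id, offset)
        base_url = 'http://ihotel.meituan.com/group/v1/poi/comment/%s?sortType=default&noempty=1&withpic=0&filter=all&limit=100&offset=%s'

        def getUrlInfo(self, cursor):
            """Return the rows of the hotel URL table."""
            query = '''select * from meituanUrl'''
            cursor.execute(query)
            res = cursor.fetchall()
            return res

        def start_requests(self):
            meituanUrlList = self.getUrlInfo(self.cursor)
            for row in meituanUrlList:
                # fetchall() returns one tuple per row. This assumes the URL is
                # the first column; adjust the index to match your table.
                meituanUrl = row[0]
                request = scrapy.Request(
                    meituanUrl,
                    callback=self.parse,
                    meta={'meituanUrl': meituanUrl},  # pass data along to the response
                )
                yield request

(3) Handle the initial response to get the total number of comments (which controls the page loop) and the hotel id parameter: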

        def parse(self, response):
            hotelID = re.findall(r'"poiid":"(.*?)"', response.text)[0]  # hotel id
            _totalComment = response.xpath("//h2[@id='comment']")[0].xpath('string(.)').extract_first()
            # The heading text has the form 住客点评(N); strip the wrapper to get N
            totalComment = _totalComment.replace('住客点评(', '').replace(')', '')  # total number of comments
            total = int(totalComment) // 100 + 1  # number of pages to request
            offset = 0  # offset of the current page
            for page in range(total):  # walk through all comments
                page_url = self.base_url % (hotelID, offset)
                offset += 100
                yield scrapy.Request(page_url, callback=self.detail_parse)

(4) Handle the response from the comment API and extract the final comment content:

        def detail_parse(self, response):
            jsonObj = json.loads(response.text)
            commentList = jsonObj['data']['feedback']
            for comment in commentList:
                user_name = comment['username'].replace('\n', '').replace('"', '').replace('\\', '')  # user name
                Comment = comment['comment'].replace('\n', '').replace('"', '').replace('\\', '')  # comment text
                comment_time = comment['fbtimestamp']  # comment time
                reply = comment['bizreply'].replace('\n', '').replace('"', '').replace('\\', '')  # reply from the hotel
                user_attitude = comment['scoretext']  # user attitude
                score = comment['score']  # user rating

5. Write items.py:

    import scrapy


    class MeituanItem(scrapy.Item):
        # define the fields for your item here like:
        user_name = scrapy.Field()
        score = scrapy.Field()
        user_attitude = scrapy.Field()
        Comment = scrapy.Field()
        comment_time = scrapy.Field()
        reply = scrapy.Field()

6. Assign values to the item object:

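Append the following code to the end of the for loop in detail_parse from step 4. The spider file also needs `from meituan.items import MeituanItem` at the top.

    item = MeituanItem()
    item['user_name'] = user_name
    item['score'] = score
    item['user_attitude'] = user_attitude
    item['Comment'] = Comment
    item['comment_time'] = comment_time
    item['reply'] = reply
    yield item

7. Write pipelines.py: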

Set up a database connection pool and use the pipeline to store the data carried by the items in MySQL.
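The pipeline in this step has a simple shape: a class method builds the object from the project settings, and `process_item` is then called with each item. Here is a minimal sketch of that shape, assuming a plain dict in place of Scrapy's settings object and `sqlite3` in place of MySQL and the Twisted connection pool, so that it runs on its own. The class name `SketchPipeline`, the `DB_PATH` key, the reduced column set, and the sample item are all hypothetical.

```python
import sqlite3


class SketchPipeline:
    """Stand-in for an item pipeline; not the pipeline used in this project."""

    def __init__(self, conn):
        self.conn = conn
        # The UNIQUE key is an assumption; it is what lets duplicates be skipped.
        self.conn.execute(
            'CREATE TABLE IF NOT EXISTS meituan_comments ('
            'user_name TEXT, score INTEGER, comment TEXT, '
            'UNIQUE (user_name, comment))'
        )

    @classmethod
    def from_settings(cls, settings):
        # Read the connection details from settings and hand the connection to the class.
        return cls(sqlite3.connect(settings['DB_PATH']))

    def process_item(self, item, spider):
        # Parameterized insert. OR IGNORE is SQLite's counterpart of MySQL's
        # INSERT IGNORE: rows that violate the UNIQUE key are skipped.
        self.conn.execute(
            'INSERT OR IGNORE INTO meituan_comments VALUES (?, ?, ?)',
            (item['user_name'], item['score'], item['Comment']),
        )
        self.conn.commit()
        return item


pipeline = SketchPipeline.from_settings({'DB_PATH': ':memory:'})
sample = {'user_name': 'guest', 'score': 50, 'Comment': 'clean room'}
pipeline.process_item(sample, spider=None)
pipeline.process_item(sample, spider=None)  # same row again, skipped
count = pipeline.conn.execute('SELECT COUNT(*) FROM meituan_comments').fetchone()[0]
print(count)
```

An ignore-style insert only skips duplicates when the table has a primary or unique key. This post does not show the definition of `meituan_comments`, so check that your table has one if you rely on `insert ignore` for deduplication.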

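(1) Read the database configuration. The connection details are defined later in settings.py:

    import pymysql.cursors
    from twisted.enterprise import adbapi


    class MeituanPipeline(object):
        def __init__(self, dbpool):
            self.dbpool = dbpool

        @classmethod
        def from_settings(cls, settings):
            """Read the configuration from settings and connect to the database."""
            dbparams = dict(
                host=settings['MYSQL_HOST'],
                db=settings['MYSQL_DBNAME'],
                user=settings['MYSQL_USER'],
                passwd=settings['MYSQL_PASSWD'],
                cursorclass=pymysql.cursors.DictCursor,
                use_unicode=True,
                charset="utf8mb4"  # some comments contain emoji, so use utf8mb4
            )
            # ** expands the dict into keyword arguments, i.e. host=xxx, db=yyy, ...
            dbpool = adbapi.ConnectionPool('pymysql', **dbparams)
            return cls(dbpool)  # hands dbpool to the class so it is available on self

(2) Write to the database and catch errors: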

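        # Scrapy calls process_item for every item. This method is missing from
        # the original listing and is added so that the insert below actually runs.
        def process_item(self, item, spider):
            query = self.dbpool.runInteraction(self._conditional_insert, item)
            query.addErrback(self._handle_error, item, spider)
            return item

        # Write the item to the database
        def _conditional_insert(self, tx, item):
            sql = '''insert ignore into meituan_comments(user_name, score, attitude, comment, comment_time, response) VALUES (%s,%s,%s,%s,%s,%s)'''
            params = (
                item['user_name'],
                item['score'],
                item['user_attitude'],
                item['Comment'],
                item['comment_time'],
                item['reply'],
            )
            tx.execute(sql, params)

        # Print the error and carry on
        def _handle_error(self, failure, item, spider):
            print(failure)

8. Configure settings.py: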

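The settings usually cover the request headers, the robots.txt policy, enabling the pipelines, error handling, middleware (not used in this example), an IP proxy pool (not used in this example), and so on. This example uses roughly the following configuration: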

    HTTPERROR_ALLOWED_CODES = [403]  # let 403 responses reach the spider
    FEED_EXPORT_ENCODING = 'utf-8'  # encoding for exported feeds

    # MySQL connection settings
    MYSQL_HOST = 'host'
    MYSQL_DBNAME = 'db'
    MYSQL_USER = 'root'
    MYSQL_PASSWD = 'password'
    MYSQL_PORT = 3306

    # Pipelines
    ITEM_PIPELINES = {
        'meituan.pipelines.MeituanPipeline': 300,
    }

    # Request headers
    DEFAULT_REQUEST_HEADERS = {
        'Accept-Language': 'en',
        'Connection': 'keep-alive',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36'
    }

    # robots.txt policy
    ROBOTSTXT_OBEY = False

That is a simple Scrapy example I put together. If you spot typos or logic errors, have questions, or see things differently, please leave a comment so we can learn from each other and improve together.
