[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved)

11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator

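My Kubernetes study got stuck at this chapter. I have gone through most of the material, but I still cannot solve the problem described here, whether with kubectl describe or by going into the containers and reading the logs. I clearly still lack the basics, so I am going back to fill them in from the start.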

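I keep getting stuck when creating Prometheus with Helm. Whatever approach I use, either Alertmanager fails to start or Prometheus fails to start. The Alertmanager pod keeps restarting, yet its logs show nothing wrong. If anyone has an idea or a suggestion, please share it. I have spent several days on this without a fix.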

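Steps to reproduce:

If you have unrestricted internet access (for example through a proxy), you can install directly with: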

helm install --name pdabc-prom stable/prometheus-operator

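Here I install from a local copy of the chart instead, which makes later customization easier.

First download the charts from GitHub

Link: https://pan.baidu.com/s/1YqBN9I1IwamBdpgobEHGXw

Extraction code: da05

After downloading it to /opt, copy the files to /root: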

cd /root

cp -r /opt/charts/stable/prometheus-operator ./

mkdir prometheus-operator/charts

cp -r /opt/charts/stable/kube-state-metrics/ ./prometheus-operator/charts/

cp -r /opt/charts/stable/grafana/ ./prometheus-operator/charts/

cp -r /opt/charts/stable/prometheus-node-exporter/ ./prometheus-operator/charts/
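The copy steps above can be collected into one small helper. This is only a sketch, assuming the charts live under /opt/charts/stable as described; the function name `vendor_subcharts` is hypothetical and not part of Helm.

```shell
# Hypothetical helper: copy a parent chart and its sub-charts into a work dir.
# $1 = directory holding the stable charts, $2 = work dir, $3 = parent chart,
# remaining arguments = sub-charts to place under <parent>/charts/.
vendor_subcharts() {
  src=$1; dest=$2; parent=$3
  shift 3
  cp -r "$src/$parent" "$dest/" || return 1
  mkdir -p "$dest/$parent/charts" || return 1
  for sub in "$@"; do
    cp -r "$src/$sub" "$dest/$parent/charts/" || return 1
  done
}

# Usage (same layout as the manual steps above):
# vendor_subcharts /opt/charts/stable /root prometheus-operator \
#   kube-state-metrics grafana prometheus-node-exporter
```

Stopping at the first failed copy makes a missing sub-chart visible before `helm install` is attempted.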

Install

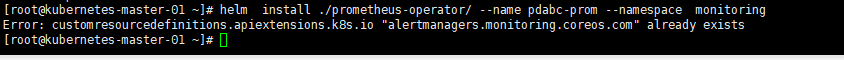

helm install ./prometheus-operator/ --name pdabc-prom --namespace monitoring

If the following error appears, the existing CRDs must be removed with kubectl delete:

Error: customresourcedefinitions.apiextensions.k8s.io "alertmanagers.monitoring.coreos.com" already exists

Delete the CRDs:

kubectl get crd

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com
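The five deletions above can also be written as a loop over whatever `monitoring.coreos.com` CRDs currently exist. A minimal sketch, assuming `kubectl get crd -o name` prints one `type/name` entry per line; the function name `delete_operator_crds` is made up for this example.

```shell
# Hypothetical helper: delete every CRD in the monitoring.coreos.com group.
delete_operator_crds() {
  kubectl get crd -o name | grep 'monitoring\.coreos\.com' | while read -r crd; do
    # $crd has the form <resource-type>/<name>, which kubectl delete accepts
    kubectl delete "$crd"
  done
}

# Usage:
# delete_operator_crds
```

Deleting a CRD also removes every custom resource of that kind, so this is only appropriate on a cluster where the operator is being reinstalled from scratch.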

Running the install again fails with:

Error: timed out waiting for the condition

Because the images are hosted overseas, first pull the images that prometheus-operator/values.yaml needs onto each node. List them with:

find .|grep values.yaml |xargs grep "image"
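The grep above prints every line that mentions "image". To get a list that can be fed straight to a pull command, the repository and tag can be paired up. This is a rough sketch, assuming each image is declared as a `repository:` key followed by a `tag:` key, which is common in these charts but not guaranteed; `list_chart_images` is a hypothetical name.

```shell
# Hypothetical helper: print repository:tag pairs found in values.yaml files
# under the chart directory given as $1.
list_chart_images() {
  find "$1" -name values.yaml -exec awk '
    { gsub(/"/, "") }                 # drop double quotes around values
    $1 == "repository:" { repo = $2 } # remember the most recent repository
    $1 == "tag:" && repo != "" { print repo ":" $2; repo = "" }
  ' {} + | sort -u
}

# Usage:
# list_chart_images ./prometheus-operator
```

Images declared in any other form (for example a single `image: name:tag` line) are not picked up, so the result should be checked against the plain grep output.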

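Either change the image references to a reachable mirror (the screenshot of the change is missing here), or use the accelerated download tool below: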

https://github.com/xuxinkun/littleTools#azk8spull

yum -y install git

git clone https://github.com/xuxinkun/littleTools

cd littleTools

chmod +x install.sh

./install.sh

source /etc/profile

azk8spull quay.io/prometheus/alertmanager:v0.19.0

azk8spull squareup/ghostunnel:v1.4.1

azk8spull jettech/kube-webhook-certgen:v1.0.0

azk8spull quay.io/coreos/prometheus-operator:v0.34.0

azk8spull quay.io/coreos/configmap-reload:v0.0.1

azk8spull quay.io/coreos/prometheus-config-reloader:v0.34.0

azk8spull quay.io/prometheus/prometheus:v2.13.1

azk8spull k8s.gcr.io/hyperkube:v1.12.1

docker pull quay.io/prometheus/node-exporter:v0.17.0

azk8spull quay.io/prometheus/prometheus:v2.9.1

azk8spull quay.io/prometheus/alertmanager:v0.16.2

docker pull registry.cn-hangzhou.aliyuncs.com/imooc/k8s-sidecar:0.0.16

azk8spull quay.io/coreos/prometheus-config-reloader:v0.29.0

docker pull registry.cn-hangzhou.aliyuncs.com/imooc/grafana:6.1.6
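With this many images it is easy to miss one that failed to pull, which later shows up as a pod stuck in ContainerCreating. The sketch below reads image names from standard input and reports each result; `pull_all` is a hypothetical helper, and the pull command is passed in as an argument so either `azk8spull` or a wrapper around `docker pull` can be used.

```shell
# Hypothetical helper: pull every image listed on stdin with the command in $1.
# Prints one line per image and returns non-zero if any pull failed.
pull_all() {
  puller=$1
  failed=0
  while read -r image; do
    [ -n "$image" ] || continue          # skip empty lines
    if "$puller" "$image" </dev/null; then
      echo "ok   $image"
    else
      echo "FAIL $image"
      failed=1
    fi
  done
  return "$failed"
}

# Usage, assuming images.txt holds one image reference per line:
# pull_all azk8spull < images.txt
```

The images must be present on every node that may schedule these pods, so the same list has to be pulled on each node.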

Run the install again:

NAME: pdabc-prom

LAST DEPLOYED: Sun Jan 5 22:20:07 2020

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1/Alertmanager

NAME AGE

pdabc-prom-prometheus-oper-alertmanager 10s

==> v1/ClusterRole

NAME AGE

pdabc-prom-grafana-clusterrole 14s

pdabc-prom-prometheus-oper-alertmanager 14s

pdabc-prom-prometheus-oper-operator 14s

pdabc-prom-prometheus-oper-operator-psp 14s

pdabc-prom-prometheus-oper-prometheus 13s

pdabc-prom-prometheus-oper-prometheus-psp 13s

psp-pdabc-prom-kube-state-metrics 14s

==> v1/ClusterRoleBinding

NAME AGE

pdabc-prom-grafana-clusterrolebinding 13s

pdabc-prom-prometheus-oper-alertmanager 13s

pdabc-prom-prometheus-oper-operator 13s

pdabc-prom-prometheus-oper-operator-psp 13s

pdabc-prom-prometheus-oper-prometheus 13s

pdabc-prom-prometheus-oper-prometheus-psp 13s

psp-pdabc-prom-kube-state-metrics 13s

==> v1/ConfigMap

NAME DATA AGE

pdabc-prom-grafana 1 16s

pdabc-prom-grafana-config-dashboards 1 16s

pdabc-prom-grafana-test 1 16s

pdabc-prom-prometheus-oper-etcd 1 16s

pdabc-prom-prometheus-oper-grafana-datasource 1 16s

pdabc-prom-prometheus-oper-k8s-cluster-rsrc-use 1 16s

pdabc-prom-prometheus-oper-k8s-coredns 1 16s

pdabc-prom-prometheus-oper-k8s-node-rsrc-use 1 16s

pdabc-prom-prometheus-oper-k8s-resources-cluster 1 16s

pdabc-prom-prometheus-oper-k8s-resources-namespace 1 16s

pdabc-prom-prometheus-oper-k8s-resources-pod 1 15s

pdabc-prom-prometheus-oper-k8s-resources-workload 1 15s

pdabc-prom-prometheus-oper-k8s-resources-workloads-namespace 1 15s

pdabc-prom-prometheus-oper-nodes 1 15s

pdabc-prom-prometheus-oper-persistentvolumesusage 1 15s

pdabc-prom-prometheus-oper-pods 1 15s

pdabc-prom-prometheus-oper-statefulset 1 15s

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

pdabc-prom-prometheus-oper-operator 0/1 1 0 10s

==> v1/Endpoints

NAME ENDPOINTS AGE

pdabc-prom-prometheus-oper-kube-controller-manager 10.155.20.50:10252 10s

pdabc-prom-prometheus-oper-kube-etcd 10.155.20.50:2379 9s

pdabc-prom-prometheus-oper-kube-scheduler 10.155.20.50:10251 8s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

pdabc-prom-grafana-649677d8cf-9bwqw 0/2 Init:0/1 0 10s

pdabc-prom-kube-state-metrics-75f6f95968-f8twc 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-4b9fg 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-55dxc 0/1 Pending 0 10s

pdabc-prom-prometheus-node-exporter-5h6gq 0/1 ContainerCreating 0 11s

pdabc-prom-prometheus-node-exporter-65nzt 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-dcngq 0/1 Pending 0 10s

pdabc-prom-prometheus-node-exporter-kw9m4 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-mg4d2 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-pqj45 0/1 Pending 0 10s

pdabc-prom-prometheus-node-exporter-q888n 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-tdxzx 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-node-exporter-vckhg 0/1 Pending 0 10s

pdabc-prom-prometheus-node-exporter-w9kmt 0/1 ContainerCreating 0 10s

pdabc-prom-prometheus-oper-operator-5b98867978-qvc79 0/1 ContainerCreating 0 10s

==> v1/Prometheus

NAME AGE

pdabc-prom-prometheus-oper-prometheus 8s

==> v1/PrometheusRule

NAME AGE

pdabc-prom-prometheus-oper-alertmanager.rules 7s

pdabc-prom-prometheus-oper-etcd 7s

pdabc-prom-prometheus-oper-general.rules 7s

pdabc-prom-prometheus-oper-k8s.rules 6s

pdabc-prom-prometheus-oper-kube-apiserver.rules 6s

pdabc-prom-prometheus-oper-kube-prometheus-node-alerting.rules 6s

pdabc-prom-prometheus-oper-kube-prometheus-node-recording.rules 6s

pdabc-prom-prometheus-oper-kube-scheduler.rules 5s

pdabc-prom-prometheus-oper-kubernetes-absent 5s

pdabc-prom-prometheus-oper-kubernetes-apps 5s

pdabc-prom-prometheus-oper-kubernetes-resources 5s

pdabc-prom-prometheus-oper-kubernetes-storage 5s

pdabc-prom-prometheus-oper-kubernetes-system 5s

pdabc-prom-prometheus-oper-node-network 4s

pdabc-prom-prometheus-oper-node-time 4s

pdabc-prom-prometheus-oper-node.rules 4s

pdabc-prom-prometheus-oper-prometheus-operator 4s

pdabc-prom-prometheus-oper-prometheus.rules 3s

==> v1/Role

NAME AGE

pdabc-prom-grafana-test 13s

pdabc-prom-prometheus-oper-prometheus 3s

pdabc-prom-prometheus-oper-prometheus-config 13s

==> v1/RoleBinding

NAME AGE

pdabc-prom-grafana-test 13s

pdabc-prom-prometheus-oper-prometheus 3s

pdabc-prom-prometheus-oper-prometheus-config 13s

==> v1/Secret

NAME TYPE DATA AGE

alertmanager-pdabc-prom-prometheus-oper-alertmanager Opaque 1 16s

pdabc-prom-grafana Opaque 3 17s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

pdabc-prom-grafana ClusterIP 10.109.19.232 <none> 80/TCP 13s

pdabc-prom-kube-state-metrics ClusterIP 10.107.17.244 <none> 8080/TCP 12s

pdabc-prom-prometheus-node-exporter ClusterIP 10.107.151.149 <none> 9100/TCP 12s

pdabc-prom-prometheus-oper-alertmanager ClusterIP 10.103.111.68 <none> 9093/TCP 12s

pdabc-prom-prometheus-oper-coredns ClusterIP None <none> 9153/TCP 12s

pdabc-prom-prometheus-oper-kube-controller-manager ClusterIP None <none> 10252/TCP 11s

pdabc-prom-prometheus-oper-kube-etcd ClusterIP None <none> 2379/TCP 11s

pdabc-prom-prometheus-oper-kube-scheduler ClusterIP None <none> 10251/TCP 11s

pdabc-prom-prometheus-oper-operator ClusterIP 10.109.197.188 <none> 8080/TCP 11s

pdabc-prom-prometheus-oper-prometheus ClusterIP 10.107.195.114 <none> 9090/TCP 11s

==> v1/ServiceAccount

NAME SECRETS AGE

pdabc-prom-grafana 1 15s

pdabc-prom-grafana-test 1 15s

pdabc-prom-kube-state-metrics 1 15s

pdabc-prom-prometheus-node-exporter 1 15s

pdabc-prom-prometheus-oper-alertmanager 1 14s

pdabc-prom-prometheus-oper-operator 1 14s

pdabc-prom-prometheus-oper-prometheus 1 14s

==> v1/ServiceMonitor

NAME AGE

pdabc-prom-prometheus-oper-alertmanager 3s

pdabc-prom-prometheus-oper-apiserver 3s

pdabc-prom-prometheus-oper-coredns 3s

pdabc-prom-prometheus-oper-grafana 2s

pdabc-prom-prometheus-oper-kube-controller-manager 3s

pdabc-prom-prometheus-oper-kube-etcd 2s

pdabc-prom-prometheus-oper-kube-scheduler 2s

pdabc-prom-prometheus-oper-kube-state-metrics 2s

pdabc-prom-prometheus-oper-kubelet 2s

pdabc-prom-prometheus-oper-node-exporter 2s

pdabc-prom-prometheus-oper-operator 2s

pdabc-prom-prometheus-oper-prometheus 2s

==> v1beta1/ClusterRole

NAME AGE

pdabc-prom-kube-state-metrics 14s

psp-pdabc-prom-prometheus-node-exporter 14s

==> v1beta1/ClusterRoleBinding

NAME AGE

pdabc-prom-kube-state-metrics 13s

psp-pdabc-prom-prometheus-node-exporter 13s

==> v1beta1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

pdabc-prom-prometheus-node-exporter 12 12 0 12 0 <none> 11s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

pdabc-prom-kube-state-metrics 0/1 1 0 10s

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

pdabc-prom-grafana false RunAsAny RunAsAny RunAsAny RunAsAny false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

pdabc-prom-grafana-test false RunAsAny RunAsAny RunAsAny RunAsAny false configMap,downwardAPI,emptyDir,projected,secret

pdabc-prom-kube-state-metrics false RunAsAny MustRunAsNonRoot MustRunAs MustRunAs false secret

pdabc-prom-prometheus-node-exporter false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim,hostPath

pdabc-prom-prometheus-oper-alertmanager false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

pdabc-prom-prometheus-oper-operator false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

pdabc-prom-prometheus-oper-prometheus false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

==> v1beta1/Role

NAME AGE

pdabc-prom-grafana 13s

==> v1beta1/RoleBinding

NAME AGE

pdabc-prom-grafana 13s

==> v1beta2/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

pdabc-prom-grafana 0/1 1 0 11s

NOTES:

The Prometheus Operator has been installed. Check its status by running:

kubectl --namespace monitoring get pods -l "release=pdabc-prom"

Visit https://github.com/coreos/prometheus-operator for instructions on how

to create & configure Alertmanager and Prometheus inst

kubectl get all -n monitoring

kubectl get alertmanager -n monitoring

kubectl describe pod prometheus-pdabc-prom-prometheus-oper-prometheus-0 -n monitoring

systemctl status kubelet.service

journalctl -f

kubectl get deploy -n monitoring

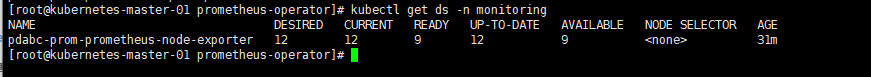

kubectl get ds -n monitoring

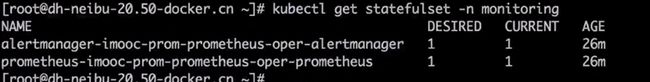

kubectl get statefulset -n monitoring

kubectl get svc -n monitoring
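For a pod that keeps restarting while its current log looks clean, the log of the previous container instance is often more telling. The sketch below only lists the pods with a non-zero restart count; `restarting_pods` is a hypothetical name, and it assumes the default `kubectl get pods` column order (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Hypothetical helper: print the names of pods in namespace $1 whose
# RESTARTS column (4th field) is greater than zero.
restarting_pods() {
  kubectl get pods -n "$1" --no-headers | awk '$4 + 0 > 0 { print $1 }'
}

# Usage:
# restarting_pods monitoring
# Then, for each pod and container of interest, read the log of the
# container instance that crashed rather than the freshly started one:
# kubectl logs -n monitoring <pod> -c <container> --previous
```

`<pod>` and `<container>` are placeholders; `kubectl describe pod` lists the container names along with the last termination reason and exit code.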

Remove everything that was installed:

helm delete pdabc-prom --purge

The CRDs still have to be deleted manually.

kubectl get svc -n monitoring pdabc-prom-prometheus-oper-prometheus -o yaml

apiVersion: v1

kind: Service

metadata:

  creationTimestamp: "2020-01-05T08:56:54Z"

  labels:

    app: prometheus-operator-prometheus

    chart: prometheus-operator-8.5.1

    heritage: Tiller

    release: pdabc-prom

    self-monitor: "true"

  name: pdabc-prom-prometheus-oper-prometheus

  namespace: monitoring

  resourceVersion: "21983792"

  selfLink: /api/v1/namespaces/monitoring/services/pdabc-prom-prometheus-oper-prometheus

  uid: 9bec1e79-b061-47cf-a185-26b8f40852cc

spec:

  clusterIP: 10.105.52.79

  ports:

  - name: web

    port: 9090

    protocol: TCP

    targetPort: 9090

  selector:

    app: prometheus

    prometheus: pdabc-prom-prometheus-oper-prometheus

  sessionAffinity: None

  type: ClusterIP

status:

  loadBalancer: {}

Edit ingress-prometheus.yaml:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

  name: prometheus

  namespace: monitoring

spec:

  rules:

  - host: prometheus.pdabc.com

    http:

      paths:

      - backend:

          serviceName: pdabc-prom-prometheus-oper-prometheus

          servicePort: web

        path: /

kubectl apply -f ingress-prometheus.yaml

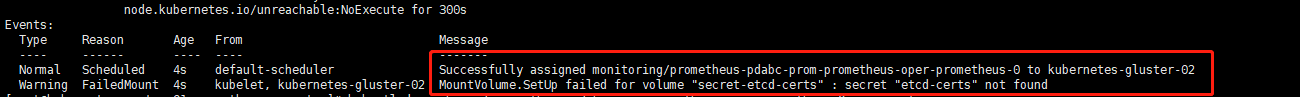
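When a UI does not show up through the Ingress, one way to tell an Ingress problem from a pod problem is to bypass the Ingress with `kubectl port-forward`. A minimal sketch; `forward_ui` is a hypothetical wrapper, and the service names and ports are the ones printed in the install output above.

```shell
# Hypothetical helper: forward a local port to the same port on a Service.
# $1 = namespace, $2 = service name, $3 = port
forward_ui() {
  ns=$1; svc=$2; port=$3
  kubectl -n "$ns" port-forward "svc/$svc" "$port:$port"
}

# Usage (runs in the foreground until interrupted):
# forward_ui monitoring pdabc-prom-prometheus-oper-prometheus 9090
# forward_ui monitoring pdabc-prom-prometheus-oper-alertmanager 9093
```

If the UI loads on localhost but not through the Ingress host, the Ingress rule or controller is the likelier suspect; if it fails either way, the pod itself is not serving.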

Error 1:

Fix:

kubectl -n monitoring create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key

kubectl delete pod pdabc-prom-prometheus-node-exporter-fcwrn pdabc-prom-prometheus-node-exporter-gfwdd pdabc-prom-prometheus-node-exporter-ghmm5 pdabc-prom-prometheus-node-exporter-kgj8v -n monitoring --force --grace-period=0

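Error 2:

Starting a container as a non-root user with Docker fails with “running exec setns process for init caused \"exit status 40\"": unknown”

The environment is CentOS 7 with Linux kernel 3.10.

The cause is a bug in kernel 3.10, and upgrading the Linux kernel fixes it. The upgrade steps are below. After the upgrade, reboot and select the new kernel version at boot.

Fix:

1. Import the key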

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

2. Install the ELRepo yum repository

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

3. Install the kernel

The ELRepo yum repository provides the mainline kernel release, which can be installed like this:

yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y
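After the reboot it is worth confirming that the node really booted the new kernel and not the old 3.10 entry. The sketch below compares a kernel version string against a major.minor threshold; `kernel_older_than` is a hypothetical helper, and the 4.0 threshold in the usage line is only an illustrative assumption, since the text above states only that 3.10 is affected.

```shell
# Hypothetical helper: succeed if version string $1 (for example the output
# of `uname -r`) is older than major $2, minor $3.
kernel_older_than() {
  ver=${1%%-*}        # "3.10.0-1062.el7.x86_64" -> "3.10.0"
  major=${ver%%.*}    # "3"
  rest=${ver#*.}      # "10.0"
  minor=${rest%%.*}   # "10"
  [ "$major" -lt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -lt "$3" ]; }
}

# Usage:
# kernel_older_than "$(uname -r)" 4 0 && echo "still running the old kernel"
```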

Error: no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
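This message usually means the ServiceMonitor CRD was not registered, or not yet established, when the manifest that uses it was applied. One possible approach is to wait for the CRDs before applying anything that depends on them. A minimal sketch using `kubectl wait`; the function name `wait_for_crds` and the 60-second timeout are assumptions for this example.

```shell
# Hypothetical helper: block until each named CRD reports the Established
# condition, or fail on the first one that does not within the timeout.
wait_for_crds() {
  for crd in "$@"; do
    kubectl wait --for=condition=established --timeout=60s "crd/$crd" || return 1
  done
}

# Usage:
# wait_for_crds servicemonitors.monitoring.coreos.com \
#   prometheuses.monitoring.coreos.com alertmanagers.monitoring.coreos.com
```

If the wait fails because the CRD does not exist at all, the CRDs have to be created first, since they were deleted in the cleanup steps earlier.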

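1. The Prometheus UI is displayed, but the Alertmanager UI is not.

2. After changing the configuration, the Alertmanager UI appears, but the Prometheus UI can no longer be displayed.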

[root@kubernetes-master-01 tmp]# kubectl apply -f kube-prometheus/manifests/

alertmanager.monitoring.coreos.com/main created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager created

secret/grafana-datasources created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-pods created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-statefulset created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

role.rbac.authorization.k8s.io/kube-state-metrics created

rolebinding.rbac.authorization.k8s.io/kube-state-metrics created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-operator created

prometheus.monitoring.coreos.com/k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-rules created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

[root@kubernetes-master-01 tmp]# kubectl get ns | grep monitoring

monitoring Active 2d7h

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 1](http://img.e-com-net.com/image/info8/f239315625244fda96cb913a2900eecd.jpg)

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 2](http://img.e-com-net.com/image/info8/8fc8caaf16a14ebbbceac56ca89785cf.jpg)

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 3](http://img.e-com-net.com/image/info8/d4b31402b28f4f7db634a42e8430facc.png)

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 4](http://img.e-com-net.com/image/info8/1b9e08a1bf38447690afbf01ae2c933f.png)

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 5](http://img.e-com-net.com/image/info8/333e34d4f00344099811d591a765d512.jpg)

![[kubernetes] 11-6 Monitoring Deployment in Practice - Helm + PrometheusOperator (unresolved), image 6](http://img.e-com-net.com/image/info8/d7e9db9debe744bc9889851af357e4b1.jpg)