The Pitfalls I Hit Building and Testing XGBoost with Visual Studio

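Looking back, installing and testing XGBoost from C++ is actually quite simple; there are just a few pitfalls you are bound to hit the first time.

XGBoost source code: https://github.com/dmlc/xgboost

Environment: Windows 7, VS2017, with the latest versions of cmake and gcc/g++. As for installing gcc, use whatever method you prefer.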

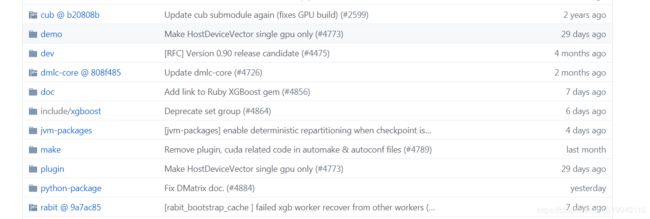

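First, clone the source. There is a pitfall here: after a plain git clone, cmake fails. The main cause is that git clone does not fetch the contents of cub, dmlc-core, and rabit, the folders shown with an @ suffix in the screenshot. These are git submodules, so you have to enter each of these folders and clone it separately; running git submodule update --init --recursive from the repository root also fetches them all in one go. If you hit this pitfall, do not start by suspecting your gcc version: the XGBoost code is actively updated, and the maintainers keep an eye on gcc compatibility.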

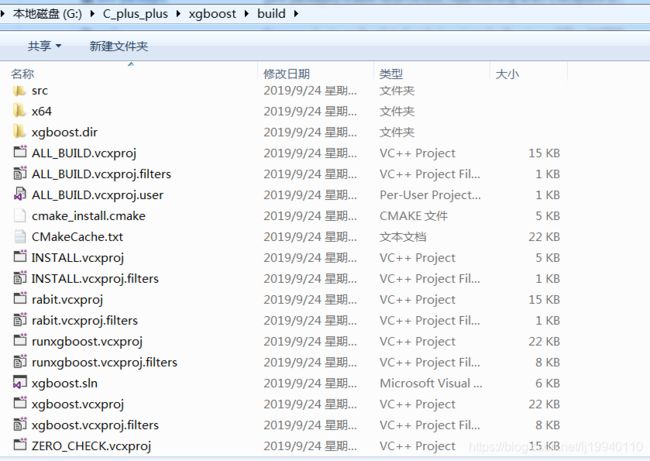

Once the clone is complete, the rest goes smoothly. Create a build folder, enter it, and run cmake with this command:

cmake .. -G"Visual Studio 15 2017 Win64"

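The generator name changes slightly with the VS version. When the command finishes, a .sln solution file is generated in the build folder.

Open xgboost.sln and build it; xgboost.dll is then generated in xgboost/lib. Add the .h files from xgboost\include and the .lib file from xgboost\build\Release to your new project, then either add the folder containing xgboost.dll to the PATH environment variable or copy the DLL into your project folder. After that you can call the library.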

Here is an example to show how it works. It lives in xgboost\demo\c-api, and the code is as follows:

    /*!
     * Copyright 2019 XGBoost contributors
     *
     * \file c-api-demo.c
     * \brief A simple example of using xgboost C API.
     */

    #include <stdio.h>
    #include <stdlib.h>
    #include "xgboost/c_api.h"

    /* abort with file, line and message if an XGBoost call fails */
    #define safe_xgboost(call) { \
      int err = (call); \
      if (err != 0) { \
        fprintf(stderr, "%s:%d: error in %s: %s\n", __FILE__, __LINE__, #call, XGBGetLastError()); \
        exit(1); \
      } \
    }

    int main(int argc, char** argv) {
      int silent = 0;
      int use_gpu = 0;  // set to 1 to use the GPU for training

      // load the data
      DMatrixHandle dtrain, dtest;
      safe_xgboost(XGDMatrixCreateFromFile("agaricus.txt.train", silent, &dtrain));
      safe_xgboost(XGDMatrixCreateFromFile("agaricus.txt.test", silent, &dtest));

      // create the booster
      BoosterHandle booster;
      DMatrixHandle eval_dmats[2] = {dtrain, dtest};
      safe_xgboost(XGBoosterCreate(eval_dmats, 2, &booster));

      // configure the training
      // available parameters are described here:
      //   https://xgboost.readthedocs.io/en/latest/parameter.html
      safe_xgboost(XGBoosterSetParam(booster, "tree_method", use_gpu ? "gpu_hist" : "hist"));
      if (use_gpu) {
        // set the GPU to use;
        // this is not necessary, but provided here as an illustration
        safe_xgboost(XGBoosterSetParam(booster, "gpu_id", "0"));
      } else {
        // avoid evaluating objective and metric on a GPU
        safe_xgboost(XGBoosterSetParam(booster, "gpu_id", "-1"));
      }

      safe_xgboost(XGBoosterSetParam(booster, "objective", "binary:logistic"));
      safe_xgboost(XGBoosterSetParam(booster, "min_child_weight", "1"));
      safe_xgboost(XGBoosterSetParam(booster, "gamma", "0.1"));
      safe_xgboost(XGBoosterSetParam(booster, "max_depth", "3"));
      safe_xgboost(XGBoosterSetParam(booster, "verbosity", silent ? "0" : "1"));

      // train and evaluate for 10 iterations
      int n_trees = 10;
      const char* eval_names[2] = {"train", "test"};
      const char* eval_result = NULL;
      for (int i = 0; i < n_trees; ++i) {
        safe_xgboost(XGBoosterUpdateOneIter(booster, i, dtrain));
        safe_xgboost(XGBoosterEvalOneIter(booster, i, eval_dmats, eval_names, 2, &eval_result));
        printf("%s\n", eval_result);
      }

      // predict
      bst_ulong out_len = 0;
      const float* out_result = NULL;
      int n_print = 10;

      safe_xgboost(XGBoosterPredict(booster, dtest, 0, 0, &out_len, &out_result));
      printf("y_pred: ");
      for (int i = 0; i < n_print; ++i) {
        printf("%1.4f ", out_result[i]);
      }
      printf("\n");

      // print true labels
      safe_xgboost(XGDMatrixGetFloatInfo(dtest, "label", &out_len, &out_result));
      printf("y_test: ");
      for (int i = 0; i < n_print; ++i) {
        printf("%1.4f ", out_result[i]);
      }
      printf("\n");

      // free everything
      safe_xgboost(XGBoosterFree(booster));
      safe_xgboost(XGDMatrixFree(dtrain));
      safe_xgboost(XGDMatrixFree(dtest));

      // wait for Enter so the console window stays open
      getchar();
      return 0;
    }
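A side note on the safe_xgboost macro: it wraps its body in bare braces, which works in the demo but can trip you up if you ever put the macro call directly in an if/else without braces. A common C idiom is to wrap the body in do { ... } while (0) so the macro behaves like a single statement. Below is a minimal sketch of that idiom, not a change the XGBoost authors made. The names CHECK_CALL, fake_call, g_failures and run_checked are all hypothetical, fake_call merely stands in for a real XGBoost call, and the sketch counts failures instead of calling exit(1) so it is easy to try out.

```c
#include <stdio.h>

/* Hypothetical stand-in for an XGBoost C API call: returns 0 on success. */
static int fake_call(int should_fail) {
    return should_fail ? -1 : 0;
}

/* Hypothetical failure counter; the demo macro calls exit(1) instead. */
static int g_failures = 0;

/* do { ... } while (0) makes the macro expand to exactly one statement. */
#define CHECK_CALL(call)                                          \
    do {                                                          \
        int err_ = (call);                                        \
        if (err_ != 0) {                                          \
            fprintf(stderr, "%s:%d: error in %s (code %d)\n",     \
                    __FILE__, __LINE__, #call, err_);             \
            ++g_failures;                                         \
        }                                                         \
    } while (0)

/* With a bare-brace macro, the ';' after the first macro call would
 * terminate the if statement and the else below would not compile. */
int run_checked(int first_fails, int second_fails) {
    g_failures = 0;
    if (first_fails)
        CHECK_CALL(fake_call(1));
    else
        CHECK_CALL(fake_call(second_fails));
    return g_failures;
}
```

For example, run_checked(0, 0) reports no failures, while run_checked(1, 0) logs one error to stderr and returns 1.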

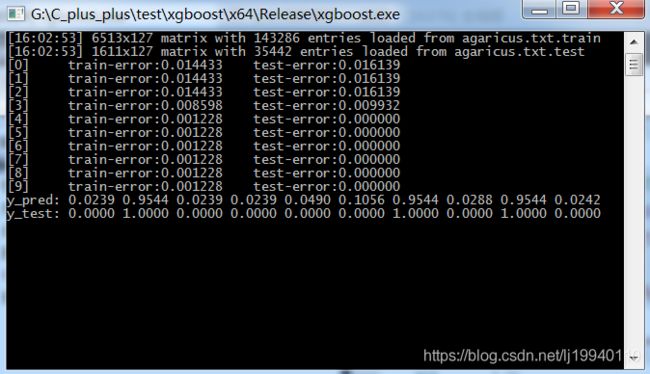

其中,我修改了agaricus.txt.test和agaricus.txt.train的文件路径,他们原本在xgboost\demo\data中,执行结果:

分类效果还是挺好的,实际应用加一级LR,效果更佳。
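Comparing the first ten predictions with the labels by eye only goes so far. If you want a number, a small helper like the one below could compute the accuracy over the whole test set. This is a rough sketch under a few assumptions: binary_accuracy and its threshold parameter are hypothetical names and not part of the XGBoost C API, the predictions are probabilities (as with binary:logistic), and the labels are 0 or 1.

```c
#include <stddef.h>

/* Hypothetical helper, not part of the XGBoost C API.
 * Returns the fraction of samples whose thresholded prediction
 * matches the label, or 0.0 when n is 0.
 *   preds:     predicted probabilities
 *   labels:    true labels, expected to be 0.0 or 1.0
 *   threshold: probability above which a sample counts as class 1 */
double binary_accuracy(const float *preds, const float *labels, size_t n,
                       float threshold) {
    size_t correct = 0;
    for (size_t i = 0; i < n; ++i) {
        int predicted = preds[i] > threshold ? 1 : 0;
        int actual = labels[i] > 0.5f ? 1 : 0;
        if (predicted == actual) {
            ++correct;
        }
    }
    return n == 0 ? 0.0 : (double)correct / (double)n;
}
```

To use it with the demo, you would need to keep the predictions and the labels in two separate pointer variables, because the demo reuses out_result for both. If you are unsure whether the prediction buffer remains valid after later API calls, copy it into your own array first.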