iOS Audio Development: Audio Session

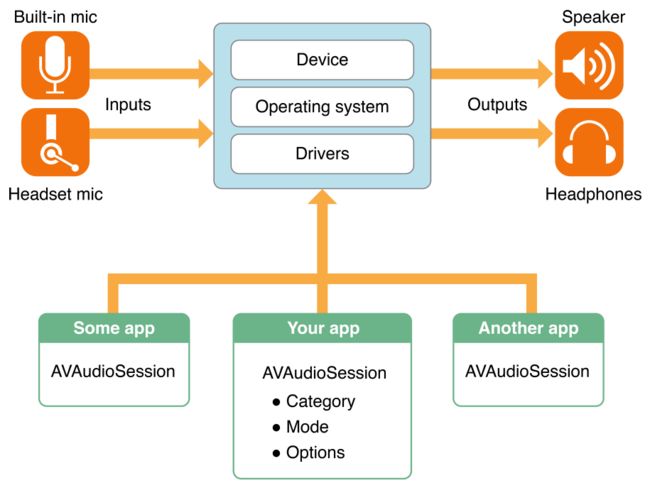

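The system uses the audio session to manage audio behavior within your app, between apps, and at the device level. Setting the category and mode of AVAudioSession tells the system how your app intends to use audio. You can also observe notifications for audio interruptions (for example, an incoming phone call) and route changes (for example, plugging in headphones), and you can configure hardware parameters such as the sample rate, the I/O buffer duration, and the number of audio channels.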

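The default audio session (category AVAudioSessionCategorySoloAmbient) behaves as follows:

1. Audio playback is supported, but recording is not.
2. When the Ring/Silent switch is set to silent, your app's audio is silenced.
3. When the device is locked, your app's audio is silenced.
4. When your app plays audio, any other audio playing in the background is silenced.

If you need behavior that differs from these defaults, configure the audio session through its category and mode properties: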

```swift
import AVFoundation

// Access the shared, singleton audio session instance
let session = AVAudioSession.sharedInstance()
do {
    // Configure the audio session for movie playback
    try session.setCategory(.playback, mode: .moviePlayback, options: [])
} catch {
    print("Failed to set the audio session category and mode: \(error.localizedDescription)")
}
```
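To make the category choice more concrete, the sketch below encodes, as plain Swift data, how each category treats the Ring/Silent switch and screen lock, other apps' audio, and input/output. It is a summary of Apple's documented category behavior under default options; `SessionCategory`, `CategoryTraits`, and `traits(for:)` are hypothetical helpers, not AVFoundation types, and options such as mixWithOthers change some of these answers.

```swift
// Hypothetical summary of documented AVAudioSession category behavior; not an AVFoundation type.
enum SessionCategory: CaseIterable {
    case ambient, soloAmbient, playback, record, playAndRecord, multiRoute
}

struct CategoryTraits {
    let silencedBySwitchAndLock: Bool   // Ring/Silent switch and screen lock silence the app
    let interruptsNonMixableApps: Bool  // assuming default options (no mixWithOthers)
    let supportsInput: Bool
    let supportsOutput: Bool
}

func traits(for category: SessionCategory) -> CategoryTraits {
    switch category {
    case .ambient:
        return CategoryTraits(silencedBySwitchAndLock: true, interruptsNonMixableApps: false,
                              supportsInput: false, supportsOutput: true)
    case .soloAmbient: // the default category
        return CategoryTraits(silencedBySwitchAndLock: true, interruptsNonMixableApps: true,
                              supportsInput: false, supportsOutput: true)
    case .playback: // playing while locked or in background also needs the "audio" background mode
        return CategoryTraits(silencedBySwitchAndLock: false, interruptsNonMixableApps: true,
                              supportsInput: false, supportsOutput: true)
    case .record:
        return CategoryTraits(silencedBySwitchAndLock: false, interruptsNonMixableApps: true,
                              supportsInput: true, supportsOutput: false)
    case .playAndRecord, .multiRoute:
        return CategoryTraits(silencedBySwitchAndLock: false, interruptsNonMixableApps: true,
                              supportsInput: true, supportsOutput: true)
    }
}

// Categories that allow recording: record, playAndRecord, multiRoute
let recordingCategories = SessionCategory.allCases.filter { traits(for: $0).supportsInput }
```

Keeping the table as data rather than scattered `if` checks makes it easy to assert, in a unit test, that a chosen category really allows the input and output your feature needs.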

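Unlike the other categories, the multiroute category (AVAudioSessionCategoryMultiRoute) can use several connected audio devices at the same time. For example, if both headphones and an HDMI output are connected, audio can flow to both ports at once, and you can also direct different audio streams to different devices.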

Only the following categories support both the mirrored and non-mirrored versions of AirPlay:

- AVAudioSessionCategorySoloAmbient
- AVAudioSessionCategoryAmbient
- AVAudioSessionCategoryPlayback

The AVAudioSessionCategoryPlayAndRecord category supports only the mirrored version of AirPlay, and only with the following modes:

- AVAudioSessionModeDefault
- AVAudioSessionModeVideoChat
- AVAudioSessionModeGameChat
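The two lists above amount to a small decision table. A minimal sketch of it in Swift follows; `AirPlaySupport` and `airPlaySupport(category:mode:)` are hypothetical helpers (not AVFoundation APIs), and categories and modes are identified by the constant names used in the lists.

```swift
// Hypothetical helper encoding the AirPlay rules listed above; not an AVFoundation API.
enum AirPlaySupport {
    case mirroredAndNonMirrored
    case mirroredOnly
    case unsupported
}

func airPlaySupport(category: String, mode: String) -> AirPlaySupport {
    // Categories that support both AirPlay variants, regardless of mode
    let fullSupportCategories: Set<String> = [
        "AVAudioSessionCategorySoloAmbient",
        "AVAudioSessionCategoryAmbient",
        "AVAudioSessionCategoryPlayback",
    ]
    // Modes under which PlayAndRecord supports mirrored AirPlay
    let mirroredOnlyModes: Set<String> = [
        "AVAudioSessionModeDefault",
        "AVAudioSessionModeVideoChat",
        "AVAudioSessionModeGameChat",
    ]
    if fullSupportCategories.contains(category) {
        return .mirroredAndNonMirrored
    }
    if category == "AVAudioSessionCategoryPlayAndRecord", mirroredOnlyModes.contains(mode) {
        return .mirroredOnly
    }
    return .unsupported
}
```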

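The system arbitrates audio between competing apps. By default, when your app plays audio in the foreground, its audio session is activated automatically (AVFoundation's playback and recording classes do this for you); you can also activate it explicitly with session.setActive(true). When your app moves to the background and no longer needs audio, however, you should explicitly deactivate the session so that the system can reclaim the audio hardware for other apps.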
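A minimal sketch of that activate/deactivate lifecycle follows, assuming the session is hidden behind a small protocol so the policy can be tested without a device. `AudioSessionActivating`, `setSessionActive(_:notifyOthersOnDeactivation:)`, and `AudioSessionLifecycle` are hypothetical names; only the iOS-only extension touches the real `setActive(_:options:)` API, using the `.notifyOthersOnDeactivation` option so that an interrupted app can resume.

```swift
#if os(iOS)
import AVFoundation
#endif

// Hypothetical seam over AVAudioSession activation so the policy can be unit-tested.
protocol AudioSessionActivating: AnyObject {
    func setSessionActive(_ active: Bool, notifyOthersOnDeactivation: Bool) throws
}

// Hypothetical controller: activate explicitly before playing, deactivate once audio is no longer needed.
final class AudioSessionLifecycle {
    private let session: AudioSessionActivating
    private(set) var isActive = false

    init(session: AudioSessionActivating) {
        self.session = session
    }

    func activateForPlayback() throws {
        // Activating explicitly surfaces failures instead of relying on implicit activation.
        try session.setSessionActive(true, notifyOthersOnDeactivation: false)
        isActive = true
    }

    // Call after pausing or stopping your players: deactivating while audio I/O is running can fail.
    func appDidEnterBackground(stillNeedsAudio: Bool) {
        guard isActive, !stillNeedsAudio else { return }
        do {
            // Ask the system to tell interrupted apps (e.g. a music player) that they may resume.
            try session.setSessionActive(false, notifyOthersOnDeactivation: true)
            isActive = false
        } catch {
            print("Unable to deactivate audio session: \(error)")
        }
    }
}

#if os(iOS)
// The real session conforms by forwarding to setActive(_:options:).
extension AVAudioSession: AudioSessionActivating {
    func setSessionActive(_ active: Bool, notifyOthersOnDeactivation: Bool) throws {
        let options: AVAudioSession.SetActiveOptions = notifyOthersOnDeactivation ? [.notifyOthersOnDeactivation] : []
        try setActive(active, options: options)
    }
}
#endif
```

In an app, this would be created as `AudioSessionLifecycle(session: AVAudioSession.sharedInstance())`; in tests, a fake conforming object can record the calls instead.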

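To find out whether another app is playing audio (so that you can mute your app's secondary audio, such as a game soundtrack), check secondaryAudioShouldBeSilencedHint in applicationDidBecomeActive:, or subscribe to the AVAudioSessionSilenceSecondaryAudioHintNotification notification: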

func setupNotifications() {

NotificationCenter.default.addObserver(self,

selector: #selector(handleSecondaryAudio),

name: .AVAudioSessionSilenceSecondaryAudioHint,

object: AVAudioSession.sharedInstance())

}

func handleSecondaryAudio(notification: Notification) {

// Determine hint type

guard let userInfo = notification.userInfo,

let typeValue = userInfo[AVAudioSessionSilenceSecondaryAudioHintTypeKey] as? UInt,

let type = AVAudioSessionSilenceSecondaryAudioHintType(rawValue: typeValue) else {

return

}

if type == .begin {

// Other app audio started playing - mute secondary audio

} else {

// Other app audio stopped playing - restart secondary audio

}

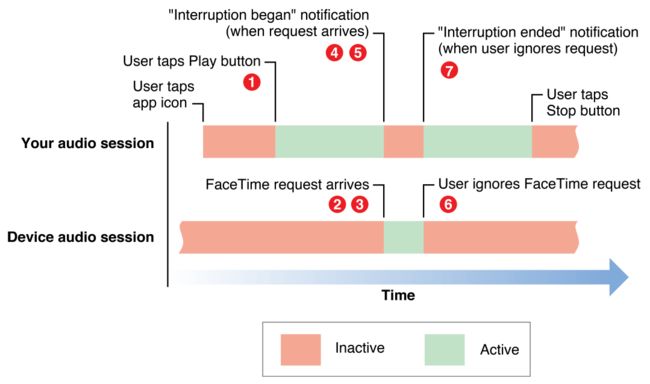

}当来电话,或者闹铃响了,或者是日历提醒发生时,你App的音频会话会被系统中断,需要注册监听系统通知来处理该类事件。

Figure 3-1 An audio session is interrupted
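The interruption handler ultimately makes one of a few decisions. The sketch below isolates that decision in a pure function so it can be unit-tested without posting real notifications. `InterruptionAction` and `interruptionAction(typeRaw:optionsRaw:)` are hypothetical; the raw values mirror AVFoundation's headers (`InterruptionType.began = 1`, `.ended = 0`, `InterruptionOptions.shouldResume = 1`), so in production code prefer the real enum types.

```swift
// Hypothetical pure helper mirroring the interruption handler's decision; not an AVFoundation API.
enum InterruptionAction: Equatable {
    case pauseAndSaveState  // interruption began
    case resumePlayback     // ended, and the system suggests resuming
    case stayPaused         // ended without the shouldResume option
    case ignore             // missing or unknown interruption type
}

func interruptionAction(typeRaw: UInt?, optionsRaw: UInt?) -> InterruptionAction {
    // Raw values as declared for AVAudioSession.InterruptionType / InterruptionOptions
    let began: UInt = 1
    let ended: UInt = 0
    let shouldResumeBit: UInt = 1

    guard let type = typeRaw else { return .ignore }
    if type == began {
        return .pauseAndSaveState
    }
    if type == ended {
        if let options = optionsRaw, (options & shouldResumeBit) != 0 {
            return .resumePlayback
        }
        return .stayPaused
    }
    return .ignore
}
```

Inside the observer, it would be fed as `interruptionAction(typeRaw: info[AVAudioSessionInterruptionTypeKey] as? UInt, optionsRaw: info[AVAudioSessionInterruptionOptionKey] as? UInt)`.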

```swift
import AVFoundation

// Hypothetical observer class; #selector targets must be @objc methods of an NSObject subclass
final class InterruptionObserver: NSObject {
    func registerForNotifications() {
        NotificationCenter.default.addObserver(self,
                                               selector: #selector(handleInterruption),
                                               name: AVAudioSession.interruptionNotification,
                                               object: AVAudioSession.sharedInstance())
    }

    @objc func handleInterruption(_ notification: Notification) {
        guard let info = notification.userInfo,
              let typeValue = info[AVAudioSessionInterruptionTypeKey] as? UInt,
              let type = AVAudioSession.InterruptionType(rawValue: typeValue) else {
            return
        }
        if type == .began {
            // Interruption began, take appropriate actions (save state, update user interface)
        } else if type == .ended {
            guard let optionsValue = info[AVAudioSessionInterruptionOptionKey] as? UInt else {
                return
            }
            let options = AVAudioSession.InterruptionOptions(rawValue: optionsValue)
            if options.contains(.shouldResume) {
                // Interruption ended - playback should resume
            }
        }
    }
}
```

To respond when the system's media services are reset, observe the AVAudioSessionMediaServicesWereResetNotification notification and do the following:

- Dispose of orphaned audio objects (such as players, recorders, converters, or audio queues) and create new ones.
- Reset any internal audio state you are tracking, including all AVAudioSession properties.
- When appropriate, reactivate the AVAudioSession instance with the setActive:error: method.
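The three recovery steps can be sketched as a small handler. This is a minimal sketch under stated assumptions: `MediaServicesResetHandler`, `makePlayer`, and `configureSession` are hypothetical names, `Player` stands in for whatever audio objects your app owns, and `configureSession` is assumed to re-apply your category, mode, and preferences before calling `setActive(true)`.

```swift
// Hypothetical recovery helper; `Player` stands in for your app's audio objects
// (players, recorders, converters, audio queues). Not an AVFoundation API.
final class MediaServicesResetHandler<Player: AnyObject> {
    private let makePlayer: () -> Player
    private let configureSession: () throws -> Void  // re-applies category/mode/preferences, then activates
    private(set) var player: Player
    private(set) var isPlaying = false

    init(makePlayer: @escaping () -> Player, configureSession: @escaping () throws -> Void) {
        self.makePlayer = makePlayer
        self.configureSession = configureSession
        self.player = makePlayer()
    }

    func markPlaying() {
        isPlaying = true
    }

    // Call from an observer of AVAudioSession.mediaServicesWereResetNotification.
    func handleMediaServicesReset() {
        // 1. Drop the orphaned objects and create fresh ones.
        player = makePlayer()
        // 2. Reset tracked state; the session's own properties were reset as well.
        isPlaying = false
        // 3. Re-apply the session configuration and reactivate it.
        do {
            try configureSession()
        } catch {
            print("Unable to restore audio session: \(error)")
        }
    }
}
```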

Your app also needs to respond when the audio route changes, for example when headphones are plugged in or unplugged.

Figure 4-1 Handling audio hardware route changes
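Before wiring up the observer, it can help to see the route-change decision on its own. The sketch below is a hypothetical pure model of the handler: `OutputPort`, `RouteDecision`, and `routeDecision(...)` are illustrative names, the reason raw values mirror `AVAudioSession.RouteChangeReason` (`newDeviceAvailable = 1`, `oldDeviceUnavailable = 2`), and the `shouldPause` flag follows the common iOS convention of pausing playback when headphones are unplugged.

```swift
// Hypothetical pure model of the route-change handler; not an AVFoundation API.
enum OutputPort: Equatable {
    case headphones, builtInSpeaker, bluetoothA2DP, hdmi
}

struct RouteDecision: Equatable {
    let headphonesConnected: Bool
    let shouldPause: Bool  // pause when headphones are unplugged so audio doesn't jump to the speaker
}

// reasonRaw mirrors AVAudioSession.RouteChangeReason: 1 = newDeviceAvailable, 2 = oldDeviceUnavailable
func routeDecision(reasonRaw: UInt,
                   currentOutputs: [OutputPort],
                   previousOutputs: [OutputPort],
                   wasConnected: Bool) -> RouteDecision {
    switch reasonRaw {
    case 1 where currentOutputs.contains(.headphones):
        return RouteDecision(headphonesConnected: true, shouldPause: false)
    case 2 where previousOutputs.contains(.headphones):
        return RouteDecision(headphonesConnected: false, shouldPause: true)
    default:
        // Any other reason leaves the tracked state unchanged
        return RouteDecision(headphonesConnected: wasConnected, shouldPause: false)
    }
}
```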

```swift
import AVFoundation

// Hypothetical observer class; `headphonesConnected` is app state that it maintains
final class RouteChangeObserver: NSObject {
    var headphonesConnected = false

    func setupNotifications() {
        NotificationCenter.default.addObserver(self,
                                               selector: #selector(handleRouteChange),
                                               name: AVAudioSession.routeChangeNotification,
                                               object: AVAudioSession.sharedInstance())
    }

    @objc func handleRouteChange(notification: Notification) {
        guard let userInfo = notification.userInfo,
              let reasonValue = userInfo[AVAudioSessionRouteChangeReasonKey] as? UInt,
              let reason = AVAudioSession.RouteChangeReason(rawValue: reasonValue) else {
            return
        }
        switch reason {
        case .newDeviceAvailable:
            // A device was connected; check whether the current route uses headphones
            let session = AVAudioSession.sharedInstance()
            if session.currentRoute.outputs.contains(where: { $0.portType == .headphones }) {
                headphonesConnected = true
            }
        case .oldDeviceUnavailable:
            // A device was disconnected; inspect the previous route to see which one
            if let previousRoute = userInfo[AVAudioSessionRouteChangePreviousRouteKey] as? AVAudioSessionRouteDescription,
               previousRoute.outputs.contains(where: { $0.portType == .headphones }) {
                headphonesConnected = false
            }
        default:
            break
        }
    }
}
```

You can also set hardware-related properties such as the sample rate and the I/O buffer duration.
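The preferred values are requests, not guarantees, which is why the code below reads back `ioBufferDuration` and `sampleRate` after activation. The arithmetic behind the request is simple: frames per buffer equals buffer duration times sample rate, so 0.005 s at 44.1 kHz is 220.5 frames. The sketch assumes, for illustration only, that the hardware rounds up to a power-of-two frame count; that rounding rule is an assumption, not documented behavior, so always trust the values you read back.

```swift
// Frames in one I/O buffer for a given duration (seconds) and sample rate (Hz).
func framesPerBuffer(duration: Double, sampleRate: Double) -> Double {
    return duration * sampleRate
}

// Assumption for illustration only: the hardware rounds up to a power-of-two frame count.
func roundUpToPowerOfTwo(_ frames: Double) -> Int {
    var n = 1
    while Double(n) < frames {
        n *= 2
    }
    return n
}

let sampleRate = 44_100.0
let requestedFrames = framesPerBuffer(duration: 0.005, sampleRate: sampleRate)  // 220.5 frames
let grantedFrames = roundUpToPowerOfTwo(requestedFrames)                        // 256 under the assumption
let effectiveDuration = Double(grantedFrames) / sampleRate                       // about 0.0058 s
```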

```swift
import AVFoundation

let session = AVAudioSession.sharedInstance()

// Configure category and mode
do {
    try session.setCategory(.record, mode: .default, options: [])
} catch {
    print("Unable to set category: \(error.localizedDescription)")
}

// Set preferred sample rate
do {
    try session.setPreferredSampleRate(44_100)
} catch {
    print("Unable to set preferred sample rate: \(error.localizedDescription)")
}

// Set preferred I/O buffer duration
do {
    try session.setPreferredIOBufferDuration(0.005)
} catch {
    print("Unable to set preferred I/O buffer duration: \(error.localizedDescription)")
}

// Activate the audio session
do {
    try session.setActive(true)
} catch {
    print("Unable to activate session. \(error.localizedDescription)")
}

// Query the audio session's ioBufferDuration and sampleRate properties
// to determine if the preferred values were set
print("Audio Session ioBufferDuration: \(session.ioBufferDuration), sampleRate: \(session.sampleRate)")
```

You can also configure the microphone: choose the built-in mic, one of its data sources, and a polar pattern.
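The code below returns early if the built-in mic has no data source at the preferred orientation, and available orientations differ between devices. A hypothetical fallback policy is sketched here as pure Swift: `MicDataSource` is a stand-in for AVAudioSessionDataSourceDescription reduced to what the choice needs, the orientation and pattern strings are illustrative labels, and the preference order (orientation plus pattern, then any source with the pattern, then the first source) is an assumption, not Apple's rule.

```swift
// Hypothetical stand-in for AVAudioSessionDataSourceDescription, keeping only what the choice needs.
struct MicDataSource: Equatable {
    let name: String
    let orientation: String            // illustrative labels, e.g. "Front", "Back", "Bottom"
    let supportedPolarPatterns: [String]
}

// Prefer the requested orientation if it supports the pattern, then any source supporting
// the pattern, then the first source. Returns nil only when there are no data sources.
func chooseDataSource(from sources: [MicDataSource],
                      orientation: String,
                      polarPattern: String) -> MicDataSource? {
    if let exact = sources.first(where: {
        $0.orientation == orientation && $0.supportedPolarPatterns.contains(polarPattern)
    }) {
        return exact
    }
    if let compatible = sources.first(where: { $0.supportedPolarPatterns.contains(polarPattern) }) {
        return compatible
    }
    return sources.first
}
```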

```swift
import AVFoundation

// Hypothetical wrapper function so the early `return`s are valid (top-level code cannot `return`)
func configureBuiltInMic() {
    // Preferred Mic = Front, Preferred Polar Pattern = Cardioid
    let preferredMicOrientation = AVAudioSession.Orientation.front
    let preferredPolarPattern = AVAudioSession.PolarPattern.cardioid

    // Retrieve your configured and activated audio session
    let session = AVAudioSession.sharedInstance()

    // Get available inputs
    guard let inputs = session.availableInputs else { return }

    // Find built-in mic
    guard let builtInMic = inputs.first(where: { $0.portType == .builtInMic }) else { return }

    // Find the data source at the specified orientation
    guard let dataSource = builtInMic.dataSources?.first(where: {
        $0.orientation == preferredMicOrientation
    }) else { return }

    // Set data source's polar pattern
    do {
        try dataSource.setPreferredPolarPattern(preferredPolarPattern)
    } catch {
        print("Unable to set preferred polar pattern: \(error.localizedDescription)")
    }

    // Set the data source as the input's preferred data source
    do {
        try builtInMic.setPreferredDataSource(dataSource)
    } catch {
        print("Unable to set preferred data source: \(error.localizedDescription)")
    }

    // Set the built-in mic as the preferred input
    // This call will be a no-op if already selected
    do {
        try session.setPreferredInput(builtInMic)
    } catch {
        print("Unable to set preferred input: \(error.localizedDescription)")
    }

    // Print active configuration
    session.currentRoute.inputs.forEach { portDesc in
        print("Port: \(portDesc.portType.rawValue)")
        if let ds = portDesc.selectedDataSource {
            print("Name: \(ds.dataSourceName)")
            print("Polar Pattern: \(ds.selectedPolarPattern?.rawValue ?? "[none]")")
        }
    }
}
```

Requesting recording permission: since iOS 10, you must add an NSMicrophoneUsageDescription entry to your app's Info.plist.
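Because an iOS 10+ app that accesses the microphone without this key is terminated by the system, a debug-time check can catch a missing entry early. A minimal sketch, assuming you pass in `Bundle.main.infoDictionary`; `hasMicrophoneUsageDescription(_:)` is a hypothetical helper, and treating a whitespace-only string as missing is an extra assumption of this sketch.

```swift
import Foundation

// Hypothetical helper: true when the Info.plist dictionary carries a non-empty microphone usage string.
func hasMicrophoneUsageDescription(_ info: [String: Any]?) -> Bool {
    guard let text = info?["NSMicrophoneUsageDescription"] as? String else {
        return false
    }
    return !text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty
}
```

In the app, a line such as `assert(hasMicrophoneUsageDescription(Bundle.main.infoDictionary), "Add NSMicrophoneUsageDescription to Info.plist")` before requesting permission would flag the problem in debug builds.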

```swift
import AVFoundation

AVAudioSession.sharedInstance().requestRecordPermission { granted in
    if granted {
        // User granted access. Present recording interface.
    } else {
        // Present message to user indicating that recording
        // can't be performed until they change their preference
        // under Settings -> Privacy -> Microphone
    }
}
```