Building a Big Data Cluster: Installing Hadoop 3.0.0 on Linux

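Contents

I. Installation Preparation

1. Download URL

2. Reference documentation

3. Passwordless SSH setup

4. ZooKeeper installation

5. Cluster role assignment

II. Extract and Install

III. Environment Variable Configuration

IV. Modify Configuration Files

1. Check disk space

2. Modify the configuration files

V. Initialize the Cluster

1. Start ZooKeeper

2. Initialize metadata in ZooKeeper

3. Start ZKFC

4. Start the JournalNodes

5. Format the NameNode

6. Start HDFS

7. Sync the standby NameNode

8. Start the standby NameNode

9. Check cluster status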

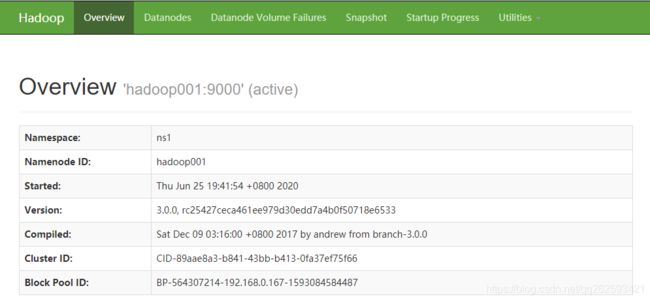

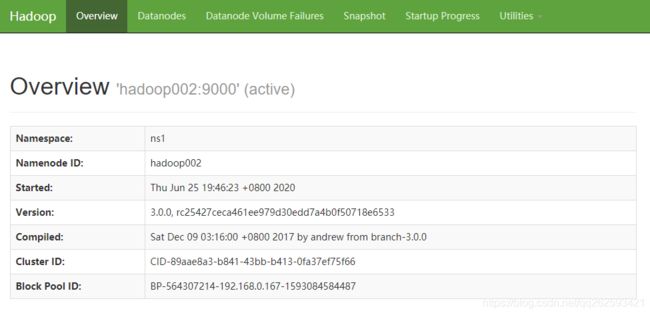

10. Access the cluster

VI. High-Availability Test

1. Stop the active NameNode

2. Check the standby NameNode

3. Restart the stopped NameNode

4. Check the state of both NameNodes

I. Installation Preparation

1. Download URL

https://www.apache.org/dyn/closer.cgi/hadoop/common

2. Reference documentation

https://hadoop.apache.org/docs/r3.0.0/hadoop-project-dist/hadoop-common/ClusterSetup.html

3. Passwordless SSH setup

https://blog.csdn.net/qq262593421/article/details/105325593

4. ZooKeeper installation

https://blog.csdn.net/qq262593421/article/details/106955485

5. Cluster role assignment

| Hadoop cluster role | Cluster nodes |
| --- | --- |
| NameNode | hadoop001, hadoop002 |
| DataNode | hadoop003, hadoop004, hadoop005 |
| JournalNode | hadoop001, hadoop002, hadoop003 |
| ResourceManager | hadoop001, hadoop002 |
| NodeManager | hadoop003, hadoop004, hadoop005 |
| DFSZKFailoverController | hadoop001, hadoop002 |

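II. Extract and Install

Extract the archive

cd /usr/local/hadoop

tar zxpf hadoop-3.0.0.tar.gz

Create a symlink

ln -s hadoop-3.0.0 hadoop

III. Environment Variable Configuration

Edit the /etc/profile file

vim /etc/profile

Add the following lines (sbin is included because start-all.sh, used later, lives there)

export HADOOP_HOME=/usr/local/hadoop/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

IV. Modify Configuration Files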

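1. Check disk space

First check how much space each mount has, so that Hadoop's data does not end up in a directory on a small mount.

df -h

The disk totals 800G, of which the /home mount holds 741G, so every data directory configured below starts with /home.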
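The same check can be scripted when there are several hosts to inspect. The following is a minimal sketch, not part of the original setup: `largest_mount` is a hypothetical helper, and the sample table stands in for real `df` output with illustrative numbers only. It assumes POSIX-format output (`df -P -k`), where column 4 is the available space and column 6 is the mount point, and it does not handle mount points that contain spaces.

```shell
# Print the mount point with the most available space.
# Reads `df -P -k` style output on stdin: column 4 is available 1K-blocks,
# column 6 is the mount point. The header row is skipped.
largest_mount() {
  awk 'NR > 1 && $4 + 0 > max { max = $4 + 0; mount = $6 } END { print mount }'
}

# Canned sample standing in for real output; the numbers are illustrative only.
sample='Filesystem 1024-blocks Used Available Capacity Mounted on
/dev/sda1 52403200 10485760 41917440 21% /
/dev/sda2 776994816 20971520 756023296 3% /home'

printf '%s\n' "$sample" | largest_mount

# On a real host the call would be: df -P -k | largest_mount
```

With the sample above the helper prints /home, which matches the choice made in this section.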

2. Modify the configuration files

workers

hadoop003

hadoop004

hadoop005

core-site.xml

| Property | Value | Description |
| --- | --- | --- |
| fs.defaultFS | hdfs://ns1 | |
| hadoop.tmp.dir | /home/cluster/hadoop/data/tmp | |
| io.file.buffer.size | 131072 | Size of read/write buffer used in SequenceFiles |
| ha.zookeeper.quorum | hadoop001:2181,hadoop002:2181,hadoop003:2181 | ZooKeeper quorum used by DFSZKFailoverController |
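In the file itself, each setting is a `<property>` element inside a single `<configuration>` root. As a rough sketch of that layout, the snippet below writes the four core-site.xml settings to a scratch directory. `OUT_DIR` is an assumption for illustration; on a real node the file lives under $HADOOP_HOME/etc/hadoop.

```shell
# Write the four core-site.xml settings as Hadoop <property> elements.
# OUT_DIR is a scratch directory used for illustration only; a real install
# would edit $HADOOP_HOME/etc/hadoop/core-site.xml instead.
OUT_DIR=$(mktemp -d)

cat > "$OUT_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns1</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/cluster/hadoop/data/tmp</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
    <description>Size of read/write buffer used in SequenceFiles</description>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
  </property>
</configuration>
EOF

# Quick sanity check: count the properties that were written.
grep -c '<property>' "$OUT_DIR/core-site.xml"
```

The other *-site.xml files follow the same name/value/description pattern.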

hadoop-env.sh

export HDFS_NAMENODE_OPTS="-XX:+UseParallelGC -Xmx4g"

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export JAVA_HOME=/usr/java/jdk1.8

hdfs-site.xml

| Property | Value | Description |
| --- | --- | --- |
| dfs.namenode.name.dir | /home/cluster/hadoop/data/nn | |
| dfs.datanode.data.dir | /home/cluster/hadoop/data/dn | |
| dfs.journalnode.edits.dir | /home/cluster/hadoop/data/jn | |
| dfs.nameservices | ns1 | |
| dfs.ha.namenodes.ns1 | hadoop001,hadoop002 | |
| dfs.namenode.rpc-address.ns1.hadoop001 | hadoop001:9000 | |
| dfs.namenode.http-address.ns1.hadoop001 | hadoop001:50070 | |
| dfs.namenode.rpc-address.ns1.hadoop002 | hadoop002:9000 | |
| dfs.namenode.http-address.ns1.hadoop002 | hadoop002:50070 | |
| dfs.ha.automatic-failover.enabled.ns1 | true | |
| dfs.client.failover.proxy.provider.ns1 | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider | |
| dfs.permissions.enabled | false | |
| dfs.replication | 3 | |
| dfs.blocksize | 64M | HDFS block size of 64 MB (the Hadoop default is 128 MB) |
| dfs.namenode.handler.count | 100 | More NameNode server threads to handle RPCs from a large number of DataNodes |
| dfs.namenode.shared.edits.dir | qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ns1 | |
| dfs.ha.fencing.methods | sshfence | |
| dfs.ha.fencing.ssh.private-key-files | /root/.ssh/id_rsa | |
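Two of the HA settings follow a mechanical naming pattern that is easy to get wrong by hand. The shared edits URI lists every JournalNode as host:8485 separated by semicolons and ends with the nameservice, and each NameNode gets its own rpc-address and http-address key suffixed with the nameservice and NameNode ID. The sketch below generates both from the host lists. `journal_uri`, `property` and `namenode_addresses` are hypothetical helper names, and values are assumed to contain no XML special characters.

```shell
# Build the qjournal URI: $1 is the nameservice, the rest are JournalNode hosts.
journal_uri() {
  ns=$1
  shift
  uri=
  for host in "$@"; do
    uri="${uri:+$uri;}$host:8485"   # join hosts with ';'
  done
  printf 'qjournal://%s/%s\n' "$uri" "$ns"
}

# Emit one Hadoop <property> element (no XML escaping is done).
property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Emit the per-NameNode address properties for nameservice ns1.
namenode_addresses() {
  for nn in hadoop001 hadoop002; do
    property "dfs.namenode.rpc-address.ns1.$nn" "$nn:9000"
    property "dfs.namenode.http-address.ns1.$nn" "$nn:50070"
  done
}

journal_uri ns1 hadoop001 hadoop002 hadoop003
namenode_addresses
```

The first line printed is the same URI used for dfs.namenode.shared.edits.dir in this guide.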

mapred-site.xml

| Property | Value | Description |
| --- | --- | --- |
| mapreduce.framework.name | yarn | Execution framework set to Hadoop YARN. |
| mapreduce.map.memory.mb | 4096 | Larger resource limit for maps. |
| mapreduce.map.java.opts | -Xmx4096M | Larger heap size for child JVMs of maps. |
| mapreduce.reduce.memory.mb | 4096 | Larger resource limit for reduces. |
| mapreduce.reduce.java.opts | -Xmx4096M | Larger heap size for child JVMs of reduces. |
| mapreduce.task.io.sort.mb | 4096 | Higher memory limit while sorting data for efficiency. MapTask rejects values above 2047, so lower this before running jobs. |
| mapreduce.task.io.sort.factor | 400 | More streams merged at once while sorting files. |
| mapreduce.reduce.shuffle.parallelcopies | 200 | Higher number of parallel copies run by reduces to fetch outputs from a very large number of maps. |
| mapreduce.jobhistory.address | hadoop001:10020 | MapReduce JobHistory Server host:port. Default port is 10020. |
| mapreduce.jobhistory.webapp.address | hadoop001:19888 | MapReduce JobHistory Server Web UI host:port. Default port is 19888. |
| mapreduce.jobhistory.intermediate-done-dir | /tmp/mr-history/tmp | Directory where history files are written by MapReduce jobs. |
| mapreduce.jobhistory.done-dir | /tmp/mr-history/done | Directory where history files are managed by the MR JobHistory Server. |
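One thing worth checking in these values is the relationship between the container size (mapreduce.map.memory.mb, mapreduce.reduce.memory.mb) and the JVM heap (-Xmx in the matching java.opts). The JVM also needs memory outside the heap, so a heap equal to the container size leaves no headroom. The sketch below derives a heap flag from a container size. `heap_opts` is a hypothetical helper, and the 80% ratio is an assumption: to my understanding it matches the default of mapreduce.job.heap.memory-mb.ratio, but treat it as a starting point rather than a rule.

```shell
# Derive a -Xmx flag from a container size in MB.
# $1 = container size in MB, $2 = heap share in percent (assumed default: 80).
heap_opts() {
  container_mb=$1
  ratio_pct=${2:-80}
  echo "-Xmx$((container_mb * ratio_pct / 100))M"
}

# Container size taken from mapreduce.map.memory.mb in this guide.
heap_opts 4096
```

For a 4096 MB container this prints -Xmx3276M, leaving roughly 800 MB for non-heap memory.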

yarn-site.xml

| Property | Value | Description |
| --- | --- | --- |
| yarn.resourcemanager.ha.enabled | true | |
| yarn.resourcemanager.ha.automatic-failover.enabled | true | |
| yarn.resourcemanager.ha.automatic-failover.embedded | true | |
| yarn.resourcemanager.cluster-id | yarn-rm-cluster | |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 | |
| yarn.resourcemanager.hostname.rm1 | hadoop001 | |
| yarn.resourcemanager.hostname.rm2 | hadoop002 | |
| yarn.resourcemanager.recovery.enabled | true | |
| yarn.resourcemanager.zk.state-store.address | hadoop001:2181,hadoop002:2181,hadoop003:2181 | |
| yarn.resourcemanager.zk-address | hadoop001:2181,hadoop002:2181,hadoop003:2181 | |
| yarn.resourcemanager.address.rm1 | hadoop001:8032 | |
| yarn.resourcemanager.address.rm2 | hadoop002:8032 | |
| yarn.resourcemanager.scheduler.address.rm1 | hadoop001:8034 | |
| yarn.resourcemanager.webapp.address.rm1 | hadoop001:8088 | |
| yarn.resourcemanager.scheduler.address.rm2 | hadoop002:8034 | |
| yarn.resourcemanager.webapp.address.rm2 | hadoop002:8088 | |
| yarn.acl.enable | true | Enable ACLs? Defaults to false. |
| yarn.admin.acl | * | |
| yarn.log-aggregation-enable | false | Configuration to enable or disable log aggregation. |
| yarn.resourcemanager.hostname | hadoop001 | Single hostname that can be set in place of setting all yarn.resourcemanager*address resources. Results in default ports for ResourceManager components. |
| yarn.scheduler.maximum-allocation-mb | 20480 | |
| yarn.nodemanager.resource.memory-mb | 28672 | |
| yarn.nodemanager.log.retain-seconds | 10800 | |
| yarn.nodemanager.log-dirs | /home/cluster/yarn/log/1,/home/cluster/yarn/log/2,/home/cluster/yarn/log/3 | |
| yarn.nodemanager.aux-services | mapreduce_shuffle | Shuffle service that needs to be set for MapReduce applications. |
| yarn.log-aggregation.retain-seconds | -1 | |
| yarn.log-aggregation.retain-check-interval-seconds | -1 | |
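The YARN memory values are related by simple arithmetic that is worth verifying before starting the cluster: the largest single allocation must fit on one NodeManager, and the node memory divided by the task container size gives a rough upper bound on concurrent tasks per node. The sketch below does both checks with the numbers from this guide. The helper names are hypothetical, and the estimate ignores vcores and any memory the ApplicationMaster takes.

```shell
NODE_MB=28672       # yarn.nodemanager.resource.memory-mb
MAX_ALLOC_MB=20480  # yarn.scheduler.maximum-allocation-mb
TASK_MB=4096        # mapreduce.map.memory.mb and mapreduce.reduce.memory.mb

# Rough upper bound on task containers per node (integer division).
containers_per_node() {
  echo $(( $1 / $2 ))
}

# Succeeds when an allocation of $1 MB fits within a node offering $2 MB.
fits_on_node() {
  [ "$1" -le "$2" ]
}

containers_per_node "$NODE_MB" "$TASK_MB"
fits_on_node "$MAX_ALLOC_MB" "$NODE_MB" && echo "max allocation fits on one node"
```

With these values each NodeManager can hold at most 7 task containers of 4096 MB.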

V. Initialize the Cluster

1. Start ZooKeeper

Hadoop's HA mechanism depends on ZooKeeper, so start the ZooKeeper cluster first.

If the ZooKeeper cluster has not been set up yet, see: https://blog.csdn.net/qq262593421/article/details/106955485

zkServer.sh start

zkServer.sh status

2. Initialize metadata in ZooKeeper

hdfs zkfc -formatZK

3. Start ZKFC

hdfs --daemon start zkfc

4. Start the JournalNodes

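The JournalNodes must be running before the NameNode is formatted; otherwise the format fails.

Three JournalNode nodes are configured here: hadoop001, hadoop002, and hadoop003.

hdfs --daemon start journalnode

5. Format the NameNode

Run on the first NameNode host

hdfs namenode -format

6. Start HDFS

start-all.sh

7. Sync the standby NameNode

Once HDFS has finished initializing (about 20 seconds), run on the other NameNode host

hdfs namenode -bootstrapStandby

If formatting fails or the following error appears, delete the directory on the affected node and format again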

Directory is in an inconsistent state: Can't format the storage directory because the current directory is not empty.


rm -rf /home/cluster/hadoop/data/jn/ns1/*

hdfs namenode -format

8. Start the standby NameNode

After the sync, start the NameNode process on the other NameNode host

hdfs --daemon start namenode

9. Check cluster status

hdfs dfsadmin -report

10. Access the cluster

http://hadoop001:50070/

http://hadoop002:50070/
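Because the order of the first-time initialization matters, it can help to print the whole plan before running anything. The sketch below only echoes "host: command" lines in the order used in this section and executes nothing. Several host choices are assumptions, not stated in the text: hadoop001 is taken as the first NameNode and hadoop002 as the other, the ZooKeeper hosts come from ha.zookeeper.quorum, `hdfs zkfc -formatZK` is assumed to run once on hadoop001, and ZKFC is assumed to start on both NameNode hosts.

```shell
# Print the first-time initialization steps as "host: command" lines.
# Nothing is executed. Host assignments for formatZK and zkfc are assumptions.
init_plan() {
  for h in hadoop001 hadoop002 hadoop003; do
    echo "$h: zkServer.sh start"
  done
  echo "hadoop001: hdfs zkfc -formatZK"
  for h in hadoop001 hadoop002; do
    echo "$h: hdfs --daemon start zkfc"
  done
  for h in hadoop001 hadoop002 hadoop003; do
    echo "$h: hdfs --daemon start journalnode"
  done
  echo "hadoop001: hdfs namenode -format"
  echo "hadoop001: start-all.sh"
  echo "hadoop002: hdfs namenode -bootstrapStandby"
  echo "hadoop002: hdfs --daemon start namenode"
}

init_plan
```

Reviewing the printed plan against the role table is a cheap way to catch a step aimed at the wrong host.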

六、集群高可用测试

1、停止Active状态的NameNode

在active状态上的NameNode执行(hadoop1)

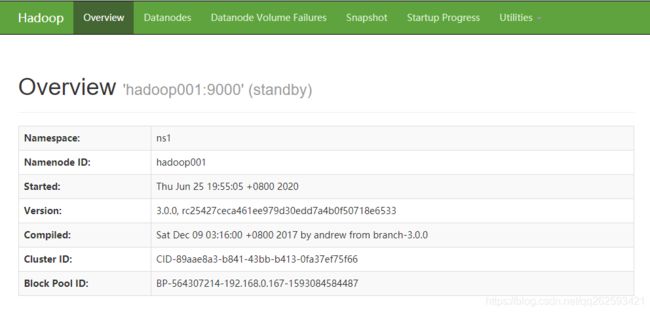

hdfs --daemon stop namenode2、查看standby状态的NameNode

http://hadoop002:50070/ 可以看到,hadoop2从standby变成了active状态
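The same check can be made from the command line with `hdfs haadmin -getServiceState`, which prints active or standby for a given NameNode ID. Failover is not instantaneous, so a small polling loop is handy. The sketch below is a hedged example: `wait_for_active` is a hypothetical helper, and the attempt count and delay in the usage comment are arbitrary choices.

```shell
# Poll a state command until it prints "active" or the attempts run out.
# $1 = max attempts, $2 = seconds between attempts, rest = command to run.
wait_for_active() {
  attempts=$1
  delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Treat a failing command (for example, NameNode down) as "unknown".
    state=$("$@" 2>/dev/null) || state=unknown
    if [ "$state" = "active" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Real use (the NameNode ID hadoop002 comes from dfs.ha.namenodes.ns1):
#   wait_for_active 30 2 hdfs haadmin -getServiceState hadoop002
```

The function returns success as soon as the NameNode reports active, and failure if it never does within the given attempts.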

3、重启启动停止的NameNode

停止之后,浏览器无法访问,重启恢复

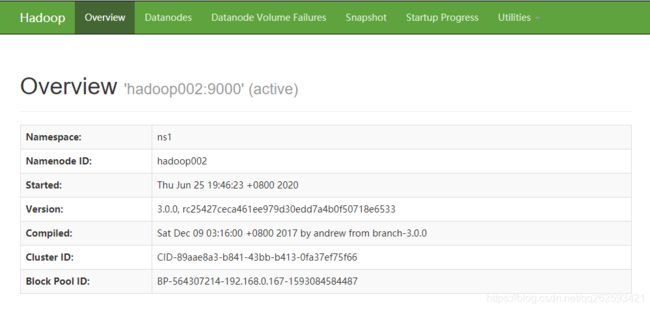

hdfs --daemon start namenode4、查看两个NameNode状态

http://hadoop001:50070/

http://hadoop002:50070/
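After the restart, a healthy HA pair should have exactly one active NameNode and one standby. As a minimal sketch of that check, the hypothetical helper below takes the reported states as arguments, so it can be fed from `hdfs haadmin -getServiceState` on a live cluster or from fixed strings when trying it out.

```shell
# Succeed only if exactly one of the given states is "active".
exactly_one_active() {
  count=0
  for s in "$@"; do
    if [ "$s" = "active" ]; then
      count=$((count + 1))
    fi
  done
  [ "$count" -eq 1 ]
}

# Try it with fixed strings standing in for the two NameNode states.
exactly_one_active standby active && echo "exactly one NameNode is active"

# On a live cluster (NameNode IDs from dfs.ha.namenodes.ns1):
#   exactly_one_active "$(hdfs haadmin -getServiceState hadoop001)" \
#                      "$(hdfs haadmin -getServiceState hadoop002)"
```

Two active results would indicate a split-brain situation, and none would mean failover has not completed.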