OpenGL ES Learning Series: Textures

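Whenever I have spare time I study OpenGL. A long time ago I found an excellent OpenGL tutorial, LearnOpenGL CN. For some reason the site used to open fine, but I can no longer reach it from my computer and can only read it on my phone, which is a real waste of a good resource!

Live and learn! Once our technical skills have accumulated to a certain level, we have to keep digging deeper in one direction, with the goal of reaching the top level in the industry. Think about it: in which area do our own skills actually reach that level? Probably none of us could answer with confidence, so we have to keep learning.

It is with this in mind that I decided to keep studying OpenGL until I have truly mastered it. The later lessons in this series will also follow LearnOpenGL CN step by step.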

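Today we cover the textures lesson. The code has been uploaded; source download: LearnOpengl es texture effect source code.

Let's first look at the final result, shown below:

The first screenshot is the container texture with a color vertex attribute passed in, so the result is the texture blended with the per-vertex color. The second screenshot is the result of blending two textures with mix. Implementing these effects also gave me a much clearer understanding of the OpenGL API. The renderer is a single class, GlTextureRender, and it is very simple. Its full source is as follows: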

package com.opengl.learn.aric.texture;

import android.content.Context;

import android.opengl.GLES32;

import android.opengl.GLSurfaceView;

import android.util.Log;

import com.opengl.learn.OpenGLUtils;

import com.opengl.learn.R;

import java.nio.ByteBuffer;

import java.nio.ByteOrder;

import java.nio.FloatBuffer;

import java.nio.ShortBuffer;

import javax.microedition.khronos.egl.EGLConfig;

import javax.microedition.khronos.opengles.GL10;

import static android.opengl.GLES20.GL_ARRAY_BUFFER;

import static android.opengl.GLES20.GL_COLOR_BUFFER_BIT;

import static android.opengl.GLES20.GL_ELEMENT_ARRAY_BUFFER;

import static android.opengl.GLES20.GL_FLOAT;

import static android.opengl.GLES20.GL_STATIC_DRAW;

import static android.opengl.GLES20.GL_TEXTURE0;

import static android.opengl.GLES20.GL_TEXTURE1;

import static android.opengl.GLES20.GL_TEXTURE_2D;

import static android.opengl.GLES20.GL_TRIANGLES;

import static android.opengl.GLES20.GL_UNSIGNED_SHORT;

import static android.opengl.GLES20.glGenBuffers;

import static android.opengl.GLES20.glGetUniformLocation;

public class GlTextureRender implements GLSurfaceView.Renderer {

private final float[] mVerticesData =

{

-0.5f, 0.5f, 0.0f,

-0.5f, -0.5f, 0.0f,

0.5f, -0.5f, 0.0f,

0.5f, 0.5f, 0.0f,

};

private final float[] mColorsData =

{

1.0f, 0.0f, 0.0f,

0.0f, 1.0f, 0.0f,

0.0f, 0.0f, 1.0f,

1.0f, 1.0f, 0.0f,

};

private final float[] mTextureData =

{

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 1.0f,

1.0f, 0.0f,

};

private final short[] mIndicesData =

{

0, 1, 2,

0, 2, 3,

};

private static final String TAG = GlTextureRender.class.getSimpleName();

private static final int BYTES_PER_FLOAT = 4;

private static final int BYTES_PER_SHORT = 2;

private static final int POSITION_COMPONENT_COUNT = 3;

private static final int COLOR_COMPONENT_COUNT = 3;

private static final int TEXTURE_COMPONENT_COUNT = 2;

private static final int INDEX_COMPONENT_COUNT = 1;

private Context mContext;

private int mProgramObject;

private int uTextureContainer, containerTexture;

private int uTextureFace, faceTexture;

private FloatBuffer mVerticesBuffer;

private FloatBuffer mColorsBuffer;

private FloatBuffer mTextureBuffer;

private ShortBuffer mIndicesBuffer;

private int mWidth, mHeight;

private int mVAO, mVBO, mCBO, mTBO, mEBO;

public GlTextureRender(Context context) {

mContext = context;

mVerticesBuffer = ByteBuffer.allocateDirect(mVerticesData.length * BYTES_PER_FLOAT)

.order(ByteOrder.nativeOrder()).asFloatBuffer();

mVerticesBuffer.put(mVerticesData).position(0);

mColorsBuffer = ByteBuffer.allocateDirect(mColorsData.length * BYTES_PER_FLOAT)

.order(ByteOrder.nativeOrder()).asFloatBuffer();

mColorsBuffer.put(mColorsData).position(0);

mTextureBuffer = ByteBuffer.allocateDirect(mTextureData.length * BYTES_PER_FLOAT)

.order(ByteOrder.nativeOrder()).asFloatBuffer();

mTextureBuffer.put(mTextureData).position(0);

mIndicesBuffer = ByteBuffer.allocateDirect(mIndicesData.length * BYTES_PER_SHORT)

.order(ByteOrder.nativeOrder()).asShortBuffer();

mIndicesBuffer.put(mIndicesData).position(0);

}

@Override

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

mProgramObject = OpenGLUtils.loadProgram(mContext, R.raw.gltexture_vertex, R.raw.gltexture_fragment);

uTextureContainer = glGetUniformLocation(mProgramObject, "uTextureContainer");

uTextureFace = glGetUniformLocation(mProgramObject, "uTextureFace");

int[] array = new int[1];

GLES32.glGenVertexArrays(1, array, 0);

mVAO = array[0];

array = new int[4];

glGenBuffers(4, array, 0);

mVBO = array[0];

mCBO = array[1];

mTBO = array[2];

mEBO = array[3];

Log.e(TAG, "onSurfaceCreated, " + mProgramObject + ", uTexture: " + uTextureContainer + ", uTextureFace: " + uTextureFace);

containerTexture = OpenGLUtils.loadTexture(mContext, R.mipmap.container);

faceTexture = OpenGLUtils.loadTexture(mContext, R.mipmap.awesomeface);

loadBufferData();

}

private void loadBufferData() {

GLES32.glBindVertexArray(mVAO);

mVerticesBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mVBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mVerticesData.length, mVerticesBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(0, POSITION_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(0);

mColorsBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mCBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mColorsData.length, mColorsBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(1, COLOR_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(1);

mTextureBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mTBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mTextureData.length, mTextureBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(2, TEXTURE_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(2);

mIndicesBuffer.position(0);

GLES32.glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, mEBO);

GLES32.glBufferData(GL_ELEMENT_ARRAY_BUFFER, BYTES_PER_SHORT * mIndicesData.length, mIndicesBuffer, GL_STATIC_DRAW);

}

@Override

public void onSurfaceChanged(GL10 gl, int width, int height) {

mWidth = width;

mHeight = height;

}

@Override

public void onDrawFrame(GL10 gl) {

GLES32.glViewport(0, 0, mWidth, mHeight);

// Set the clear color before clearing so that the first frame uses it as well.

GLES32.glClearColor(1.0f, 1.0f, 1.0f, 0.0f);

GLES32.glClear(GL_COLOR_BUFFER_BIT);

GLES32.glUseProgram(mProgramObject);

GLES32.glEnableVertexAttribArray(0);

GLES32.glEnableVertexAttribArray(1);

GLES32.glEnableVertexAttribArray(2);

GLES32.glActiveTexture(GL_TEXTURE0);

GLES32.glBindTexture(GL_TEXTURE_2D, containerTexture);

GLES32.glUniform1i(uTextureContainer, 0);

GLES32.glActiveTexture(GL_TEXTURE1);

GLES32.glBindTexture(GL_TEXTURE_2D, faceTexture);

GLES32.glUniform1i(uTextureFace, 1);

GLES32.glBindVertexArray(mVAO);

GLES32.glDrawElements(GL_TRIANGLES, mIndicesData.length, GL_UNSIGNED_SHORT, 0);

GLES32.glDisableVertexAttribArray(0);

GLES32.glDisableVertexAttribArray(1);

GLES32.glDisableVertexAttribArray(2);

}

}

OpenGLUtils is a utility class that simply wraps some common OpenGL ES operations. Its full source is as follows:

package com.opengl.learn;

import android.content.Context;

import android.content.res.Resources;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.opengl.GLES30;

import android.util.Log;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import static android.opengl.GLES20.GL_COMPILE_STATUS;

import static android.opengl.GLES20.GL_FRAGMENT_SHADER;

import static android.opengl.GLES20.GL_LINK_STATUS;

import static android.opengl.GLES20.GL_NO_ERROR;

import static android.opengl.GLES20.GL_TEXTURE_2D;

import static android.opengl.GLES20.GL_VERTEX_SHADER;

import static android.opengl.GLES20.glAttachShader;

import static android.opengl.GLES20.glBindTexture;

import static android.opengl.GLES20.glCompileShader;

import static android.opengl.GLES20.glCreateProgram;

import static android.opengl.GLES20.glCreateShader;

import static android.opengl.GLES20.glDeleteProgram;

import static android.opengl.GLES20.glDeleteShader;

import static android.opengl.GLES20.glDeleteTextures;

import static android.opengl.GLES20.glGenTextures;

import static android.opengl.GLES20.glGenerateMipmap;

import static android.opengl.GLES20.glGetError;

import static android.opengl.GLES20.glGetProgramInfoLog;

import static android.opengl.GLES20.glGetProgramiv;

import static android.opengl.GLES20.glGetShaderInfoLog;

import static android.opengl.GLES20.glGetShaderiv;

import static android.opengl.GLES20.glLinkProgram;

import static android.opengl.GLES20.glShaderSource;

import static android.opengl.GLUtils.texImage2D;

public class OpenGLUtils {

private static final String TAG = OpenGLUtils.class.getSimpleName();

public static int loadProgram(Context context, int vertexId, int fragmentId) {

String vShaderStr = readTextFileFromResource(context, vertexId);

String fShaderStr = readTextFileFromResource(context, fragmentId);

int vertexShader;

int fragmentShader;

int programObject;

int[] linked = new int[1];

// Load the vertex/fragment shaders

vertexShader = loadShader(GL_VERTEX_SHADER, vShaderStr);

fragmentShader = loadShader(GL_FRAGMENT_SHADER, fShaderStr);

// Create the program object

programObject = glCreateProgram();

if (programObject == 0) {

return programObject;

}

glAttachShader(programObject, vertexShader);

glAttachShader(programObject, fragmentShader);

// Link the program

glLinkProgram(programObject);

// Check the link status

glGetProgramiv(programObject, GL_LINK_STATUS, linked, 0);

if (linked[0] == 0) {

Log.e(TAG, "Error linking program:");

Log.e(TAG, glGetProgramInfoLog(programObject));

glDeleteProgram(programObject);

return 0;

}

return programObject;

}

public static int loadShader(int type, String shaderSrc) {

int shader;

int[] compiled = new int[1];

// Create the shader object

shader = glCreateShader(type);

if (shader == 0) {

return 0;

}

// Load the shader source

glShaderSource(shader, shaderSrc);

// Compile the shader

glCompileShader(shader);

// Check the compile status

glGetShaderiv(shader, GL_COMPILE_STATUS, compiled, 0);

if (compiled[0] == 0) {

Log.e(TAG, "compile shader error: " + glGetShaderInfoLog(shader));

glGetError();

glDeleteShader(shader);

return 0;

}

Log.i(TAG, "load " + type + " shader result: " + shader);

return shader;

}

public static String readTextFileFromResource(Context context,

int resourceId) {

StringBuilder body = new StringBuilder();

try {

InputStream inputStream = context.getResources()

.openRawResource(resourceId);

InputStreamReader inputStreamReader = new InputStreamReader(

inputStream);

BufferedReader bufferedReader = new BufferedReader(

inputStreamReader);

String nextLine;

while ((nextLine = bufferedReader.readLine()) != null) {

body.append(nextLine);

body.append('\n');

}

} catch (IOException e) {

throw new RuntimeException(

"Could not open resource: " + resourceId, e);

} catch (Resources.NotFoundException nfe) {

throw new RuntimeException("Resource not found: "

+ resourceId, nfe);

}

return body.toString();

}

public static int loadTexture(Context context, int resourceId) {

final int[] textureObjectIds = new int[1];

glGenTextures(1, textureObjectIds, 0);

if (textureObjectIds[0] == 0) {

return 0;

}

final BitmapFactory.Options options = new BitmapFactory.Options();

options.inScaled = false;

// Read in the resource

final Bitmap bitmap = BitmapFactory.decodeResource(

context.getResources(), resourceId, options);

if (bitmap == null) {

glDeleteTextures(1, textureObjectIds, 0);

return 0;

}

// Bind to the texture in OpenGL

glBindTexture(GL_TEXTURE_2D, textureObjectIds[0]);

// Set filtering: a default must be set, or the texture will be black.

GLES30.glTexParameteri(GLES30.GL_TEXTURE_2D, GLES30.GL_TEXTURE_WRAP_S, GLES30.GL_REPEAT);

GLES30.glTexParameteri(GLES30.GL_TEXTURE_2D, GLES30.GL_TEXTURE_WRAP_T, GLES30.GL_REPEAT);

GLES30.glTexParameteri(GLES30.GL_TEXTURE_2D, GLES30.GL_TEXTURE_MIN_FILTER, GLES30.GL_LINEAR);

GLES30.glTexParameteri(GLES30.GL_TEXTURE_2D, GLES30.GL_TEXTURE_MAG_FILTER, GLES30.GL_LINEAR);

// Load the bitmap into the bound texture.

texImage2D(GL_TEXTURE_2D, 0, bitmap, 0);

// Note: Following code may cause an error to be reported in the

// ADB log as follows: E/IMGSRV(20095): :0: HardwareMipGen:

// Failed to generate texture mipmap levels (error=3)

// No OpenGL error will be encountered (glGetError() will return

// 0). If this happens, just squash the source image to be

// square. It will look the same because of texture coordinates,

// and mipmap generation will work.

glGenerateMipmap(GL_TEXTURE_2D);

// Recycle the bitmap, since its data has been loaded into

// OpenGL.

bitmap.recycle();

// Unbind from the texture.

glBindTexture(GL_TEXTURE_2D, 0);

return textureObjectIds[0];

}

public static void checkError(String op) {

int error = glGetError();

if (GL_NO_ERROR != error) {

Log.e(TAG, op + " checkError: " + error);

}

}

}

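This lesson's implementation uses a VAO, VBOs, and an EBO. My understanding of these concepts is honestly not deep yet, so for now I only know how to use them. The code in loadBufferData took a lot of debugging to reach its final shape. As you can see it is very regular, so you do not need to think hard about it: if you need it, copy it over and change the data. If the order of the calls is wrong, though, all kinds of problems appear: either nothing is drawn, or the app hangs. During debugging I changed the point at which GLES32.glBindVertexArray(mVAO); is called, and that alone caused a hang. The likely reason is that a VAO records the attribute pointers, the enabled attribute arrays, and the GL_ELEMENT_ARRAY_BUFFER binding that are set while it is bound, so it has to be bound before those calls.

Now let's go over the points in the code that deserve attention. First, the following constants: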

private static final int BYTES_PER_FLOAT = 4;

private static final int BYTES_PER_SHORT = 2;

private static final int POSITION_COMPONENT_COUNT = 3;

private static final int COLOR_COMPONENT_COUNT = 3;

private static final int TEXTURE_COMPONENT_COUNT = 2;

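private static final int INDEX_COMPONENT_COUNT = 1;

They look trivial, but I strongly recommend using constants like these consistently everywhere; it keeps things uniform and makes mistakes less likely. Every place that sets up vertex data should use them, because a single wrong size anywhere can result in nothing being drawn. This is the most troublesome part of debugging OpenGL. With ordinary code, if the final data is wrong, we can step through the logic and find the stage that went wrong. With OpenGL, if nothing shows up on screen and we do not understand the API, there is nothing to step through. This is where fundamentals are tested: we need a complete picture of OpenGL and must know what each API call does in order to find the root cause quickly.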
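Since a size mismatch tends to fail silently on screen, one option is to check the arrays on the CPU side before any GL call is made. The following is only a minimal sketch of that idea; the class name VertexLayoutCheck and its methods are hypothetical helpers, not part of the original project or of the Android API.

```java
// Hypothetical helper (not in the original project): sanity checks for flat vertex arrays.
final class VertexLayoutCheck {

    /** Returns how many vertices a flat attribute array holds, or throws if it is ragged. */
    static int vertexCount(float[] data, int componentCount) {
        if (componentCount <= 0 || data.length % componentCount != 0) {
            throw new IllegalArgumentException(
                    "length " + data.length + " is not a multiple of " + componentCount);
        }
        return data.length / componentCount;
    }

    /** Throws if any index points outside the range [0, vertexCount). */
    static void requireIndicesInRange(short[] indices, int vertexCount) {
        for (short index : indices) {
            if (index < 0 || index >= vertexCount) {
                throw new IllegalArgumentException("index out of range: " + index);
            }
        }
    }

    public static void main(String[] args) {
        // Same shapes as the arrays in this lesson: 4 vertices, 2 triangles.
        float[] positions = new float[4 * 3]; // POSITION_COMPONENT_COUNT = 3
        float[] texCoords = new float[4 * 2]; // TEXTURE_COMPONENT_COUNT = 2
        short[] indices = {0, 1, 2, 0, 2, 3};

        int count = vertexCount(positions, 3);
        if (count != vertexCount(texCoords, 2)) {
            throw new IllegalStateException("attribute arrays disagree on vertex count");
        }
        requireIndicesInRange(indices, count);
        System.out.println("vertices: " + count);
    }
}
```

Calling such checks once in the constructor would turn a blank screen into an exception with a readable message, which is much easier to trace.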

private void loadBufferData() {

GLES32.glBindVertexArray(mVAO);

mVerticesBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mVBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mVerticesData.length, mVerticesBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(0, POSITION_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(0);

mColorsBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mCBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mColorsData.length, mColorsBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(1, COLOR_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(1);

mTextureBuffer.position(0);

GLES32.glBindBuffer(GL_ARRAY_BUFFER, mTBO);

GLES32.glBufferData(GL_ARRAY_BUFFER, BYTES_PER_FLOAT * mTextureData.length, mTextureBuffer, GL_STATIC_DRAW);

GLES32.glVertexAttribPointer(2, TEXTURE_COMPONENT_COUNT, GL_FLOAT, false, 0, 0);

GLES32.glEnableVertexAttribArray(2);

mIndicesBuffer.position(0);

GLES32.glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, mEBO);

GLES32.glBufferData(GL_ELEMENT_ARRAY_BUFFER, BYTES_PER_SHORT * mIndicesData.length, mIndicesBuffer, GL_STATIC_DRAW);

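}

As you can see, when loadBufferData calls the API to upload data, it avoids magic numbers and uses the constants we defined, which makes the code more readable and easier to maintain.

The following texture binding logic needs to go in onDrawFrame, which means it runs every frame: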
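In the same spirit, the byte size passed to glBufferData can be derived from the buffer itself rather than recomputed from the source array. The sketch below assumes a hypothetical helper class DirectBuffers (not in the original project); it only uses java.nio and reproduces the allocation pattern from the renderer's constructor.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

// Hypothetical helper (not in the original project): wraps the repeated buffer setup.
final class DirectBuffers {
    static final int BYTES_PER_FLOAT = 4;

    /** Copies the array into a native-order direct buffer and rewinds it to position 0. */
    static FloatBuffer fromArray(float[] data) {
        FloatBuffer buffer = ByteBuffer.allocateDirect(data.length * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(data);
        buffer.position(0);
        return buffer;
    }

    /** Size in bytes, i.e. the value glBufferData expects as its size argument. */
    static int sizeInBytes(FloatBuffer buffer) {
        return buffer.capacity() * BYTES_PER_FLOAT;
    }

    public static void main(String[] args) {
        FloatBuffer texCoords = fromArray(new float[] {0f, 0f, 0f, 1f, 1f, 1f, 1f, 0f});
        System.out.println("bytes to upload: " + sizeInBytes(texCoords));
    }
}
```

With a helper like this, the three attribute buffers in the constructor would each become a single line, and the size argument can no longer drift out of sync with the data.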

GLES32.glActiveTexture(GL_TEXTURE0);

GLES32.glBindTexture(GL_TEXTURE_2D, containerTexture);

GLES32.glUniform1i(uTextureContainer, 0);

GLES32.glActiveTexture(GL_TEXTURE1);

GLES32.glBindTexture(GL_TEXTURE_2D, faceTexture);

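GLES32.glUniform1i(uTextureFace, 1);

I tested this specifically: it is best to keep the logic that sets data in onDrawFrame as far as possible, otherwise the rendering result may be wrong. For example, in the screenshot below, I moved the glUseProgram(mBlendProgram) call out to another place so that it ran only once, and the watermark effect was no longer drawn.

So if you are unsure about a command, put it in onDrawFrame first. On the other hand, OpenGL calls are executed on the GPU as commands, and there should be as few of them as possible (you can use GAPID to analyze a frame and see exactly how many commands one draw issues). This pulls in the opposite direction from simply getting the feature to work. I cannot give a definite answer here: sometimes a call can be moved out and executed only once, and sometimes it has to stay in onDrawFrame. As a rule of thumb, state such as the current program and the texture bindings persists in the GL context until something changes it, so a call usually has to be repeated every frame only when other rendering code modifies that state in between. You will need to decide case by case in your own work.

Using a texture basically comes down to the following three lines. The first line activates a texture unit. The second binds the texture we created to the GL_TEXTURE_2D target of that unit; faceTexture is the texture returned by faceTexture = OpenGLUtils.loadTexture(mContext, R.mipmap.awesomeface), created from a bitmap (or in some other way), and it holds the original texture data. After the second line has run, our bitmap texture is bound to texture unit 1 (GL_TEXTURE1). The third line assigns texture unit 1 to the sampler uniform in the fragment shader, which completes passing the texture to the shader.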
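One detail in the binding calls shown above is easy to get wrong: glActiveTexture takes the enum GL_TEXTURE0 + i, while glUniform1i for a sampler takes the plain unit index i. The sketch below illustrates the relationship in plain Java. The class TextureUnits is hypothetical, and the constant is copied by value (0x84C0, the value of GLES20.GL_TEXTURE0) so that the example runs without Android.

```java
// Hypothetical helper (not in the original project): texture unit enum vs. sampler index.
final class TextureUnits {
    // Same numeric value as android.opengl.GLES20.GL_TEXTURE0.
    static final int GL_TEXTURE0 = 0x84C0;

    /** Value to pass to glActiveTexture for unit number 'index'. */
    static int unitEnum(int index) {
        return GL_TEXTURE0 + index;
    }

    /** Value to pass to glUniform1i for a sampler bound to the given unit enum. */
    static int samplerValue(int unitEnum) {
        return unitEnum - GL_TEXTURE0;
    }

    public static void main(String[] args) {
        int faceUnit = unitEnum(1); // equals GL_TEXTURE1
        System.out.println("glActiveTexture argument: 0x" + Integer.toHexString(faceUnit));
        System.out.println("glUniform1i argument: " + samplerValue(faceUnit));
    }
}
```

Passing the enum value to glUniform1i instead of the index is a common reason for a sampler that reads from the wrong unit.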

GLES32.glActiveTexture(GL_TEXTURE1);

GLES32.glBindTexture(GL_TEXTURE_2D, faceTexture);

GLES32.glUniform1i(uTextureFace, 1);

Another point is texture orientation. Let's look at how the vertex coordinates and texture coordinates are defined:

private final float[] mVerticesData =

{

-0.5f, 0.5f, 0.0f,

-0.5f, -0.5f, 0.0f,

0.5f, -0.5f, 0.0f,

0.5f, 0.5f, 0.0f,

};

private final float[] mTextureData =

{

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 1.0f,

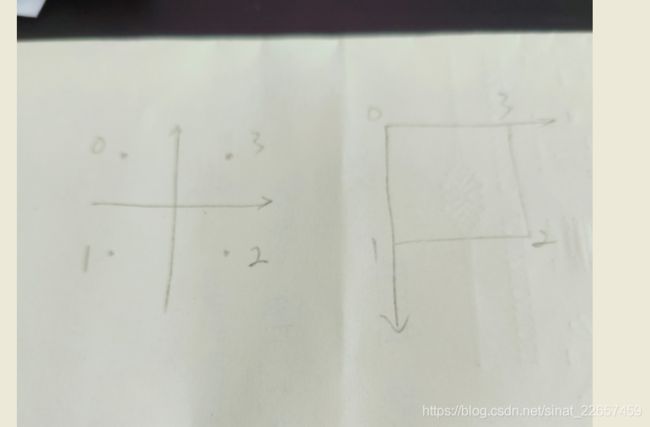

1.0f, 0.0f,

};我们定义的顶点坐标是左上,左下,右下,右上,到现在为止,我们没有对坐标进行变换,所以顶点坐标的中心点就是屏幕的中心点,屏幕最左上角的顶点坐标应该是(-1,0),最右上角的顶点坐标是(1,1);而纹理坐标就有些不同了,我们记住结果:纹理坐标的原点在屏幕左上角,而纹理坐标的右下角就是(1,1),如下图:

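This also explains the order in which we define the vertex coordinates and texture coordinates: the two arrays must list the corners in the same order, otherwise the texture will appear distorted.

Those are the main points worth calling out. Finally, let's look at the vertex shader and the fragment shader. The full vertex shader source is as follows: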
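The correspondence between the two arrays can be written down as a small formula: u grows from left to right, and v grows from top to bottom. The following sketch assumes the top-left texture origin described above; the class TexCoordMapping and its parameter names are hypothetical and only serve to reproduce the values in mTextureData from the values in mVerticesData.

```java
// Hypothetical helper (not in the original project): derive texture coordinates
// for a point of an axis-aligned quad, assuming (0, 0) is the image's top-left corner.
final class TexCoordMapping {

    static float[] texCoordFor(float x, float y,
                               float left, float right, float bottom, float top) {
        float u = (x - left) / (right - left);  // 0 at the left edge, 1 at the right edge
        float v = (top - y) / (top - bottom);   // 0 at the top edge, 1 at the bottom edge
        return new float[] {u, v};
    }

    public static void main(String[] args) {
        // The quad from this lesson spans [-0.5, 0.5] on both axes.
        float[][] corners = {{-0.5f, 0.5f}, {-0.5f, -0.5f}, {0.5f, -0.5f}, {0.5f, 0.5f}};
        for (float[] c : corners) {
            float[] uv = texCoordFor(c[0], c[1], -0.5f, 0.5f, -0.5f, 0.5f);
            System.out.println("(" + c[0] + ", " + c[1] + ") -> (" + uv[0] + ", " + uv[1] + ")");
        }
    }
}
```

Running the four corners through this mapping gives (0, 0), (0, 1), (1, 1), (1, 0), which matches the order of mTextureData.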

#version 320 es

layout(location = 0) in vec3 aPosition;

layout(location = 1) in vec3 aColor;

layout(location = 2) in vec2 aTexCoord;

out vec3 outColor;

out vec2 outTexCoord;

void main()

{

outColor = aColor;

outTexCoord = aTexCoord;

gl_Position = vec4(aPosition, 1.0);

}

The full fragment shader source is as follows:

#version 320 es

precision mediump float;

out vec4 FragColor;

in vec3 outColor;

in vec2 outTexCoord;

uniform sampler2D uTextureContainer;

uniform sampler2D uTextureFace;

void main()

{

// FragColor = texture(uTextureContainer, outTexCoord) * vec4(outColor, 1.0);

FragColor = mix(texture(uTextureContainer, outTexCoord), texture(uTextureFace, outTexCoord), 0.2);

}

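The commented-out line in main is the logic for the first screenshot: it multiplies the container texture by the vertex color. The second line is the logic for the second screenshot: mix performs a weighted blend of the two textures, and the third argument, 0.2, is the weight. The result is computed as texture1 * (1 - 0.2) + texture2 * 0.2, so the larger the third argument, the more texture2 contributes.

One more thing: our implementation only cares about the visual result and does not consider memory. In real work, be careful: once the effect is working, check meminfo for leaks, and delete OpenGL objects such as contexts, programs, and textures at the appropriate time to prevent memory leaks.

That's all for this lesson. Next time we continue with the transformations lesson.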
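To make the weighting concrete, the same formula can be evaluated on the CPU. The sketch below mirrors the GLSL mix(x, y, a) = x * (1 - a) + y * a per component; the class GlslMix is a hypothetical illustration and the sample colors are made up for the example.

```java
// Hypothetical illustration (not in the original project): GLSL-style mix in plain Java.
final class GlslMix {

    /** Linear blend of two scalars: x * (1 - a) + y * a. */
    static float mix(float x, float y, float a) {
        return x * (1.0f - a) + y * a;
    }

    /** Component-wise blend of two colors of equal length (for example RGBA). */
    static float[] mix(float[] x, float[] y, float a) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("component counts differ");
        }
        float[] result = new float[x.length];
        for (int i = 0; i < x.length; i++) {
            result[i] = mix(x[i], y[i], a);
        }
        return result;
    }

    public static void main(String[] args) {
        float[] container = {1.0f, 0.5f, 0.0f, 1.0f}; // made-up sample texel
        float[] face = {0.0f, 0.0f, 1.0f, 1.0f};      // made-up sample texel
        float[] blended = mix(container, face, 0.2f);
        System.out.println(java.util.Arrays.toString(blended));
    }
}
```

With a weight of 0 the result is exactly the first texture, with a weight of 1 it is exactly the second, and 0.2 keeps 80% of the container and adds 20% of the face.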